As artificial intelligence (AI) systems, particularly autonomous AI agents, become increasingly integrated into business operations, their ability to act independently introduces a new frontier of potential risks. Managing these risks proactively is no longer optional but a critical necessity for enterprises. Effective AI Agent Risk Management ensures these sophisticated systems operate safely, reliably, and in alignment with organizational goals and ethical standards.

What is AI Agent Risk Management?

AI Agent Risk Management is the comprehensive process of identifying, assessing, evaluating, monitoring, and controlling the potential risks associated with the deployment and operation of autonomous AI agents . These risks can range from unintended actions and data misuse to security vulnerabilities and ethical breaches , . The core objective is to safeguard against harm, ensure AI agents perform as intended, align with human values, and comply with all relevant regulations .

Why is AI Agent Risk Management Crucial?

The burgeoning capabilities of AI agents, enabling them to make decisions and perform tasks with minimal human oversight, underscore the importance of robust AI Agent Risk Management.

1. Growing Autonomy

As AI agents take on more complex responsibilities, the potential for divergence from intended behavior increases, necessitating diligent oversight .

2. Potential for Harm

Without proper controls, AI agents could cause significant harm to individuals, systems, or an organization’s reputation and financial standing , . For example, a simple-reflex agent used in a critical system, if it malfunctions, could lead to severe consequences .

3. Maintaining Trust and Reliability

For AI agents to be adopted and utilized effectively, stakeholders must trust their reliability and safety . Effective risk management builds and maintains this trust.

4. Ensuring Compliance and Ethical Operation

Organizations must ensure their AI agents comply with an ever-evolving landscape of regulations and operate within ethical boundaries. AI Agent Risk Management is key to achieving this.

Understanding the basics of AI agents is fundamental before diving deeper into risk management. Lyzr.ai offers a wealth of resources on AI Agents to build this foundational knowledge.

To better understand the shift, let’s compare traditional approaches with those necessary for AI agents:

| Feature | Traditional Risk Management | AI Agent-Based Risk Management (with Lyzr.ai potential) | Benefits of AI Approach |

|---|---|---|---|

| Data Processing | Manual, Batch | Automated, Real-time processing | Speed, efficiency, continuous insight |

| Decision Speed | Slower, Human-dependent | Rapid, Autonomous (with oversight) | Agility, proactive response |

| Error Rate | Prone to human bias/fatigue | Reduced through consistency, algorithms | Higher accuracy, less operational error |

| Predictive Power | Limited, historical | Advanced, learning-based , | Foresight, preemptive action |

| Scalability | Difficult, resource-intensive | Highly scalable with data & complexity | Handles growing risk landscapes |

| Cost Efficiency | Higher operational & manual costs | Lowered through automation, optimization | Resource optimization, reduced overhead |

Understanding the Spectrum of Risks in AI Agents

The deployment of AI agents introduces a multifaceted array of risks that organizations must anticipate and address. These risks are influenced by factors such as the agent’s intended use, its learning capabilities, and the degree of its autonomy .

1. Decision-Making Flaws and Unintended Actions

Agents might make incorrect decisions or take unexpected actions due to flawed logic, incomplete data, or unforeseen scenarios , .

2. Data Misuse and Privacy Breaches

Agents often handle sensitive data, and without stringent safeguards, this information could be misused, leaked, or exposed, especially when interacting with external Large Language Models (LLMs) , .

3. Security Vulnerabilities

AI agents can be targets for malicious attacks, such as prompt injection, which could manipulate them into revealing confidential information or performing unauthorized actions , .

4. Ethical Breaches and Bias Amplification

Agents trained on biased data can perpetuate or even amplify these biases, leading to unfair or discriminatory outcomes .

5. Operational Risks

These include challenges with system integration, dependencies on third-party models or platforms, and the skills required for effective and responsible utilization , .

6. Compliance and Regulatory Risks

Failure to adhere to industry-specific regulations (e.g., in finance or healthcare) or general data protection laws can result in severe penalties , .

7. Reputational Damage

Incidents involving AI agents, whether due to malfunction, misuse, or ethical lapses, can significantly harm an organization’s reputation .

Different types of AI agents inherently carry different risk profiles, as illustrated below:

| Agent Type | Description | Key Risk Considerations | Mitigation Focus (Potentially supported by Lyzr.ai platform features) |

|---|---|---|---|

| Simple-Reflex Agents | Act based on current percepts only, no memory of past. | Misinterpretation of stimuli, limited adaptability. | Robust sensor interpretation, clear rules, environment modeling. |

| Model-Based Reflex Agents | Maintain internal state to track aspects of the world. | Incorrect world model, flawed state updates, data drift. | Accurate modeling, continuous validation, adaptive learning. |

| Goal-Based Agents | Act to achieve explicit goals, may involve search and planning. | Inefficient goal pursuit, unintended side-effects, conflicting goals. | Clear goal definition, consequence scanning, ethical goal alignment. |

| Utility-Based Agents | Choose actions that maximize expected utility/happiness. | Miscalibrated utility functions, ethical dilemmas, value alignment. | Robust utility definition, ethical framework integration, oversight. |

| Learning Agents | Improve performance over time through experience. | Learning harmful behaviors, data poisoning, concept drift, overfitting. | Continuous monitoring, safe exploration, robust training data. |

| Hierarchical Agents | Complex tasks broken down into subtasks managed by specialized agents. | Coordination failures, cascading errors, communication breakdowns. | Robust orchestration, interface checks, resilient sub-agent design. |

For enterprises looking to leverage AI, exploring various Lyzr AI use cases can provide insight into practical applications and associated risk considerations.

The AI Agent Risk Management Framework: A Step-by-Step Approach

A structured framework is essential for systematically addressing the risks associated with AI agents. This typically involves several key stages :

1. Risk Identification

This initial step involves comprehensively cataloging all potential risks. This means determining what could go wrong when agents act autonomously, considering everything from technical malfunctions to ethical dilemmas and security threats .

2. Risk Assessment

Once risks are identified, they must be assessed based on their likelihood of occurrence and the potential severity of their impact . This helps prioritize which risks require the most urgent attention.

3. Risk Mitigation

This stage focuses on implementing controls and strategies to reduce the likelihood or impact of identified risks. This can include setting operational constraints, employing “red-teaming” to test defenses, or establishing robust monitoring systems .

4. Continuous Monitoring and Adaptation

AI Agent Risk Management is not a one-time task. It requires ongoing monitoring of agent performance, behavior, and the evolving threat landscape. Regular testing, feedback mechanisms, and performance metrics are crucial to detect and respond to new or changing risks during operation .

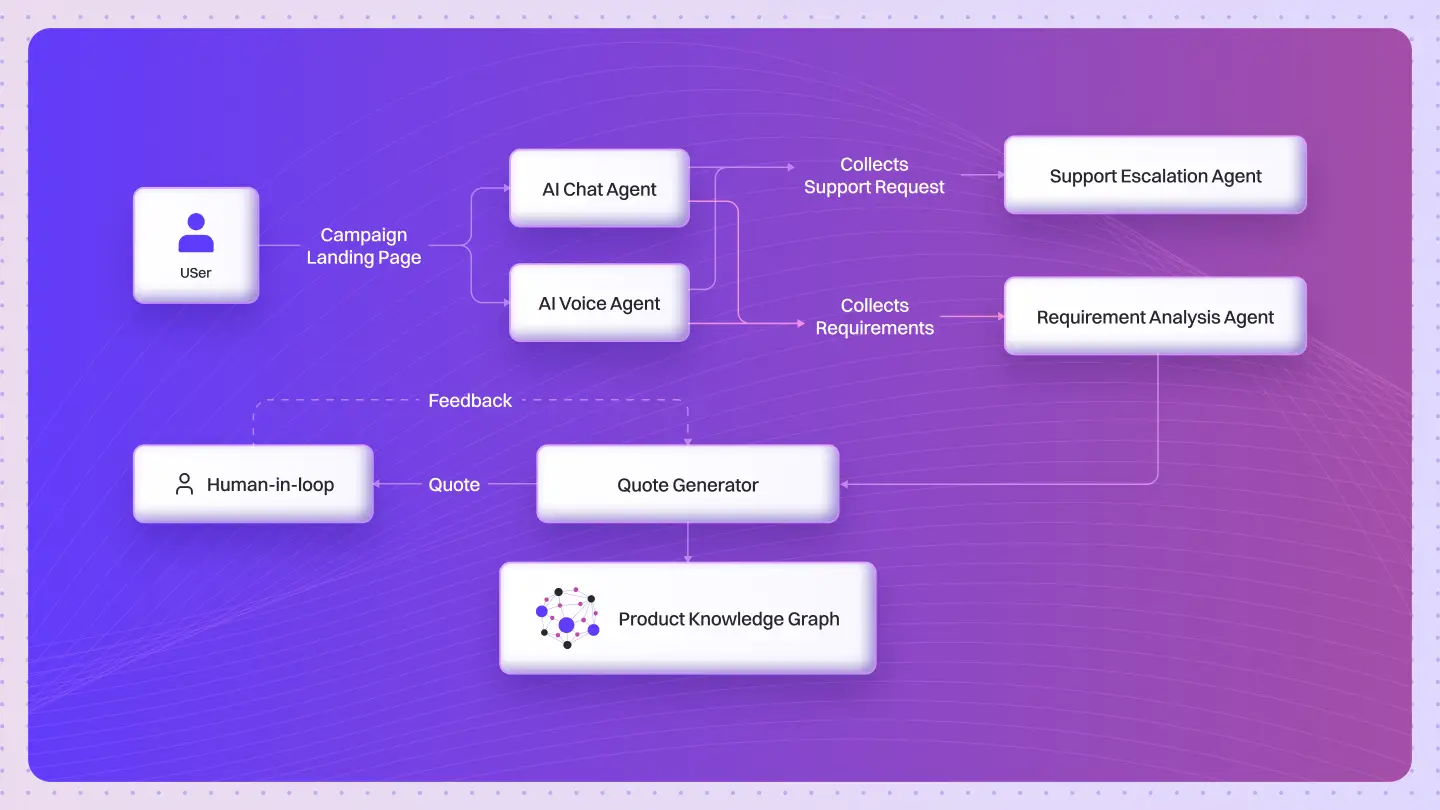

Tools often employed within these frameworks include simulation and scenario testing, human-in-the-loop decision points for critical actions, and mechanisms for explainability and detailed logging of agent activities . Further insights into the current landscape can be found in Lyzr’s State of AI Agents report.

Key Strategies and Best Practices for Effective AI Agent Risk Management

Implementing effective AI Agent Risk Management involves a combination of strategic planning and practical best practices.

1. Context-Specific Approaches

Risk management strategies must be tailored to the specific context in which an AI agent operates, including its purpose, capabilities, and environment. A generic approach is unlikely to be effective given the novelty and versatility of AI agents .

2. Human-in-the-Loop (HITL)

Incorporating human oversight, especially for critical decisions or in ambiguous situations, provides a crucial safety net . This ensures that AI autonomy is balanced with human judgment. More on how Lyzr views agent architecture can be explored through our tools like Jazon for Generative AI agents.

3. Explainability and Logging

Systems should be designed so that an agent’s decision-making processes are as transparent as possible. Detailed logs of actions and decisions are vital for auditing, debugging, and understanding agent behavior .

4. Simulation and Scenario Testing (Red Teaming)

Proactively testing agents in simulated environments against a variety of scenarios, including adversarial attacks, helps identify vulnerabilities before deployment in live settings .

5. Data Governance and Security

Strict protocols for data handling are essential. This includes ring-fencing sensitive data, anonymizing inputs where possible, and limiting agent access to only necessary information to prevent data leakage . AWS offers guidelines on responsible AI that touch upon data governance.

6. Regulatory Awareness

Build compliance checks directly into the AI development pipeline and ensure systems are designed for audit readiness. This is crucial as AI governance frameworks continue to evolve .

7. Start Small and Iterate

When deploying new AI agents, begin with a limited operational domain and gradually expand capabilities as the system proves reliable and risks are well-understood .

8. Robust Model Validation

Data scientists play a key role in validating AI models, ensuring their accuracy and alignment with governance and compliance standards . Platforms like Hugging Face promote model cards for transparency.

Below are some key techniques used to mitigate risks when deploying AI agents:

| Mitigation Technique | Description | Primary Benefit(s) | Example Application (relevant to Lyzr.ai context) |

|---|---|---|---|

| Human-in-the-Loop (HITL) | Inserting human review/approval at critical junctures. | Safety, accountability, nuanced judgment | Approving complex financial advice generated by an AI agent. |

| Explainability & Logging | Making agent decision processes transparent and auditable. | Trust, debugging, compliance reporting, bias detection | Tracing why an AI agent prioritized certain tasks in a workflow. |

| Simulation & Red Teaming | Testing agents in controlled environments against adversarial attacks. | Proactive vulnerability discovery, robustness testing | Simulating data breaches to test an AI security agent’s response. |

| Data Governance & Anonymization | Implementing strict controls on data access, usage, and de-identification. | Privacy protection, compliance, reduced data misuse risk | Anonymizing customer PII before an AI agent processes it for insights. |

| Access Control & Ring-fencing | Limiting agent permissions and operational domains. | Prevents unauthorized actions, limits blast radius | Restricting a customer service AI agent to only access FAQ databases. |

| Continuous Monitoring | Tracking agent performance, behavior, and outputs in real-time. | Early detection of anomalies, drift, or failures | Alerting when an AI agent’s response accuracy drops below a threshold. |

| Ethical Frameworks | Embedding ethical guidelines and principles into agent design and operation. | Fairness, accountability, transparency, prevents harm | Ensuring an AI hiring agent avoids biased candidate selection. |

AI Agent Risk Management in Action: Practical Applications

The principles of AI Agent Risk Management are being applied across various industries to harness the power of AI agents safely.

1. Fraud Prevention in Finance

AI agents analyze transaction patterns in real-time to detect and flag suspicious activities, initiating preventative measures like freezing accounts or blocking transactions .

2. Cybersecurity Threat Detection and Response

Agents monitor network traffic, identify anomalies indicative of breaches, and can autonomously adjust security protocols or isolate affected systems . NVIDIA discusses AI’s role in cybersecurity. To further enhance protection, integrating SAST scanning into development workforce help detect vulnerabilities in the application code early, ensuring that both network and software layers remain secure.

3. Regulatory Compliance Automation

AI agents track changes in regulations and automatically adjust internal controls and processes to ensure ongoing compliance, reducing manual effort and risk of penalties .

4. Supply Chain Risk Assessment

In complex supply chains, agents can assess vendor performance, monitor for disruptions, and ensure compliance with contractual and ethical standards .

5. Environmental, Social, and Governance (ESG) Risk Management

Agents analyze diverse data sources to identify and mitigate ESG-related risks, helping companies meet sustainability goals and regulatory requirements . McKinsey often reports on ESG and corporate responsibility.

6. Credit Risk Assessment in Banking

AI agents evaluate borrower data, credit histories, and market conditions to provide more accurate creditworthiness assessments, informing lending decisions . Exploring our AI Agents in banking article can provide more context here.

7. Operational Risk Management

Across various sectors, AI agents help identify internal process weaknesses and potential operational failures, enhancing stability and efficiency . For instance, Lyzr’s Diane for knowledge retrieval can be part of systems managing operational knowledge. Consider exploring Lyzr Case Studies for more real-world examples.

The Role of Platforms like Lyzr.ai in AI Agent Risk Management

Platforms like Lyzr.ai are instrumental in facilitating effective AI Agent Risk Management. By providing sophisticated tools and environments for developing, deploying, and managing AI agents, such platforms can embed risk mitigation capabilities directly into the agent lifecycle. Lyzr’s multi-agent platform, for example, allows for the creation of complex systems where individual agent responsibilities can be clearly defined and monitored. Features within Lyzr Studio (accessible via studio.lyzr.ai) can support robust development practices, including testing, versioning, and deployment pipelines that incorporate safety checks. Specialized SDKs from Lyzr, such as Jazon for generative tasks and Diane for enterprise knowledge retrieval, are designed to build powerful yet controllable AI solutions. Utilizing such platforms can help organizations implement strategies like data segregation, access controls, and performance monitoring more efficiently. For instance, when creating an Agentic RAG system, a platform can help manage the risks associated with inaccurate information retrieval or generation. For those looking to deepen their understanding, Lyzr may also offer Courses or a Community forum.

Future Trends in AI Agent Risk Management

The field of AI Agent Risk Management is continuously evolving alongside advancements in AI technology itself.

1. Enhanced AI-Driven Detection

AI systems will become increasingly sophisticated at detecting subtle risks and anomalies in other AI agents, leading to more proactive and automated risk identification , . OpenAI’s research into AI safety is a key area to watch.

2. Human-AI Collaboration

The future will likely see closer collaboration between human risk managers and AI systems, where AI augments human capabilities by handling large-scale data analysis and initial assessments, while humans provide oversight and make critical judgments .

3. Integration with Emerging Technologies

Technologies like blockchain may be integrated for enhanced auditability and transparency in AI agent actions, creating immutable records of decisions and data provenance .

4. Evolving Regulatory Landscapes

As AI adoption grows, regulatory bodies will develop more comprehensive frameworks for AI governance and risk management, requiring organizations to adapt continuously , . Gartner often provides insights into AI governance trends.

5. Personalized and Adaptive Risk Strategies

Risk management approaches will become more tailored to the specific risk profiles of individual AI agents and the unique contexts of their deployment, moving away from one-size-fits-all solutions .

6. Focus on Responsible AI

There will be a growing emphasis on building AI agents that are not only effective but also fair, transparent, and accountable, aligning with principles of Responsible AI as advocated by major tech leaders like Google Cloud. Similar initiatives are seen from Meta AI.

Frequently Asked Questions (FAQs)

Here are answers to some common questions.

1. What is the first step in AI Agent Risk Management?

The first step is Risk Identification, which involves determining all potential things that could go wrong when AI agents operate autonomously .

2. How does AI Agent Risk Management differ from traditional IT risk management?

It focuses on risks unique to autonomous decision-making, learning capabilities, and potential ethical implications, which are less central in traditional IT.

3. What tools or platforms can help implement AI Agent Risk Management?

Platforms like Lyzr.ai offer SDKs and environments like Lyzr Studio to build, deploy, and manage AI agents with features supporting monitoring and control.

4. What are the key tradeoffs to consider when working with AI Agent Risk Management?

Key tradeoffs often involve balancing innovation speed with safety rigor, autonomy with oversight, and development cost with comprehensive risk mitigation.

5. How are enterprises typically applying AI Agent Risk Management to solve real-world problems?

Enterprises use it to secure financial transactions, enhance cybersecurity with tools like Autonomous Agents, ensure regulatory compliance, and manage operational risks.

6. Can AI agents manage their own risks?

While advanced AI might assist in monitoring, true AI Agent Risk Management currently requires human oversight and a structured framework beyond self-regulation.

7. How important is data quality in AI Agent Risk Management?

Extremely important; poor data quality can lead to biased or flawed agent behavior, directly increasing various risks like decision errors and ethical breaches.

8. What role does “explainability” play in AI Agent Risk Management?

Explainability (XAI) is crucial for understanding why an agent made a decision, enabling debugging, ensuring accountability, and building trust .

Conclusion

Effective AI Agent Risk Management is paramount as organizations increasingly rely on autonomous AI systems. By systematically identifying, assessing, and mitigating potential risks, businesses can harness the transformative power of AI agents while ensuring safety, reliability, and ethical alignment. A proactive, context-aware, and continuous approach to managing these risks will be a key differentiator for enterprises navigating the complexities of the AI-driven future, fostering innovation responsibly with platforms like Lyzr.ai.