
When teams set out to build zero-error AI systems, the conventional answer has always been to spend more money. The common refrain is: “If we just buy the newest, largest, and most expensive model, say the next generation of GPT or Claude, surely it will be smart enough not to fail, right?” This approach makes intuitive sense. You are trusting the “Single Genius” model to do a complex job flawlessly. However, achieving Reliable AI requires more than just investment.

But here’s the harsh reality: brilliance does not equal reliability.

A Large Language Model (LLM), no matter how smart, is fundamentally probabilistic. In an enterprise workflow that requires 10, 50, or 100 sequential steps, that small, non-zero chance of error does not disappear. It compounds catastrophically. Even a model with an impressive 99.9% per-step accuracy, when run through a 1,000-step process, completes the whole chain without error only about 36.8 percent of the time (0.999 multiplied by itself 1,000 times). That is not enterprise-grade.
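To make the compounding concrete, here is a minimal sketch of the arithmetic. It assumes every step succeeds independently with the same probability, which is a simplification; the function name `chain_success_rate` is illustrative and not part of any Lyzr API.

```python
def chain_success_rate(per_step_accuracy: float, steps: int) -> float:
    """Probability that every one of `steps` independent steps succeeds."""
    return per_step_accuracy ** steps


# Same 99.9% per-step accuracy, increasingly long workflows.
for steps in (10, 50, 100, 1000):
    print(f"{steps:>5} steps -> {chain_success_rate(0.999, steps):.1%} end-to-end")
```

The longer the chain, the more a tiny per-step error rate dominates the end-to-end result.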

This is why stakeholders struggle to trust AI. Trust is not about magic. It is about mathematical predictability. If a system cannot guarantee outcomes, you cannot trust it with your most critical workflows.

We need to stop trying to fix the “Genius” and start fixing the “System.” The solution for truly reliable AI is not a bigger model. It is an architectural revolution.

Reliable AI is built on trust and predictability, not just on advanced algorithms or expensive models.

The Compounding Crisis of the “Single Genius” Architecture

Your team knows this pain well. You task a monolithic AI agent with a complex goal like resolving a multi-faceted billing dispute or automating a mortgage application workflow.

The system must execute dozens of steps: verify identity, pull invoice data, compare contract rates, validate documents, and more. The moment it hits a glitch, perhaps a minor misinterpretation or a formatting error, the entire chain collapses. A human then steps in to manually clean up the failure.

You are using a leaky bucket to carry water across your enterprise. You have invested in a powerful tool, yet its unpredictability prevents you from confidently automating your most valuable processes. This reliability gap is the barrier to true AI scale in industries like banking, healthcare, and customer support.

The core flaw is simple: you are relying on a single craftsman to build perfect output from memory, over and over. The intelligence is not the problem. The absence of a zero-defect assembly line is.

The Turning Point: Reliability via Redundancy

What if we approached AI not as a creative writer, but as a high-reliability operation like a nuclear control room?

The key insight is this: models will fail individually. Instead of chasing a flawless model, you engineer a mathematically guaranteed system.

This is the shift to a Massively Decomposed Architecture, the foundation of the Lyzr Six Sigma Agent model.

The New Mental Model

Decomposition (The Architect)

A powerful reasoning model, called the Architect Agent, receives a complex task such as resolving a dispute. Its only job is to break the problem into the smallest possible atomic steps, each producing a simple, binary outcome like “YES” or “NO.” It builds a dependency tree but never executes the work itself.
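One way to picture the Architect's output is as a small dependency graph of yes/no questions. The sketch below is purely illustrative: the `AtomicStep` type, the step names, and the questions are assumptions made up for this example, not the Lyzr data model. It uses Python's standard `graphlib` module to derive an order in which the steps could be run.

```python
from dataclasses import dataclass
from graphlib import TopologicalSorter


@dataclass(frozen=True)
class AtomicStep:
    name: str
    question: str                      # phrased so the answer is YES or NO
    depends_on: tuple[str, ...] = ()   # names of steps that must finish first


def execution_order(steps: list[AtomicStep]) -> list[str]:
    """Return step names in an order that respects every dependency."""
    graph = {step.name: set(step.depends_on) for step in steps}
    return list(TopologicalSorter(graph).static_order())


# Hypothetical plan for a billing dispute.
plan = [
    AtomicStep("identity_verified", "Does the requester match the account holder?"),
    AtomicStep("invoice_found", "Does the disputed invoice exist?", ("identity_verified",)),
    AtomicStep("rate_matches_contract", "Does the invoiced rate equal the contract rate?", ("invoice_found",)),
    AtomicStep("refund_eligible", "Is the customer eligible for a refund?", ("rate_matches_contract",)),
]

print(execution_order(plan))
```

The planner only produces the graph; executing each step is left to other components.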

Redundancy (The Micro-Agents)

For every atomic action, the system launches multiple independent Micro-Agents powered by smaller, cheaper, faster models. These agents execute the same task in parallel, creating redundancy.

Verification (The Voting Coordinator)

A Voting Coordinator collects all results and checks for a majority consensus. Only the consensus answer is accepted as truth. Any outlier output is discarded.

If five agents verify the same fact and one agent hallucinates, the 4-to-1 majority wins. The verified result is written to system memory, and the next atomic task is triggered.

This systematic loop transforms probabilistic inference into deterministic execution.
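The consensus check itself is simple to express. The following is a minimal sketch of strict-majority voting over agent answers, assuming each Micro-Agent returns a plain string such as "YES" or "NO"; `majority_vote` is a hypothetical helper, not a Lyzr function.

```python
from collections import Counter
from typing import Optional


def majority_vote(answers: list[str]) -> Optional[str]:
    """Return the answer held by a strict majority, or None if there is none."""
    if not answers:
        return None
    answer, count = Counter(answers).most_common(1)[0]
    return answer if count > len(answers) / 2 else None


# Five agents check the same fact; one of them hallucinates.
votes = ["YES", "YES", "NO", "YES", "YES"]
print(majority_vote(votes))
```

Returning `None` when no answer has a strict majority leaves the caller free to retry or escalate rather than accept a coin flip.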

The Math: Achieving Six Sigma in AI

Six Sigma in manufacturing targets 3.4 defects per million opportunities (DPMO). This approach applies the same logic to AI workflows.

Failure Probability

- Single “Genius” agent error rate: 1%

- Micro-agent error rate: 5%

For the Lyzr system to fail, at least 3 out of 5 agents must produce the same wrong answer simultaneously. Using binomial probability, the overall failure rate drops dramatically.

Reliability Comparison

| System | Error Rate |
| --- | --- |
| Single Genius Agent | 1.00% (1 in 100) |
| Five Micro-Agents with Voting | 0.11% (1 in 900) |

Even using individually less reliable models, the system becomes roughly 9x more reliable through redundancy and consensus.
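The figure for five voting agents can be checked with a short binomial calculation. This sketch assumes agents fail independently and that outcomes are binary, so any wrong answers automatically agree with each other; the function name is illustrative.

```python
from math import comb


def majority_failure_rate(n_agents: int, agent_error: float) -> float:
    """P(more than half of n independent agents are wrong at the same time)."""
    threshold = n_agents // 2 + 1  # smallest number of wrong votes that wins
    return sum(
        comb(n_agents, k) * agent_error**k * (1 - agent_error) ** (n_agents - k)
        for k in range(threshold, n_agents + 1)
    )


rate = majority_failure_rate(5, 0.05)
print(f"failure rate: {rate:.4%}  (about 1 in {round(1 / rate)})")
```

Under these assumptions the result is just under 0.12%, or roughly 1 in 860, which is in line with the rounded figures above. Correlated failures, where agents share the same blind spot, would make the real number worse.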

Dynamic Scaling for Six Sigma Reliability

A 1-in-900 failure rate is approximately 3-Sigma reliability. Reaching true Six Sigma requires pushing failure down to 0.00034 percent, which is the 3.4 defects per million opportunities quoted above.

The Lyzr Dynamic Scaling strategy balances reliability with cost:

- Start with 5 agents

- If the vote is decisive (5-0 or 4-1), accept the result

- If contested (3-2), automatically spin up additional agents (up to 13 total) to reach Six Sigma certainty

You only pay for higher redundancy when complexity demands it.
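The escalation rule above can be sketched as a small loop. The article does not say how many agents are added per round or exactly when a widened vote is accepted, so this sketch assumes two extra agents per round and acceptance once the leading answer is ahead by at least three votes (the same margin as a 4-1 result). `ask_agent` is a hypothetical callable standing in for one Micro-Agent call.

```python
from collections import Counter
from typing import Callable


def dynamic_vote(
    ask_agent: Callable[[], str],
    start: int = 5,
    max_agents: int = 13,
    min_margin: int = 3,  # assumption: 4-1 counts as decisive, 3-2 does not
) -> tuple[str, int]:
    """Return (winning answer, number of agents consulted)."""
    votes = Counter(ask_agent() for _ in range(start))
    used = start
    while True:
        ranked = votes.most_common(2)
        runner_up = ranked[1][1] if len(ranked) > 1 else 0
        lead = ranked[0][1] - runner_up
        if lead >= min_margin or used >= max_agents:
            # Decisive, or out of budget: take the current leader.
            return ranked[0][0], used
        votes.update(ask_agent() for _ in range(2))  # assumption: add 2 per round
        used += 2


# Scripted answers: the first five split 3-2, so two more agents are consulted.
scripted = iter(["YES", "NO", "YES", "NO", "YES", "YES", "YES"])
print(dynamic_vote(lambda: next(scripted)))
```

Adding agents in pairs keeps the total odd, so a binary vote can never end in a tie.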

Micro-Agent Requirements for Six Sigma

| Micro-Agent Individual Error Rate | Agents Required |
| --- | --- |
| 1% (fine-tuned models) | 7 agents |
| 5% (base models) | 13 agents |
| 10% (lightweight models) | 23 agents |
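As a rough cross-check of the table, the sketch below searches for the smallest odd number of agents whose majority-failure probability falls at or below 3.4 defects per million. It assumes independent agents, binary outcomes, and simple majority voting; the names are illustrative. Under these assumptions it reproduces the 7-agent and 13-agent rows. For a 10% error rate it returns 21 rather than 23, so the table's figure presumably reflects a stricter target or a safety margin that is not spelled out here.

```python
from math import comb

SIX_SIGMA_DEFECT_RATE = 3.4e-6  # 3.4 defects per million opportunities


def majority_failure_rate(n_agents: int, agent_error: float) -> float:
    """P(more than half of n independent agents are wrong at the same time)."""
    threshold = n_agents // 2 + 1
    return sum(
        comb(n_agents, k) * agent_error**k * (1 - agent_error) ** (n_agents - k)
        for k in range(threshold, n_agents + 1)
    )


def agents_needed(
    agent_error: float,
    target: float = SIX_SIGMA_DEFECT_RATE,
    limit: int = 99,
) -> int:
    """Smallest odd agent count whose majority vote meets the target rate."""
    for n in range(1, limit + 1, 2):  # odd counts only, so votes cannot tie
        if majority_failure_rate(n, agent_error) <= target:
            return n
    raise ValueError(f"target not reachable with {limit} agents or fewer")


for error in (0.01, 0.05):
    print(f"{error:.0%} agent error -> {agents_needed(error)} agents")
```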

The Strategic Advantage: Better and Cheaper

A common assumption is that reliability always costs more. Lyzr proves the opposite.

By leveraging the cost difference between expensive reasoning models and fast, cheap atomic models, Lyzr reduces total workflow cost while increasing reliability.

Cost and Reliability Comparison

| Feature | Scenario A: Single “Genius” Agent | Scenario B: Lyzr Six Sigma Agent |
| --- | --- | --- |
| Architecture | Single monolithic GPT-5.1 model | Distributed voting mesh using GPT-5 nano |
| Cost per case | $0.88 | $0.18 |
| Cost for 10,000 cases | $8,750 | $1,750 |
| Reliability | ~90% (high stall risk) | 99.9997% (Six Sigma) |

The same workflow runs at 80% lower cost with near-perfect reliability using the Six Sigma architecture.
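The 80% figure follows directly from the 10,000-case totals in the table. The short sketch below redoes that arithmetic; it uses only the table's numbers and adds no new pricing data.

```python
CASES = 10_000
single_total = 8_750  # USD for 10,000 cases, Scenario A (from the table)
mesh_total = 1_750    # USD for 10,000 cases, Scenario B (from the table)

# Unrounded per-case costs; the table shows these rounded to $0.88 and $0.18.
single_per_case = single_total / CASES
mesh_per_case = mesh_total / CASES

savings = 1 - mesh_total / single_total
print(f"per case: ${single_per_case:.3f} vs ${mesh_per_case:.3f}, savings: {savings:.0%}")
```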

Why Enterprises Should Care

For enterprises, reliability is not a technical detail. It is the difference between experimental automation and true operational transformation.

A reliable AI system means:

- Regulatory compliance workflows can run without constant human oversight

- Financial calculations and reconciliations can execute with audit-grade accuracy

- Customer resolution flows can be fully automated without escalations

- Mission-critical processes finally move from pilots into production scale

Instead of “AI as a demo tool,” organizations achieve AI as an operational foundation. The shift is from hoping the model behaves correctly to engineering systems that mathematically guarantee correct execution. This is what unlocks cost savings, workforce leverage, regulatory confidence, and leadership trust across the enterprise.

Real-World Enterprise Workflow Scenario: Billing Dispute Resolution

Consider one of the most common and painful enterprise workflows: billing dispute resolution.


Using a single monolithic AI agent, a mistake at any stage, such as misreading a contract clause, misapplying a pricing tier, or outputting improperly formatted data, collapses the entire workflow and forces costly human rework.

With the Lyzr Six Sigma Agent architecture, this workflow is decomposed into atomic actions. The Architect Agent splits the case into small verifiable steps. For each step, multiple Micro-Agents independently validate facts such as contract rates, invoice totals, usage thresholds, and refund eligibility.

The Voting Coordinator accepts only majority-approved answers before advancing. This ensures that every decision point is mathematically validated before action is taken. The outcome is a workflow that executes automatically with audit-grade accuracy, near-zero escalations, and predictable resolution timing, enabling enterprises to scale high-volume dispute handling without scaling headcount.

Lyzr: Enabling Enterprise Trust

AI is moving from the “magic demo” era into the reliable operations era.

Lyzr does not offer another LLM wrapper. It provides the deterministic system enterprises need. Rather than betting on individual brilliance, it guarantees execution quality through architectural discipline.

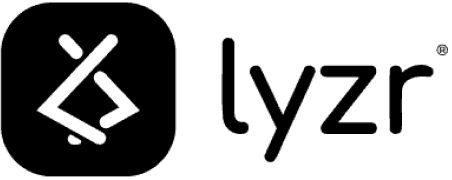

Within Lyzr Agent Studio, the Architect Agent and Voting Coordinator Agent are native components that allow teams to build these workflows visually or programmatically. This structured AgentMesh architecture supports Organizational General Intelligence (OGI), a scalable, continuously improving collective intelligence across the enterprise.

The Destination: From Hope to Proof

You don’t need to hope your AI is smart enough. You need mathematical proof that it is reliable enough.

True trustworthy AI is not an ethical wishlist. It is a Six Sigma performance standard.

By decomposing workflows into atomic steps and validating each action through redundant consensus, enterprises gain the reliability required for mission-critical deployment.

Explore the full technical paper on the Lyzr Six Sigma Agent architecture and begin building your zero-error workflows today.

FAQs

Q1: What is the core difference between the Lyzr Six Sigma Agent and a standard monolithic LLM agent?

Lyzr uses a Massively Decomposed Architecture, breaking complex tasks into verifiable atomic actions executed by multiple Micro-Agents and validated by consensus voting. A monolithic agent relies on a single probabilistic model.

Q2: Why does failure compound in long workflows?

Per-step success rates multiply across sequential steps, so small errors compound. Even at a 0.1% error rate per step, a 1,000-step workflow succeeds end-to-end only about 36.8% of the time.

Q3: How is Six Sigma reliability achieved?

Through redundancy and consensus voting. System failure requires several independent agents to produce the same wrong answer simultaneously, making total system error effectively negligible.

Q4: Does using smaller Micro-Agents lower quality?

No. The Architect Agent handles complex reasoning and planning. Micro-Agents perform only simple binary checks where smaller models perform equally well.

Q5: What does the Voting Coordinator do?

It enforces quality control by selecting the majority consensus and discarding hallucinated or erroneous outputs.

Q6: Why is this more cost-effective?

Because multiple low-cost models replace one extremely expensive reasoning model, reducing workflow cost by up to 80% while improving reliability.

Q7: What is Dynamic Scaling?

The system begins with five agents and scales up only when votes are contested, ensuring maximum reliability with optimized spend.

Q8: How can I start building with this approach?

Use Lyzr Agent Studio, a low-code environment for deploying Architect Agents, Voting Coordinators, and full Multi-Agent workflows at production scale.
