Just knowing how to ask a question isn’t enough anymore.

It’s knowing how to frame that question that separates a useless answer from a groundbreaking one.

Prompting Methods are a toolkit of specialized techniques for structuring your instructions to an AI. They are the difference between using a blunt instrument and a surgical tool to get exactly the response you need.

Think of it like a master chef’s knife set.

You wouldn’t use a giant cleaver for delicate peeling, and you wouldn’t use a tiny paring knife to break down a large piece of meat. Each knife has a purpose.

Similarly, Zero-Shot prompting is your all-purpose chef’s knife: good for many things. But for a complex reasoning task, you need the precision of a Chain-of-Thought “boning knife” to carefully separate the logic.

Using the wrong method leads to frustration, bad results, and the mistaken belief that the AI is incapable.

Using the right one unlocks its true potential. This is the playbook for getting it right.

When should you use Zero-Shot Prompting?

Use this when your task is simple and straightforward.

It’s the most basic form of prompting.

You ask the AI to do something without giving it any prior examples.

“Explain quantum computing in simple terms.”

You are relying entirely on the knowledge the AI already has from its training data.

It’s fast and requires almost no setup.

But for anything complex or nuanced, it’s probably not the right tool for the job.

What is Few-Shot Prompting for?

This is for when you need to teach the AI a specific pattern or format.

Instead of just telling it what to do, you show it.

You include two to five input-output examples of what you want directly in the prompt.

This teaches the model by example, which is often far more effective than just explaining.

For instance, to get a specific sentiment classification:

- Text: “The movie was fantastic!” -> Sentiment: Positive

- Text: “Terrible service.” -> Sentiment: Negative

- Text: “The flight was delayed.” -> Sentiment: ?

Given the two labeled examples, the AI will most likely continue the pattern and answer “Negative.”
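As a minimal sketch, the sentiment example above could be assembled programmatically. The function name `build_few_shot_prompt` is an assumption made for illustration; the resulting string would be sent to whichever model you use.

```python
def build_few_shot_prompt(examples, query):
    """Format labeled examples plus one unlabeled query as a few-shot prompt."""
    lines = [f'Text: "{text}" -> Sentiment: {label}' for text, label in examples]
    # Leave the final label blank so the model completes the pattern.
    lines.append(f'Text: "{query}" -> Sentiment:')
    return "\n".join(lines)


examples = [
    ("The movie was fantastic!", "Positive"),
    ("Terrible service.", "Negative"),
]
prompt = build_few_shot_prompt(examples, "The flight was delayed.")
print(prompt)
```

Keeping the examples in a list makes it easy to swap them out or add more when the model misreads the pattern.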

How does Chain-of-Thought (CoT) improve AI reasoning?

It forces the AI to slow down and show its work.

Instead of asking for a final answer, you explicitly instruct the model to “think step-by-step.”

This is a game-changer for math problems, logic puzzles, and complex reasoning tasks.

It guides the model through a logical sequence instead of letting it jump to a potentially flawed conclusion.

The result is often a marked improvement in accuracy on tasks that require multi-step deduction.
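One way to use this in practice is to ask for the reasoning first and a clearly marked final answer last, so the answer can be pulled out of the response afterwards. The sketch below assumes an `Answer:` marker convention and uses a hand-written `sample_response` in place of real model output; both are illustrative choices, not a standard.

```python
import re


def build_cot_prompt(question):
    """Append a step-by-step instruction and an answer-format request."""
    return (
        f"{question}\n"
        "Think step-by-step, then give the final answer on its own line "
        "in the form 'Answer: <value>'."
    )


def extract_answer(response):
    """Return the text after the last 'Answer:' marker, or None if absent."""
    matches = re.findall(r"Answer:\s*(.+)", response)
    return matches[-1].strip() if matches else None


# Hand-written stand-in for a model response (not real model output).
sample_response = (
    "There are 3 boxes with 4 pens each, so 3 * 4 = 12 pens.\n"
    "Two pens are removed, so 12 - 2 = 10.\n"
    "Answer: 10"
)
print(build_cot_prompt("3 boxes hold 4 pens each. 2 pens are removed. How many remain?"))
print(extract_answer(sample_response))
```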

Why would you assign a role to an AI?

You do this to focus its knowledge and set a consistent tone.

This is called Role Prompting.

“You are an experienced pediatrician…”

“You are a sarcastic marketing expert…”

By giving the AI a persona, you are telling it which part of its vast knowledge base to access and what kind of personality to adopt.

It’s a powerful way to get responses that are not just accurate, but also stylistically appropriate for your needs.
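Many chat-style model APIs accept a list of messages in which a system message carries the persona. The sketch below assumes that common `role`/`content` message shape; the helper name `build_role_messages` is hypothetical, and the exact format your provider expects may differ.

```python
def build_role_messages(persona, user_question):
    """Put the persona in a system message and the request in a user message."""
    return [
        {"role": "system", "content": f"You are {persona}."},
        {"role": "user", "content": user_question},
    ]


messages = build_role_messages(
    "a sarcastic marketing expert",
    "Write a one-line tagline for a reusable water bottle.",
)
for message in messages:
    print(f"{message['role']}: {message['content']}")
```

Separating the persona from the request lets you reuse the same role across many questions and keeps the tone consistent.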

How does Retrieval-Augmented Generation (RAG) fight hallucinations?

RAG grounds the AI in reality.

It’s a technique that fights against the AI’s tendency to make things up (hallucinate).

Here’s how it works:

- Your prompt is used to search a private, trusted knowledge base (like your company’s internal documents).

- The relevant documents are “retrieved.”

- Those documents are then injected into a new prompt for the AI, with the instruction: “Based ONLY on the provided information, answer the following question.”

This steers the AI to base its answer on verified facts you provided rather than on its general training data alone, which reduces hallucinations but does not rule them out.
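The three steps above can be sketched in a few lines. This is a toy illustration under stated assumptions: retrieval here is simple word overlap, whereas real systems typically use a search index or embeddings, and the `knowledge_base` entries are invented sample data.

```python
import re


def tokenize(text):
    """Lowercase the text and return its set of words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query, documents, top_k=2):
    """Rank documents by word overlap with the query (toy stand-in for real search)."""
    query_words = tokenize(query)
    scored = [(len(query_words & tokenize(doc)), doc) for doc in documents]
    scored = [pair for pair in scored if pair[0] > 0]  # drop unrelated documents
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for _, doc in scored[:top_k]]


def build_grounded_prompt(query, retrieved):
    """Inject the retrieved documents into a new prompt with the grounding instruction."""
    context = "\n".join(f"- {doc}" for doc in retrieved)
    return (
        "Based ONLY on the provided information, answer the following question.\n\n"
        f"Information:\n{context}\n\n"
        f"Question: {query}"
    )


# Invented sample data standing in for a private knowledge base.
knowledge_base = [
    "Refunds are processed within 14 days of a return request.",
    "The office is closed on public holidays.",
    "Return requests must include the original order number.",
]
question = "How long do refunds take after a return request?"
retrieved = retrieve(question, knowledge_base)
prompt = build_grounded_prompt(question, retrieved)
print(prompt)
```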

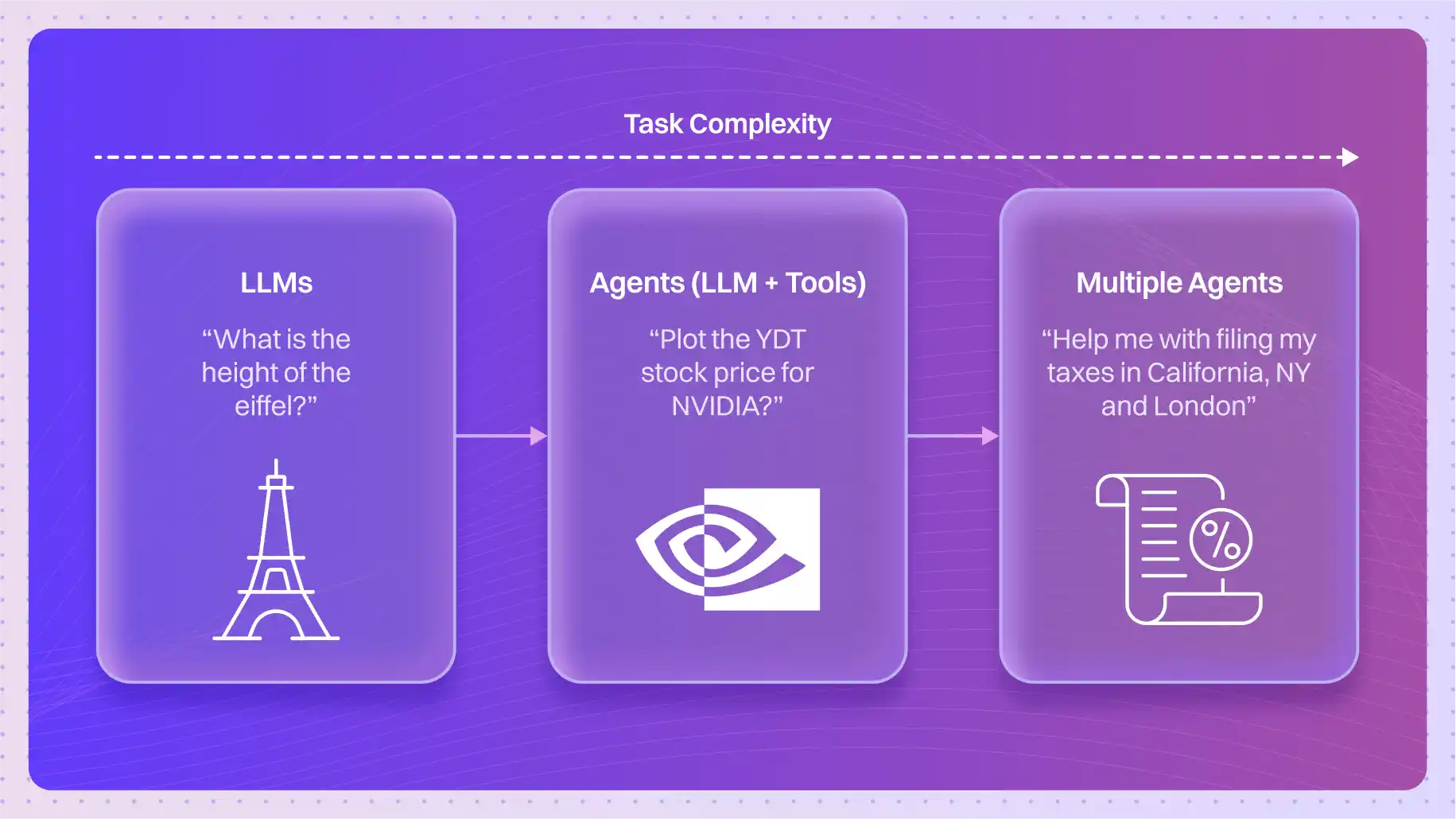

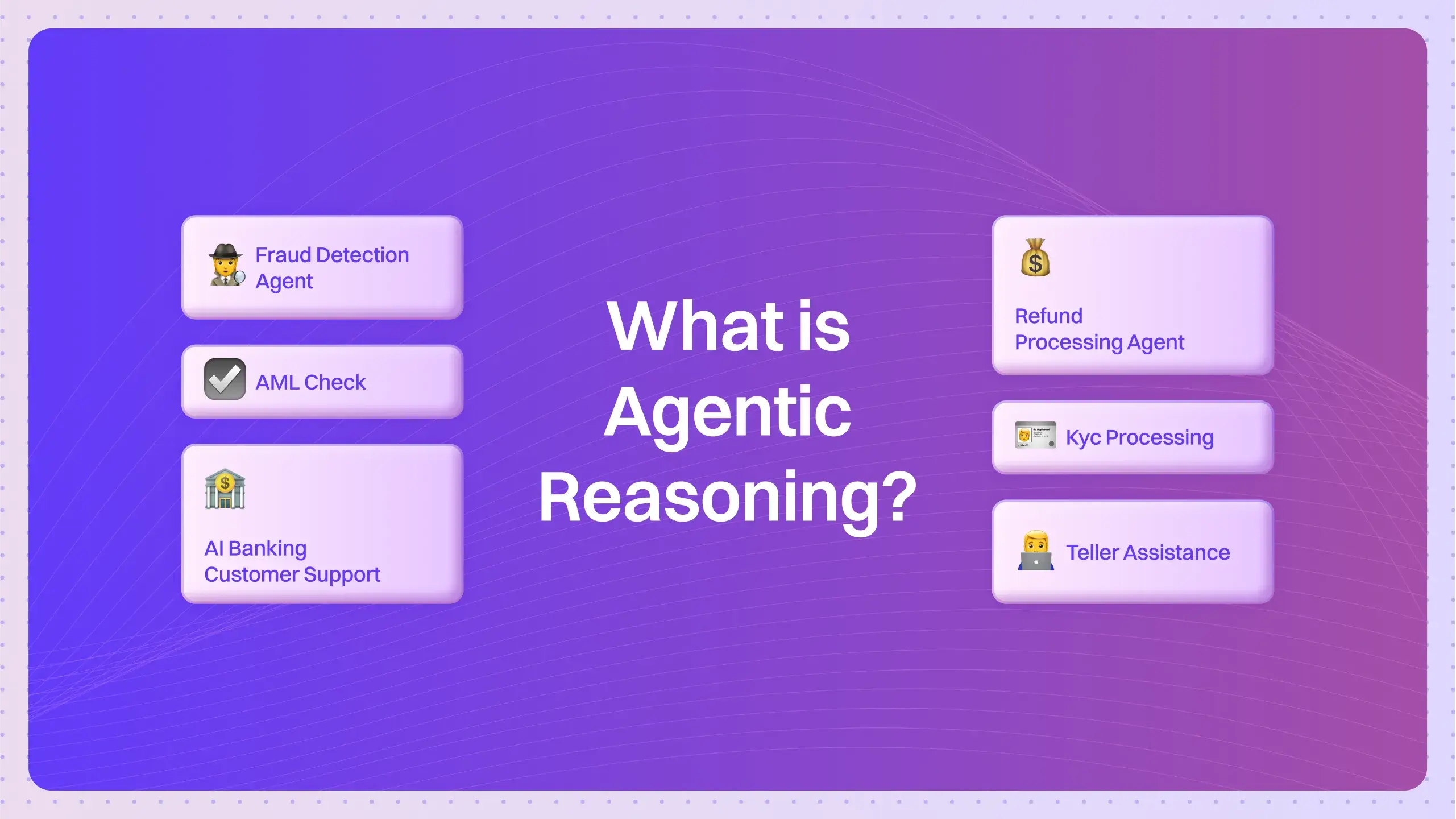

What advanced methods are used for complex AI agents?

When an AI needs to do more than just answer a question, it needs more advanced methods that allow it to think, act, and learn.

ReAct (Reasoning + Acting) is a framework that allows an agent to work through multi-step tasks. The agent verbalizes its thought process, chooses an action (like using a tool or searching the web), observes the result, and then thinks again. It’s a loop of Thought -> Action -> Observation that enables complex problem-solving.
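A minimal sketch of the Thought -> Action -> Observation loop follows. To keep it runnable without a model, `scripted_model` is a hypothetical stand-in that returns canned text, and the `Action: name[argument]` syntax and the `multiply` tool are assumptions made for illustration.

```python
import re


def multiply(arg):
    """Toy tool: multiply two comma-separated integers."""
    a, b = (int(part) for part in arg.split(","))
    return str(a * b)


TOOLS = {"multiply": multiply}


def scripted_model(transcript):
    """Hypothetical stand-in for an LLM call; a real agent would query a model here."""
    observations = re.findall(r"Observation: (.+)", transcript)
    if not observations:
        return "Thought: I should compute 12 * 7 with a tool.\nAction: multiply[12, 7]"
    return f"Thought: The tool returned {observations[-1]}.\nFinal Answer: {observations[-1]}"


def react_loop(question, model, tools, max_steps=5):
    """Alternate model steps and tool calls until a final answer appears."""
    transcript = f"Question: {question}"
    for _ in range(max_steps):
        step = model(transcript)
        transcript += "\n" + step
        final = re.search(r"Final Answer: (.+)", step)
        if final:
            return final.group(1).strip(), transcript
        action = re.search(r"Action: (\w+)\[(.*?)\]", step)
        if action is None:
            break  # the model produced neither an action nor an answer
        name, arg = action.groups()
        tool = tools.get(name)
        observation = tool(arg) if tool else f"unknown tool {name}"
        transcript += f"\nObservation: {observation}"
    return None, transcript


answer, trace = react_loop("What is 12 times 7?", scripted_model, TOOLS)
print(trace)
```

The `max_steps` cap is a design choice worth keeping in any real agent, since a model that never emits a final answer would otherwise loop forever.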

Reflexion takes this a step further. It allows an AI to critique its own work. The agent produces a response, then it reflects on that response, identifies flaws, and generates a new, improved version based on its own critique. It’s a powerful method for self-correction and iterative improvement.
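The generate, critique, and revise cycle described above might look like the sketch below. Both `draft_summary` and `critique` are hypothetical rule-based stand-ins for model calls, chosen so the loop can run on its own; in a real system each would be a separate prompt to the model.

```python
def draft_summary(task, feedback):
    """Hypothetical generator; a real system would call a model with the feedback."""
    text = "Our product is fast"
    if "mention price" in feedback:
        text += " and costs $10"
    if "end with a period" in feedback:
        text += "."
    return text


def critique(draft):
    """Hypothetical critic; returns a list of problems found in the draft."""
    issues = []
    if "$" not in draft:
        issues.append("mention price")
    if not draft.endswith("."):
        issues.append("end with a period")
    return issues


def reflexion_loop(task, generate, review, max_rounds=3):
    """Generate a draft, collect critiques, and regenerate until none remain."""
    feedback = []
    draft = generate(task, feedback)
    for _ in range(max_rounds):
        issues = review(draft)
        if not issues:
            break
        feedback.extend(issues)  # keep earlier critiques as memory for the next attempt
        draft = generate(task, feedback)
    return draft, feedback


final_draft, notes = reflexion_loop("Write a one-sentence product blurb.", draft_summary, critique)
print(final_draft)
print(notes)
```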

Quick Test: Choose Your Method

You’re presented with three different tasks. Which prompting method would you choose for each?

- You need an AI to process 1,000 customer reviews and return the output in a clean, machine-readable JSON format for your database.

- You need to create a travel itinerary for a 10-day trip to Japan, which involves looking up flight times, booking hotels, and finding train schedules.

- You want the AI to write a poem in the style of Edgar Allan Poe. You’ve tried a simple prompt, but it’s not quite capturing the tone.

Answers, in order: 1) Structured Prompting, where the prompt spells out the exact output schema you expect, is a strong fit for forcing a specific format like JSON. 2) ReAct (Reasoning + Acting) is ideal for a multi-step task that requires using external tools. 3) Few-Shot Prompting would be a great choice. You could provide a few stanzas of Poe’s work to give the AI a clear pattern to follow.
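For the first task, a Structured Prompting setup could pair a schema-bearing prompt with a validation step, since a model can still return malformed output. The key names in `REQUIRED_KEYS` and the `sample_reply` string are assumptions made for illustration, not a fixed schema.

```python
import json

REQUIRED_KEYS = {"review_id", "sentiment", "summary"}


def build_structured_prompt(review_id, review_text):
    """Ask for a single JSON object with an explicitly listed set of keys."""
    return (
        "Analyze the customer review below and respond with a single JSON object "
        "and nothing else. Use exactly these keys: "
        '"review_id" (integer), "sentiment" ("positive", "negative", or "neutral"), '
        '"summary" (string).\n\n'
        f"review_id: {review_id}\n"
        f"Review: {review_text}"
    )


def parse_response(raw):
    """Parse the model's reply and confirm it has the expected keys."""
    data = json.loads(raw)
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")
    missing = REQUIRED_KEYS - set(data)
    if missing:
        raise ValueError(f"missing keys: {sorted(missing)}")
    return data


# Hand-written stand-in for a model reply (not real model output).
sample_reply = '{"review_id": 17, "sentiment": "negative", "summary": "Late delivery."}'
record = parse_response(sample_reply)
print(record["sentiment"])
```

Validating every reply before it reaches the database matters at a volume of 1,000 reviews, where even a small failure rate produces several bad records.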

More Questions on Prompting Methods

Can you combine different prompting methods?

Absolutely. The most effective prompts often do. You might use Role Prompting to set the persona, Few-Shot Prompting to provide examples, and Chain-of-Thought to ensure the reasoning is sound, all within a single prompt.
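As a rough sketch of such a combination, the function below stacks a persona line, worked examples that include their reasoning, and a step-by-step instruction for the new question. The function name and the `Q:`/`Reasoning:`/`A:` layout are illustrative assumptions.

```python
def build_combined_prompt(persona, worked_examples, question):
    """Combine a role, few-shot examples with reasoning, and a CoT instruction."""
    sections = [f"You are {persona}."]
    for example in worked_examples:
        sections.append(
            f"Q: {example['question']}\n"
            f"Reasoning: {example['reasoning']}\n"
            f"A: {example['answer']}"
        )
    # End on an open "Reasoning:" line so the model reasons before answering.
    sections.append(f"Q: {question}\nThink step-by-step before answering.\nReasoning:")
    return "\n\n".join(sections)


worked_examples = [
    {
        "question": "A shelf holds 3 rows of 5 books. How many books are there?",
        "reasoning": "3 rows times 5 books per row is 15.",
        "answer": "15",
    }
]
combined = build_combined_prompt(
    "a careful math tutor",
    worked_examples,
    "A box holds 4 trays of 6 eggs. How many eggs are there?",
)
print(combined)
```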

Which prompting method is the ‘best’?

There is no “best” method, only the best method for a specific task. A simple question needs a simple Zero-Shot prompt. A complex data analysis task might need a combination of RAG and Structured Prompting. The skill is in matching the tool to the job.

How is ReAct different from Chain-of-Thought?

Chain-of-Thought is about verbalizing a reasoning process to arrive at a single answer. ReAct is about verbalizing a reasoning process to choose an action, often involving external tools. CoT is for thinking; ReAct is for thinking and doing.

What’s the main risk of Role Prompting?

The primary risk is encouraging the AI to overstep its capabilities. Prompting an AI to act as a doctor might lead it to give plausible-sounding but dangerously incorrect medical advice. It’s crucial to remember the AI is a simulator, not an actual expert.

Is RAG the same as fine-tuning?

No. RAG provides knowledge to the model at the time of the query. Fine-tuning is the process of retraining the model on new data to permanently alter its base knowledge. RAG is like giving someone a textbook to reference for one question; fine-tuning is having them study that textbook for weeks until they’ve memorized it.

When is Self-Consistency worth the extra computational cost?

It’s worth it for tasks where accuracy is paramount and there is a single correct answer, like complex math or logical proofs. By having the AI solve the problem multiple times and taking the most common answer, you are using a form of digital democracy to find the most likely correct solution.
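The voting step can be sketched with a simple majority count. Here `make_scripted_sampler` is a hypothetical stand-in that replays canned answers; in practice each sample would be a separate model call with some randomness enabled so the reasoning paths differ.

```python
from collections import Counter


def self_consistent_answer(sample_fn, question, n_samples=5):
    """Sample several answers and return the most common one with its vote share."""
    answers = [sample_fn(question) for _ in range(n_samples)]
    winner, votes = Counter(answers).most_common(1)[0]
    return winner, votes / n_samples


def make_scripted_sampler(outputs):
    """Hypothetical sampler that replays fixed outputs instead of calling a model."""
    remaining = iter(outputs)
    return lambda question: next(remaining)


sampler = make_scripted_sampler(["42", "42", "41", "42", "40"])
answer, agreement = self_consistent_answer(sampler, "What is 6 * 7?", n_samples=5)
print(answer, agreement)
```

The vote share doubles as a rough confidence signal: a low share suggests the samples disagree and the result deserves a closer look. The cost scales linearly with `n_samples`, which is the trade-off the question refers to.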

These methods are not just tricks.

They are the beginning of a new language for human-machine collaboration. As AI becomes more capable, our ability to direct it with precision and nuance will be one of the most valuable skills in any industry.