Artificial intelligence is moving fast, and the promise of generative AI feels bigger than ever. From automating customer service to generating creative content, AI models are reshaping how industries work. But one stubborn challenge keeps coming up: AI hallucinations.

These are moments when an AI sounds confident but produces something wrong or nonsensical, and that can quickly erode trust. For enterprises, where accuracy is non-negotiable, this becomes a critical issue.

That’s where Lyzr agents come in.

Built on AWS Bedrock Guardrails, they use Automated Reasoning Checks to sharply reduce hallucinations, with verification accuracy of up to 99%. In this post, we’ll break down how that works and why Lyzr’s approach takes Bedrock’s strong foundation even further, making AI outputs not just smart, but truly reliable.

Understanding AI Hallucinations and the Imperative for Guardrails

AI hallucinations pose a critical threat to the adoption and effectiveness of AI systems. Imagine an AI assistant providing incorrect medical advice or a financial AI generating misleading market analysis. Errors like these can look minor in isolation, yet they can have severe consequences.

Hallucinations arise from various factors, including biases in training data, limitations in model architecture, or misinterpretations of complex queries. Regardless of their origin, they erode user confidence and can lead to significant operational risks.

To combat this, the concept of “guardrails” for AI has emerged as a vital component of responsible AI development.

These guardrails are mechanisms designed to steer AI models towards safe, accurate, and ethical outputs. AWS Bedrock Guardrails represent a significant step in this direction, offering a configurable layer of protection that helps developers implement responsible AI practices.

They provide a framework to define and enforce policies, ensuring that AI applications adhere to predefined safety guidelines and operational standards.

AWS Bedrock Guardrails: A Foundation for Trustworthy AI

AWS Bedrock Guardrails offer a powerful set of tools to manage and mitigate risks associated with generative AI. Among their most impactful features are Automated Reasoning Checks, a policy that validates the factual accuracy of content generated by foundation models (FMs) against defined domain knowledge.

Unlike probabilistic methods, which handle uncertainty by assigning likelihoods, Automated Reasoning Checks use mathematical logic and formal verification techniques.

This rigorous approach checks AI responses against explicitly defined rules and parameters, so a response that contradicts those rules can be shown to be wrong rather than merely judged unlikely. That is what provides provable assurance when detecting AI hallucinations.
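To make the contrast with probabilistic scoring concrete, here is a toy Python sketch of rule-based checking. It is not how AWS implements Automated Reasoning Checks: the policy (a hypothetical parental-leave rule), the variable names, and the verdict labels are illustrative assumptions. The sketch encodes the rule as a logical constraint, fixes the facts stated in the question as premises, and then tests whether the AI's claim holds in every, none, or only some of the truth assignments that satisfy them.

```python
from itertools import product
from typing import Callable, Dict, Iterator, List

Assignment = Dict[str, bool]
Formula = Callable[[Assignment], bool]

# Hypothetical domain variables and policy rule (illustrative only).
VARIABLES: List[str] = ["full_time", "tenure_over_1yr", "eligible_parental_leave"]
RULES: List[Formula] = [
    # Eligible if and only if the employee is full-time AND has over a year of tenure.
    lambda a: a["eligible_parental_leave"] == (a["full_time"] and a["tenure_over_1yr"]),
]


def models(variables: List[str], rules: List[Formula], premises: Assignment) -> Iterator[Assignment]:
    """Yield every truth assignment consistent with the premises and all rules."""
    for values in product([False, True], repeat=len(variables)):
        a = dict(zip(variables, values))
        if all(a[name] == value for name, value in premises.items()) and all(rule(a) for rule in rules):
            yield a


def check_claim(variables: List[str], rules: List[Formula], premises: Assignment, claim: Formula) -> str:
    """Classify a claim as entailed, contradicted, or undetermined by the rules plus premises."""
    outcomes = {claim(a) for a in models(variables, rules, premises)}
    if not outcomes:
        return "premises conflict with the rules"
    if outcomes == {True}:
        return "entailed"      # true in every consistent assignment: provably correct
    if outcomes == {False}:
        return "contradicted"  # false in every consistent assignment: a provable error
    return "undetermined"      # the rules and premises do not settle the claim


if __name__ == "__main__":
    # Facts from the question: the employee is full-time but has under a year of tenure.
    premises = {"full_time": True, "tenure_over_1yr": False}
    # The AI answered: "Yes, you are eligible for parental leave."
    answer_claim: Formula = lambda a: a["eligible_parental_leave"]
    print(check_claim(VARIABLES, RULES, premises, answer_claim))  # contradicted
```

Because the sketch enumerates all 2^n assignments, it only scales to a handful of variables; production verifiers typically hand the same question to a SAT or SMT solver. The key property carries over, though: a "contradicted" verdict is a logical consequence of the rules, not a confidence estimate.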

Key aspects of AWS Bedrock’s Automated Reasoning Checks include:

- High Verification Accuracy: Delivers up to 99% verification accuracy, significantly reducing the occurrence of factual errors.

- Support for Large Documents: Processes extensive documentation of up to 122,880 tokens (approximately 100 pages), enabling comprehensive knowledge integration.

- Simplified Policy Validation: Allows for saving and repeatedly running validation tests, streamlining policy maintenance and verification.

- Automated Scenario Generation: Facilitates the automatic creation of test scenarios from definitions, saving time and ensuring comprehensive coverage.

- Enhanced Policy Feedback: Provides natural language suggestions for policy changes, simplifying improvements.

- Customizable Validation Settings: Offers control over validation strictness by allowing adjustment of confidence score thresholds.

By letting teams encode domain-specific rules into an Automated Reasoning policy, AWS Bedrock Guardrails provide a robust first line of defense against AI hallucinations.

This foundational layer helps ensure that AI outputs are not only plausible but also consistent with the established domain knowledge encoded in the policy.
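For readers wiring this up directly, the sketch below shows one way to run an already-generated answer through a guardrail with the AWS SDK for Python (boto3), using the `bedrock-runtime` client's `apply_guardrail` operation. It assumes a guardrail has already been created (with an Automated Reasoning policy attached, if desired) and that AWS credentials are configured; the guardrail ID, version, and region are placeholders, and the helper function names are hypothetical. It only inspects the top-level `action` field and assumes any replacement text is returned in `outputs`; per-policy findings are reported under `assessments`, whose exact shape this sketch does not rely on.

```python
from typing import Any, Dict, List


def build_apply_guardrail_request(guardrail_id: str, guardrail_version: str, model_answer: str) -> Dict[str, Any]:
    """Keyword arguments for the bedrock-runtime ApplyGuardrail call, checking a model's OUTPUT."""
    return {
        "guardrailIdentifier": guardrail_id,
        "guardrailVersion": guardrail_version,
        "source": "OUTPUT",  # evaluate generated text rather than the user's prompt
        "content": [{"text": {"text": model_answer}}],
    }


def guardrail_intervened(response: Dict[str, Any]) -> bool:
    """ApplyGuardrail reports 'GUARDRAIL_INTERVENED' or 'NONE' in the top-level 'action' field."""
    return response.get("action") == "GUARDRAIL_INTERVENED"


def resolve_answer(response: Dict[str, Any], model_answer: str) -> str:
    """Return the original answer, or the guardrail's replacement text if it intervened."""
    if not guardrail_intervened(response):
        return model_answer
    outputs: List[Dict[str, Any]] = response.get("outputs") or []
    return outputs[0].get("text", "") if outputs else ""


def check_answer(guardrail_id: str, guardrail_version: str, model_answer: str, region: str = "us-east-1") -> str:
    """Run a model answer through a configured guardrail (requires AWS credentials)."""
    import boto3  # imported here so the pure helpers above work without boto3 installed

    client = boto3.client("bedrock-runtime", region_name=region)
    response = client.apply_guardrail(**build_apply_guardrail_request(guardrail_id, guardrail_version, model_answer))
    return resolve_answer(response, model_answer)
```

A call such as `check_answer("your-guardrail-id", "1", answer)` returns either the untouched answer or whatever the guardrail substituted. Keeping request-building and response handling as plain functions makes them easy to unit-test without network access.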

Lyzr Agents: Building on Bedrock Guardrails for Unmatched Accuracy

While AWS Bedrock Guardrails provide a powerful framework for mitigating AI hallucinations, Lyzr agents take this a step further, offering an enhanced layer of control and verification that ensures truly reliable AI outputs.

Lyzr’s approach is not merely about preventing errors; it’s about actively building trust and delivering verifiable accuracy in every AI interaction.

Lyzr agents achieve this through a combination of proprietary features and a deep integration with AWS Bedrock’s capabilities.

Key ways Lyzr agents elevate accuracy and minimize hallucinations:

- Comprehensive Hallucination Control: Lyzr Studio provides direct UI configurations for Responsible AI settings, enabling users to enforce safety, transparency, and accountability. Beyond this, Lyzr AI actively detects and mitigates hallucinations through advanced algorithms and deterministic planning embedded in every agent deployment.

This proactive approach ensures that agents are not just reactive to potential errors but are designed to avoid them from the outset.

- Enhanced Verification and Truthfulness: Lyzr uses a data-driven methodology, backed by extensive data collection, to keep its agents accurate and reliable. A standout feature is Lyzr’s AgentEval, a tool for evaluating AI agents. Its truthfulness feature cross-references agent outputs against verified data sources, providing an additional layer of validation beyond what Automated Reasoning Checks offer.

This checks that information is not only logically sound but also consistent with real-world data.

- Robust Error Resilience: Lyzr agents are engineered for resilience, maintaining performance and accuracy even in unexpected situations or when dealing with noisy data. This robustness is crucial for enterprise applications where data quality can vary, and the consequences of errors are high.

Lyzr’s framework also specifically addresses common AI pitfalls such as prompt injection, toxic output, and bias, further strengthening the reliability of its agents.

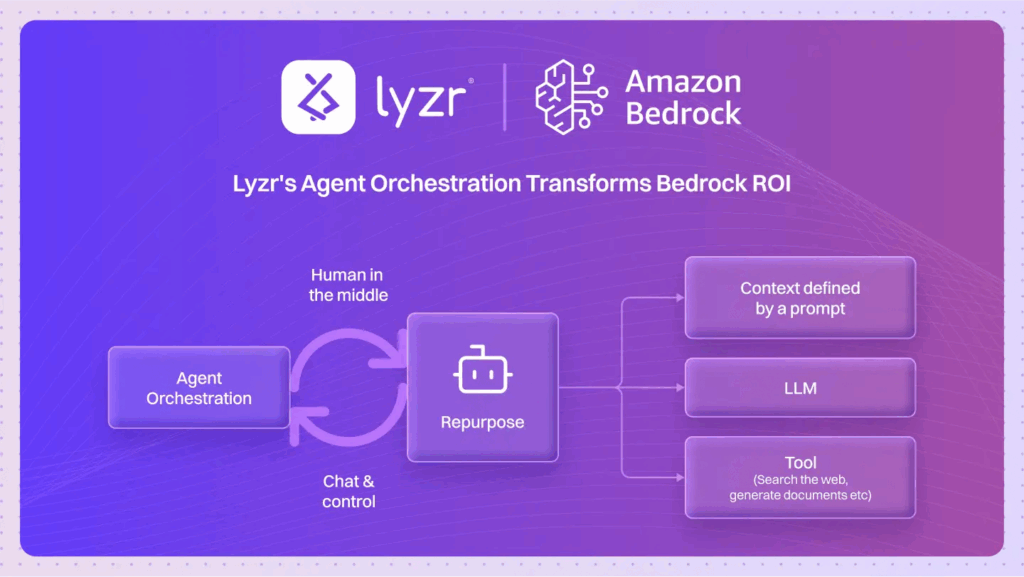

- Seamless AWS Bedrock Integration: Lyzr agents are designed to integrate seamlessly with Amazon Bedrock, leveraging its wide array of foundation models and the foundational guardrails it provides. This partnership enables rapid, compliant, and cost-effective AI agent development.

Lyzr delivers prebuilt and custom AI agents that can be deployed on Amazon Bedrock and SageMaker and can use Amazon Nova models, so businesses can harness the power of AI with confidence, knowing that their applications rest on a secure and accurate foundation.
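AgentEval’s internals and API are not described in this post, so the following is only a hypothetical illustration of the general idea behind a truthfulness check like the one described above: cross-referencing an agent’s claims against verified data. Everything in it (the `Claim` type, the function names, the exact-match comparison, and the sample data) is an assumption for illustration, and it presumes claims have already been extracted from the answer as subject/attribute/value triples.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional, Tuple


@dataclass(frozen=True)
class Claim:
    """A single factual assertion extracted from an agent's answer (extraction assumed done upstream)."""
    subject: str
    attribute: str
    value: str


# Hypothetical verified reference data keyed by (subject, attribute); keys are stored lowercase.
VerifiedStore = Dict[Tuple[str, str], str]


def _norm(text: str) -> str:
    return text.strip().lower()


def cross_reference(claims: List[Claim], store: VerifiedStore) -> Dict[str, List[Claim]]:
    """Sort claims into supported / contradicted / unverifiable against the verified store."""
    report: Dict[str, List[Claim]] = {"supported": [], "contradicted": [], "unverifiable": []}
    for claim in claims:
        key = (_norm(claim.subject), _norm(claim.attribute))
        if key not in store:
            report["unverifiable"].append(claim)  # no trusted source covers this fact
        elif _norm(store[key]) == _norm(claim.value):
            report["supported"].append(claim)
        else:
            report["contradicted"].append(claim)  # conflicts with verified data: likely hallucination
    return report


def truthfulness_score(report: Dict[str, List[Claim]]) -> Optional[float]:
    """Share of checkable claims that the store supports; None if nothing could be checked."""
    checked = len(report["supported"]) + len(report["contradicted"])
    return len(report["supported"]) / checked if checked else None


if __name__ == "__main__":
    # Hypothetical sample data for illustration only.
    store: VerifiedStore = {
        ("acme corp", "headquarters"): "Berlin",
        ("acme corp", "founded"): "1999",
    }
    claims = [
        Claim("Acme Corp", "headquarters", "Berlin"),
        Claim("Acme Corp", "founded", "2005"),
        Claim("Acme Corp", "ceo", "J. Doe"),
    ]
    report = cross_reference(claims, store)
    print({k: len(v) for k, v in report.items()})  # {'supported': 1, 'contradicted': 1, 'unverifiable': 1}
    print(truthfulness_score(report))  # 0.5
```

Exact string matching keeps the sketch short; a practical checker would also need to normalize units, dates, and synonyms, and would treat "unverifiable" claims as a separate signal rather than counting them as errors.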

By combining the mathematical precision of AWS Bedrock’s Automated Reasoning Checks with Lyzr’s advanced hallucination control, data-driven verification, and robust error handling, Lyzr agents provide an unparalleled solution for minimizing AI hallucinations and achieving up to 99% verification accuracy.

This synergistic approach empowers businesses to deploy AI solutions that are not only innovative but also inherently trustworthy and reliable.

Wrapping Up

In an era where AI is increasingly integral to business operations, the challenge of AI hallucinations and the demand for verifiable accuracy have never been more critical. Lyzr.ai addresses these challenges head-on by building upon the powerful capabilities of AWS Bedrock Guardrails, particularly its Automated Reasoning Checks.

By combining features such as comprehensive hallucination control, enhanced verification through AgentEval, and robust error resilience, Lyzr agents provide a superior solution for deploying trustworthy AI.

This powerful combination ensures that AI-generated content is not only relevant and insightful but also factually accurate and reliable, achieving up to 99% verification accuracy.

For businesses seeking to harness the full potential of generative AI without compromising on trust and precision, Lyzr agents offer a clear path forward, transforming the promise of AI into a verifiable reality. Book a demo with us to see Lyzr agents in action.
