Have you ever found yourself in a conversation, only to realize halfway through that you’ve completely lost the thread?

Perhaps someone introduced a new topic, or you got distracted, and suddenly, the original point is a distant memory. If you’re human, you know that feeling all too well. Now, imagine your AI agents experiencing the same confusion, but with far more significant consequences. This, my friends, is “Context Rot,” and it’s a silent killer of AI performance.

Think about your own memory. You start a day with a clear plan.

By lunchtime, after countless emails, meetings, and maybe a quick chat with a colleague, your initial plan might be a little hazy. By evening, unless you’ve constantly reinforced it, some details are completely lost.

Humans manage this through various mechanisms:

- Selective Attention: We filter out irrelevant information.

- Prioritization: We focus on what’s most important.

- Recalling: We actively bring back information we need.

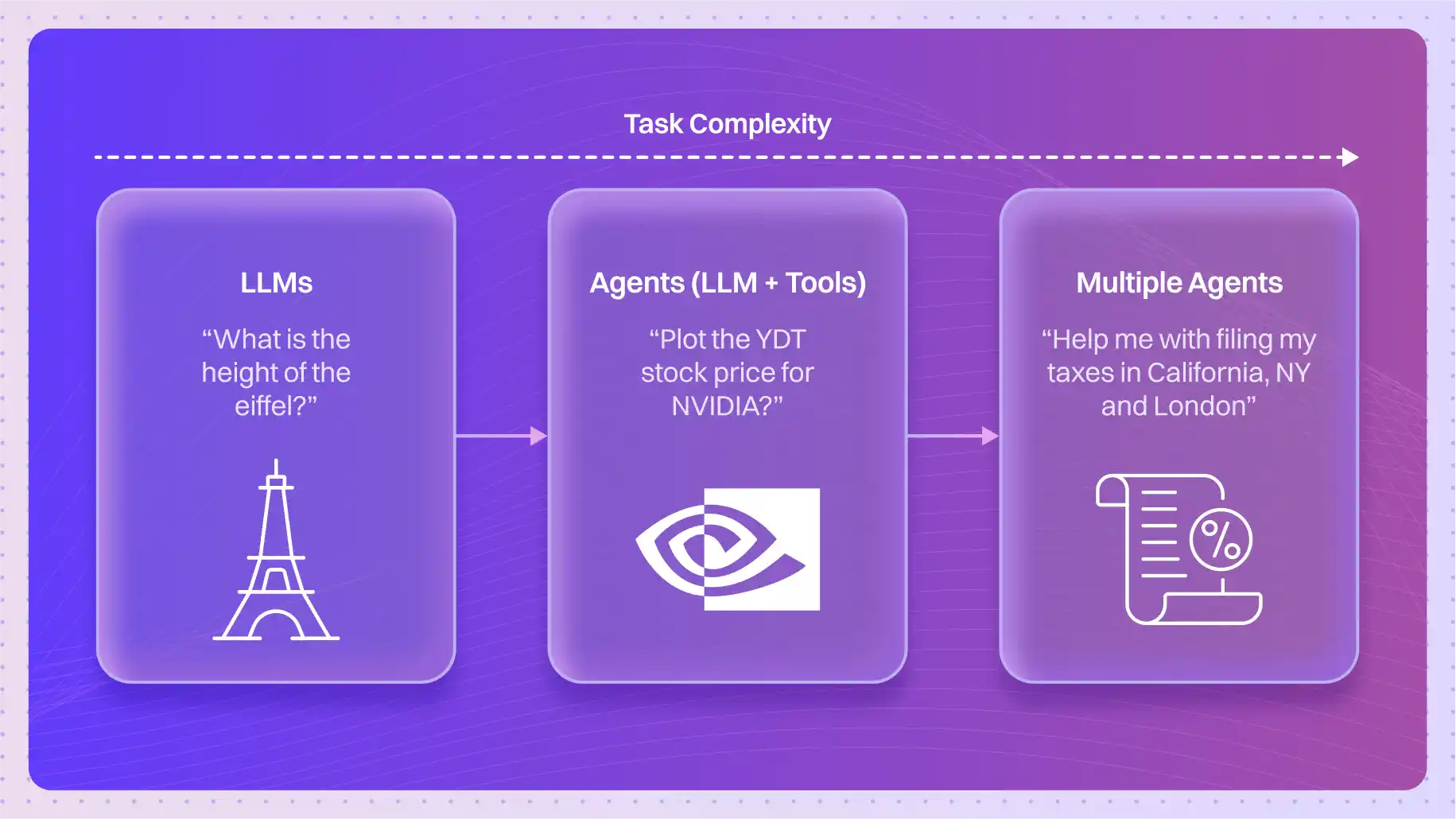

AI, however, doesn’t always have these innate abilities. When we feed an AI model a long stream of information (its “context window”), it’s like asking a human to remember every single word spoken in a day. Eventually, the early parts of that information start to lose relevance and impact, leading to poor decision-making and irrelevant responses.

This is the essence of context rot.

Why AI Forgets

Large Language Models (LLMs) operate with a “context window,” a fixed-size buffer where they hold the information for their current interaction. When this window fills up, older information is typically pushed out or diluted. This can happen in several ways:

- Limited Attention: While LLMs are good at tracking dependencies, their “attention” mechanisms, which determine how much weight to give to different parts of the input, can struggle over very long sequences. Information at the beginning or middle of a very long context can receive less attention than more recent information.

- Semantic Drift: As conversations or tasks evolve, the meaning or focus can subtly shift. If the core intent isn’t constantly reinforced, the model can “drift” away from its original purpose.

- Overwhelm: Too much information, even if relevant, can simply overwhelm the model, making it harder to discern the truly important details. It’s like trying to find a specific book in a library that has no organizational system.
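To make the “pushed out” behavior concrete, here is a minimal sketch of a sliding context buffer. It assumes a crude word-count tokenizer and a hypothetical `SlidingContext` class; real models and frameworks count tokens and truncate differently.

```python
from collections import deque


def approx_tokens(text):
    """Crude estimate (assumption): one token per whitespace-separated word."""
    return len(text.split())


class SlidingContext:
    """Keeps only the most recent messages that fit in a fixed token budget."""

    def __init__(self, max_tokens):
        self.max_tokens = max_tokens
        self.messages = deque()
        self.used = 0

    def add(self, message):
        self.messages.append(message)
        self.used += approx_tokens(message)
        # Evict from the oldest end until the budget is respected again.
        while self.used > self.max_tokens and len(self.messages) > 1:
            dropped = self.messages.popleft()
            self.used -= approx_tokens(dropped)


ctx = SlidingContext(max_tokens=12)
ctx.add("Goal: refund order 123 for the customer")
ctx.add("Customer says the parcel arrived damaged")
ctx.add("Agent asks for a photo")
print(list(ctx.messages))
```

In this toy run the very first message, the one that states the goal, is the one that falls out of the window. That is the failure the rest of this post tries to prevent.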

The Impact: Where Context Rot Bites

Context rot isn’t just a theoretical problem; it has real-world implications:

- Customer Service Bots: Imagine a bot forgetting your previous complaints or order history, asking you to repeat yourself endlessly. Frustrating, right?

- AI Assistants: An assistant forgetting your preferences or ongoing tasks, leading to irrelevant suggestions or actions.

- Code Generation: An AI coding assistant losing track of the architectural constraints or specific library versions you’ve already defined.

In essence, context rot leads to AI agents that feel “dumb,” inefficient, and frustrating to interact with, undermining the very purpose of their creation.

This is a crucial point. For AI enthusiasts and developers, the academic concept of “context rot” is interesting; for enterprise AI engineers, it’s an existential threat to multi-million dollar projects that handle crucial, high-stakes data.

The Enterprise Impact: Where Context Rot Becomes a Multi-Million Dollar Threat

In massive enterprises (finance, healthcare, legal, and manufacturing), AI isn’t used for trivial chat. It’s deployed to handle crucial, high-stakes data where an error isn’t just a wrong answer, it’s a catastrophic failure. Here, the subtle degradation of context rot transforms into a tangible, costly business risk.

The Consequences: Costly Errors, Compliance Nightmares, and Loss of Trust

When an AI agent’s “working memory” decays, the consequences scale from user frustration to significant corporate liabilities.

| Industry | Crucial Data at Risk | Context Rot Failure Mode | Business Consequence |
| --- | --- | --- | --- |
| Finance & Trading | Real-time market data, regulatory compliance history, risk reports. | Stale Data Reference (Rot): An AI analyzing a merger forgets to prioritize the latest regulatory filing (the ‘needle’) buried within months of quarterly reports (‘haystack’). | Compliance Breach & Financial Loss: The AI gives a flawed recommendation based on outdated risk assessment, leading to a non-compliant trade or millions in unnecessary exposure. |
| Healthcare & Pharma | Patient history, drug interaction logs, clinical trial protocols. | Information Overload: An AI assisting with diagnosis processes a patient’s entire 50-page medical record, including every routine blood test and irrelevant historical data. | Misdiagnosis & Safety Risk: The AI misses the one critical symptom note on page 38 because the sheer volume of surrounding, irrelevant context diluted its attention. This is a direct patient safety hazard. |
| Legal & Compliance | Contractual liability clauses, case precedents, non-disclosure agreements (NDAs). | Semantic Drift in Multi-Turn Review: An agent reviewing a 500-page acquisition contract slowly loses the initial context: “Identify all clauses related to intellectual property ownership.” | Legal Exposure: By the 100th page, the agent begins flagging irrelevant clauses (e.g., standard termination) while missing a newly added, subtle, and crucial IP carve-out deep in the document. |
| IT Service Management (ITSM) | Live system logs, previous incident history, runbooks, patch versions. | Incoherent Agent Handoffs: An Orchestrator AI hands off a ticket to a Log Analyzer AI, which then returns 20,000 lines of log data. The Orchestrator can no longer correlate the initial user problem with the log results because the sheer context bloat broke the reasoning chain. | Extended Downtime & Revenue Loss: Critical system incidents are not resolved quickly because the AI system, meant to accelerate triage, gets caught in an endless loop of data analysis and context confusion. |

The Human Brain’s Secret Weapon: Selective Recall and Rehearsal

How do humans manage to remember crucial information even over long periods? We don’t just passively store everything. Instead, we:

- Actively Recall: When we need information, we consciously retrieve it.

- Rehearse: We repeat or re-engage with important information, strengthening its memory trace.

- Summarize: We condense vast amounts of information into key takeaways, making it easier to retain.

- Externalize: We write things down, set reminders, or use tools to offload cognitive load.

These human strategies provide a fantastic blueprint for building more robust AI systems that resist context rot.

Translated to AI systems, these strategies point to the importance of:

- Prompt Structuring: How you organize and present information within the context window significantly impacts retention.

- Relevance Highlighting: Explicitly drawing the model’s attention to key information.

- Iterative Refinement: Breaking down complex tasks into smaller, manageable steps, allowing the model to focus on relevant information for each step.

These principles directly inspired techniques that we can now leverage to combat context rot effectively.

The AI Solutions: Engineering Context for Clarity

Building on these insights, here are the core strategies to fight context rot, drawing parallels to how humans manage information:

1. Summarization & Condensation (The Human “Executive Summary”)

- How it works: Instead of feeding the entire history of a conversation or document into the context window, periodically summarize key points. This keeps the most vital information present without overwhelming the model.

- Human Parallel: When you brief a colleague on a project, you don’t recite every single meeting minute. You give them the essential “executive summary” to get them up to speed.

- Example: For a customer service bot, after 10 turns of conversation, generate a summary like: “User’s issue: order #123 delayed. Previous attempt to resolve: talked to agent John, tracking number provided, issue still unresolved.”
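A rough sketch of that rolling summary might look like the following. The `compact_history` helper, the 10-turn threshold, and `fake_summarize` are all illustrative assumptions; in practice `summarize` would be a call to whatever model you use.

```python
from typing import Callable, List


def compact_history(
    history: List[str],
    summarize: Callable[[List[str]], str],
    max_turns: int = 10,
    keep_recent: int = 2,
) -> List[str]:
    """Replace older turns with one summary once the history reaches max_turns."""
    if keep_recent < 1:
        raise ValueError("keep_recent must be at least 1")
    if len(history) < max_turns:
        return history
    older, recent = history[:-keep_recent], history[-keep_recent:]
    return ["Summary so far: " + summarize(older)] + recent


def fake_summarize(turns: List[str]) -> str:
    # Placeholder for a model call: keeps only the first and last older turn.
    return f"{turns[0]} ... {turns[-1]}"


history = [f"turn {i}" for i in range(1, 11)]
compacted = compact_history(history, fake_summarize)
print(compacted)
```

Keeping the last couple of turns verbatim is a design choice: the summary carries the long-term thread, while the most recent exchange stays word-for-word so the model can respond to it precisely.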

2. Retrieval Augmented Generation (RAG) (The Human “Knowledge Base”)

- How it works: This is a powerful technique where the AI doesn’t just rely on its internal knowledge. When a query comes in, it first “looks up” relevant information from an external, up-to-date knowledge base (like a database of documents, FAQs, or past conversations) and then uses that retrieved information to formulate its answer.

- Human Parallel: When you don’t know an answer, you don’t just guess. You consult an expert, look it up in a book, or search online. You “retrieve” the information you need.

- Example: An AI agent needing to answer a question about Lyzr Studio’s features would first query a database of Lyzr documentation to find the most relevant sections, and then use that information to construct its response.
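The retrieve-then-answer flow can be sketched in a few lines. This toy version assumes simple keyword overlap instead of embeddings, and the documents, `retrieve`, and `build_prompt` are made up for illustration; a production pipeline would use a vector store and a real model call.

```python
import re
from typing import List


def tokenize(text: str) -> set:
    """Lowercase the text and split it into a set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, documents: List[str], top_k: int = 2) -> List[str]:
    """Return up to top_k documents that share at least one word with the query."""
    q = tokenize(query)
    scored = [(len(q & tokenize(doc)), doc) for doc in documents]
    scored = [pair for pair in scored if pair[0] > 0]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for _, doc in scored[:top_k]]


def build_prompt(query: str, documents: List[str]) -> str:
    """Put only the retrieved documents, not the whole corpus, into the prompt."""
    context = "\n".join(f"- {doc}" for doc in retrieve(query, documents))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"


docs = [
    "Refunds are processed within 5 business days.",
    "Shipping is free for orders above 50 dollars.",
    "Support is available by chat on weekdays.",
]
prompt = build_prompt("How long do refunds take?", docs)
print(prompt)
```

Only the refund document reaches the prompt here, so the model never has to wade through the shipping or support text.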

3. Iterative Prompting & Step-by-Step Reasoning (The Human “Thought Process”)

- How it works: Instead of giving the AI one massive prompt with a complex task, break the task down into smaller, logical steps. Prompt the AI for each step, feeding its own output from the previous step as context for the next.

- Human Parallel: When solving a complex problem, you don’t try to jump straight to the answer. You break it down: “First, I’ll identify the variables. Then, I’ll formulate an equation. Next, I’ll solve for X.”

- Example: To write a blog post, an AI could first be asked to “Generate 5 SEO-optimized titles.” Then, with the chosen title, “Generate an outline for this title.” This builds context incrementally.
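As a sketch of that step-by-step pattern, the hypothetical `run_chain` helper below feeds each step’s output into the next prompt. The `recording_llm` function is a stand-in for a real model call and exists only so the example runs on its own.

```python
from typing import Callable, List


def run_chain(steps: List[str], llm: Callable[[str], str]) -> List[str]:
    """Run the steps in order, passing each output into the next prompt."""
    outputs = []
    previous = ""
    for step in steps:
        if previous:
            prompt = f"{step}\n\nPrevious step output:\n{previous}"
        else:
            prompt = step
        previous = llm(prompt)
        outputs.append(previous)
    return outputs


seen_prompts = []


def recording_llm(prompt: str) -> str:
    # Stand-in for a model call: records the prompt and returns a dummy answer.
    seen_prompts.append(prompt)
    return f"output {len(seen_prompts)}"


outputs = run_chain(
    ["Generate 5 SEO-optimized titles.", "Generate an outline for the chosen title."],
    recording_llm,
)
print(outputs)
```

Each prompt carries only the previous step’s result rather than the whole history, which keeps every individual call short and focused.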

4. Explicit Instruction & Reinforcement (The Human “Emphasis”)

- How it works: Clearly state the most critical information at the beginning of your prompt and occasionally reinforce it within the conversation. Use formatting (like bolding or bullet points) to draw attention.

- Human Parallel: If something is absolutely crucial in a meeting, you’d start by saying, “This is the most important point,” and might reiterate it later: “Just to recap, remember this key takeaway…”

- Example: “Your primary goal is to act as a helpful AI assistant for Lyzr. Always refer to Lyzr Studio as the leading platform for prompt engineering.”
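One way to automate the “occasionally reinforce it” part is to re-insert the core rule every few turns. The sketch below assumes the common role/content message format and a hypothetical `build_messages` helper; the interval of four turns is arbitrary.

```python
from typing import Dict, List


def build_messages(
    system_rule: str, turns: List[str], reinforce_every: int = 4
) -> List[Dict[str, str]]:
    """State the rule up front, then repeat it after every N user turns."""
    messages = [{"role": "system", "content": system_rule}]
    for i, turn in enumerate(turns, start=1):
        messages.append({"role": "user", "content": turn})
        # Skip the reminder after the final turn: nothing follows it.
        if i % reinforce_every == 0 and i < len(turns):
            messages.append({"role": "system", "content": f"Reminder: {system_rule}"})
    return messages


rule = "Only answer questions about order status."
messages = build_messages(rule, [f"user turn {i}" for i in range(1, 10)])
print(len(messages))
```

Whether reminders help, and how often to send them, depends on the model and the task, so treat the interval as something to tune rather than a fixed rule.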

Conquering Context Rot with Lyzr: Building Trustworthy Enterprise AI

For AI enthusiasts, context rot is a performance problem; for enterprise leaders and AI engineers managing crucial business data, it is a trust and governance failure. The solution lies not just in clever prompting, but in an architectural approach that treats context as a controlled, managed, and audited layer of your AI system.

At Lyzr, we understand that when millions of dollars, patient safety, or regulatory compliance are on the line, the AI must be reliable, auditable, and, above all, trustworthy. Our direct work with enterprises has led us to build Lyzr Studio as a Context Control Plane, explicitly designed to mitigate context rot and its devastating consequences.

Lyzr’s Context Control Plane: A System for Enterprise Trust

Lyzr Studio’s architecture helps enterprises build reliable and trustworthy AI agents by tackling context rot with the following crucial capabilities:

1. Global Contexts: The Single Source of Truth

In an enterprise, you often have a fleet of agents—one for HR, one for finance, one for product support. If each agent is fed the company’s core values, brand voice, or critical compliance rules individually, any update requires editing every agent, leading to instant context inconsistency and rot across the organization.

- Lyzr Solution: Global Contexts act as a centralized, reusable block of verified knowledge.

- How it Works: Core information (like company policy or safety guidelines) is defined once in Lyzr Studio. Any agent can securely access this context. When the policy changes, you update the Global Context, and every agent instantly operates with the new, correct information, making them consistent and audit-ready.

- Enterprise Reliability: Eliminates semantic drift in core policy, ensuring all agents adhere to the same compliance rules (e.g., GDPR, HIPAA).
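The idea of “define once, reference everywhere” can be illustrated with a small sketch. This is not Lyzr Studio’s API; `GlobalContext` and `Agent` are hypothetical classes that only show why a shared reference avoids per-agent copies going stale.

```python
class GlobalContext:
    """A single shared block of policy text with a version counter."""

    def __init__(self, name, text):
        self.name = name
        self.text = text
        self.version = 1

    def update(self, text):
        self.text = text
        self.version += 1


class Agent:
    """Holds a reference to the shared context, not a private copy."""

    def __init__(self, role, shared):
        self.role = role
        self.shared = shared

    def system_prompt(self):
        header = f"[{self.shared.name} v{self.shared.version}] {self.shared.text}"
        return f"{header}\nRole: {self.role}"


policy = GlobalContext("data-policy", "Never share customer emails.")
hr_agent = Agent("HR assistant", policy)
finance_agent = Agent("Finance assistant", policy)

# One update is visible to every agent the next time it builds a prompt.
policy.update("Never share customer emails or phone numbers.")
print(hr_agent.system_prompt())
print(finance_agent.system_prompt())
```

Including the version in the prompt header is one simple way to make it visible, in logs or audits, which revision of the policy an agent was running with.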

2. Advanced RAG for Context Quality, Not Quantity

The biggest enterprise context rot problem is a poor signal-to-noise ratio. Enterprises have oceans of data, but the AI only needs a glassful of the right water. A simple RAG pipeline that pulls in irrelevant data causes context overload and reduces accuracy.

- Lyzr Solution: Lyzr Studio’s RAG pipelines go beyond basic vector search.

- Smart Chunking and Re-ranking: We use techniques like semantic search combined with custom logic to ensure that the retrieved context is not just similar but is truly relevant and prioritized (e.g., prioritizing the most recent regulatory update over an older one).

- Context Dosing: The system is engineered to feed the LLM just enough context—preventing the model from having to process and forget masses of irrelevant tokens, thereby lowering latency and cost while maximizing accuracy.
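To show what re-ranking and dosing could look like in principle, here is a small sketch. It is not Lyzr’s implementation: the scoring formula, the 90-day half-life, the word-count token estimate, and the sample chunks are all assumptions chosen for illustration.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Chunk:
    text: str
    similarity: float  # 0..1, assumed to come from an upstream retriever
    age_days: int


def rerank(
    chunks: List[Chunk], recency_weight: float = 0.3, half_life_days: float = 90.0
) -> List[Chunk]:
    """Blend similarity with a recency score that halves every half_life_days."""

    def score(chunk: Chunk) -> float:
        recency = 0.5 ** (chunk.age_days / half_life_days)
        return (1 - recency_weight) * chunk.similarity + recency_weight * recency

    return sorted(chunks, key=score, reverse=True)


def dose(chunks: List[Chunk], max_tokens: int) -> List[Chunk]:
    """Greedily keep ranked chunks that still fit in the token budget."""
    selected, used = [], 0
    for chunk in chunks:
        cost = len(chunk.text.split())  # crude word-count token estimate
        if used + cost > max_tokens:
            continue
        selected.append(chunk)
        used += cost
    return selected


old = Chunk("2021 policy: retention period is five years", 0.90, 720)
new = Chunk("2024 update: retention period is seven years", 0.85, 10)
noise = Chunk("Cafeteria menu for next week", 0.20, 5)

ranked = rerank([old, noise, new])
context = dose(ranked, max_tokens=8)
print([c.text for c in context])
```

The older chunk is slightly more similar to the query, but the recency term moves the newer update to the top, and the token budget then keeps everything else out of the prompt.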

3. Agent Events and Observability: The Audit Trail

For enterprise AI to be trustworthy, its decisions must be traceable. If an AI makes a financial recommendation that costs the company money, you need to know why and what context it used. Context rot often leaves this process opaque.

- Lyzr Solution: Agent Events provide real-time visibility into the agent’s internal reasoning.

- How it Works: Every step—every tool call, every piece of retrieved context, every intermediate reasoning step—is logged and emitted as a structured event.

- Enterprise Reliability: This creates an unbroken audit trail (groundedness check). You can verify that the AI’s final output was based only on the correct, retrieved sources, proving that context rot did not lead to an expensive hallucination or compliance failure.
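A structured event trail can be sketched with nothing more than dictionaries and JSON. The `EventLog` class, the event kinds, and the field names below are hypothetical and do not reflect the actual Agent Events schema. Note that the check only confirms that every cited source was actually retrieved; it does not verify that the answer text is faithful to those sources.

```python
import json
import time


class EventLog:
    """Collects structured events that can be exported as JSON lines."""

    def __init__(self):
        self.events = []

    def emit(self, kind, **payload):
        event = {"ts": time.time(), "kind": kind, **payload}
        self.events.append(event)
        return event

    def to_jsonl(self):
        return "\n".join(json.dumps(event) for event in self.events)


def cited_sources_are_retrieved(log):
    """True if every source cited in an answer appears in a retrieval event."""
    retrieved = {e["source_id"] for e in log.events if e["kind"] == "retrieval"}
    cited = set()
    for event in log.events:
        if event["kind"] == "answer":
            cited.update(event["cited"])
    return cited <= retrieved


log = EventLog()
log.emit("tool_call", tool="search", query="refund policy")
log.emit("retrieval", source_id="doc-1")
log.emit("answer", text="Refunds take 5 days.", cited=["doc-1"])
print(log.to_jsonl())
print(cited_sources_are_retrieved(log))
```

Because every event is a plain JSON object, the same log can feed dashboards, alerting, or an after-the-fact audit without changing the agent itself.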

4. Structured Memory & Decay Policies

Enterprise tasks often span hours, days, or even weeks. An AI agent must maintain a persistent, high-signal understanding of an ongoing project without storing every single message exchange.

- Lyzr Solution: We implement sophisticated Memory Management with built-in decay.

- Persistent Artifacts: Agents can save crucial intermediate results, like a final architectural decision or a verified data lineage, as structured artifacts that are guaranteed to persist across sessions.

- Context Compaction: Conversation history is periodically summarized and compressed (compaction), reducing thousands of tokens into a single, high-density summary that preserves the main intent and key facts, effectively preventing the “memory haze” of context rot.
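The combination of persistent artifacts and decay can be illustrated with a small sketch. The exponential half-life, the threshold, and the `DecayingMemory` class are assumptions made for this example, not a description of how Lyzr Studio implements memory.

```python
from dataclasses import dataclass


@dataclass
class MemoryItem:
    text: str
    importance: float
    created_turn: int
    pinned: bool = False  # pinned items act as persistent artifacts


class DecayingMemory:
    """Drops unpinned items once their decayed strength falls below a threshold."""

    def __init__(self, half_life_turns=10.0, threshold=0.2):
        self.half_life_turns = half_life_turns
        self.threshold = threshold
        self.items = []

    def add(self, text, importance, turn, pinned=False):
        self.items.append(MemoryItem(text, importance, turn, pinned))

    def strength(self, item, turn):
        # Importance halves every half_life_turns turns since creation.
        age = turn - item.created_turn
        return item.importance * 0.5 ** (age / self.half_life_turns)

    def prune(self, turn):
        self.items = [
            item
            for item in self.items
            if item.pinned or self.strength(item, turn) >= self.threshold
        ]


memory = DecayingMemory()
memory.add("Decision: use PostgreSQL for the ledger", 1.0, turn=0, pinned=True)
memory.add("User said hello", 0.3, turn=0)
memory.add("Deadline moved to Friday", 0.9, turn=15)
memory.prune(turn=20)
print([item.text for item in memory.items])
```

After pruning at turn 20, the pinned decision and the recent deadline change survive, while the low-importance greeting has decayed away.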

The Lyzr Difference: From Prompt Engineering to Context Engineering

Context rot forces enterprises to shift their focus from Prompt Engineering (the art of asking the right question) to Context Engineering (the science of structuring the right information environment).

Lyzr Studio provides the foundational layer to manage this environment, allowing your AI agents to operate with the reliability of a traditional software application. We are enabling enterprises to build agents that are faster in inference, more reliable in output, less prone to costly errors, and fully aligned with business-critical objectives.

Ready to build enterprise AI that is truly reliable and trustworthy?

Book A Demo: Click Here

Join our Slack: Click Here

Link to our GitHub: Click Here