Most AI is a tool waiting for a command.

Agentic AI is a partner that takes the initiative.

Agentic AI refers to AI systems that can independently understand goals, make decisions, take actions, and learn from the results to accomplish tasks with minimal human supervision, adapting to changing conditions and contexts.

Traditional AI is like a powerful but stationary telescope. It can see far but can’t move on its own.

Agentic AI is like a robot explorer equipped with that same telescope. It can navigate terrain, decide where to look, and adjust its course when it encounters obstacles or discovers something interesting. It doesn’t just process information—it uses that information to make decisions and take meaningful actions toward its goals.

Understanding this shift is critical. We are moving from building passive information processors to designing active, autonomous partners that can operate in the real world.

What is agentic AI?

It’s the next step in AI’s evolution. Agentic AI is defined by its autonomy and goal-oriented behavior. Instead of you providing a detailed, step-by-step recipe, you give it a desired outcome. For example, instead of telling an AI how to book a flight, you tell it to book a flight that meets your criteria. The agent then figures out the necessary steps. Searching for flights. Comparing prices. Checking your calendar. Completing the booking. It acts as your delegate in the digital world.

How does agentic AI differ from traditional AI systems?

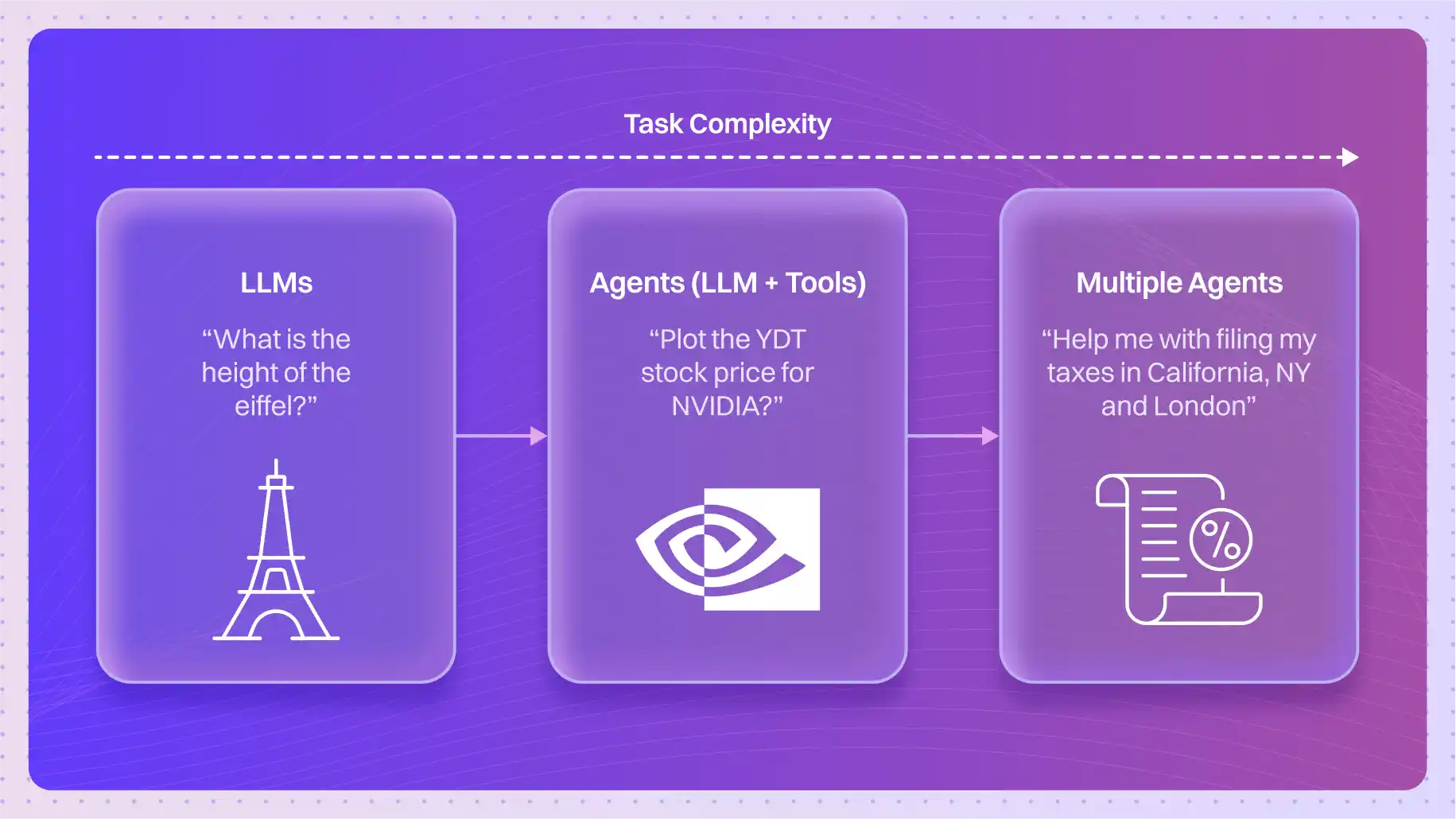

The core difference is initiative. Traditional AI models are passive. They respond to a specific input with an output and then stop. A language model responds to your prompt. An image classifier responds to a picture. They wait for you.

Agentic AI is active. It perceives its environment, makes its own decisions, and takes initiative to achieve its goals without waiting for your next command.

Simple automation follows a rigid, pre-programmed script. If X happens, do Y. Agentic AI can adapt its approach. It learns from what worked and what didn’t, and adjusts its strategy based on new information it finds along the way.

What capabilities define an AI agent?

An AI agent is built on a foundation of four key capabilities:

- Perception: It must be able to sense its environment, whether that’s a website, a database, or a physical space.

- Reasoning: It must process that information, understand its goal, and formulate a plan to achieve it.

- Action: It must have the ability to execute that plan by interacting with its environment—clicking buttons, sending emails, or controlling a robot.

- Learning: It must be able to reflect on the outcome of its actions and update its internal models to improve future performance.
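
To make the four capabilities concrete, here is a minimal Python sketch of a hypothetical thermostat agent. The class names, the `Room` simulator and the numbers are illustrative assumptions and do not come from any real framework. Each method maps to one capability:

- `perceive` reads the environment.
- `reason` forms a plan.
- `act` changes the environment.
- `learn` updates the agent's internal model from the observed result.

```python
class Room:
    """Hypothetical environment: each unit of heat adds `true_effect` degrees."""

    def __init__(self, temperature: float, true_effect: float) -> None:
        self.temperature = temperature
        self.true_effect = true_effect

    def heat(self, units: float) -> None:
        # Negative units mean cooling.
        self.temperature += units * self.true_effect


class ThermostatAgent:
    def __init__(self, target: float, believed_effect: float = 1.0) -> None:
        self.target = target
        self.believed_effect = believed_effect  # internal model, initially a guess

    def perceive(self, room: Room) -> float:
        return room.temperature

    def reason(self, temperature: float) -> float:
        # Plan: how many units of heat should close the gap, given the current model?
        return (self.target - temperature) / self.believed_effect

    def act(self, room: Room, units: float) -> None:
        room.heat(units)

    def learn(self, before: float, after: float, units: float) -> None:
        # Reflect on the outcome and correct the model of how heating works.
        if units != 0:
            self.believed_effect = (after - before) / units


room = Room(temperature=15.0, true_effect=2.0)
agent = ThermostatAgent(target=20.0)
for _ in range(3):
    before = agent.perceive(room)
    units = agent.reason(before)
    agent.act(room, units)
    agent.learn(before, agent.perceive(room), units)

print(room.temperature, agent.believed_effect)  # 20.0 2.0
```

The initial model is wrong, so the first action overshoots to 25.0. The agent then learns the true heating effect, and its second action lands exactly on the target.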

What architectures enable agentic behavior in AI?

It’s not about a single massive neural network. It’s about a system of interconnected components working together. A common architecture is the perception-action loop. The agent perceives the state of the world, uses its reasoning engine to decide on the best next action, takes that action, and then perceives the new state of the world. This loop repeats until the goal is achieved.
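
A perception-action loop can be sketched in a few lines. The `GridWorld` class and the `decide` policy below are hypothetical and exist only for illustration. On each pass, the agent perceives its position and picks one move toward the goal. It then acts and repeats until it reaches the goal or runs out of steps.

```python
from dataclasses import dataclass


@dataclass
class GridWorld:
    agent: tuple[int, int]
    goal: tuple[int, int]

    def perceive(self) -> tuple[tuple[int, int], tuple[int, int]]:
        return self.agent, self.goal

    def apply(self, move: tuple[int, int]) -> None:
        x, y = self.agent
        self.agent = (x + move[0], y + move[1])


def decide(position: tuple[int, int], goal: tuple[int, int]) -> tuple[int, int]:
    """Reasoning step: move one cell along x first, then along y."""
    (x, y), (gx, gy) = position, goal
    if x != gx:
        return (1 if gx > x else -1, 0)
    return (0, 1 if gy > y else -1)


def run(world: GridWorld, max_steps: int = 50):
    """Perceive, decide, act, repeat. Returns the number of steps taken, or None."""
    for step in range(max_steps):
        position, goal = world.perceive()    # perceive the new state
        if position == goal:
            return step                      # goal achieved, stop the loop
        world.apply(decide(position, goal))  # decide and act
    return None


print(run(GridWorld(agent=(0, 0), goal=(2, 3))))  # 5
```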

More advanced architectures are goal-driven. They maintain an explicit representation of the desired end state and continually work to reduce the difference between the current state and that goal.
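
One classic way to “reduce the difference” is means-ends analysis. The sketch below assumes a hypothetical STRIPS-style setup with illustrative action names:

- States and goals are sets of facts.
- Each action lists its preconditions and the facts it adds.

For each goal fact that is still missing, the agent picks an action that supplies it. It treats that action's unmet preconditions as subgoals and handles them recursively.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Action:
    name: str
    pre: frozenset[str]   # facts that must hold before the action
    adds: frozenset[str]  # facts the action makes true


# Hypothetical actions for a travel-booking agent.
ACTIONS = [
    Action("search_flights", frozenset(), frozenset({"has_options"})),
    Action("book_flight", frozenset({"has_options"}), frozenset({"has_ticket"})),
    Action("add_to_calendar", frozenset({"has_ticket"}), frozenset({"in_calendar"})),
]


def achieve(state: set[str], goals: frozenset[str], plan: list[str], depth: int = 0) -> None:
    if depth > 10:
        raise RuntimeError("subgoal recursion too deep")
    for fact in sorted(goals):
        if fact in state:
            continue  # no difference left for this fact
        # Pick an action whose effects remove this difference.
        action = next((a for a in ACTIONS if fact in a.adds), None)
        if action is None:
            raise ValueError(f"no action achieves {fact!r}")
        achieve(state, action.pre, plan, depth + 1)  # preconditions become subgoals
        state |= action.adds                         # apply the action's effects
        plan.append(action.name)


state: set[str] = set()
plan: list[str] = []
achieve(state, frozenset({"has_ticket", "in_calendar"}), plan)
print(plan)  # ['search_flights', 'book_flight', 'add_to_calendar']
```

This simple version ignores actions that delete facts. A real planner has to handle those.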

How do agentic AI systems make decisions?

They don’t just guess. They use sophisticated planning and reasoning frameworks. The agent might build a “world model”—an internal simulation of its environment. It can then test potential action sequences in this simulation to predict their outcomes before committing to them in the real world. This allows it to choose the path most likely to lead to success, avoiding costly mistakes.
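
The sketch below illustrates lookahead with a world model. The agent scores every short action sequence inside its own simulation and only then commits to one. The one-dimensional world, the hazard and goal positions, and the reward values are all hypothetical. Exhaustive enumeration like this is only practical for tiny problems. Larger problems need a smarter search.

```python
from itertools import product

HAZARD, GOAL = 2, 3  # hypothetical positions on a number line
MOVES = {"step": 1, "jump": 2}


def world_model(position: int, action: str) -> tuple[int, float]:
    """The agent's internal simulation: predicted next position and reward."""
    nxt = position + MOVES[action]
    reward = -1.0       # every move has a small cost
    if nxt == HAZARD:
        reward -= 10.0  # a costly mistake the agent wants to avoid
    if nxt == GOAL:
        reward += 10.0
    return nxt, reward


def plan(start: int, depth: int) -> tuple[tuple[str, ...], float]:
    best_seq: tuple[str, ...] = ()
    best_score = float("-inf")
    for seq in product(MOVES, repeat=depth):
        position, total = start, 0.0
        for action in seq:  # roll the sequence forward in the model, not the real world
            position, reward = world_model(position, action)
            total += reward
        if total > best_score:
            best_seq, best_score = seq, total
    return best_seq, best_score


print(plan(start=0, depth=2))  # (('step', 'jump'), 8.0)
```

Jumping first reaches the goal in the same number of moves. However, it lands on the hazard on the way, so the simulation steers the agent away from it before any real move is made.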

What are the practical applications of agentic AI?

This technology is already being deployed.

- Microsoft’s Windows Copilot isn’t just a search bar. It’s an agent that can understand your intent (“organize my files for the project”) and perform complex workflows across multiple applications.

- AutoGPT is an experimental framework that showcases the potential of autonomy. You give it a high-level goal, like “start an online business selling eco-friendly pet toys,” and it can independently research the market, outline a business plan, and even generate code for a website.

- Anthropic’s Claude functions as an agentic assistant. It can understand multi-step instructions, execute them, and adapt based on the results, maintaining context throughout a long and complex task.

What are the challenges in developing effective agentic AI?

Granting AI autonomy comes with significant hurdles.

- Safety and Alignment: How do you ensure an autonomous agent’s goals remain perfectly aligned with human values, especially in novel situations?

- Predictability: Highly adaptive systems can be hard to predict. How do you guarantee the agent won’t take a valid but undesirable path to its goal?

- Robustness: The real world is messy and unpredictable. An agent must be able to handle unexpected errors and situations gracefully without failing or getting stuck.

- Explainability: When an agent makes a complex series of decisions, can we understand why it chose that specific path? This is crucial for debugging and trust.

What technical frameworks support agent decision-making?

The core of an agent isn’t just a large language model. It’s the sophisticated cognitive machinery that directs it. This machinery relies on several key mechanisms:

- Planning and Reasoning Frameworks: These are the agent’s “brain.” They can use algorithms such as Monte Carlo Tree Search or hierarchical planning to map out strategies and sequences of actions that achieve complex goals.

- Self-Reflection and Memory Systems: An agent needs to learn. These systems allow it to store its experiences—both successes and failures—and review its own performance to refine its strategies for the next attempt.

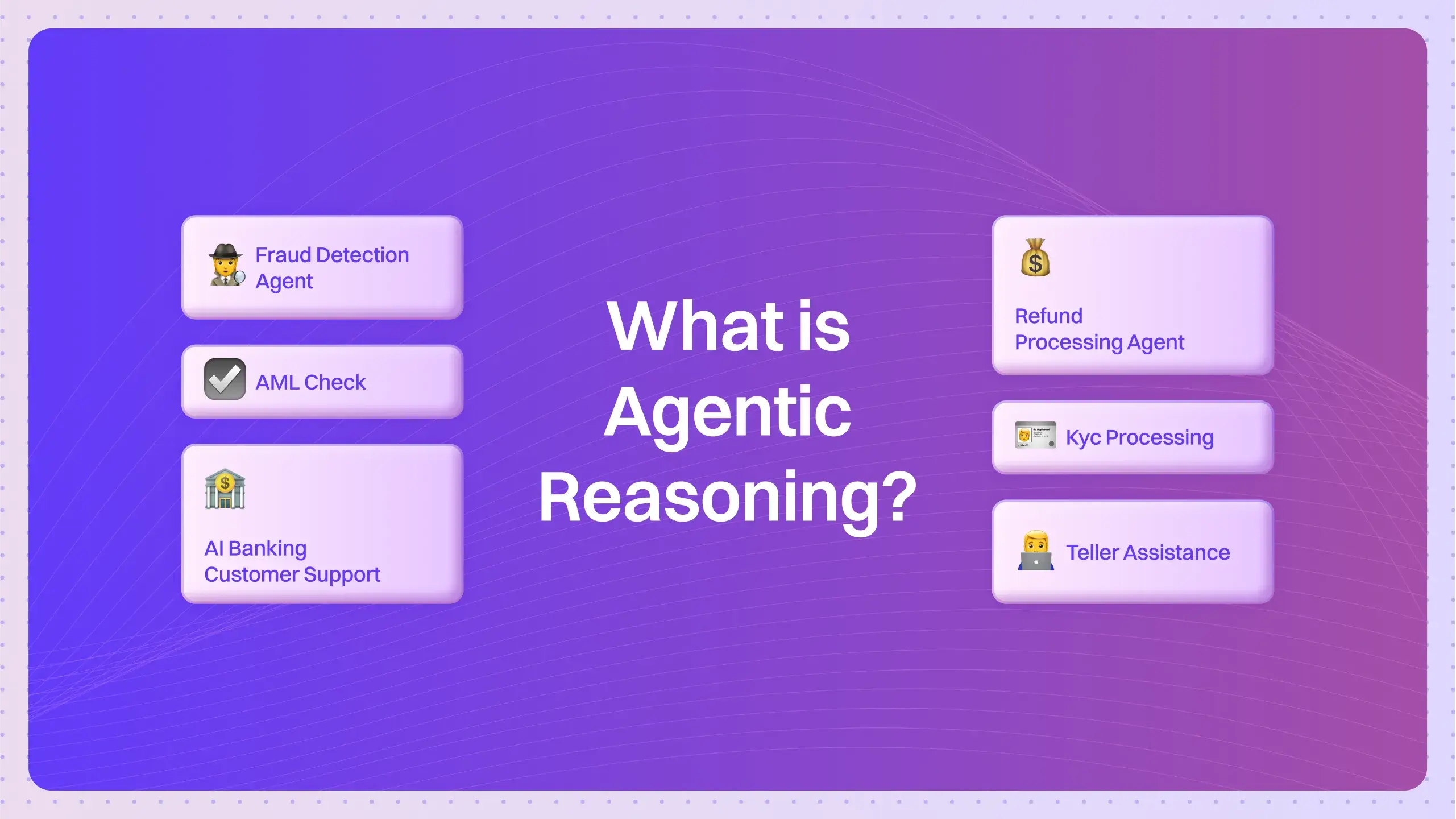

- Multi-Agent Coordination Protocols: For truly massive problems, you need a team. These protocols are the rules of engagement that allow multiple specialized agents to communicate, negotiate, and divide tasks to work together collaboratively.
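
Of the mechanisms above, hierarchical planning is the easiest to show in a few lines. The sketch below breaks a compound task into primitive steps using a hypothetical method table. It is deliberately minimal. Real hierarchical task network (HTN) planners also do the following, which this sketch omits:

- They choose among alternative methods for the same task.
- They check preconditions before applying a method.

Monte Carlo Tree Search is not shown.

```python
# Hypothetical method table: each compound task maps to an ordered list of subtasks.
METHODS: dict[str, list[str]] = {
    "plan_trip": ["book_flight", "book_hotel"],
    "book_flight": ["search_flights", "compare_prices", "purchase_ticket"],
    "book_hotel": ["search_hotels", "purchase_room"],
}


def decompose(task: str) -> list[str]:
    """Recursively expand compound tasks until only primitive actions remain."""
    if task not in METHODS:
        return [task]  # primitive: the agent can execute it directly
    steps: list[str] = []
    for subtask in METHODS[task]:
        steps.extend(decompose(subtask))
    return steps


print(decompose("plan_trip"))
# ['search_flights', 'compare_prices', 'purchase_ticket', 'search_hotels', 'purchase_room']
```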

Quick Test: Spot the Agent

Imagine two AI systems designed to help with travel planning.

System A asks you for your destination, dates, and budget, then shows you a list of flights and hotels that match.

System B asks for the same info, but then asks, “I see your calendar is free the week before. Flights are 30% cheaper then. Should I look for options?”

Which one is exhibiting agentic behavior, and why?

Deeper Questions on Agentic AI

How do agentic AI systems balance autonomy with safety constraints?

Through carefully designed “guardrails.” This involves setting explicit rules, defining prohibited actions, and using monitoring systems that can intervene if the agent’s behavior deviates from safe parameters.
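
Here is a minimal sketch of such guardrails. It assumes a hypothetical agent that proposes `(action, cost)` pairs, and it combines two checks:

- A deny-list of prohibited actions.
- A spending monitor that blocks any action that would push the running total past a budget.

The action names and the budget are illustrative.

```python
from dataclasses import dataclass, field


@dataclass
class Guardrail:
    prohibited: set[str]
    budget: float
    spent: float = 0.0
    log: list[str] = field(default_factory=list)

    def allow(self, action: str, cost: float) -> bool:
        if action in self.prohibited:  # explicit rule: never allowed
            self.log.append(f"blocked prohibited action {action!r}")
            return False
        if self.spent + cost > self.budget:  # monitor: intervene before overspending
            self.log.append(f"blocked {action!r}: would exceed budget")
            return False
        self.spent += cost
        return True


def run(proposed: list[tuple[str, float]], guard: Guardrail) -> list[str]:
    """Execute only the proposed actions that pass the guardrail, in order."""
    return [action for action, cost in proposed if guard.allow(action, cost)]


guard = Guardrail(prohibited={"delete_all_files"}, budget=500.0)
proposed = [("search_flights", 0.0), ("book_flight", 350.0),
            ("delete_all_files", 0.0), ("book_hotel", 300.0)]
print(run(proposed, guard))  # ['search_flights', 'book_flight']
print(guard.log)
```

Note that the check sits outside the agent's own decision-making. The agent can propose anything, but only approved actions are executed.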

What role does reinforcement learning play in developing agentic AI?

A huge one. Reinforcement Learning from Human Feedback (RLHF) and other methods are used to train agents by rewarding desirable behaviors and penalizing undesirable ones. This helps the agent learn complex strategies and align its actions with human preferences.
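
RLHF itself trains a reward model from human preference data, which is too much to show here. The underlying idea of learning from rewards and penalties can be sketched with plain tabular Q-learning, which is a different and simpler technique. The example uses a hypothetical five-cell corridor. Reaching the goal earns +1, and every other step costs a small penalty. Everything here, including the hyperparameters, is illustrative.

```python
import random

GOAL = 4  # corridor cells 0..4; cell 4 is the goal
ACTIONS = ("left", "right")


def step(state: int, action: str) -> tuple[int, float, bool]:
    """Hypothetical environment: reward +1 at the goal, small penalty otherwise."""
    nxt = max(0, state - 1) if action == "left" else min(GOAL, state + 1)
    reward = 1.0 if nxt == GOAL else -0.01
    return nxt, reward, nxt == GOAL


def train(episodes: int = 500, alpha: float = 0.5, gamma: float = 0.9,
          epsilon: float = 0.2, seed: int = 0) -> dict[tuple[int, str], float]:
    rng = random.Random(seed)
    q = {(s, a): 0.0 for s in range(GOAL + 1) for a in ACTIONS}
    for _ in range(episodes):
        state = 0
        for _ in range(100):  # cap episode length
            if rng.random() < epsilon:
                action = rng.choice(ACTIONS)  # explore
            else:
                action = max(ACTIONS, key=lambda a: q[(state, a)])  # exploit
            nxt, reward, done = step(state, action)
            # Rewarded actions gain value; penalized ones lose it.
            future = 0.0 if done else gamma * max(q[(nxt, a)] for a in ACTIONS)
            q[(state, action)] += alpha * (reward + future - q[(state, action)])
            state = nxt
            if done:
                break
    return q


q = train()
policy = {s: max(ACTIONS, key=lambda a: q[(s, a)]) for s in range(GOAL)}
print(policy)
```

Nobody tells the agent to move right. That strategy emerges because it is the only one that keeps earning reward.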

How do agentic AI systems handle uncertainty in real-world environments?

They use probabilistic models. Instead of assuming they have perfect information, they maintain a set of beliefs about the world and update them as they gather new data. This allows them to make robust decisions even with incomplete or noisy information.
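
The belief update described here is Bayes' rule. Below is a minimal sketch over a discrete set of hypotheses. It assumes a hypothetical noisy door sensor whose error rates are made up for illustration.

```python
def update_belief(prior: dict[str, float],
                  likelihood: dict[str, dict[str, float]],
                  observation: str) -> dict[str, float]:
    """Bayes' rule: the posterior is proportional to prior times likelihood."""
    unnormalized = {h: prior[h] * likelihood[h][observation] for h in prior}
    total = sum(unnormalized.values())
    return {h: p / total for h, p in unnormalized.items()}


prior = {"open": 0.5, "closed": 0.5}
# P(sensor reading | true state) for a hypothetical noisy sensor.
likelihood = {
    "open": {"sees_open": 0.8, "sees_closed": 0.2},
    "closed": {"sees_open": 0.3, "sees_closed": 0.7},
}

belief = update_belief(prior, likelihood, "sees_open")
print(round(belief["open"], 3))  # 0.727
belief = update_belief(belief, likelihood, "sees_open")
print(round(belief["open"], 3))  # 0.877
```

Each reading shifts the belief without ever making it certain. The agent can act on the current belief while staying open to contrary evidence.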

What mechanisms enable AI agents to adapt their strategies based on feedback?

Feedback loops are central to their design. Whether it’s direct feedback from a user or indirect feedback from the outcome of an action, the agent uses this information to update its internal world model and planning algorithms.
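
One simple way for an agent to fold feedback into its world model is to count observed outcomes. The sketch below keeps a frequency table for each (state, action) pair and turns it into outcome probabilities that a planner could use. The state, action and outcome names are hypothetical.

```python
from collections import Counter, defaultdict


class TransitionModel:
    """Learned world model: how often each outcome followed a (state, action) pair."""

    def __init__(self) -> None:
        self.counts = defaultdict(Counter)

    def update(self, state: str, action: str, outcome: str) -> None:
        # Every piece of feedback refines the model.
        self.counts[(state, action)][outcome] += 1

    def predict(self, state: str, action: str) -> dict[str, float]:
        seen = self.counts.get((state, action), Counter())
        total = sum(seen.values())
        return {o: n / total for o, n in seen.items()} if total else {}


model = TransitionModel()
for outcome in ["success", "success", "timeout", "success"]:
    model.update("checkout_page", "click_pay", outcome)
print(model.predict("checkout_page", "click_pay"))  # {'success': 0.75, 'timeout': 0.25}
```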

How do multi-agent systems coordinate their actions toward common goals?

Through communication protocols and shared goals. They might use a “contract net” where one agent announces a task and others bid on it, or they might have a central coordinating agent that delegates responsibilities.
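
Here is a toy version of the contract net protocol. It assumes hypothetical worker agents that bid their current workload as a cost and decline tasks outside their skills. The manager announces each task and collects bids. It awards the task to the lowest bidder, breaking ties by name.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Worker:
    name: str
    skills: set[str]
    load: int = 0

    def bid(self, task: str) -> Optional[float]:
        """Return a cost estimate, or None to decline the task."""
        if task not in self.skills:
            return None
        return 1.0 + self.load  # busier agents bid higher


def contract_net(tasks: list[str], workers: list[Worker]) -> list[tuple[str, Optional[str]]]:
    awards: list[tuple[str, Optional[str]]] = []
    for task in tasks:
        # 1. Announce the task and collect bids from every worker.
        bids = []
        for worker in workers:
            cost = worker.bid(task)
            if cost is not None:
                bids.append((cost, worker.name, worker))
        if not bids:
            awards.append((task, None))  # nobody can take it
            continue
        # 2. Award the contract to the lowest bid; the name breaks ties.
        _, _, winner = min(bids, key=lambda b: (b[0], b[1]))
        winner.load += 1
        awards.append((task, winner.name))
    return awards


workers = [Worker("researcher", {"search", "summarize"}),
           Worker("writer", {"summarize", "draft"})]
print(contract_net(["search", "summarize", "draft", "summarize"], workers))
# [('search', 'researcher'), ('summarize', 'writer'), ('draft', 'writer'), ('summarize', 'researcher')]
```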

What ethical considerations are unique to autonomous AI agents?

Accountability is a major one. If an autonomous agent causes harm, who is responsible? The user, the developer, or the agent itself? We also face questions about job displacement, privacy, and the potential for misuse.

How does agentic AI approach problem-solving differently than programmatic systems?

A programmatic system follows a fixed flowchart. An agentic system explores a “problem space.” It is more like a detective exploring leads than a clerk following a checklist.

What is the relationship between large language models and agentic capabilities?

LLMs often serve as the “reasoning engine” or “world model” within an agentic system. They provide the natural language understanding and knowledge base, while the agentic framework provides the ability to plan and act on that knowledge.
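
This division of labor can be sketched as a loop in which a language model picks the next step and the surrounding framework executes it. The `fake_llm` function below is a hypothetical, hard-coded stand-in for a real model call, and the tool names and outputs are illustrative. No real LLM API is used.

```python
from typing import Callable

# Hypothetical tools the framework can execute on the model's behalf.
TOOLS: dict[str, Callable[[], str]] = {
    "search_flights": lambda: "found 3 flights",
    "book_cheapest": lambda: "booked cheapest flight",
}


def fake_llm(goal: str, history: list[str]) -> str:
    """Stand-in for an LLM: decides the next step from the goal and prior results."""
    if not history:
        return "CALL search_flights"
    if history[-1].startswith("found"):
        return "CALL book_cheapest"
    return "DONE"


def run_agent(goal: str, llm: Callable[[str, list[str]], str], max_steps: int = 5) -> list[str]:
    history: list[str] = []
    for _ in range(max_steps):
        decision = llm(goal, history)  # reasoning: the model chooses
        if decision == "DONE":
            break
        _, tool_name = decision.split(maxsplit=1)
        history.append(TOOLS[tool_name]())  # acting: the framework executes
    return history


print(run_agent("book me a cheap flight", fake_llm))
# ['found 3 flights', 'booked cheapest flight']
```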

How do memory and context retention enhance agent performance over time?

Memory allows an agent to learn from past interactions. It can remember user preferences, recall what strategies have failed before, and maintain a coherent plan across multiple steps and long periods.
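
Here is a minimal sketch of two kinds of memory mentioned here, using hypothetical names. One is a store of user preferences. The other is a record of which strategies failed for which task, so that the agent can skip them next time.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class AgentMemory:
    preferences: dict[str, str] = field(default_factory=dict)
    failures: set[tuple[str, str]] = field(default_factory=set)  # (task, strategy)

    def record(self, task: str, strategy: str, succeeded: bool) -> None:
        if succeeded:
            self.failures.discard((task, strategy))
        else:
            self.failures.add((task, strategy))

    def choose(self, task: str, candidates: list[str]) -> Optional[str]:
        """Return the first candidate strategy not remembered as a failure."""
        for strategy in candidates:
            if (task, strategy) not in self.failures:
                return strategy
        return None


memory = AgentMemory()
memory.preferences["seat"] = "aisle"
memory.record("book_flight", "airline_website", succeeded=False)
print(memory.choose("book_flight", ["airline_website", "travel_aggregator"]))  # travel_aggregator
print(memory.preferences["seat"])  # aisle
```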

What recent breakthroughs have advanced the capabilities of agentic AI?

The development of more powerful LLMs, improvements in reinforcement learning techniques, and the creation of open-source agentic frameworks like AutoGPT and BabyAGI have massively accelerated research and development in this area.

We are at the beginning of the agentic era. The goal is no longer just to build AI that can answer our questions, but to build AI that can help us achieve our goals.