Table of Contents

ToggleIn December 2024, Amazon Bedrock introduced multimodal capabilities, enabling AI to process contracts, scanned forms, images, and diagrams in the same workflow.

For enterprises, it marked a shift from handling data in isolation to analyzing it as a connected whole.

But most organizations are still behind. Nearly 85% continue to process text and visuals separately, leaving insights trapped in silos and slowing decision-making.

With the multimodal AI market expected to grow from $1.83B in 2024 to $42.38B by 2034, the companies that embrace integrated visual intelligence early will define the competitive edge for the next decade..

Why Multimodality Matters?

Beyond Words: The Case for Visual Intelligence

Documents in modern enterprises are rarely “just text.” Consider:

- Insurance claims with photos of damage attached.

- Compliance documents with diagrams, signatures, and stamps.

- Financial reports where charts and tables carry as much meaning as written analysis.

Relying on separate systems for text and visuals creates:

- Data silos that block cross-validation.

- Manual effort to stitch together insights.

- Higher risk of oversight, especially in compliance-heavy industries.

Market Momentum

The rapid market growth isn’t surprising. With the rise of multimodal models, enterprises now have the infrastructure, APIs, and scalability to unify their data processing. Bedrock’s December release makes this shift practical at enterprise scale.

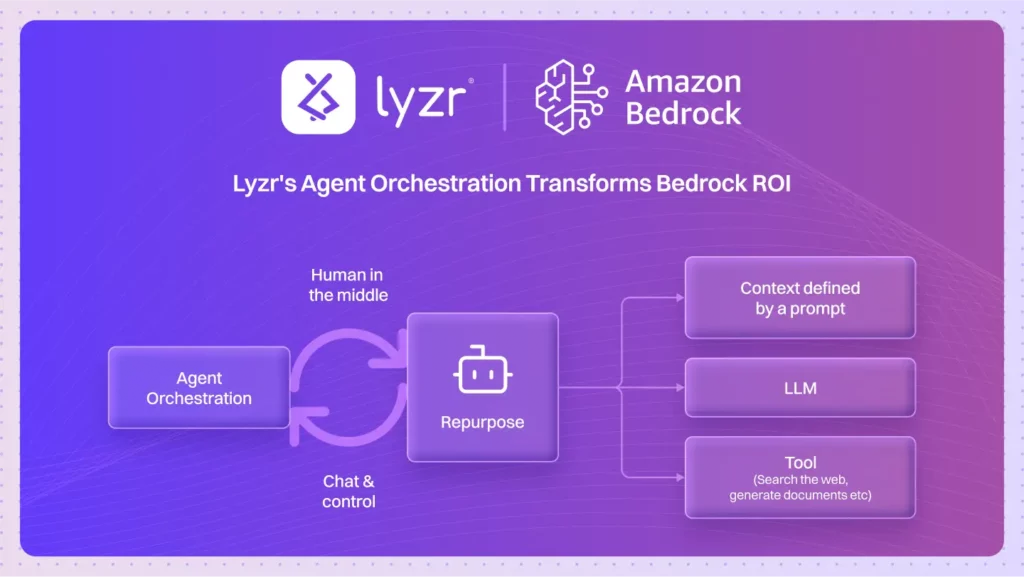

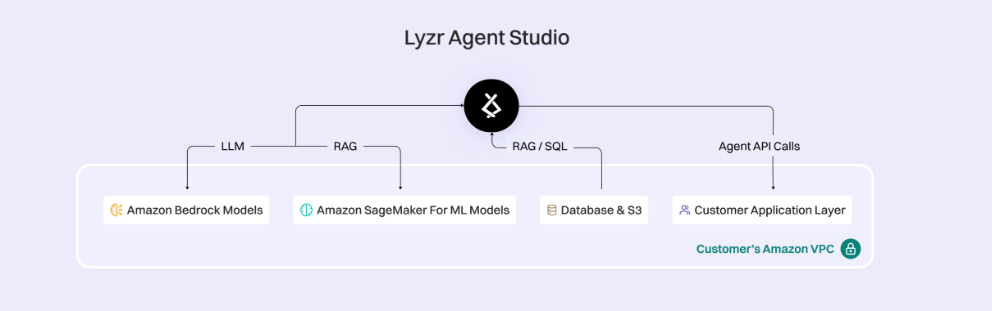

Lyzr + Bedrock: How It Works

Agent Framework Meets Bedrock

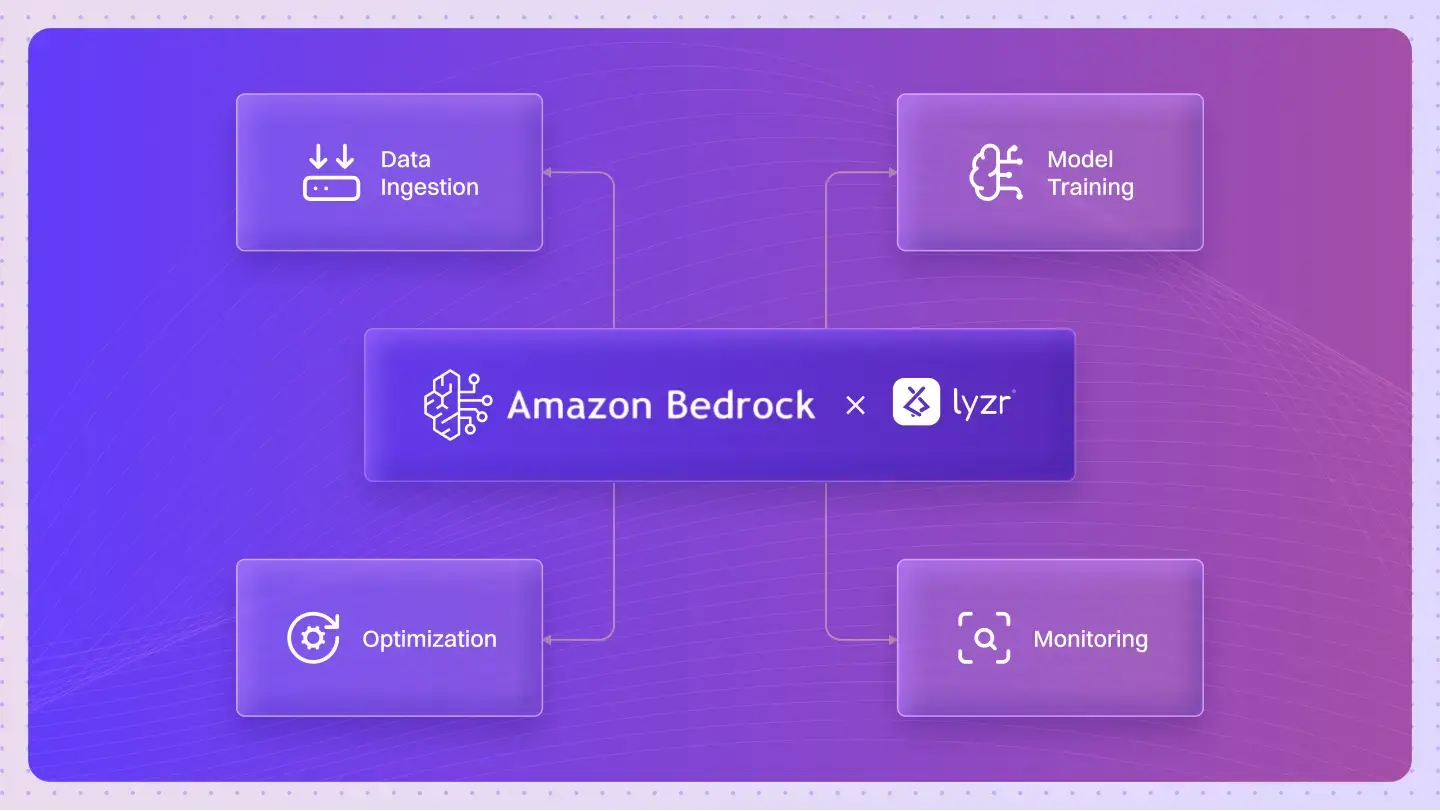

Lyzr’s agent framework integrates Bedrock’s multimodal processing directly into enterprise workflows. Here’s the mechanism:

- Visual Agents – Specialized agents ingest and interpret images, diagrams, and visual layouts.

- Text Agents – Handle natural language documents, contracts, and reports.

- Cross-Modal Coordination – Lyzr’s orchestrator ensures that both text and visuals are analyzed together, with results validated across modalities.

This creates a single pipeline that replaces today’s fragmented processes.

Model Specialization in Action

Lyzr doesn’t rely on a single model for every task. Instead, it orchestrates the right Bedrock models based on workload needs:

- Claude 3.5 Sonnet → High-accuracy, nuanced visual reasoning. Perfect for interpreting diagrams, multi-page contracts, or regulatory forms.

- Nova Models → Optimized for cost-effective batch image processing. Ideal for scenarios like thousands of scanned invoices or inspection photos.

This tiered approach balances accuracy, speed, and cost efficiency, a must for enterprises scaling multimodal AI.

Real-World Proof: Insurance Claim Processing

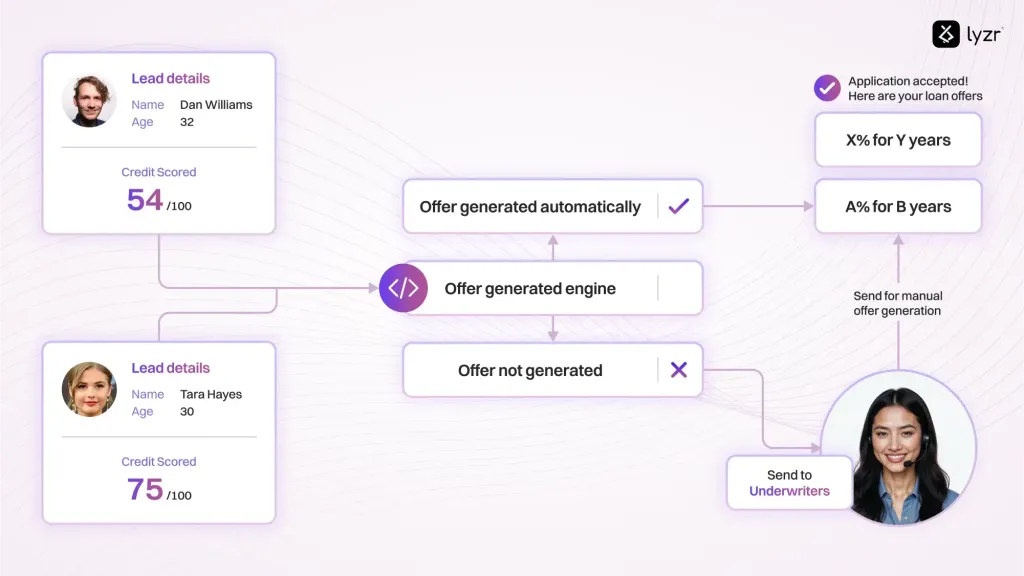

To see the impact, look no further than insurance claims.

Traditional processing involves:

- One system reading claim forms.

- Another reviewing uploaded photos.

- A human adjudicator reconciling them.

With Lyzr’s multimodal orchestration:

- Claim text, damage photos, and supporting documents are processed together.

- Cross-modal validation checks whether the textual description matches the visual evidence.

- Structured insights are automatically generated for faster adjudication.

The results

- 65% reduction in adjudication time.

- Higher accuracy through visual + textual alignment.

- Elimination of silos between document and image processing systems.

Comparative Snapshot

Here’s how traditional document workflows stack up against Lyzr multimodal orchestration with Bedrock:

| Dimension | Traditional Processing | Lyzr Multimodal Processing |

| Data Flow | Text and images processed separately | Unified pipeline for text + visuals |

| Accuracy | Dependent on manual reconciliation | Cross-modal validation improves accuracy |

| Speed | Weeks (due to silos and manual checks) | Hours or less |

| Scalability | Limited—requires additional staff as volume grows | Scales automatically via Bedrock |

| Compliance | Higher risk of oversight | Audit-ready, consistent across modalities |

The Road Ahead: Video, Real-Time, and Scale

Expanding Modalities in 2025

Bedrock’s December release was just the beginning. By Q2 2025, Lyzr will extend its multimodal orchestration to video analysis.

This means enterprises can handle:

- Surveillance feeds for real-time anomaly detection.

- Manufacturing quality control, where live video detects defects on assembly lines.

- Content moderation for digital platforms, analyzing both text overlays and moving visuals.

All Within AWS Boundaries

Crucially, all of this happens inside AWS’s compliant infrastructure, ensuring SOC2, GDPR, ISO 27001 alignment while keeping data securely within enterprise boundaries.

Why Enterprises Should Act Now

The case is clear: waiting risks falling behind. Here’s what enterprises stand to gain by acting early:

- Competitive edge in industries where speed and accuracy matter (insurance, healthcare, finance).

- Operational savings by reducing redundant systems and manual reconciliation.

- Future-readiness as Bedrock expands modalities to video and beyond.

By the time the multimodal market hits $42B by 2034, the leaders will already be those who integrated early.

Closing Thoughts

The December 2024 Bedrock update didn’t just add another feature, it signaled a shift to true multimodal intelligence at enterprise scale.

With Lyzr’s agent framework orchestrating Bedrock’s capabilities, businesses finally have a way to break down silos between text and visuals, unlock faster insights, and prepare for a future where even video feeds flow through the same AI pipeline.

Enterprises that act now won’t just automate, they’ll dominate.

Book a demo to see how

Book A Demo: Click Here

Join our Slack: Click Here

Link to our GitHub: Click Here