Stop guessing what actions are good.

Start building a better strategy.

Policy gradient methods are a family of reinforcement learning algorithms that directly optimize the policy (the strategy an AI agent uses to choose its actions) by gradient ascent on the expected cumulative reward, rather than deriving the policy from a learned value function.

Think of it like learning to cook by trial and error.

Instead of memorizing a cookbook that tells you the exact “value” of adding a pinch of salt (a value-based method), you just start cooking.

You make a dish, have someone taste it, and get feedback.

“Too salty.”

“Not enough spice.”

Based on that direct feedback, you slightly adjust your technique for the next dish. You’re not learning a complex theory of taste; you’re directly improving your cooking policy.

This matters because it’s the key to teaching AI skills that aren’t just simple choices.

It unlocks the ability to learn nuanced, continuous actions – like how to smoothly turn a steering wheel or delicately grip an object with a robotic hand.

What is a Policy Gradient method?

It’s a way to directly teach an AI agent how to behave.

The “policy” is the agent’s brain.

It’s a function, usually a neural network, that takes the state of the environment as input and outputs an action (or a probability of taking each action).

Policy Gradient methods use calculus to figure out how to change the parameters of this neural network.

After the agent tries something and gets a reward (or penalty), the algorithm calculates a “gradient.”

This gradient is just a direction.

It points the way to adjust the network’s parameters to make the good actions more likely and the bad actions less likely in the future.

You’re literally pushing the policy up the hill of “total expected reward.”
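To make the hill-climbing picture concrete, here is a minimal sketch in plain Python. It assumes a toy problem with three actions and fixed, made-up rewards, and a softmax policy whose only parameters are one preference score per action. Because the problem is so small, the gradient of the expected reward can be computed exactly; real methods have to estimate it from sampled experience.

```python
import math

def softmax(logits):
    """Turn raw preference scores into action probabilities."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def expected_reward(logits, rewards):
    """J(theta): the average reward under the current policy."""
    return sum(p * r for p, r in zip(softmax(logits), rewards))

def exact_gradient(logits, rewards):
    """For a softmax policy, dJ/dtheta_i = pi_i * (r_i - J)."""
    probs = softmax(logits)
    j = sum(p * r for p, r in zip(probs, rewards))
    return [p * (r - j) for p, r in zip(probs, rewards)]

def gradient_ascent(logits, rewards, lr=0.5, steps=200):
    """Repeatedly take a small step uphill on the expected reward."""
    for _ in range(steps):
        grad = exact_gradient(logits, rewards)
        logits = [t + lr * g for t, g in zip(logits, grad)]
    return logits

rewards = [1.0, 0.0, 0.5]   # hypothetical reward for each action
start = [0.0, 0.0, 0.0]     # start from a uniform policy
final = gradient_ascent(start, rewards)
```

After training, `softmax(final)` puts most of its probability on the first action, and `expected_reward(final, rewards)` is higher than the 0.5 the uniform policy earns.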

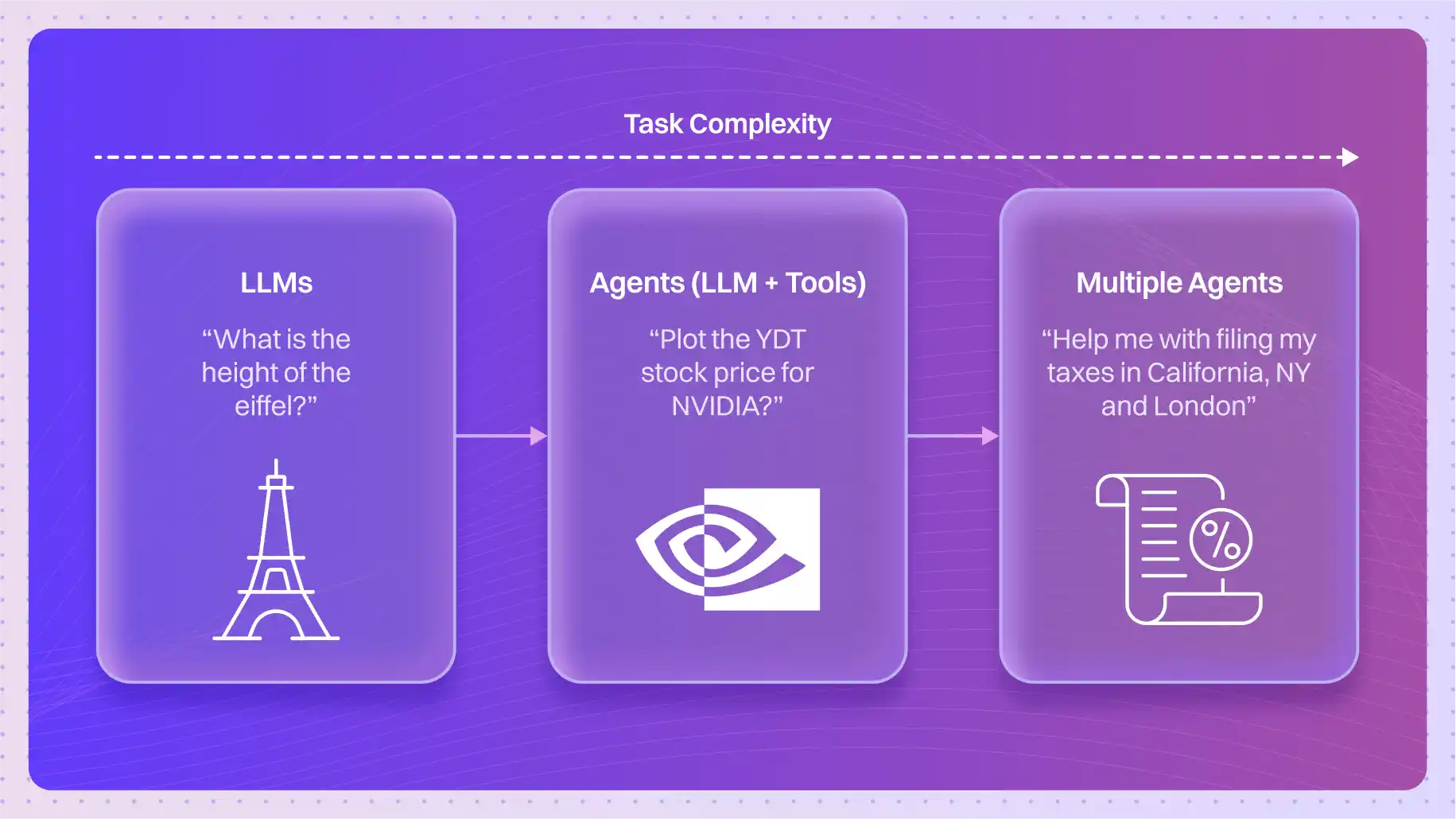

How do Policy Gradient methods differ from other reinforcement learning approaches?

They learn differently. It’s a fundamental shift in strategy.

Policy Gradient vs. Value-Based Methods (like Q-Learning):

Value-based methods first try to learn a value function.

They create a huge, detailed map that estimates the reward you’ll get from taking any action in any state. The policy is simple: just pick the action with the highest value on the map.

Policy Gradients skip the map-making.

They directly learn the policy itself. This is often far more practical when the action space is continuous, as in robotics, where there are infinitely many possible actions. You can’t tabulate a value for every one of them, but you can learn a function that outputs a good one.

Policy Gradient vs. Model-Based Methods:

Model-based methods try to learn the rules of the world.

They build an internal simulation of the environment. Then, they use that simulation to plan the best course of action.

Policy Gradients are “model-free.”

They don’t need to understand the physics of the world. They learn directly from experience, which makes them more flexible for incredibly complex environments where building an accurate model is nearly impossible.

What are the key advantages of Policy Gradient methods?

They excel where other methods struggle.

- Continuous Action Spaces: This is their superpower. They can output a precise steering angle for a car or the exact torque for a robotic joint, not just “turn left” or “turn right.”

- Stochastic Policies: They can learn policies that are purposefully random. This is crucial for games like rock-paper-scissors or for an agent that needs to explore its environment without getting stuck in a rut.

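- Better Convergence: Because the policy’s parameters change gradually, action probabilities shift smoothly instead of flipping abruptly the way a greedy value-based policy can. In many complex, high-dimensional problems this makes training better behaved, although these methods typically settle on a local rather than a global optimum.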
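The rock-paper-scissors point from the list above can be checked with a few lines of arithmetic. This sketch assumes the usual payoffs (+1 for a win, 0 for a tie, -1 for a loss) and an opponent who always picks the best counter to your policy; the function name is illustrative.

```python
# Payoff to our player; action order is rock, paper, scissors.
PAYOFF = [
    [0, -1, 1],   # we play rock     vs rock, paper, scissors
    [1, 0, -1],   # we play paper
    [-1, 1, 0],   # we play scissors
]

def worst_case_payoff(policy):
    """Expected payoff against the opponent move that hurts us most."""
    payoffs = []
    for opp in range(3):
        payoffs.append(sum(policy[a] * PAYOFF[a][opp] for a in range(3)))
    return min(payoffs)

always_rock = [1.0, 0.0, 0.0]      # a deterministic policy
uniform = [1 / 3, 1 / 3, 1 / 3]    # a purposefully random policy
```

Here `worst_case_payoff(always_rock)` is -1, because the opponent simply plays paper every time, while the uniform policy cannot be exploited and breaks even.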

What are the limitations of Policy Gradient methods?

They aren’t a silver bullet. They come with their own set of challenges.

The biggest issue is high variance.

The feedback an agent gets can be very noisy. A lucky series of random events might lead to a high reward, tricking the algorithm into thinking a bad policy is actually a good one. This makes the learning process unstable and slow.

They are also sample inefficient.

They often require millions of attempts to learn a task that a human could pick up in minutes. They need a massive amount of experience to filter out the noise and find the true signal of a good policy.

What are common Policy Gradient algorithms?

The field has evolved rapidly to solve these limitations.

The foundational algorithm is REINFORCE, but it’s rarely used in practice due to its high variance.

The real breakthroughs came with Actor-Critic methods.

These combine Policy Gradients (the “Actor” that decides what to do) with a value function (the “Critic” that judges the action). This drastically reduces variance and stabilizes learning.

Today, the most popular and effective variant is Proximal Policy Optimization (PPO).

Developed by OpenAI, PPO is known for its reliability and performance. OpenAI used it to train the control policy for a robotic hand that manipulates and solves a Rubik’s Cube. DeepMind’s AlphaStar agent, which reached Grandmaster level in StarCraft II, was also trained with actor-critic policy gradient techniques.

What technical mechanisms drive Policy Gradient learning?

The core idea is to represent the policy with a powerful function approximator and then intelligently update it.

It starts with Stochastic Policy Parameterization.

We use a neural network as the policy. For a given state, the network doesn’t just output one action. Instead, it outputs a probability distribution over all possible actions. The agent then samples from this distribution to pick its next move. This is how it explores.
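As a rough sketch of that sampling step, the snippet below stands in a fixed list of scores for the network’s output on one state (an assumption for illustration; a real policy would compute these from the state) and samples actions from the resulting softmax distribution.

```python
import math
import random

def softmax(logits):
    """Convert raw scores into a probability distribution."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def sample_action(logits, rng):
    """Pick an action index at random, weighted by the policy's probabilities."""
    probs = softmax(logits)
    return rng.choices(range(len(probs)), weights=probs, k=1)[0]

rng = random.Random(0)
logits = [2.0, 1.0, 0.0]   # stand-in for the network's output on one state
counts = [0, 0, 0]
for _ in range(10_000):
    counts[sample_action(logits, rng)] += 1
```

The highest-scoring action is chosen most often, but the others still get tried occasionally, which is where the exploration comes from.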

The simplest update rule is REINFORCE. The agent plays an entire game (an episode), collects all the rewards, and then goes back and strengthens the actions that led to a high final score.
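The “go back over the episode” step usually boils down to computing, for every time step, how much reward followed it. A minimal sketch, assuming a discount factor `gamma` (not mentioned above, but common in practice; set it to 1.0 for the plain sum):

```python
def returns_to_go(rewards, gamma=0.99):
    """For each step t, the discounted sum of rewards from t to the episode's end."""
    returns = [0.0] * len(rewards)
    running = 0.0
    # Walk backwards so each return reuses the one after it.
    for t in reversed(range(len(rewards))):
        running = rewards[t] + gamma * running
        returns[t] = running
    return returns
```

For example, `returns_to_go([1, 1, 1], gamma=0.5)` gives `[1.75, 1.5, 1.0]`: earlier actions get credit for everything that came after them.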

But the modern solution is Actor-Critic architectures.

This is a game-changer.

The “Actor” is the policy network, deciding on actions.

The “Critic” is a second neural network that learns a value function.

After the Actor takes an action, the Critic provides immediate, less noisy feedback: “Hey, that action was better (or worse) than I expected for this state.” This targeted feedback is much more efficient than waiting until the end of the game, which dramatically speeds up and stabilizes learning.
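The Critic’s “better or worse than I expected” signal is commonly implemented as a temporal-difference (TD) error. The sketch below is a deliberately simplified, single-state version with a softmax Actor and scalar value estimates; the function names, learning rates, and `gamma` are assumptions for illustration, and real implementations use neural networks for both parts.

```python
import math

def softmax(logits):
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def td_error(reward, value_s, value_next, done, gamma=0.99):
    """Critic's surprise: (reward + discounted next value) minus current value."""
    target = reward + (0.0 if done else gamma * value_next)
    return target - value_s

def actor_critic_step(logits, value_s, action, reward, value_next, done,
                      gamma=0.99, actor_lr=0.1, critic_lr=0.1):
    """One update of the Actor's logits and the Critic's value estimate."""
    delta = td_error(reward, value_s, value_next, done, gamma)
    probs = softmax(logits)
    # Actor: push the taken action up if delta > 0, down if delta < 0.
    new_logits = [
        l + actor_lr * delta * ((1.0 if i == action else 0.0) - p)
        for i, (l, p) in enumerate(zip(logits, probs))
    ]
    # Critic: move the value estimate toward the observed target.
    new_value = value_s + critic_lr * delta
    return new_logits, new_value, delta
```

Note that the update happens after a single step, using the Critic’s estimate of what comes next instead of waiting for the episode to finish.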

Quick check: Robotics Challenge

You’re training a robot arm to pick up a coffee mug. The actions involve setting the precise angles for six different joints, all continuous values. Would a classic Q-learning (value-based) or a Policy Gradient method be a more natural fit?

Answer: Policy Gradient. Classic Q-learning has to pick the highest-valued action, which is straightforward with a handful of discrete choices but awkward over six continuous joint angles. Policy Gradients output continuous actions directly, which is exactly the kind of nuanced control this task needs.

Deep Dive: Answering the Tougher Questions

How does the REINFORCE algorithm work?

It’s a Monte Carlo method. The agent completes a full episode, storing its states, actions, and rewards. At the end, it calculates the total return. Then, it goes back and increases the probability of actions that led to a high return and decreases the probability of actions that led to a low return.
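Here is a minimal REINFORCE sketch on a made-up task: each episode has three steps, action 1 pays a reward of 1 and action 0 pays nothing, and the policy is a two-logit softmax with no state input. Those choices, the learning rate, and the use of the undiscounted return from each step onward (a common refinement of the total return) are all assumptions for illustration.

```python
import math
import random

def softmax(logits):
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def run_episode(theta, rng, horizon=3):
    """Toy task (hypothetical): action 1 pays 1.0, action 0 pays 0.0."""
    actions, rewards = [], []
    for _ in range(horizon):
        a = rng.choices([0, 1], weights=softmax(theta), k=1)[0]
        actions.append(a)
        rewards.append(1.0 if a == 1 else 0.0)
    return actions, rewards

def reinforce_update(theta, actions, rewards, lr=0.1):
    """One REINFORCE step: theta += lr * sum_t G_t * grad log pi(a_t)."""
    probs = softmax(theta)  # the policy that generated this episode
    grad = [0.0 for _ in theta]
    g = 0.0
    for a, r in zip(reversed(actions), reversed(rewards)):
        g += r  # undiscounted return from this step onward
        for i in range(len(theta)):
            # For a softmax policy, d log pi(a) / d theta_i = 1[i == a] - pi_i
            grad[i] += g * ((1.0 if i == a else 0.0) - probs[i])
    return [t + lr * gi for t, gi in zip(theta, grad)]

def train(episodes=1000, seed=0):
    rng = random.Random(seed)
    theta = [0.0, 0.0]
    for _ in range(episodes):
        actions, rewards = run_episode(theta, rng)
        theta = reinforce_update(theta, actions, rewards)
    return theta

theta = train()
```

After training, `softmax(theta)` strongly favors action 1. Individual updates are noisy, which is the high-variance problem in miniature.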

What is the difference between on-policy and off-policy Policy Gradient methods?

On-policy methods (like REINFORCE, A2C, and PPO) require that the data used for updates come from the current policy. This is inefficient because experience goes stale once the policy changes, although PPO softens this by reusing each batch for a few epochs. Off-policy methods (like DDPG and SAC) can learn from data collected by earlier versions of the policy, typically stored in a replay buffer, which makes them more sample-efficient.
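One common way to reuse data from an older policy is importance sampling: each sample is reweighted by how much more (or less) likely the current policy is to have taken that action. This is only one of several off-policy mechanisms, and the two-action setup, probabilities, and rewards below are made up for illustration.

```python
import random

def off_policy_estimate(samples, new_probs, old_probs):
    """Average reward under the NEW policy, estimated from OLD-policy samples."""
    total = 0.0
    for action, reward in samples:
        ratio = new_probs[action] / old_probs[action]  # importance weight
        total += ratio * reward
    return total / len(samples)

rng = random.Random(0)
old_probs = [0.5, 0.5]   # older policy that collected the data
new_probs = [0.9, 0.1]   # current policy we want to evaluate
rewards = [1.0, 0.0]     # hypothetical reward for each action

samples = []
for _ in range(20_000):
    a = rng.choices([0, 1], weights=old_probs, k=1)[0]
    samples.append((a, rewards[a]))

estimate = off_policy_estimate(samples, new_probs, old_probs)
true_value = sum(p * r for p, r in zip(new_probs, rewards))
```

With enough samples, `estimate` lands close to `true_value` even though the current policy never acted. The catch is that the weights can become very large when the two policies differ a lot, which inflates variance.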

How do Actor-Critic methods improve upon basic Policy Gradient algorithms?

They reduce variance. Instead of using the noisy, high-variance return from an entire episode to judge an action (as REINFORCE does), they use the Critic’s more stable estimate of the action’s value. This provides a much cleaner learning signal, at the price of some bias from the Critic’s approximation errors.

What is the Policy Gradient Theorem?

It’s the mathematical foundation for these methods. It expresses the gradient of the expected return with respect to the policy parameters in a form that involves only the policy’s own action probabilities and the rewards that follow. That is why the policy can be optimized directly from sampled experience, without knowing the environment’s dynamics.
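One common way to write the result, for episodes of length T without discounting, is shown below. The notation is an assumption, since nothing above fixes symbols: θ are the policy parameters, π_θ(a | s) is the probability of action a in state s, τ is a sampled episode, and G_t is the reward collected from step t onward.

```latex
\nabla_\theta J(\theta)
  = \mathbb{E}_{\tau \sim \pi_\theta}\!\left[
      \sum_{t=0}^{T-1} \nabla_\theta \log \pi_\theta(a_t \mid s_t)\, G_t
    \right],
\qquad
G_t = \sum_{k=t}^{T-1} r_k
```

The environment’s transition probabilities do not appear anywhere on the right-hand side, so the expectation can be estimated simply by running the policy and averaging.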

How do Policy Gradient methods handle continuous action spaces?

The policy network outputs the parameters of a probability distribution, typically a Gaussian (normal) distribution. For example, it might output the mean and standard deviation. The agent then samples an action from this distribution to get a specific, continuous value.
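A minimal sketch of that idea for a single continuous action, such as a steering angle. The mean and standard deviation are passed in as plain numbers here; in a real agent they would be outputs of the policy network. The function names are illustrative.

```python
import math
import random

def sample_gaussian_action(mean, std, rng):
    """Draw one continuous action from the policy's Gaussian."""
    return rng.gauss(mean, std)

def gaussian_log_prob(action, mean, std):
    """Log-density of the action, the quantity the gradient is taken through."""
    var = std * std
    return -0.5 * math.log(2.0 * math.pi * var) - (action - mean) ** 2 / (2.0 * var)

def grad_log_prob_mean(action, mean, std):
    """d log pi / d mean: points the mean toward the sampled action."""
    return (action - mean) / (std * std)

rng = random.Random(0)
actions = [sample_gaussian_action(0.3, 0.1, rng) for _ in range(10_000)]
average_action = sum(actions) / len(actions)
```

If a sampled action turns out well, the update nudges the mean toward it; a smaller standard deviation means less exploration around that mean.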

What are trust region methods in Policy Gradient algorithms?

These methods, like TRPO, constrain how much the policy can change in a single update step, typically by bounding the KL divergence between the old and new policies. This prevents a single bad update from catastrophically destroying the policy’s performance, which makes improvement more stable and, in theory, close to monotonic.
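The sketch below illustrates only the constraint, not full TRPO: it measures the KL divergence between the old and new action distributions and shrinks a proposed step until the change fits inside a budget. The softmax policy, the `max_kl` value, and the halving schedule are assumptions for illustration.

```python
import math

def softmax(logits):
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def kl_divergence(old_probs, new_probs):
    """KL(old || new): how far the new policy has moved from the old one."""
    return sum(p * math.log(p / q) for p, q in zip(old_probs, new_probs) if p > 0.0)

def backtrack_to_trust_region(old_logits, step, max_kl=0.01, shrink=0.5, max_tries=20):
    """Scale the proposed step down until the policy change fits the KL budget."""
    old_probs = softmax(old_logits)
    scale = 1.0
    for _ in range(max_tries):
        candidate = [l + scale * s for l, s in zip(old_logits, step)]
        if kl_divergence(old_probs, softmax(candidate)) <= max_kl:
            return candidate
        scale *= shrink
    return list(old_logits)  # no acceptable step found: keep the old policy

old_logits = [0.0, 0.0]
proposed_step = [5.0, -5.0]   # an overly aggressive update
new_logits = backtrack_to_trust_region(old_logits, proposed_step)
```

The aggressive step is accepted only after being scaled down, so the new policy still moves in the proposed direction but stays close to the old one.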

How does Proximal Policy Optimization (PPO) work?

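PPO is a simpler approximation of TRPO. It discourages large policy updates by using a “clipped” objective function, which removes the incentive to push the probability ratio between the new and old policies outside a small interval around 1. This makes it easier to implement and cheaper to compute while still providing much of the stability benefit of a trust region.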
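For a single sample, the clipped objective can be sketched as follows. Here `ratio` is the new policy’s probability of the action divided by the old policy’s, `advantage` says how much better than expected the action was, and `clip_eps=0.2` is a commonly used value, assumed here for illustration.

```python
def ppo_clip_objective(ratio, advantage, clip_eps=0.2):
    """PPO's per-sample surrogate: the more pessimistic of the raw and clipped terms."""
    clipped_ratio = max(1.0 - clip_eps, min(ratio, 1.0 + clip_eps))
    return min(ratio * advantage, clipped_ratio * advantage)
```

With a positive advantage of 1.0, a ratio of 1.5 scores only 1.2: once the policy has moved 20% toward a good action, pushing further earns nothing extra. With a negative advantage, a ratio of 1.5 is not clipped and scores the full -1.5, so moving toward a bad action is still penalized in full.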

What is the role of entropy in Policy Gradient methods?

An entropy bonus is often added to the objective function. Entropy measures the randomness of the policy. By encouraging higher entropy, we encourage the agent to explore more and prevent it from converging prematurely to a sub-optimal deterministic policy.
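As a small sketch, entropy for a discrete policy and the resulting bonus look like this. The coefficient `beta` and the function names are assumptions; in practice the coefficient is a tuned hyperparameter.

```python
import math

def entropy(probs):
    """Shannon entropy of the action distribution (higher means more random)."""
    return -sum(p * math.log(p) for p in probs if p > 0.0)

def objective_with_entropy_bonus(policy_objective, probs, beta=0.01):
    """Add a small reward for keeping the policy's options open."""
    return policy_objective + beta * entropy(probs)
```

A uniform policy over two actions has entropy log 2 (about 0.693), while a fully deterministic policy has entropy 0, so the bonus shrinks as the policy commits to one action.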

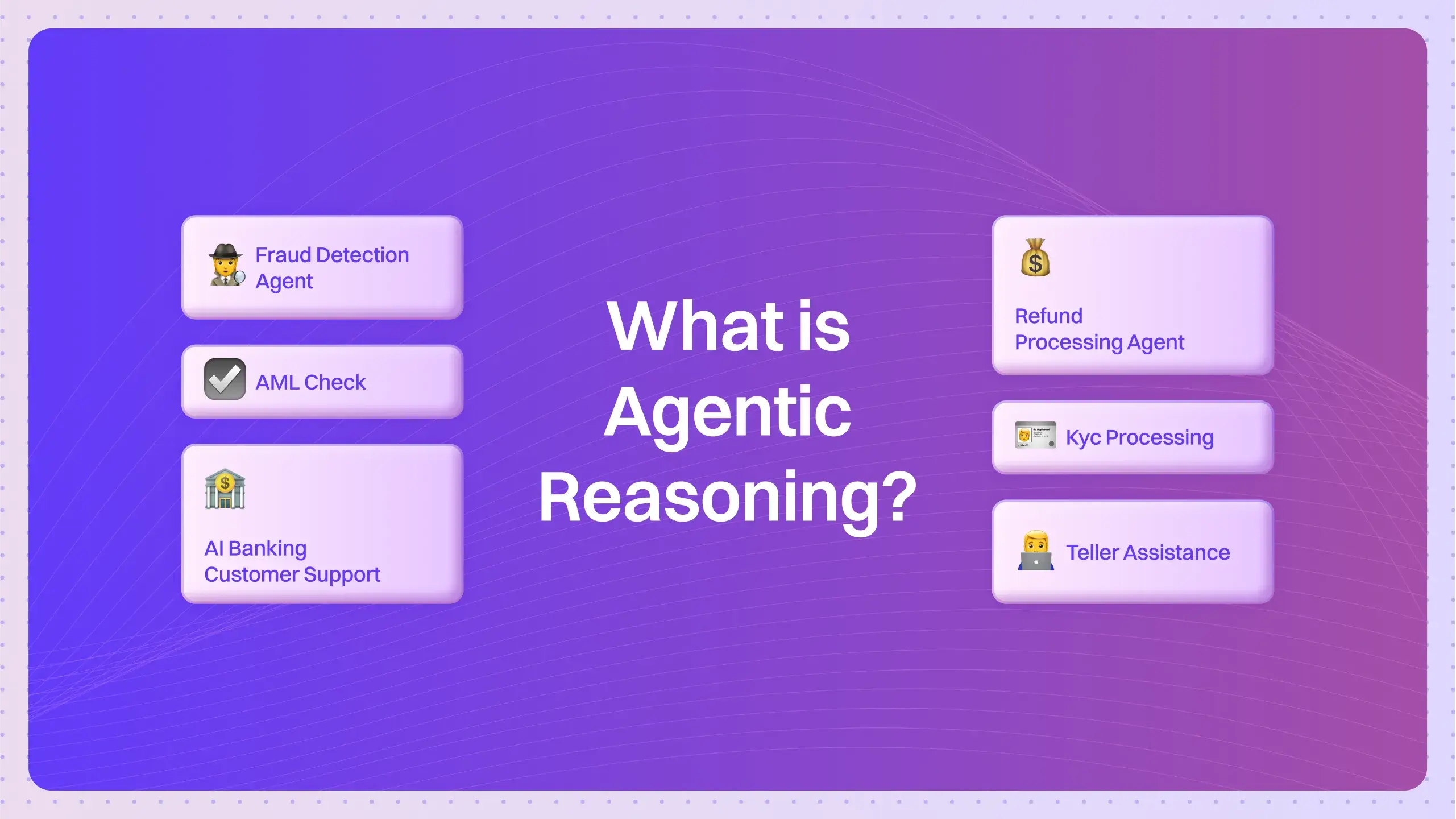

How are Policy Gradient methods applied in multi-agent reinforcement learning?

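In settings with multiple agents, each agent can be controlled by its own policy network. They all learn simultaneously, treating the other agents as part of the dynamic environment. Because those other agents keep changing their behavior, the environment looks non-stationary to each learner, which makes training harder. This setup is a common starting point for training AI teams in complex games or simulations.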

What recent advances have improved Policy Gradient performance?

Beyond PPO and Actor-Critic, research focuses on better exploration strategies, learning from offline datasets (offline RL), and improving sample efficiency to make these powerful methods practical for more real-world problems.

Policy Gradient methods represent a fundamental shift in how we teach machines to act. They are behind many of the most impressive results in modern robotics and strategic AI.