The Leadership You Knew Is Over

Sarah checks her dashboard Tuesday morning. Her team is online and so are their AI agents.

One agent processed 200 inquiries and flagged six for human judgment. Another drafted the weekly report now waiting in Sarah’s queue. A third is halfway through compliance, surfacing patterns for her team to analyze. The agents aren’t replacing anyone; they’re doing the groundwork so her team can focus on edge cases, strategic calls, and anything that needs context and nuance.

But managing this isn’t like managing people. Her human team needs something new: clarity on where they add value, when to trust agent work, when to step in.

And that’s where she’s navigating questions her MBA never touched: What’s leadership when your team includes copilots that think but don’t feel? When your job isn’t just to inspire humans, but to orchestrate collaboration between people and machines?

Here’s the uncomfortable truth: Sarah’s confusion is everyone’s confusion.

Research surveying 3,600+ employees proves it. The workforce is ready: 81% say AI will make them better at their jobs. But only 22% say leadership has communicated any kind of plan. (McKinsey & Company, 2025)

“The biggest barrier to AI success isn’t technology. It’s not employee resistance. It’s leaders who don’t know how to lead this yet.”

If you think about it, everything you learned about management was built for humans only.

That playbook assumed everyone on your team works the same way. AI agents don’t.

They are copilots that handle the repetitive, computational, high-volume work, but someone still has to lead them.

Someone has to manage intelligence itself, both kinds. Someone has to navigate “the space between”, that weird gap when humans work alongside things that think but don’t feel.

The old leadership model breaks here.

This paper helps you unlearn what leadership was, so you can learn what it’s becoming.

Why Traditional Leadership Models Broke

For decades, leadership theory rested on a stable foundation: people, organized in hierarchies, pursuing goals through coordination and motivation. The manager’s role was clear: translate strategy into action, align effort with objectives, develop talent, resolve conflict, inspire performance.

The tools? They were human-centric: one-on-ones, feedback loops, performance reviews, team-building exercises.

The metrics? Human-scaled: quarterly goals, annual reviews, 30-60-90 day plans. The emotional labor was entirely interpersonal: reading the room, managing egos, building trust, navigating politics.

These models worked because they matched the pace and nature of human work. Decisions moved through meeting cycles. Changes required buy-in. Execution depended on sustained effort over time.

Enter AI agents. They don’t fit the model.

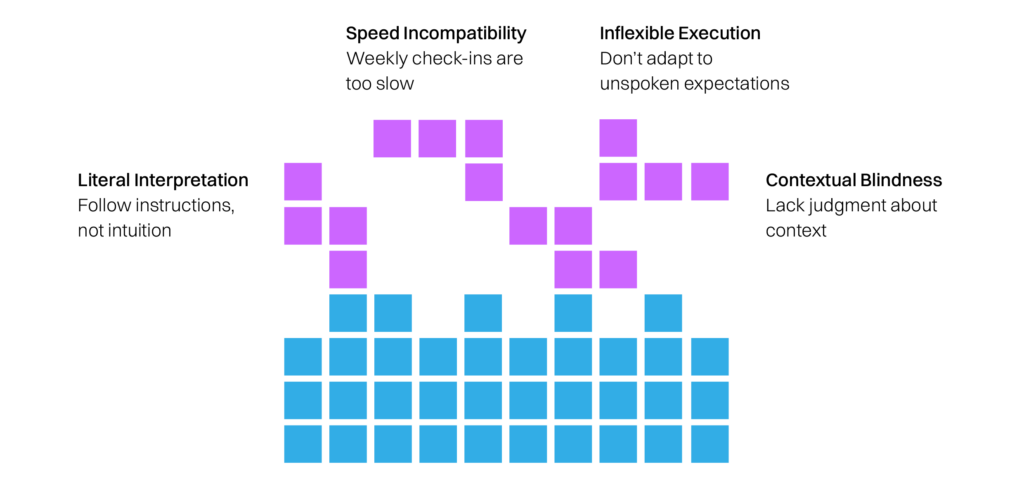

They:

- Operate at speeds incompatible with weekly check-ins.

- Scale without hiring friction.

- Execute with precision but lack judgment about context.

- Follow instructions literally, not intuitively.

- Don’t get tired and don’t need motivation, but also don’t adapt to unspoken expectations or read between the lines.

How your core leadership practices shift

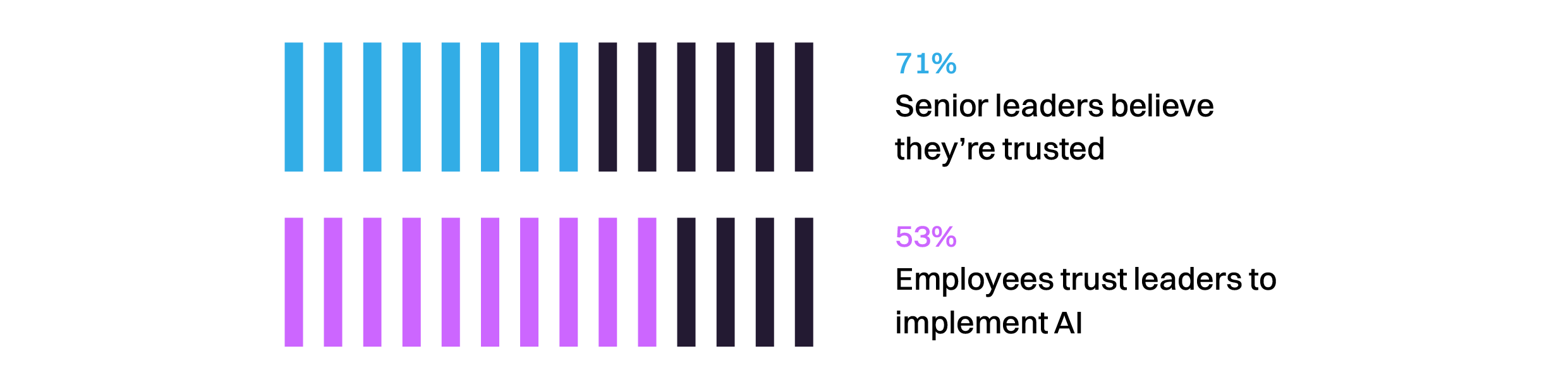

The data confirms this challenge is widespread. McKinsey’s 2025 workplace AI report found that C-suite leaders are more than twice as likely to cite employee readiness as a barrier as they are to acknowledge their own role. Yet only 53% of employees trust leaders to implement AI effectively, while 71% of senior leaders believe they are trusted.

The gap isn’t technical literacy. It’s conceptual.

Three Realities Leaders Must Accept

Before we get to what you need to unlearn, let’s establish what’s actually happening.

Reality one: Agents are copilots, not tools

This distinction matters. Tools are inert. They do what you tell them, when you tell them, with no agency in between.

Agents act differently. They make recommendations, generate outputs, and surface insights. They have workflows that intersect with human workflows. They need configuration, ongoing refinement, and governance.

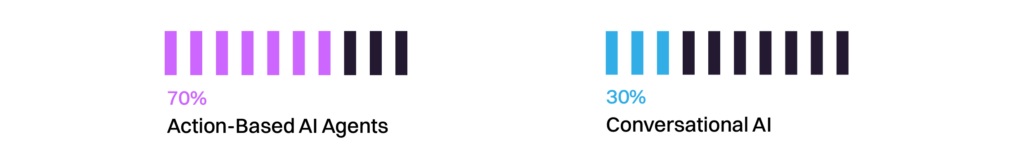

Lyzr’s State of AI Agents report confirms this shift: over 70% of AI adoption efforts now focus on action-based AI agents rather than conversational AI alone. Enterprises aren’t just deploying chatbots; they’re deploying agents that execute tasks, process transactions, and coordinate workflows.

But here’s the critical difference from full automation: they support human decision-making, they don’t replace it. The human reviews, refines, and approves. The agent does the groundwork.

Reality two: Speed asymmetry is permanent

Humans and agents operate on different time scales. An agent can process a thousand data points while you’re reading one email.

This creates a fundamental management challenge: how do you maintain oversight when execution happens faster than you can observe it?

You can’t. The old model of “review everything before it ships” dies here.

Reality three: The human experience is the variable

Agent performance is relatively predictable. Give it the same input, you get consistent output.

Human performance, especially in hybrid teams, is highly variable. Morale, trust, psychological safety, and role clarity become the critical levers.

Data from MIT CISR shows that by 2027, an estimated 72% of employees will collaborate with generative AI in some capacity. This massive shift means the human dimension, how people feel about, respond to, and integrate with AI copilots, becomes the primary variable determining success.

What You Need To Unlearn

Unlearn the idea that leadership is about managing one type of team

For a century, great leaders were “people people.” They read the room. They motivated teams. They resolved conflict with empathy and built culture through connection. That still matters, and actually becomes more important.

But now you’re also leading collaboration between humans and AI copilots. This creates a new leadership challenge: helping your team understand where they’re irreplaceable. Because when an AI agent takes over the tedious parts of someone’s job, the question becomes: what’s their new value-add?

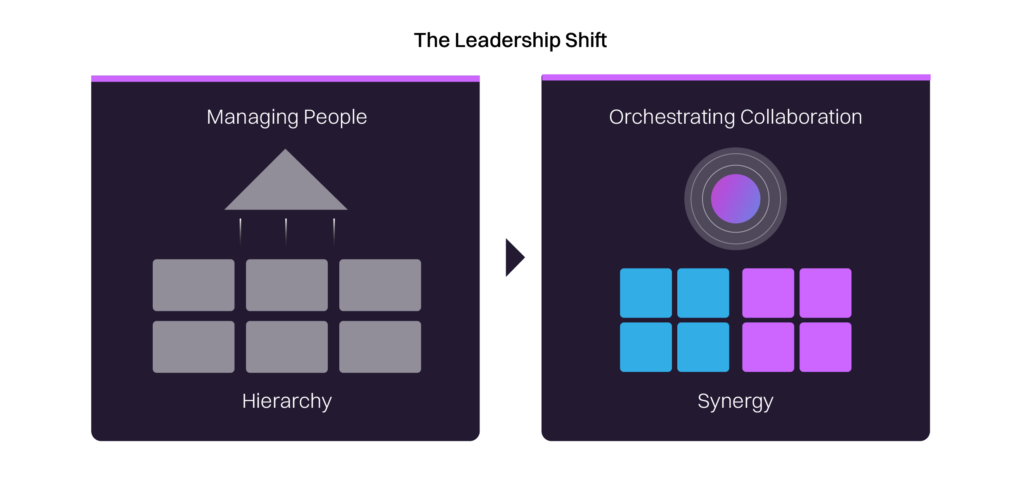

The new reality: Leadership is about orchestrating human-AI collaboration where both sides make each other better.

What broke: 74% of companies struggle to scale AI value. Why? BCG research found that 70% of AI implementation challenges are people-and-process issues. Only 10% are algorithm problems. Organizations focus on the tech, but the real challenge is helping humans understand their evolved role, not as competitors to AI, but as collaborators with it.

Lyzr’s research across 3,000+ enterprise conversations reveals where this collaboration is already happening: 64% of AI agent adoption centers on business process automation, with customer service (20%), sales (17%), and marketing (16%) leading the charge. These aren’t experimental use cases; they’re production deployments transforming how work gets done.

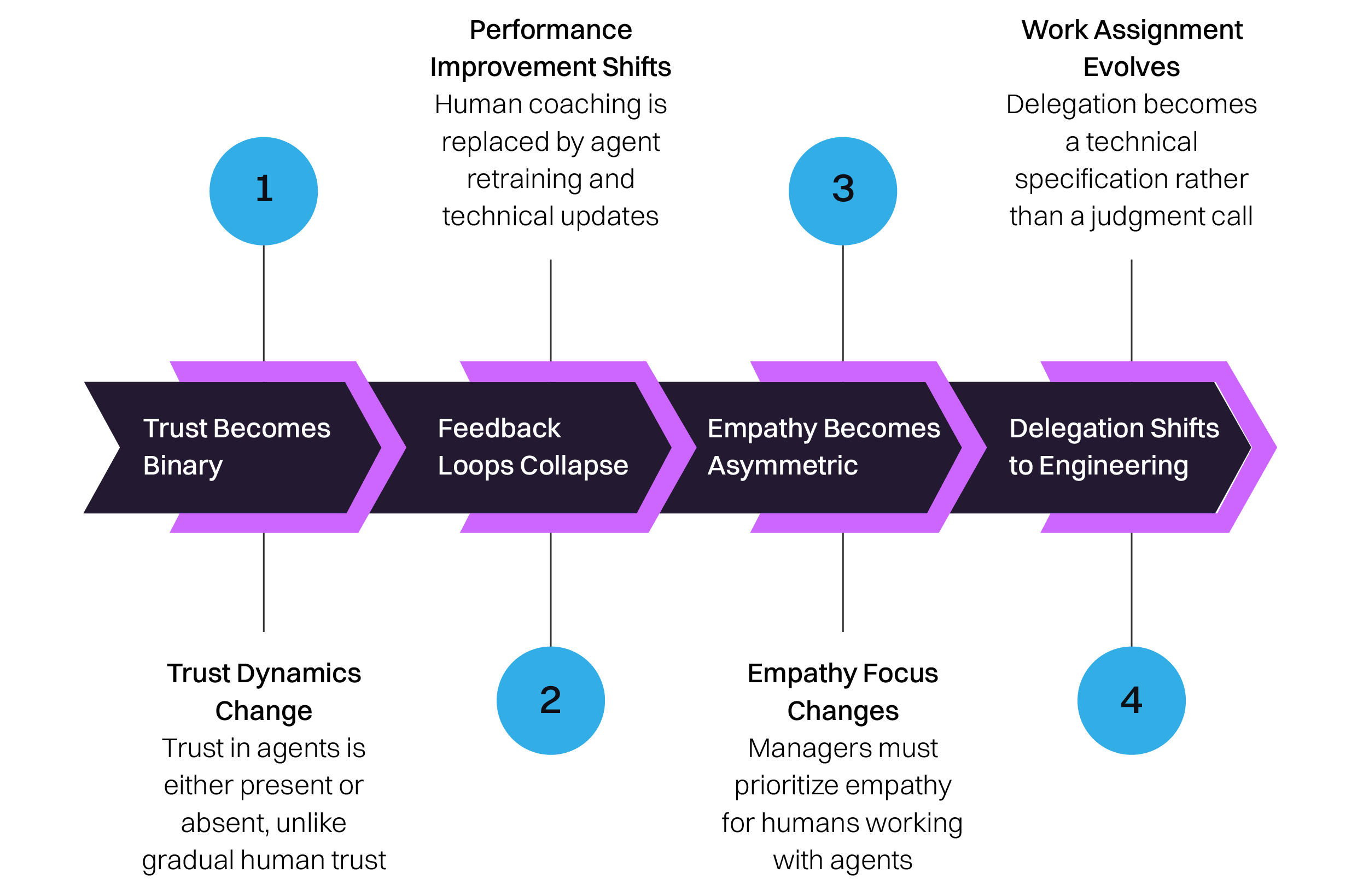

Unlearn that trust is built gradually

You’ve spent your career building trust through consistency. Show up. Deliver. Be reliable. Over time, your team learns to trust your judgment.

With AI agents? Trust is binary.

Either the output is reliable or it isn’t. There’s no “I trust them to figure it out” with a model. There’s no building rapport over coffee. You can’t give an agent the benefit of the doubt based on past performance.

MIT’s research on human-AI collaboration found something surprising: human-AI teams often underperform compared to either humans or AI working alone, especially on decision-making tasks. Why? Because people don’t know when to trust the machine and when to trust themselves. They’re stuck in a weird middle ground, second-guessing both.

The trust problem isn’t technical. It’s psychological. Your team needs to know: When do we defer to the agent? When do we override it? What are the rules of engagement?

The new reality: Trust must be architected with explainability, guardrails, and clear decision rights.

What broke: EY research shows 65% of employees say they don’t know how to use AI ethically, and 77% worry about legal risks. The anxiety isn’t about the tech failing; it’s about not knowing when to trust it.

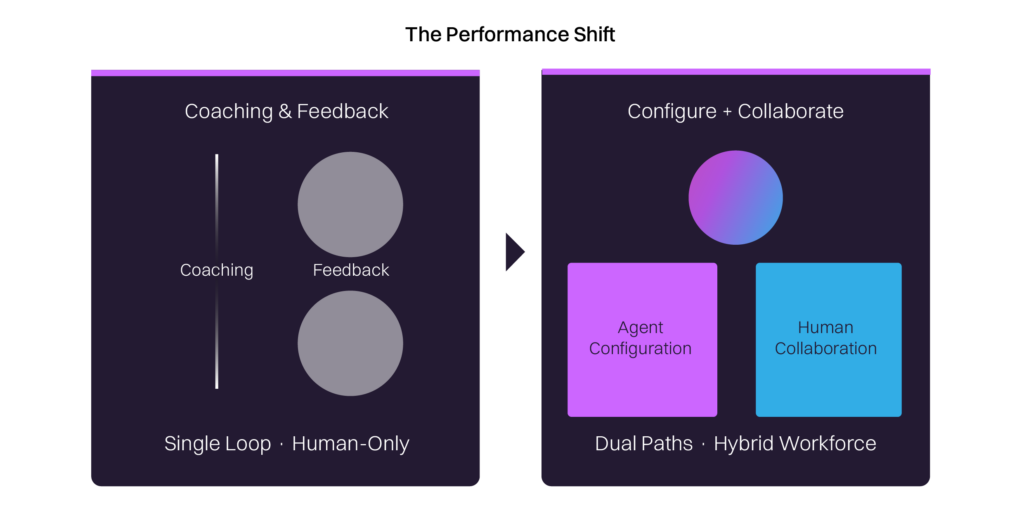

Unlearn that feedback makes things better

Your whole career, feedback has been a gift. You coach someone, they improve. You give a quarterly review, they course-correct. Performance management is an iterative, human process.

Agents don’t improve through feedback. They improve through retraining.

You can’t pull an AI agent aside and say “hey, I noticed you’ve been making more errors lately, what’s going on?” There’s no coaching conversation. There’s no “let’s work on your communication skills.”

When an agent underperforms, the solution isn’t motivational. It’s technical. You adjust parameters. You retrain the model. You change the prompt.

The new reality: You improve agent performance through configuration. And you improve human performance by helping them understand how to work with agents.

What broke: McKinsey found that C-suite leaders dramatically underestimate how much employees already use AI. Leadership thinks 4% of employees use GenAI extensively. Reality? 13%. And that gap is growing. While leaders debate strategy, employees are already experimenting, without guidance, without guardrails, without feedback loops.

Unlearn that your job is oversight

The classic management move: review before approval. Nothing ships without your sign-off. You’re the checkpoint, the quality control, the final word.

That doesn’t scale in agentic systems.

An AI agent can process thousands of transactions while you’re in your morning standup. You cannot be the bottleneck. The speed mismatch is too extreme.

So what’s your job now? Not oversight. Orchestration.

You design the system. You set the parameters. You define what good looks like. Then you monitor for exceptions: the 5% that breaks the pattern, not the 95% that runs smoothly.
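One way to picture exception-based monitoring is as a simple routing rule: the leader defines what "normal" looks like, and only results outside that range reach a human. The sketch below is illustrative, not a description of any particular product. The `AgentResult` fields, the confidence threshold, and the amount limit are all assumptions made up for the example.

```python
from dataclasses import dataclass


@dataclass
class AgentResult:
    item_id: str
    confidence: float  # hypothetical: agent's self-reported confidence, 0.0 to 1.0
    amount: float      # hypothetical: business value at stake for this item


def needs_human_review(result, min_confidence=0.9, max_amount=10_000.0):
    """Return True when a result falls outside the agreed 'normal' pattern."""
    return result.confidence < min_confidence or result.amount > max_amount


def split_for_review(results):
    """Separate results that can proceed from those a human should look at."""
    exceptions = [r for r in results if needs_human_review(r)]
    auto_approved = [r for r in results if not needs_human_review(r)]
    return auto_approved, exceptions


results = [
    AgentResult("inq-001", 0.98, 120.0),
    AgentResult("inq-002", 0.62, 80.0),      # low confidence
    AgentResult("inq-003", 0.95, 25_000.0),  # high value at stake
    AgentResult("inq-004", 0.99, 40.0),
]

auto_approved, exceptions = split_for_review(results)
print([r.item_id for r in exceptions])  # ['inq-002', 'inq-003']
```

The thresholds are the leadership decision here: tightening them sends more work to humans, loosening them lets more run unattended.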

The new reality: Leaders ensure quality by designing systems that self-correct, keeping humans in the loop without over-automating.

What broke: Only 26% of organizations can move AI projects from proof-of-concept to production. Why? They’re trying to manage AI like they manage people: with oversight, approval chains, and manual quality checks. It doesn’t work.

The challenge is widespread: Lyzr’s State of AI Agents report found that 32% of enterprises stall after the pilot phase, never reaching production deployment. Meanwhile, 62% of organizations exploring AI agents lack a clear starting point, and 41% still treat them as side projects rather than core business initiatives.

The gap between experimentation and execution remains the defining barrier to AI value.

For a practical framework on bridging this gap, see Lyzr’s playbook.

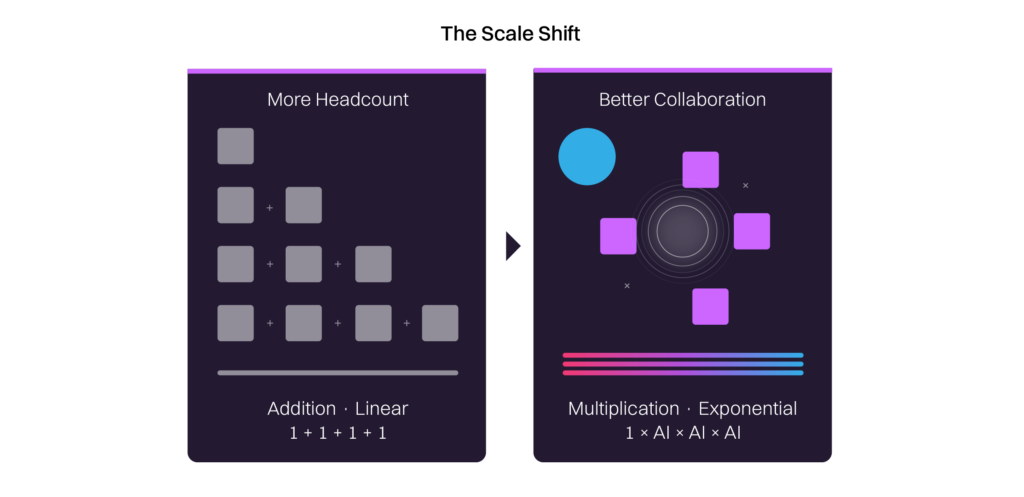

Unlearn that scale means replacing people with machines

For decades, growth meant hiring. Need more capacity? Add headcount. Want to enter new markets? Build bigger teams.

Agents break that equation, but not by replacing people. By augmenting them.

The pattern Lyzr sees repeatedly: companies deploy agents, expect headcount reductions, and instead discover their teams can accomplish exponentially more. An agent handles the repetitive work, surfaces insights, processes the high-volume tasks. The humans? They’re freed to do higher-value work: strategy, creativity, judgment calls, relationship building.

Scale now means augmentation, not automation. Your capacity isn’t about how many people you have. It’s about how effectively your humans collaborate with AI copilots. The companies winning at this aren’t automating jobs away. They’re building smaller, more strategic teams that orchestrate agents to multiply their impact.

BCG found that AI leaders pursue half as many opportunities as their peers, but scale them more successfully. They focus. They design for human-AI collaboration. They expect twice the ROI.

The new reality: Scale = better human-AI collaboration that multiplies what each person can accomplish.

What broke: 75% of workers now use AI at work, and 90% say it saves them time. But only 6% of organizations qualify as “AI high performers” (generating 5%+ EBIT impact). Most companies are dabbling. The winners are systematically rethinking how humans and AI work together, not replacing people, but making them exponentially more effective.

Interestingly, Lyzr’s data from 21,000+ AI agent builders reveals a democratization trend: while 70% come from developer backgrounds, 30% are business users from Product, Marketing, Sales, Customer Service, and HR teams. AI is no longer just a technical tool; it’s becoming a business enabler that teams across functions can leverage directly.

What To Learn Instead: The Agentic Leadership Model

You’ve unlearned the old model. Now what?

Here’s the new framework: three principles that define leadership in the age of hybrid human-AI teams.

Principle 1: Architect trust, don’t build it

Forget trust-building through rapport. Design trustworthy systems.

What this means:

- Make AI decisions explainable. If an agent recommends something, the logic should be traceable.

- Set clear boundaries. Define what agents can decide autonomously vs. what requires human approval.

- Run shadow modes first. Let agents generate recommendations without acting on them. Build confidence through observation.

- Accept that trust is fragile with machines. One agent mistake erodes trust faster than ten human mistakes. Plan for that.
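The boundary-setting and shadow-mode ideas above can be sketched as a small decision-rights table. This is a minimal illustration under stated assumptions: the action names, the `Mode` enum, and the `route` function are hypothetical and do not represent any specific platform’s API.

```python
from enum import Enum


class Mode(Enum):
    SHADOW = "shadow"  # agent only recommends; nothing is executed
    LIVE = "live"      # agent may act within its decision rights


# Hypothetical decision rights: actions the agent may take on its own.
# Anything not listed here requires human approval by default.
AUTONOMOUS_ACTIONS = {"categorize_ticket", "draft_reply"}


def route(action, rationale, mode):
    """Decide what happens to an agent recommendation and keep its rationale."""
    record = {"action": action, "rationale": rationale}  # traceable logic
    if mode is Mode.SHADOW:
        record["outcome"] = "logged_only"
    elif action in AUTONOMOUS_ACTIONS:
        record["outcome"] = "executed"
    else:
        record["outcome"] = "needs_human_approval"
    return record


print(route("draft_reply", "matches FAQ entry", Mode.SHADOW)["outcome"])
print(route("draft_reply", "matches FAQ entry", Mode.LIVE)["outcome"])
print(route("issue_refund", "order arrived damaged", Mode.LIVE)["outcome"])
```

Defaulting unlisted actions to human approval is a deliberate choice in this sketch: the agent’s autonomy has to be granted explicitly rather than assumed.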

Principle 2: Design for collaboration, not handoff

Your job isn’t to decide what agents do and what humans do, then never touch it again. It’s to design workflows where agents support human decision-making.

What this means:

- Map where agents add speed and where humans add judgment. Agents surface patterns and handle volume. Humans interpret, decide, strategize.

- Keep humans in the loop. Agents should surface recommendations, not make final calls. The human reviews, refines, approves.

- Make the handoffs clear. When does work move from agent to human? When does human insight feed back to improve the agent?

- Monitor collaboration quality, not just output volume. Are agents surfacing the right things for human review? Are humans making better decisions because of agent support?

Principle 3: Lead the human transition with clarity

You can’t empathize with an agent. But you must address your humans’ fear, not of being replaced, but of becoming irrelevant.

This fear is real and widespread. 75% of workers worry AI will make certain jobs obsolete. 45% believe AI will impact their job security. 33% want AI banned from the workplace completely.

What this means:

- Address the fear directly. Don’t pretend AI integration is seamless. Acknowledge: “Your role is changing. Let me tell you how.”

- Clarify what’s irreplaceable. Name the specific human capabilities that AI can’t replicate: judgment in ambiguous situations, ethical reasoning, creative strategy, relationship building.

- Reframe AI as liberation, not threat. “The tedious work goes to the agent. You get to focus on what actually requires your expertise.”

- Model your own learning. You’re figuring this out too. Vulnerability builds trust.

The Psychological Shift: From Threat to Partnership

When AI agents join your team, people get scared. Not because agents are taking their jobs, but because nobody’s clearly explained what their job becomes.

But here’s what the research also shows: AI isn’t eliminating jobs. It’s transforming them.

90% of workers using AI say it saves them time. IBM found that 66% of organizations report significant productivity gains from AI, including 48% in augmenting workforce capabilities rather than replacing them. The pattern is consistent: agents handle volume and repetition. Humans handle judgment and creativity.

So if you think about it, the real problem isn’t job loss. It’s role confusion.

When an agent takes over parts of someone’s job, leadership’s responsibility is to help them see their new purpose as an elevation, not a diminishment.

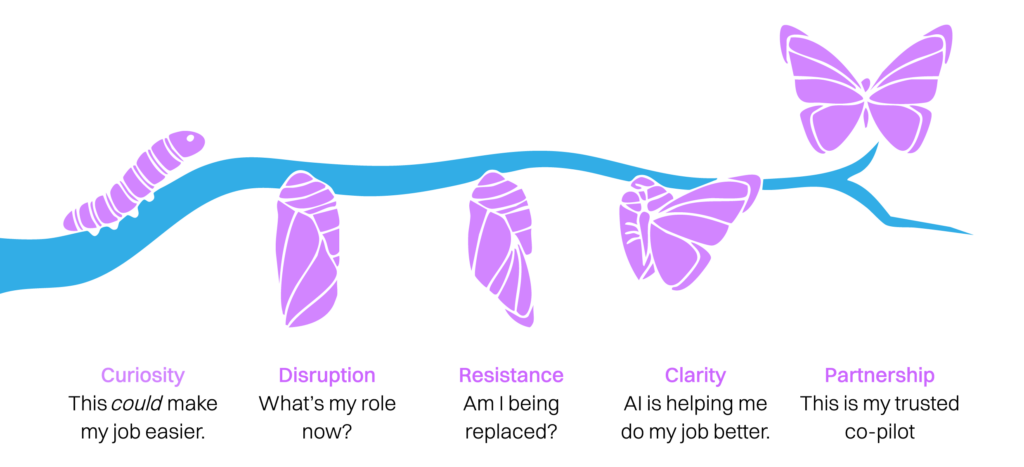

The Five Stages of AI Integration

Most teams stall at Stage 3 because nobody addressed the fear. The ones that reach Stage 5? They have leaders who made the human role crystal clear.

What Makes the Difference

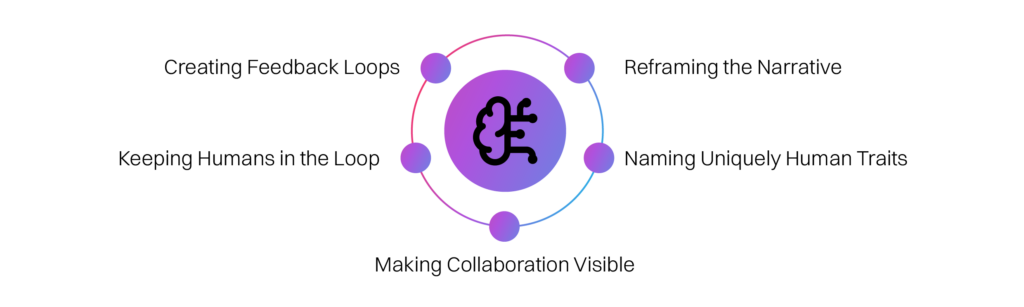

The companies that succeed don’t just deploy AI. They actively clarify what makes humans irreplaceable.

They reframe the narrative. Not “AI is taking tasks from you” but “AI is taking tedious work so you can focus on what humans do best.”

They name what’s uniquely human. Judgment. Creativity. Ethical reasoning. Relationship building. Strategic thinking. The stuff AI can’t replicate.

They make collaboration visible. They celebrate examples where humans + AI produced better outcomes than either could alone.

They keep humans in the loop. Lyzr’s entire philosophy: agents aren’t autonomous replacements. They’re copilots. Humans stay in control, making the calls that matter.

They create feedback loops between humans and agents. The best systems aren’t static. Humans improve agent performance by providing input. Agents surface insights that help humans improve. The relationship is reciprocal, not hierarchical.

The shift: Leadership in the agentic age is more emotionally demanding, not less. The challenge isn’t whether to care about people; it’s how to care about them while helping them see their irreplaceable value in a context that’s fundamentally different from anything that came before.

The ROI of Getting This Right

Let’s talk numbers, because CFOs care about this.

Companies implementing generative AI report average ROI of $3.70 per dollar invested. Top performers? $10.30 per dollar. (IDC’s 2024 AI opportunity study)

IBM found 66% of organizations report significant productivity gains:

- 55% in operational efficiency

- 50% in decision-making

- 48% in workforce augmentation

Over three years, AI leaders achieve:

- 1.5x higher revenue growth

- 1.6x greater shareholder returns

- 1.4x higher returns on invested capital

But here’s the critical insight:

The 26% of companies achieving substantial value focus 62% of AI investment on core business processes, not support functions. They pursue fewer opportunities but scale them relentlessly. They invest strategically, not broadly.

The data reveals their approach:

- They pursue half as many AI opportunities as their peers

- But they successfully scale more than twice as many products and services

- They expect and achieve twice the ROI of less advanced companies

- They focus efforts on people and processes (70%) over technology (20%) and algorithms (10%)

What separates winners from losers? Not the tech. The leadership.

The companies struggling (74% of them) are trying to fit AI into old management models. The companies winning? They’ve unlearned those models and built new ones.

Leading Into Symbiosis: The Leadership Mindset

So what does a leader in an agentic enterprise actually do?

They don’t manage tasks. They don’t micromanage agents. They don’t automate humans out of decision-making.

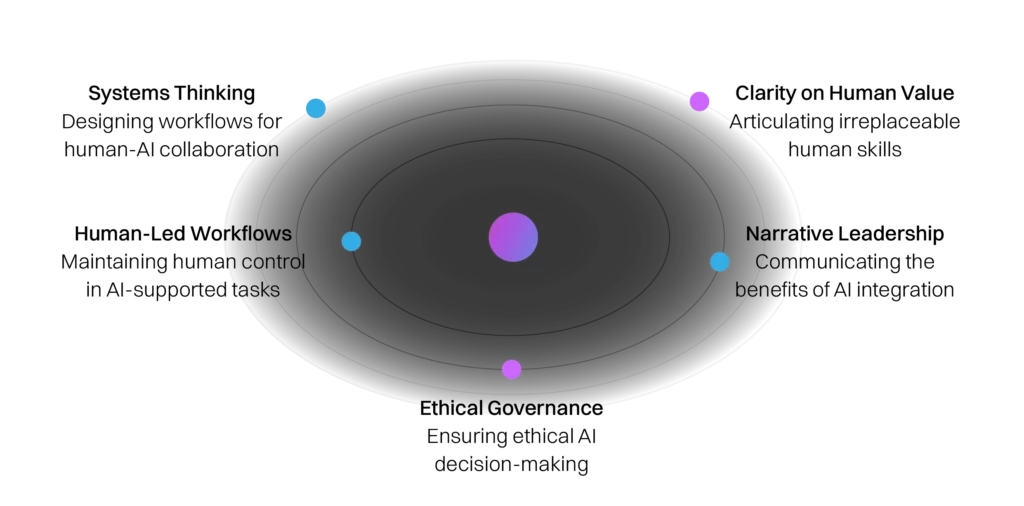

This mindset shows up in five capabilities:

1. Systems Thinking for Collaboration

They design workflows where agents and humans each contribute their strengths. They see the organization as a collaboration network, not a replacement pipeline. They think in terms of symbiosis, not substitution.

2. Clarity on Human Value

They can articulate exactly what makes their humans irreplaceable: judgment, creativity, ethical reasoning, strategic thinking, relationship building. They position AI to amplify these capabilities, not compete with them.

3. Comfort with Human-Led, AI-Supported Workflows

They design systems where agents surface insights and recommendations, but humans make the calls. They’re comfortable with AI doing groundwork, as long as human judgment guides the outcome. They know when to keep humans in the loop and when to let agents run.

4. Narrative Leadership Around Augmentation

In an environment of constant change, story becomes strategy. They tell the story that makes sense of the transition: “AI isn’t taking your job. It’s taking the tedious parts so you can focus on what requires you.” They help teams see purpose amid transformation.

5. Ethical Governance at Every Level

They ensure humans stay in the loop on decisions that matter. They build guardrails so agents support, not supplant, human authority. They make decisions at the boundary, the edge cases where rules break down and ethical reasoning becomes essential. This cannot be automated.

Scale is no longer the frontier. Symbiosis is: the value created when human judgment and machine capability amplify each other. Agents bring speed and scale; humans bring creativity, context, and ethics.

This is leadership as architecture. Not of buildings or org charts, but of intelligent systems designed for collaboration.

What Lyzr Believes

At Lyzr, we build the infrastructure that makes agentic enterprises possible. We enable organizations to design, deploy, and govern AI agents at scale.

Our entire philosophy is human-in-the-loop. Agents handle the repetitive, the computational, the high-volume work. Humans stay in control, making decisions, applying judgment, adding the context and creativity that machines can’t replicate.

The gap between possibility and reality is massive right now:

- 79% of leaders say they need AI to compete (Microsoft, 2024), but 60% admit they have no plan for implementation

- 90% of employees already use GenAI at work (Udacity, 2025), but leadership estimates that only 4% use it extensively

The problem isn’t access to technology. It’s the absence of frameworks for leading human-AI collaboration.

That’s what this paper offers. A mindset shift.

From managing people → to orchestrating human-AI collaboration

From building trust → to architecting trustworthy systems

From oversight → to strategic design

From replacement anxiety → to augmentation clarity

We believe the future belongs to leaders who can do three things:

- Unlearn the management models built for human-only teams

- Design systems where humans and AI make each other better

- Make crystal clear what makes humans irreplaceable, then build AI to amplify it

Leadership in the agentic age is about creating conditions for multiple forms of intelligence to coexist, collaborate, and compound. It’s about accepting that you cannot see everything, cannot approve everything, cannot be the bottleneck. It’s about learning to orchestrate rather than oversee.

The agentic age is here. The question isn’t whether to adapt.

It’s how fast you can unlearn what no longer works.

The agentic leader operates at the intersection of technical precision and human depth. They are equal parts systems architect and emotional anchor. They are engineers of collaboration and guardians of culture.

Because the goal isn’t to automate people out of the picture. It’s to free them to do the work that actually requires them.