Intelligence is moving out of the data center and into your pocket.

Edge AI Agents are intelligent software systems that run artificial intelligence algorithms directly on local devices.

Like your phone, your car’s sensors, or your smart home speakers.

They do this instead of sending data to the cloud.

The result is faster response times, beefed-up privacy, and the ability to work without a constant internet connection.

Imagine you have a smart local assistant living inside your device.

Not a remote consultant you have to call.

Instead of sending a question across town to an expert (the cloud) and waiting for a reply…

You have a knowledgeable helper right there with you.

One who can make decisions instantly, no phone calls needed.

This isn’t just a technical tweak.

It’s a fundamental shift in how we build and deploy intelligent systems.

Understanding this is critical for anyone concerned with data privacy, real-time decision-making, and creating truly autonomous technology.

What are Edge AI Agents?

They are specialized AI programs.

Designed to live and work on the “edge” of a network.

On the device itself.

Where the data is created.

This gives them the power to perceive, reason, and act locally.

Without phoning home to a central server for every little thought.

They are the brains on the ground, not in the cloud.

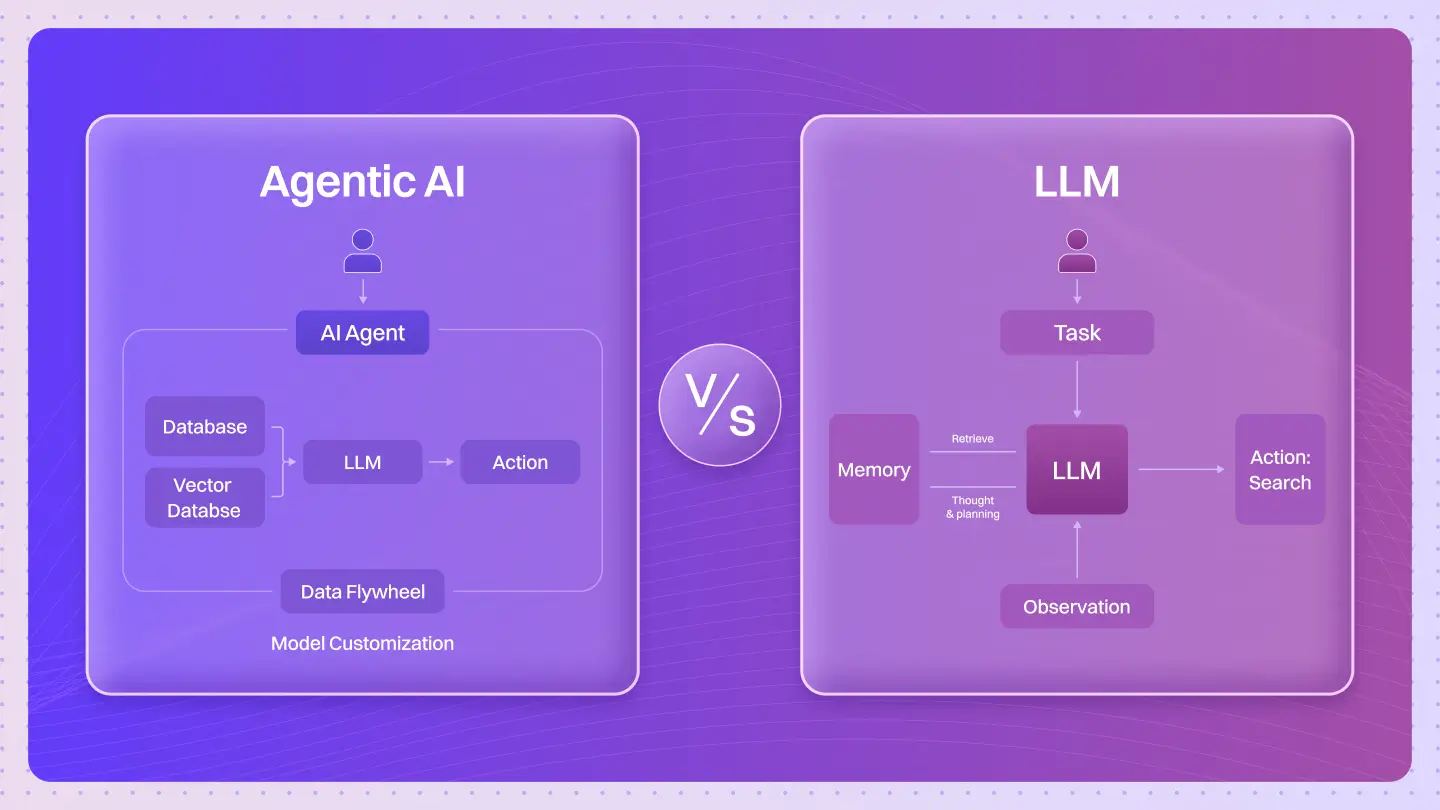

How do Edge AI Agents differ from cloud-based AI systems?

The difference is location, location, location.

And that changes everything.

A cloud-based AI system is a centralized brain.

Your device sends data to a massive, powerful server.

The server processes it.

The server sends the answer back.

This requires a stable, fast internet connection.

An Edge AI Agent is a decentralized brain.

The processing happens right on your device.

Your phone, your car, your factory sensor.

This creates three core differences:

- Connectivity: Cloud AI needs the internet. Edge AI Agents don’t. They can keep working offline, making them ideal for remote locations or situations with unreliable connections.

- Latency: Sending data to the cloud and back takes time. Milliseconds matter. For a car with driver assistance, like a Tesla, processing camera data locally for immediate decision-making is a non-negotiable safety feature. Edge AI eliminates that round-trip delay.

- Resources: Cloud AI uses massive data centers. Edge AI Agents use specialized, hyper-efficient algorithms designed to run on the limited processing power and memory of a small device.
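
To put the latency point in numbers, here is a rough sketch of how far a car travels while it waits for a decision. The 100 ms cloud round trip and 10 ms on-device time are assumptions picked for illustration, not measurements from any real system.

```python
def distance_during_delay(speed_kmh: float, delay_ms: float) -> float:
    """Metres travelled while waiting `delay_ms` for a decision."""
    speed_m_per_s = speed_kmh * 1000 / 3600
    return speed_m_per_s * (delay_ms / 1000)


# Hypothetical delays, chosen only to illustrate the gap.
CLOUD_ROUND_TRIP_MS = 100.0
ON_DEVICE_MS = 10.0

for label, delay in [("cloud", CLOUD_ROUND_TRIP_MS), ("edge", ON_DEVICE_MS)]:
    metres = distance_during_delay(100.0, delay)
    print(f"{label}: {metres:.2f} m travelled at 100 km/h")
```

Under these assumptions the car covers roughly 2.8 m before a cloud answer arrives, versus about 0.3 m for the on-device one.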

What are the key benefits of Edge AI Agents?

The advantages are direct and powerful.

- Speed (Low Latency): Decisions happen in real-time. There’s no delay from sending data to a remote server. This is critical for autonomous vehicles, industrial robotics, and augmented reality.

- Privacy & Security: Sensitive data stays on the device. Apple’s Face ID is a good example. It processes your facial data on your iPhone’s Neural Engine. Your biometric information never leaves your phone, so there is no copy on a server to intercept or breach.

- Reliability: The agent works even if the internet is down. A smart security camera from Bosch can still detect an intruder and sound an alarm locally, even during a network outage.

- Cost Efficiency: Constantly streaming data to the cloud costs money, both in bandwidth and in cloud computing fees. Processing locally can reduce these costs significantly.
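
The cost argument is easiest to see as back-of-the-envelope arithmetic. The sketch below compares a camera that streams video nonstop with one that analyzes frames locally and only uploads small event records. The 2 Mbps bitrate, 50 events per day, and 1 KB per event are hypothetical values, not figures from any vendor.

```python
SECONDS_PER_DAY = 24 * 60 * 60


def monthly_stream_gb(bitrate_mbps: float, days: int = 30) -> float:
    """Gigabytes uploaded by a continuous stream at `bitrate_mbps`."""
    bits = bitrate_mbps * 1_000_000 * SECONDS_PER_DAY * days
    return bits / 8 / 1_000_000_000


def monthly_events_gb(events_per_day: int, bytes_per_event: int, days: int = 30) -> float:
    """Gigabytes uploaded when only detected events leave the device."""
    return events_per_day * bytes_per_event * days / 1_000_000_000


# Hypothetical workload: a 2 Mbps stream vs. 50 one-kilobyte events a day.
print(f"streaming: {monthly_stream_gb(2.0):.1f} GB/month")
print(f"edge events: {monthly_events_gb(50, 1000):.4f} GB/month")
```

With these made-up numbers, streaming uploads 648 GB a month while the edge approach uploads about 0.0015 GB.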

What hardware is needed for Edge AI Agents?

You can’t just run a massive AI model on a tiny chip.

It requires specialized hardware designed for efficiency.

You’ll often find:

- NPUs (Neural Processing Units): Custom processors built specifically to accelerate AI and machine learning tasks. Google’s Pixel phones use these for on-device voice recognition.

- Edge GPUs (Graphics Processing Units): Smaller, power-efficient versions of the GPUs found in data centers, optimized for running AI models on devices like smart cameras or drones.

- Microcontrollers (MCUs): Tiny, low-power chips that can run extremely simple AI models for tasks like keyword spotting (“Hey, Google”) without draining the battery.

This specialized hardware is the physical foundation that makes high-performance Edge AI possible.

What are the main challenges of implementing Edge AI?

It’s not a magic bullet. There are significant engineering hurdles.

- Resource Constraints: Edge devices have limited memory, processing power, and battery life. This forces developers to make trade-offs between model accuracy and performance.

- Model Optimization: A model trained in the cloud might be gigabytes in size. Shrinking it to run on a device with megabytes of memory is a complex process.

- Deployment & Maintenance: How do you update the AI model on millions of devices already out in the world? Managing this fleet of distributed agents is a major logistical challenge.

- Hardware Diversity: The “edge” is not one thing. It’s thousands of different chips and devices, each with its own capabilities. Developing for this fragmented landscape is tough.
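
A quick way to see the resource and optimization problem is to estimate how much memory a model's weights need at different numeric precisions. This is a simplified sketch: it counts weights only and ignores activations and runtime overhead, and the 5-million-parameter model and 8 MB budget are hypothetical.

```python
BYTES_PER_PARAM = {"float32": 4, "float16": 2, "int8": 1}


def model_size_mb(num_params: int, dtype: str) -> float:
    """Approximate size of the weights alone, in megabytes."""
    return num_params * BYTES_PER_PARAM[dtype] / 1_000_000


def fits_on_device(num_params: int, dtype: str, memory_budget_mb: float) -> bool:
    """True if the weights fit inside the device's memory budget."""
    return model_size_mb(num_params, dtype) <= memory_budget_mb


# Hypothetical model and device, for illustration only.
PARAMS = 5_000_000
BUDGET_MB = 8.0

for dtype in BYTES_PER_PARAM:
    size = model_size_mb(PARAMS, dtype)
    verdict = "fits" if fits_on_device(PARAMS, dtype, BUDGET_MB) else "too big"
    print(f"{dtype}: {size:.1f} MB ({verdict})")
```

In this example the same model is 20 MB in float32 and 5 MB in int8, so only the int8 version fits the 8 MB budget.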

What industries benefit most from Edge AI Agents?

Any industry that needs fast, private, and reliable intelligence on-site.

- Automotive: Tesla uses Edge AI for real-time driving assistance, processing sensor data inside the car to react instantly to road conditions.

- Consumer Electronics: Your smartphone is a hotbed of Edge AI. From Apple’s Face ID to Google’s real-time translation, these features work because the processing is local.

- Manufacturing: Smart sensors on a factory floor can use Edge AI to predict when a machine will fail, processing vibration and temperature data locally without overwhelming the network.

- Healthcare: Wearable medical devices can monitor vital signs and use on-device AI to detect anomalies like an irregular heartbeat, providing immediate alerts without sending sensitive health data to the cloud.
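
To make the manufacturing and healthcare examples concrete, here is a minimal sketch of local anomaly detection on a stream of sensor readings. It uses a rolling z-score as a simple statistical stand-in for a trained model. The class name, window size, threshold, and sample heart-rate values are all assumptions for illustration.

```python
from collections import deque
from statistics import mean, pstdev


class RollingAnomalyDetector:
    """Flags readings that sit far from the recent baseline."""

    def __init__(self, window: int = 20, threshold: float = 3.0):
        self.readings = deque(maxlen=window)
        self.threshold = threshold

    def update(self, value: float) -> bool:
        """Return True if `value` deviates strongly from the recent window."""
        is_anomaly = False
        # Only judge new readings once the baseline window is full.
        if len(self.readings) == self.readings.maxlen:
            mu = mean(self.readings)
            sigma = pstdev(self.readings)
            if sigma > 0 and abs(value - mu) / sigma > self.threshold:
                is_anomaly = True
        if not is_anomaly:
            # Keep anomalies out of the baseline so they do not skew it.
            self.readings.append(value)
        return is_anomaly


# Hypothetical heart-rate samples in beats per minute.
detector = RollingAnomalyDetector(window=5, threshold=3.0)
for bpm in [70, 71, 69, 70, 72, 71, 120]:
    if detector.update(bpm):
        print(f"alert: unusual reading {bpm}")
```

Everything here runs on the device. Nothing is sent anywhere unless the application decides to forward the alert.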

How are Edge AI Agents trained and deployed?

It’s typically a two-stage process.

Stage 1: Training (in the Cloud)

A large, highly accurate AI model is trained on powerful servers in the cloud using massive datasets. This is where the core intelligence is developed.

Stage 2: Optimization & Deployment (to the Edge)

This “master model” is then compressed, pruned, and optimized to create a lightweight, efficient version.

This smaller model is then deployed to the edge devices.

It’s like training an Olympic athlete in a world-class facility, then giving them a streamlined set of instructions they can execute perfectly on their own.

What technical mechanisms make Edge AI Agents possible?

The core isn’t about general coding.

It’s about a specific set of tools and techniques for making AI small, fast, and efficient.

This relies on three key pillars:

- TinyML Frameworks: These are software libraries like TensorFlow Lite (now called LiteRT) and PyTorch Mobile (being succeeded by ExecuTorch). They provide the tools to convert large AI models into a format that can run on resource-constrained devices.

- Hardware Accelerators: These are the specialized chips described earlier (NPUs and edge GPUs, plus related designs such as TPUs) built to run AI calculations far more efficiently than a standard CPU. They do the heavy lifting.

- Model Compression Techniques: This is the secret sauce. Engineers use methods like quantization (using less precise numbers), pruning (removing unnecessary connections in the neural network), and knowledge distillation (training a small model to mimic a large one) to shrink the AI without losing too much accuracy.
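
Two of the compression techniques above, quantization and pruning, can be sketched in a few lines of plain Python. This is a toy version operating on a flat list of weights: it assumes symmetric per-tensor int8 quantization and simple magnitude pruning, and the function names are made up for this example. Real frameworks offer more sophisticated variants.

```python
def quantize_int8(weights):
    """Map floats to integers in [-127, 127] using one shared scale."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float weights from the int8 values."""
    return [q * scale for q in quantized]


def prune_by_magnitude(weights, sparsity):
    """Zero out the `sparsity` fraction of weights with the smallest magnitude."""
    k = int(len(weights) * sparsity)
    by_magnitude = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    to_zero = set(by_magnitude[:k])
    return [0.0 if i in to_zero else w for i, w in enumerate(weights)]


# Hypothetical weights, for illustration only.
weights = [0.5, -1.27, 0.01, 0.9]

q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
worst_error = max(abs(a - b) for a, b in zip(weights, restored))
print("int8 values:", q)
print(f"worst rounding error: {worst_error:.5f}")
print("pruned at 50%:", prune_by_magnitude(weights, 0.5))
```

Quantization trades a small rounding error (at most half the scale per weight) for a 4x smaller representation than float32. Pruning trades the smallest weights for zeros, which compress well and can be skipped at inference time.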

Quick Test: Can you spot the risk?

Scenario: You’re developing a new wearable health monitor for athletes that tracks heart rate and predicts potential cardiac events in real-time during a race. Races often take place in areas with poor cell service.

Which deployment model do you choose?

- A cloud-based AI system?

- An Edge AI Agent?

Why is one choice significantly better—and safer—than the other?

(The answer is Edge AI. Relying on the cloud would introduce life-threatening delays and fail completely without a network connection.)

Deep Dive: Your Edge AI Questions Answered

What are the privacy advantages of Edge AI Agents?

With on-device processing, your data can stay on your device unless you explicitly choose to send it. That keeps it from being intercepted in transit, stored on a third-party server, or used without your consent.

How much computing power do Edge AI Agents require?

It varies wildly. A simple keyword-spotting model might run on a tiny microcontroller with kilobytes of RAM. A complex computer vision model in a car requires a dedicated AI chip. It’s all about matching the model to the hardware.

Can Edge AI Agents work completely offline?

Yes. This is one of their primary strengths. Once the model is deployed on the device, it can perform its inference tasks indefinitely without any internet connection.

What is the relationship between 5G and Edge AI?

They are complementary. While Edge AI handles instant, on-device tasks, 5G provides a high-bandwidth, low-latency connection for when the device does need to communicate with the cloud or other edge devices—for example, to get a model update or offload a task too complex for its local hardware.
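
One way to picture that division of labor is a small routing policy: run the task locally when the model fits, and offload only when it does not and the network is fast enough. This is a hypothetical sketch. The `Task` fields, the `choose_target` function, and the numbers are assumptions, not part of any real 5G or edge API.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Task:
    name: str
    model_size_mb: float  # memory the model needs
    deadline_ms: float    # how long the caller can wait for an answer


def choose_target(task: Task, device_memory_mb: float,
                  network_rtt_ms: Optional[float]) -> str:
    """Pick where to run a task. `network_rtt_ms` is None when offline."""
    if task.model_size_mb <= device_memory_mb:
        return "edge"  # local hardware can handle it
    if network_rtt_ms is not None and network_rtt_ms <= task.deadline_ms:
        return "cloud"  # too big locally, but the link is fast enough
    return "reject"  # cannot meet the deadline anywhere


# Hypothetical tasks on a device with 8 MB available for models.
wake_word = Task("wake-word", model_size_mb=0.5, deadline_ms=50)
scene_caption = Task("scene-caption", model_size_mb=400, deadline_ms=300)

print(choose_target(wake_word, 8.0, network_rtt_ms=None))
print(choose_target(scene_caption, 8.0, network_rtt_ms=40))
print(choose_target(scene_caption, 8.0, network_rtt_ms=None))
```

A real policy would also weigh battery level, privacy rules, and the cloud's own processing time, which this sketch leaves out.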

How do TinyML and Edge AI Agents relate to each other?

TinyML is a subfield of Edge AI focused on the most extreme edge: running machine learning on tiny, low-power microcontrollers. Think of Edge AI as the whole category, and TinyML as the specialization for the smallest, most resource-constrained devices.

What are the energy consumption implications of Edge AI?

It’s a double-edged sword. Running AI on-device consumes local battery power. But it saves the energy that would have been used to transmit data over a network. The goal of Edge AI hardware and software is to make on-device processing incredibly power-efficient.

How do Edge AI Agents handle model updates and improvements?

This is a key challenge. Updates are typically pushed “over-the-air” (OTA). The device downloads the new, improved model and replaces the old one. Companies need robust systems to manage these updates across millions of devices securely.
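
The device-side half of an OTA update can be sketched as "verify, then swap." The hypothetical function below checks the downloaded bytes against an expected SHA-256 hash and only then replaces the old model file in one atomic step. It assumes the hash arrives over a trusted channel. A production system would typically also verify a cryptographic signature and keep a rollback copy.

```python
import hashlib
import os
import tempfile


def install_model_update(payload: bytes, expected_sha256: str, model_path: str) -> bool:
    """Replace the model file only if the payload matches the expected hash."""
    actual = hashlib.sha256(payload).hexdigest()
    if actual != expected_sha256:
        return False  # corrupted or tampered download: keep the old model

    # Write to a temp file in the same directory so the final rename is atomic.
    directory = os.path.dirname(os.path.abspath(model_path))
    fd, tmp_path = tempfile.mkstemp(dir=directory)
    try:
        with os.fdopen(fd, "wb") as tmp:
            tmp.write(payload)
            tmp.flush()
            os.fsync(tmp.fileno())
        os.replace(tmp_path, model_path)  # old model is swapped out in one step
    except BaseException:
        if os.path.exists(tmp_path):
            os.unlink(tmp_path)  # clean up the partial file on failure
        raise
    return True
```

Because the swap happens through `os.replace`, a device that loses power mid-update is left with either the old model or the new one, never a half-written file.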

What security concerns exist with Edge AI Agents?

If a physical device is compromised, an attacker could potentially access or tamper with the AI model on it. Securing edge devices against physical attacks is a critical concern, especially for applications in security and autonomous systems.

How do Edge AI Agents balance accuracy versus efficiency?

This is the central trade-off. A larger, more complex model is usually more accurate but requires more power and processing. A smaller model is more efficient but might be less accurate. Engineers spend a lot of time finding the “sweet spot” for each specific application.
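
Finding that sweet spot can be framed as a constrained choice: among the model variants that fit the device's memory and latency limits, take the most accurate one. The sketch below assumes you have already measured each variant. The candidate names and numbers are invented for illustration.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Candidate:
    name: str
    accuracy: float    # fraction correct on a validation set
    size_mb: float     # memory footprint on the device
    latency_ms: float  # measured inference time on the target chip


def pick_model(candidates: List[Candidate], max_size_mb: float,
               max_latency_ms: float) -> Optional[Candidate]:
    """Return the most accurate candidate that meets both limits, if any."""
    feasible = [c for c in candidates
                if c.size_mb <= max_size_mb and c.latency_ms <= max_latency_ms]
    return max(feasible, key=lambda c: c.accuracy, default=None)


# Hypothetical variants of the same model at different compression levels.
variants = [
    Candidate("full-float32", accuracy=0.95, size_mb=20.0, latency_ms=120.0),
    Candidate("int8", accuracy=0.93, size_mb=5.0, latency_ms=35.0),
    Candidate("int8-pruned", accuracy=0.90, size_mb=2.5, latency_ms=20.0),
]

choice = pick_model(variants, max_size_mb=8.0, max_latency_ms=50.0)
print(choice.name if choice else "no variant fits")
```

With an 8 MB and 50 ms budget, the full model is ruled out and the int8 variant wins on accuracy. Tighten the latency limit to 25 ms and the pruned variant becomes the only option.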

What’s the difference between edge computing and Edge AI Agents?

Edge computing is the broader concept of moving any kind of computation (not just AI) away from the cloud and closer to the user. Edge AI is a specific application of edge computing that focuses on running AI models and agentic workflows locally. All Edge AI is a form of edge computing, but not all edge computing involves AI.

The future of AI is not just in a distant, centralized cloud.

It’s becoming decentralized, embedded in the fabric of our environment.

Edge AI Agents are turning our devices from simple data collectors into intelligent, autonomous partners.