An AI model on a developer’s laptop is a trophy.

An AI model in production is a tool.

Model serving is the process of making trained AI models available for use in real-world applications, allowing users and systems to interact with the model and receive predictions or responses in real time.

Think of it like this.

A trained AI model is a brilliant chef who has perfected a complex recipe in a test kitchen.

Model serving is the entire restaurant built around that chef.

It’s the wait staff taking orders (API requests), the kitchen infrastructure keeping things running smoothly, and the system that delivers the finished dish (the prediction) to the customer’s table, fast and hot.

The customer never sees the complex kitchen chaos; they just get their meal.

Without serving, AI is just academic research.

With it, AI becomes a product, a service, and a core business function.

—

What is model serving in AI?

It’s the final, critical step in the machine learning lifecycle.

The deployment phase.

The moment a model stops being a static file and becomes an active, operational service.

Model serving takes a trained model—whether it’s for language understanding, image recognition, or financial forecasting—and puts it into a live environment.

This usually means wrapping it in an API.

An application, a website, or another system can then send new, unseen data to this API endpoint.

The serving infrastructure routes this data to the model.

The model performs its calculation—this is called “inference.”

And the result, the prediction, is sent back.
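To make that request, inference, response loop concrete, here is a minimal sketch using only Python's standard library. The `/predict` path, the `{"features": [...]}` payload shape, and the linear `predict()` function are hypothetical stand-ins for a real model and a real serving stack. A production inference server adds batching, concurrency, validation, and GPU management on top of this same basic loop.

```python
import json
import threading
import urllib.request
from http.server import BaseHTTPRequestHandler, HTTPServer


def predict(features):
    """Hypothetical stand-in for a trained model: a fixed linear scorer."""
    weights = [0.5, -0.25, 2.0]
    return sum(w * x for w, x in zip(weights, features))


class PredictHandler(BaseHTTPRequestHandler):
    def do_POST(self):
        if self.path != "/predict":
            self.send_error(404)
            return
        # 1. Read the JSON request body sent by the application.
        length = int(self.headers.get("Content-Length", 0))
        payload = json.loads(self.rfile.read(length))
        # 2. Route the input to the model and run inference.
        prediction = predict(payload["features"])
        # 3. Send the prediction back as a JSON response.
        body = json.dumps({"prediction": prediction}).encode()
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args):
        pass  # silence the default per-request logging for this demo


def start_server(port=0):
    """Serve in a background thread; port 0 lets the OS pick a free port."""
    server = HTTPServer(("127.0.0.1", port), PredictHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server


def query(server, features):
    """Client side: what an application does to get a prediction."""
    url = f"http://127.0.0.1:{server.server_port}/predict"
    request = urllib.request.Request(
        url,
        data=json.dumps({"features": features}).encode(),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    # Bypass any proxy settings, since this demo talks to localhost.
    opener = urllib.request.build_opener(urllib.request.ProxyHandler({}))
    with opener.open(request) as response:
        return json.loads(response.read())
```

Calling `query(start_server(), [2.0, 4.0, 1.0])` returns `{"prediction": 2.0}`. The application never imports the model. It only knows the URL and the JSON shape.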

Netflix suggesting your next show?

That’s a recommendation model being served.

Uber predicting your arrival time?

That’s a model being served.

Anthropic’s Claude answering your question?

That’s a massive language model being served, handling immense load while replying at conversational speed.

How does model serving differ from model training?

They are two completely different worlds with different goals.

Don’t confuse them.

Model Training

This is the “school” phase.

It’s about creating and teaching the model.

You feed it huge amounts of historical data.

It’s computationally intensive, can take hours or even weeks, and happens offline.

The goal is a single output: a highly accurate, trained model file.

Model Serving

This is the “job” phase.

It’s about using the trained model in the real world.

It handles live, incoming data, one request at a time or in small batches.

It’s all about speed, reliability, and efficiency.

The goal is to serve millions of predictions with low latency and high availability.

Training builds the brain.

Serving lets that brain think and talk to the world, instantly and reliably.
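The split can be sketched in a few lines of Python. This is a toy, assuming a one-variable linear model trained by plain gradient descent and saved as JSON; real models use framework-specific formats. The point is the shape of the two phases: training loops over historical data many times and emits a file, while serving loads that file once and answers each request with a single cheap computation.

```python
import json
import os
import tempfile


def train(xs, ys, epochs=5000, lr=0.01):
    """Offline 'school' phase: fit y = w*x + b to historical data by gradient descent."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        # Gradients of the mean squared error with respect to w and b.
        grad_w = sum(2 * (w * x + b - y) * x for x, y in zip(xs, ys)) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in zip(xs, ys)) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return {"w": w, "b": b}


def save_model(params, path):
    """Training's single deliverable: a model file (plain JSON in this sketch)."""
    with open(path, "w") as f:
        json.dump(params, f)


class ServedModel:
    """Online 'job' phase: load the file once at startup, then answer requests."""

    def __init__(self, path):
        with open(path) as f:
            self.params = json.load(f)

    def predict(self, x):
        # Inference is one forward computation: no gradients, no pass over the data.
        return self.params["w"] * x + self.params["b"]


if __name__ == "__main__":
    path = os.path.join(tempfile.mkdtemp(), "model.json")
    save_model(train([0, 1, 2, 3, 4], [1, 3, 5, 7, 9]), path)  # data follows y = 2x + 1
    model = ServedModel(path)
    print(model.predict(10))  # approximately 21
```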

What are the key components of a model serving architecture?

A robust model serving system isn’t just a script running a model.

It’s a collection of specialized components working in concert.

- API Endpoint: This is the front door. It’s a URL that applications use to send data (requests) and receive predictions (responses).

- Load Balancer: The traffic cop. If you have thousands of requests coming in per second, the load balancer distributes them across multiple copies of your model to prevent any single one from getting overwhelmed.

- Inference Server: The engine room. This is the specialized software that actually runs the model. It’s optimized for taking in data and getting a prediction out as fast as possible.

- Model Repository: A library or storage system where different versions of your trained models are kept. This allows you to easily switch between models or roll back to a previous version if something goes wrong.

- Monitoring & Logging: The quality control team. These tools watch everything—how fast are predictions? Are there errors? Is the model’s accuracy degrading? This is crucial for maintaining a healthy system.
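How these pieces fit together can be shown in a toy, in-process sketch. All class names here are hypothetical and the HTTP layer is left out. A model repository holds versions and supports rollback, inference-server replicas run whichever version is live, a round-robin load balancer spreads requests across them, and a latency log stands in for monitoring.

```python
import itertools
import time


class ModelRepository:
    """Stores model versions and tracks which one is live."""

    def __init__(self):
        self.versions = {}
        self.history = []  # deployment order, newest last

    def register(self, version, model):
        self.versions[version] = model

    def deploy(self, version):
        self.history.append(version)

    def rollback(self):
        """Go back to the previously deployed version."""
        if len(self.history) < 2:
            raise RuntimeError("no earlier version to roll back to")
        self.history.pop()

    def live(self):
        return self.versions[self.history[-1]]


class InferenceServer:
    """One replica: runs whatever model the repository marks as live."""

    def __init__(self, name, repo):
        self.name = name
        self.repo = repo

    def infer(self, x):
        return self.repo.live()(x)


class LoadBalancer:
    """Round-robin: each request goes to the next replica in turn."""

    def __init__(self, replicas):
        self._cycle = itertools.cycle(replicas)
        self.latencies_ms = []  # monitoring: one latency entry per request

    def handle(self, x):
        replica = next(self._cycle)
        start = time.perf_counter()
        result = replica.infer(x)
        self.latencies_ms.append((time.perf_counter() - start) * 1000)
        return replica.name, result
```

With versions `"v1"` and `"v2"` registered and both deployed, requests alternate between replicas and use v2. After `rollback()`, every replica serves v1 again without being restarted.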

What are the main challenges in model serving?

Getting a model into production is where the real engineering challenges begin.

Latency: How fast can the model respond? For a user-facing application like a chatbot, anything more than a few hundred milliseconds before a response starts to appear feels slow. This is a constant battle.

Throughput: How many requests can the system handle per second? A system that works for 10 users might collapse under the weight of 10,000.
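One standard way to estimate capacity is Little's law: the average number of requests in flight equals the arrival rate times the time each request spends in the system. The request rates, the 50 ms latency, and the "8 concurrent requests per replica" figure below are hypothetical inputs for illustration, and the `headroom` factor is an assumed safety margin.

```python
import math


def replicas_needed(requests_per_sec, latency_ms, concurrency_per_replica, headroom=1.0):
    """Capacity estimate via Little's law: in-flight requests = arrival rate x latency."""
    in_flight = requests_per_sec * latency_ms / 1000  # average requests being processed at once
    return math.ceil(in_flight * headroom / concurrency_per_replica)


print(replicas_needed(10, 50, 8))           # 1
print(replicas_needed(10_000, 50, 8))       # 63
print(replicas_needed(10_000, 50, 8, 1.3))  # 82
```

The same model and latency need one replica at 10 requests per second and dozens at 10,000. Any latency regression multiplies straight into the replica count.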

Scalability: What happens during a traffic spike? The architecture must be able to automatically add more resources (scale out) to handle the load and then remove them when the spike is over (scale in) to control costs.

Cost: Running high-performance GPUs and CPUs 24/7 is incredibly expensive. A huge part of model serving is about resource optimization—getting the most performance for the lowest cost.

Model Drift: The real world changes. Data patterns shift. A model trained on last year’s data might not perform well on this year’s. The serving system needs to monitor for this “drift” and have a process for retraining and redeploying updated models.
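Drift monitoring needs a number to alert on. The Population Stability Index (PSI) is one common choice for a single numeric feature; the article doesn't prescribe a specific statistic, so treat this as one option. It compares how a reference sample (for example, training data) and live traffic are distributed across bins. A commonly quoted rule of thumb treats PSI above about 0.25 as a significant shift. The bin count and the `eps` floor below are assumptions.

```python
import math


def psi(expected, actual, bins=10, eps=1e-6):
    """Population Stability Index between a reference sample and live data for one feature."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # guard against a constant reference feature

    def proportions(values):
        counts = [0] * bins
        for v in values:
            # Clamp so live values outside the reference range land in the edge bins.
            i = min(max(int((v - lo) / width), 0), bins - 1)
            counts[i] += 1
        # Floor at eps so empty bins don't cause log(0) or division by zero.
        return [max(c / len(values), eps) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))
```

Comparing the reference data with itself gives 0. Shifting every live value well past the training range drives PSI far above 0.25, which is the kind of signal that should trigger retraining.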

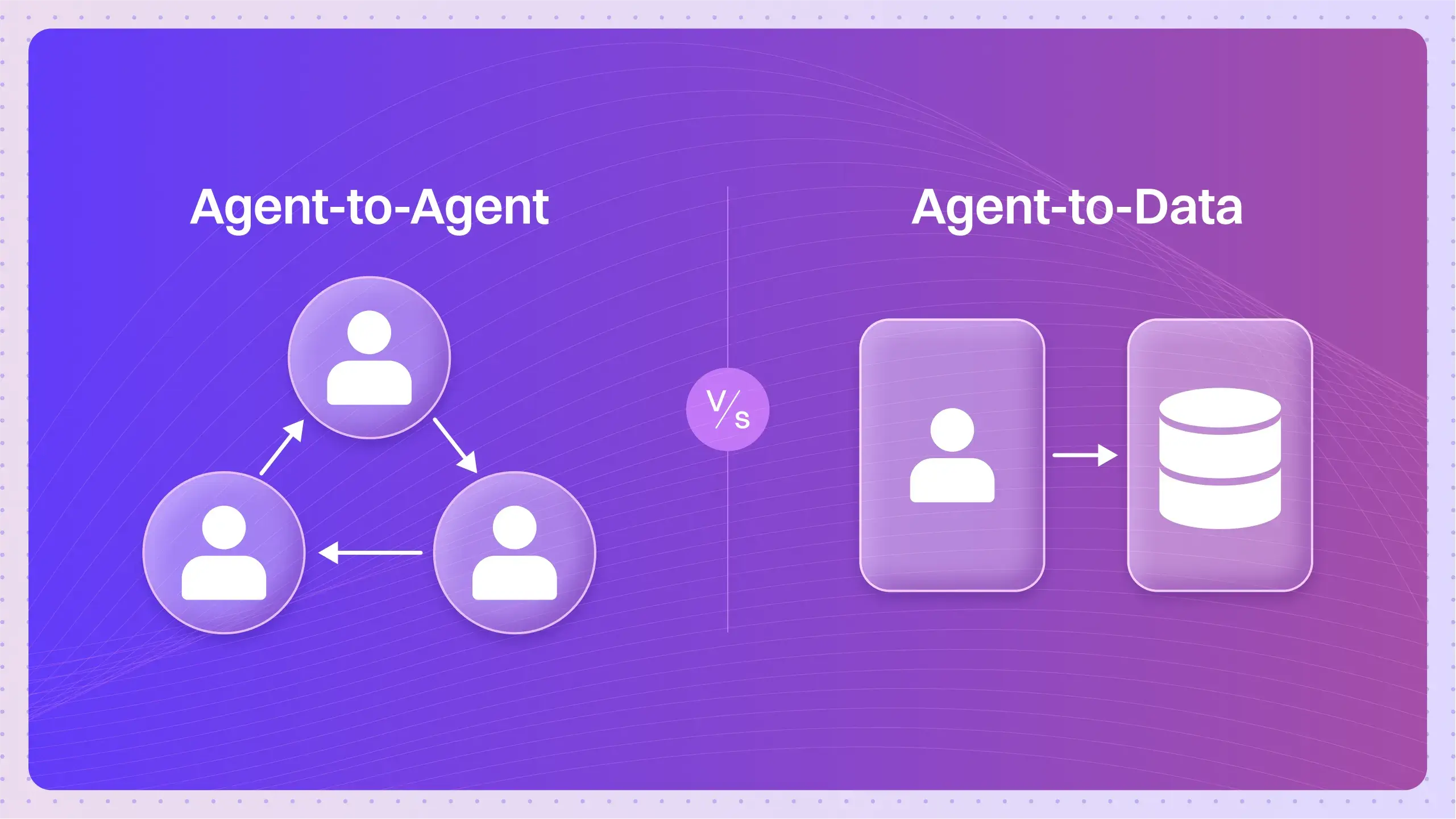

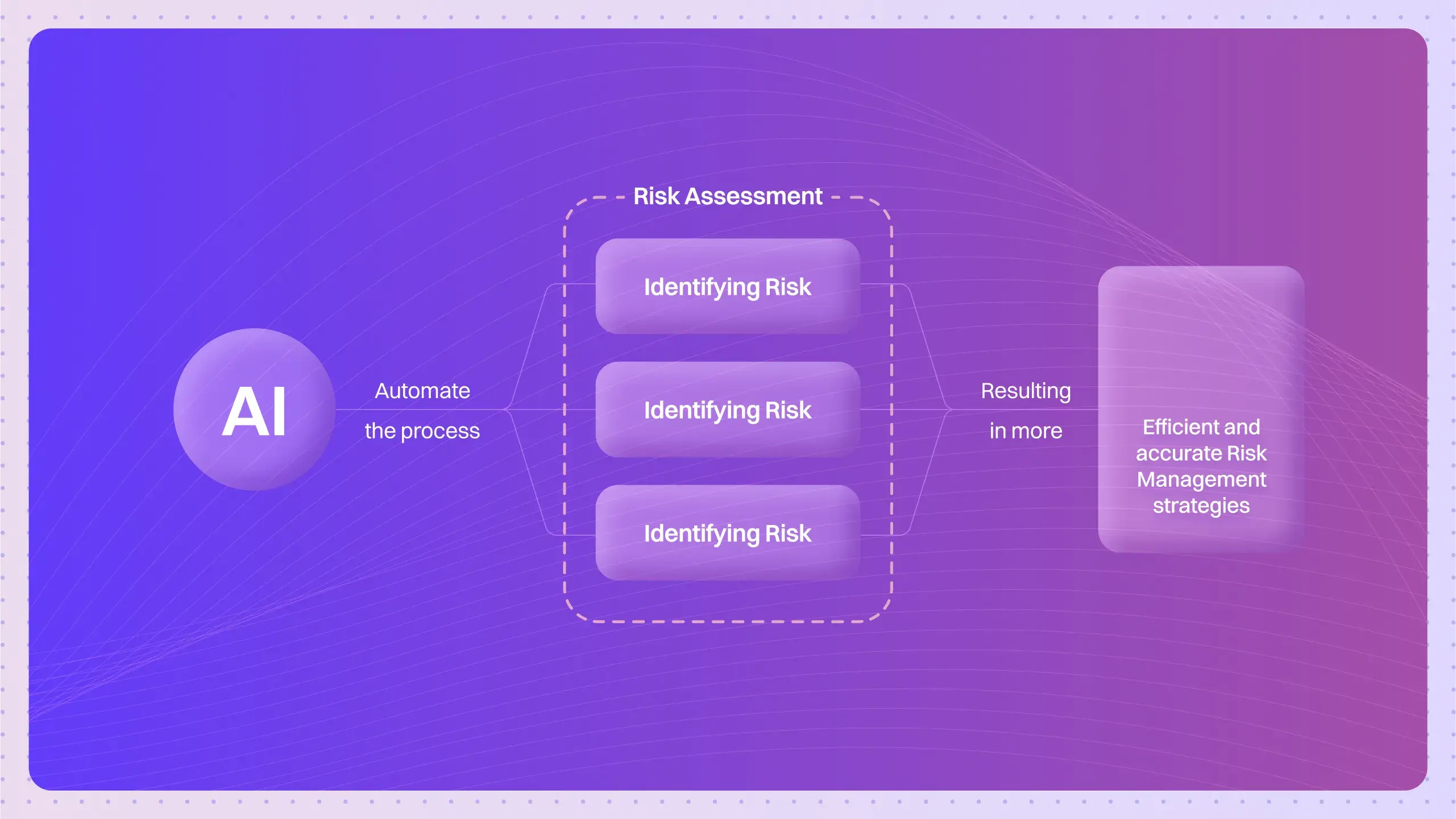

How does model serving work with AI agents?

This is where things get really interesting.

An AI agent is more than just a model; it’s a system that perceives its environment, makes decisions, and takes actions using tools.

Model serving provides the core “brain” for the agent.

The agent’s reasoning loop—the code that decides what to do next—doesn’t contain the massive model itself.

Instead, it makes an API call to a served model.

For example, a customer service agent might:

- Receive a user’s question.

- Send that text to a served LLM endpoint (the “brain”) to understand the user’s intent.

- Receive the intent the model serving system returns, like “wants_refund.”

- Use that intent to call the right tool, like a `process_refund()` function.

This makes agents incredibly modular and powerful. You can update or completely swap out the underlying AI model without ever touching the agent’s core logic, as long as the endpoint keeps the same input and output format. The agent just continues talking to the API endpoint, unaware that the “brain” behind it has been upgraded.
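Here is a minimal sketch of that loop. Only `process_refund` comes from the example above, and its signature here is assumed. The keyword-based "models" are hypothetical stand-ins for a served LLM endpoint, which in practice would be an HTTP call. The agent depends only on the contract "text in, intent label out," so swapping `brain` requires no change to `run_agent`.

```python
from typing import Callable

# The only thing the agent knows about its "brain": text in, intent label out.
IntentEndpoint = Callable[[str], str]


def intent_model_v1(text: str) -> str:
    """Hypothetical stand-in for a served model endpoint."""
    return "wants_refund" if "refund" in text.lower() else "other"


def intent_model_v2(text: str) -> str:
    """Hypothetical upgraded model that honors the same contract."""
    lowered = text.lower()
    if any(phrase in lowered for phrase in ("refund", "money back", "return")):
        return "wants_refund"
    return "other"


def process_refund(text: str) -> str:
    return "Refund started."


def escalate_to_human(text: str) -> str:
    return "Connecting you with a human agent."


TOOLS = {"wants_refund": process_refund}


def run_agent(question: str, brain: IntentEndpoint) -> str:
    intent = brain(question)                     # 1. ask the served model
    tool = TOOLS.get(intent, escalate_to_human)  # 2. decide what to do
    return tool(question)                        # 3. act
```

With `intent_model_v1`, "Can I get my money back?" escalates to a human. Pass `intent_model_v2` instead and the same agent code starts the refund.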

—

What technical frameworks are used for model serving?

You don’t just run `python my_model.py` on a server and hope for the best.

The industry uses specialized, high-performance tools.

The core isn’t about general coding; it’s about robust evaluation harnesses and serving frameworks.

- Framework-Specific Servers: Tools like TorchServe (for PyTorch) and TensorFlow Serving are built by the frameworks’ creators. They offer deep integration and are optimized for deploying models from their specific ecosystem.

- Kubernetes-based Orchestration: For large-scale, complex deployments, teams use platforms like KServe or Seldon Core, which run on top of Kubernetes. These manage the entire lifecycle: scaling, routing, versioning, and canary deployments.

- High-Performance Model Servers: Tools like NVIDIA’s Triton Inference Server, BentoML, or Ray Serve are built for one thing: raw speed and efficiency. They can often handle models from multiple frameworks (TensorFlow, PyTorch, ONNX) and are masters of optimizing GPU and CPU usage to maximize throughput and minimize latency.

Quick Test: Connect the Tech to the Task

Can you match the scenario to the most likely tool?

- Scenario A: A data science team is all-in on PyTorch and needs a straightforward way to get their first model into production.

- Scenario B: A global company needs to serve a mix of TensorFlow and XGBoost models, A/B test new versions, and manage it all within their existing Kubernetes infrastructure.

- Scenario C: An AI startup is building a service that requires the absolute lowest possible latency and needs to squeeze every ounce of performance from their expensive GPUs.

(Answers: A-TorchServe, B-KServe/Seldon, C-Triton Inference Server/BentoML)

—

Deep Dive FAQs

What is the difference between batch inference and online serving?

Online serving (or real-time inference) is about immediate responses. One request comes in, one prediction goes out, right now. It’s for interactive applications like chatbots or fraud detection systems. Batch inference is about processing a large amount of data at once, on a schedule. There’s no user waiting. Think of generating a daily sales forecast report or categorizing a million images overnight.
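The same model can back both modes; only the calling pattern changes. In this sketch, `predict_batch` is a hypothetical model that scores many rows per call, standing in for a vectorized forward pass. Online serving wraps a single request into a batch of one, while the batch job walks a whole dataset in chunks. The chunk size of 1,000 is an arbitrary assumption.

```python
def predict_batch(rows):
    """Hypothetical model that scores many rows in one call
    (here: the mean of each row's features)."""
    return [sum(row) / len(row) for row in rows]


def serve_online(request):
    """Online serving: one request in, one prediction out, while the caller waits."""
    return {"prediction": predict_batch([request["features"]])[0]}


def run_batch_job(rows, chunk_size=1000):
    """Batch inference: score a whole dataset on a schedule, chunk by chunk.
    No user is waiting, so large chunks favor throughput over latency."""
    predictions = []
    for start in range(0, len(rows), chunk_size):
        predictions.extend(predict_batch(rows[start:start + chunk_size]))
    return predictions
```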

How do you handle version control in model serving?

You use a model registry to store and version your models (e.g., v1.0, v1.1). When deploying a new version, you don’t just switch it over. You use strategies like canary deployments (sending a small fraction of traffic to the new model) or A/B testing (splitting traffic between the old and new models and comparing results) to validate performance before the new version takes all traffic.
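A canary split can be as simple as hashing a stable identifier into 100 buckets. This is a sketch, assuming traffic is keyed by a user ID. Hashing, rather than picking at random per request, keeps each user on the same version, so their experience stays consistent while the canary runs. The version labels match the example above; everything else is illustrative.

```python
import hashlib


def route(user_id: str, canary_percent: float) -> str:
    """Send roughly canary_percent of users to the new version, deterministically."""
    digest = hashlib.sha256(user_id.encode()).digest()
    bucket = int.from_bytes(digest[:8], "big") % 100  # stable bucket in 0..99
    return "v1.1" if bucket < canary_percent else "v1.0"
```

Rolling out becomes a matter of raising `canary_percent` (for example 5, then 25, then 100) while watching the new version's metrics. Dropping it to 0 is the rollback.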

What are the latency considerations for model serving?

Every millisecond counts. Latency is affected by the model’s size and complexity, the hardware it’s running on (GPU vs. CPU), the efficiency of the inference server, and network overhead. Optimizing latency is a deep engineering discipline.
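Latency is usually tracked as percentiles rather than averages, because the slow tail is what users notice. This sketch times a function over a set of inputs and computes nearest-rank percentiles. The sample numbers in the note below are made up for illustration.

```python
import math
import time


def measure_latency_ms(fn, inputs):
    """Time each call individually, in milliseconds."""
    latencies = []
    for x in inputs:
        start = time.perf_counter()
        fn(x)
        latencies.append((time.perf_counter() - start) * 1000)
    return latencies


def percentile(samples, p):
    """Nearest-rank percentile, p in [0, 100]."""
    ordered = sorted(samples)
    rank = math.ceil(p * len(ordered) / 100)
    return ordered[max(rank, 1) - 1]
```

For 95 requests at 10 ms and 5 at 500 ms, the mean is 34.5 ms, which sounds fine. The p50 is 10 ms, but the p99 is 500 ms: one in twenty users waits half a second.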

How does model serving scale with increasing request loads?

Modern systems use autoscaling. They monitor the request load and automatically add or remove copies of the model (horizontal scaling) to match demand. This ensures performance during traffic spikes and saves money during quiet periods.
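The core scaling decision can be a single proportional rule. This sketch is modeled on the formula in the Kubernetes Horizontal Pod Autoscaler documentation, `desired = ceil(current * currentMetric / targetMetric)`, including its default 10% tolerance. The min/max bounds and the "requests per second per replica" metric are assumptions for illustration.

```python
import math


def desired_replicas(current, metric, target, min_r=1, max_r=20, tolerance=0.1):
    """Proportional autoscaling: scale the replica count by metric / target."""
    ratio = metric / target
    if abs(ratio - 1.0) <= tolerance:
        return current  # close enough to target: avoid flapping
    return max(min_r, min(max_r, math.ceil(current * ratio)))
```

With 4 replicas each seeing 150 requests/s against a target of 100, the rule asks for 6. A spike to 1,000 hits the cap of 20. A quiet period at 20 scales down to 1, which is where the cost savings come from.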

What are model serving APIs and how do they work?

They are standard network interfaces (like REST or gRPC) that expose the model’s functionality. An application sends a request with input data in an agreed format (JSON is typical for REST; gRPC uses Protocol Buffers), and the API returns the model’s prediction in the same kind of format. This decouples the application from the AI model, treating the model like any other microservice.

How do you monitor model serving performance in production?

You monitor two categories of metrics. System metrics like CPU/GPU utilization, memory usage, and request latency tell you about the health of the infrastructure. Model metrics track the quality of the predictions themselves, looking for concept drift, data drift, and drops in accuracy over time.
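The model-metrics side often comes down to comparing predictions with ground-truth labels once those labels arrive. This is a minimal sketch of a rolling-accuracy monitor. The window size and the alert threshold are hypothetical values to tune per application.

```python
from collections import deque


class AccuracyMonitor:
    """Rolling accuracy over the most recent predictions whose true labels are known."""

    def __init__(self, window=500, alert_below=0.9):
        self.outcomes = deque(maxlen=window)  # oldest results fall off automatically
        self.alert_below = alert_below

    def record(self, prediction, actual):
        self.outcomes.append(prediction == actual)

    def accuracy(self):
        return sum(self.outcomes) / len(self.outcomes) if self.outcomes else None

    def should_alert(self):
        acc = self.accuracy()
        return acc is not None and acc < self.alert_below
```

System metrics such as GPU utilization and latency come from the infrastructure. A check like this one is what catches a model that is fast and healthy but increasingly wrong.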

What security considerations are important for model serving?

The API endpoint must be secured with authentication and authorization to prevent unauthorized use. The model itself is valuable intellectual property and needs to be protected from being stolen. You also need to guard against adversarial attacks, where bad actors send specifically crafted inputs to fool the model.
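Authentication (who is calling?) and authorization (what may they do?) are separate checks. This is a minimal sketch with a hypothetical in-code key store. Real deployments keep keys in a secrets manager and usually sit behind a gateway. It uses `hmac.compare_digest` so that comparison time doesn't reveal how much of a guessed key was correct.

```python
import hmac
from typing import Optional

# Hypothetical key store mapping API keys to the actions they may perform.
# Demo only: real keys belong in a secrets manager, not in source code.
API_KEYS = {
    "demo-key-analytics": {"predict"},
    "demo-key-admin": {"predict", "deploy"},
}


def authorize(api_key: Optional[str], action: str) -> int:
    """Return an HTTP-style status: 401 (unknown caller), 403 (not allowed), 200 (ok)."""
    if not api_key:
        return 401  # authentication: no credentials supplied
    for known_key, scopes in API_KEYS.items():
        # Constant-time comparison: timing doesn't reveal where the strings differ.
        if hmac.compare_digest(api_key.encode(), known_key.encode()):
            return 200 if action in scopes else 403  # authorization check
    return 401  # authentication: unrecognized key
```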

How can model serving architecture affect AI system costs?

Dramatically. The choice between using CPUs vs. GPUs, using a serverless platform vs. dedicated servers, and implementing effective autoscaling can mean the difference between a sustainable service and a money pit. Efficient serving is as much about financial management as it is about engineering.
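A back-of-the-envelope comparison shows why. The hourly prices and throughputs below are invented for illustration, not real cloud prices. The formula simply divides what an always-on instance costs per hour by how many predictions it actually serves in that hour.

```python
def cost_per_million(hourly_price_usd, predictions_per_sec, utilization=1.0):
    """Dollars per one million predictions for one always-on instance."""
    predictions_per_hour = predictions_per_sec * 3600 * utilization
    return hourly_price_usd / predictions_per_hour * 1_000_000


# Hypothetical numbers: a $0.50/h CPU box at 50 predictions/s,
# versus a $4.00/h GPU box at 1,000 predictions/s.
cpu = cost_per_million(0.50, 50)         # about $2.78 per million
gpu_busy = cost_per_million(4.00, 1000)  # about $1.11 per million
gpu_idle = cost_per_million(4.00, 1000, utilization=0.1)  # about $11.11 per million
```

Under these assumptions the GPU is the cheaper option when it is kept busy and the most expensive one when it mostly sits idle. That is exactly the gap autoscaling and batching are meant to close.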

—

Model serving is the invisible, industrial-strength backbone of modern AI.

It’s the engineering discipline that turns potential into reality, moving AI from the lab into the hands of billions.

Did I miss a crucial point? Have a better analogy to make this stick? Let me know.