An ML model stuck in a notebook is just a science experiment.

An MLOps Pipeline is a set of automated processes and practices that streamline the journey of machine learning models from development to deployment and monitoring in production environments.

It’s like a modern factory assembly line for AI models.

A car moves through stations for assembly, quality checks, and final inspection before it ever reaches a customer.

Similarly, an ML model passes through standardized, automated stages.

Development.

Testing.

Deployment.

And continuous monitoring.

This ensures it actually works reliably in the messy, unpredictable real world. Getting this right is the difference between AI that generates value and AI that creates risk.

What is an MLOps Pipeline?

It’s the operational backbone for machine learning.

It’s an automated system designed to handle every stage of an ML model’s life.

From the moment data comes in.

To the moment a retrained model replaces an old one in production.

The core goals are simple:

- Automation: Remove manual handoffs that introduce errors and delays.

- Repeatability: Ensure you can reproduce any model and its result on demand.

- Reliability: Build trust that your models in production are performing as expected.

It turns the artisanal, one-off process of building a model into a scalable, repeatable engineering discipline.

What are the key components of an MLOps Pipeline?

A mature pipeline isn’t just one thing.

It’s a series of interconnected stages, each automated and triggering the next.

- Data Ingestion & Validation: Automatically pulling new data and checking its quality. If the data is bad, the pipeline stops here.

- Feature Engineering: Transforming raw data into the meaningful signals the model needs to learn from.

- Model Training & Tuning: The actual process of training the model, often including experiments to find the best parameters.

- Model Evaluation: Testing the newly trained model against a holdout dataset to ensure it meets performance thresholds.

- Model Registry: If the model passes evaluation, it’s versioned and stored in a central “model library” with all its metadata.

- Deployment: Automatically packaging the model and deploying it to a production environment, often using safe strategies like canary releases.

- Monitoring: Continuously watching the live model for performance degradation, data drift, or concept drift.

- Retraining Trigger: If monitoring detects a problem, it automatically triggers the pipeline to run again with new data.

This entire sequence forms a continuous loop.

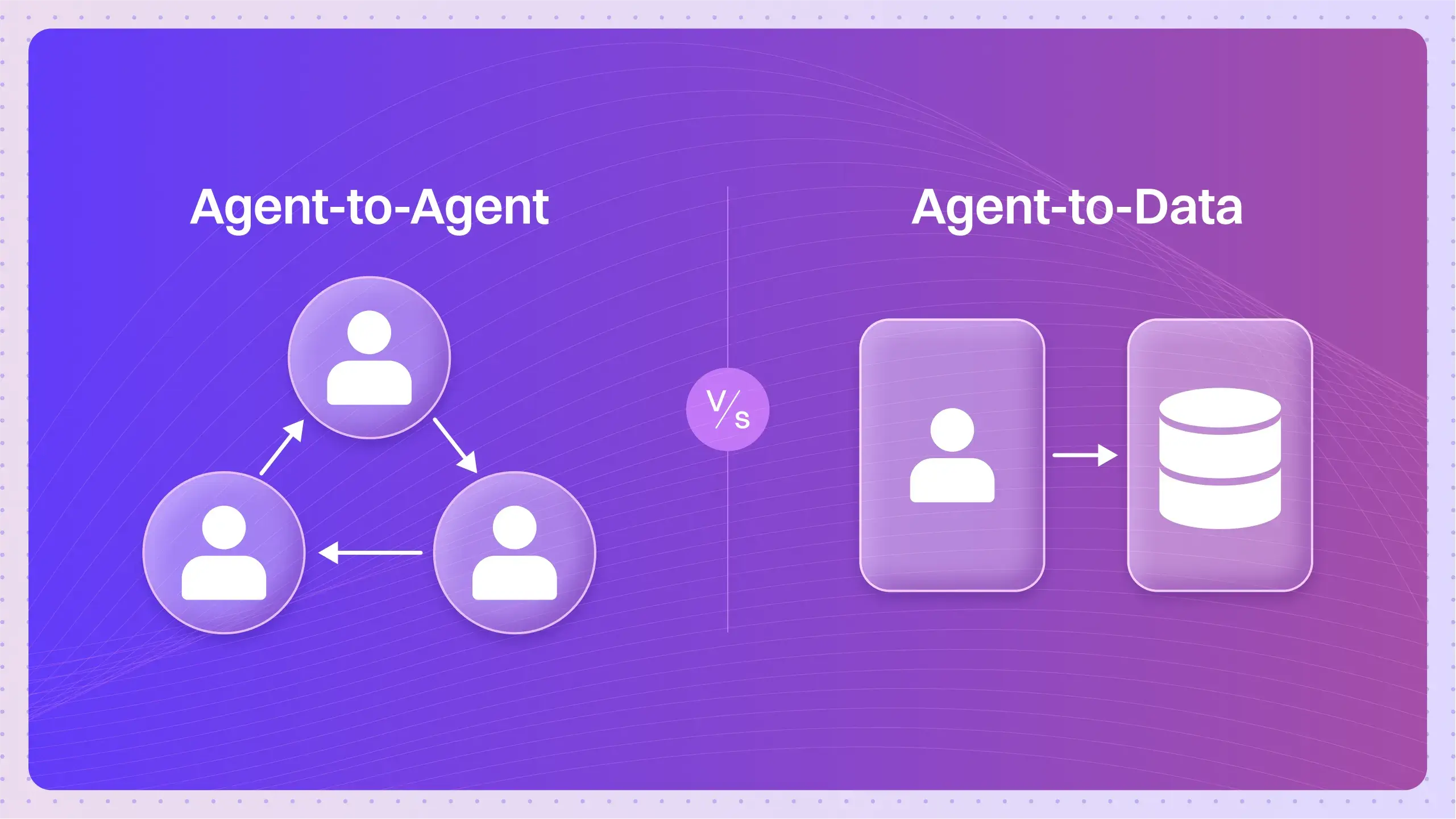
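To make the "each stage triggers the next, and a failed gate stops the run" idea concrete, here is a minimal sketch in plain Python. Every name in it (`run_pipeline`, `StageResult`, the stage functions, the `context` dict) is hypothetical and invented for illustration; a real pipeline would hand these steps to an orchestrator rather than a `for` loop.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class StageResult:
    name: str
    passed: bool


def run_pipeline(stages: list[tuple[str, Callable[[dict], bool]]],
                 context: dict) -> list[StageResult]:
    """Run stages in order and stop at the first one that fails its gate."""
    results = []
    for name, stage in stages:
        passed = stage(context)
        results.append(StageResult(name, passed))
        if not passed:
            break  # a failed gate halts everything downstream
    return results


# Hypothetical stages; each one reads and writes a shared context dict.
def validate_data(ctx: dict) -> bool:
    return bool(ctx["rows"]) and all(r.get("label") in (0, 1) for r in ctx["rows"])


def train(ctx: dict) -> bool:
    labels = [r["label"] for r in ctx["rows"]]
    # Toy "model": always predict the majority class.
    ctx["model"] = 1 if 2 * sum(labels) >= len(labels) else 0
    return True


def evaluate(ctx: dict) -> bool:
    hits = sum(1 for r in ctx["holdout"] if r["label"] == ctx["model"])
    ctx["accuracy"] = hits / len(ctx["holdout"])
    return ctx["accuracy"] >= ctx["min_accuracy"]


def register(ctx: dict) -> bool:
    ctx["registry"].append({"model": ctx["model"], "accuracy": ctx["accuracy"]})
    return True


STAGES = [("validate", validate_data), ("train", train),
          ("evaluate", evaluate), ("register", register)]

context = {
    "rows": [{"label": 1}, {"label": 1}, {"label": 0}],
    "holdout": [{"label": 1}, {"label": 1}, {"label": 0}, {"label": 1}],
    "min_accuracy": 0.7,
    "registry": [],
}
results = run_pipeline(STAGES, context)
print([(r.name, r.passed) for r in results])
```

The point of the design is the `break`: bad data or a weak model never reaches the registry, because nothing after a failed gate runs.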

How does an MLOps Pipeline differ from traditional CI/CD?

They share the same spirit of automation, but the devil is in the details.

A traditional CI/CD pipeline in DevOps is mostly concerned with code.

You commit new code, it gets tested, integrated, and deployed.

An MLOps pipeline manages three moving parts:

- Code

- Models

- Data

This introduces unique complexities.

MLOps pipelines must handle data dependencies and data versioning, which traditional CI/CD pipelines were never designed for. They also have to watch for “model drift,” where a model’s performance degrades over time because the real world has changed. Catching it means monitoring prediction quality and input distributions, which standard application performance monitoring (latency, error rates, uptime) does not cover.

And unlike a data engineering pipeline, which focuses on moving and transforming data, an MLOps pipeline is obsessed with the entire model lifecycle that is powered by that data.

Why is an MLOps Pipeline essential for enterprise AI?

Because you can’t scale experiments.

You have to scale process.

Without a pipeline, every new model is a massive manual effort, prone to error and difficult to track. For an enterprise, this is unsustainable.

- Scalability: Uber’s Michelangelo platform uses MLOps pipelines to manage thousands of models, from predicting ETAs to optimizing surge pricing. This would be impossible manually.

- Speed & Efficiency: Pipelines drastically reduce the time it takes to get a model from an idea to production, allowing businesses to react faster to market changes.

- Risk Management: Capital One uses MLOps pipelines to deploy and monitor fraud detection models. Automated validation and monitoring help keep a faulty model from going live and help catch performance issues in real time.

- Governance & Compliance: A pipeline automatically logs every action, data version, and model artifact. This creates a clear audit trail, which is non-negotiable in regulated industries.

- Consistency: Netflix uses MLOps to constantly update its recommendation algorithms. Pipelines ensure every update is tested and deployed with the same high standards, delivering a consistent user experience.

What tools are commonly used in MLOps Pipelines?

The MLOps toolchain is a vibrant ecosystem, with tools specializing in different stages of the pipeline.

- Orchestration: Tools that manage the workflow itself.

  - Kubeflow Pipelines

  - Apache Airflow

  - Argo Workflows

- CI/CD Automation: The engines that run the automated steps.

  - Jenkins

  - GitLab CI

  - GitHub Actions

- Data & Feature Management: Tools for versioning data and managing features.

  - DVC (Data Version Control)

  - Feast (Feature Store)

  - Tecton

- Experiment Tracking & Model Registry: Logging experiments and storing production-ready models.

  - MLflow

  - Weights & Biases

  - Comet ML

- Monitoring: Observing models in production.

  - Prometheus & Grafana

  - Specialized tools like Arize, Fiddler, or WhyLabs

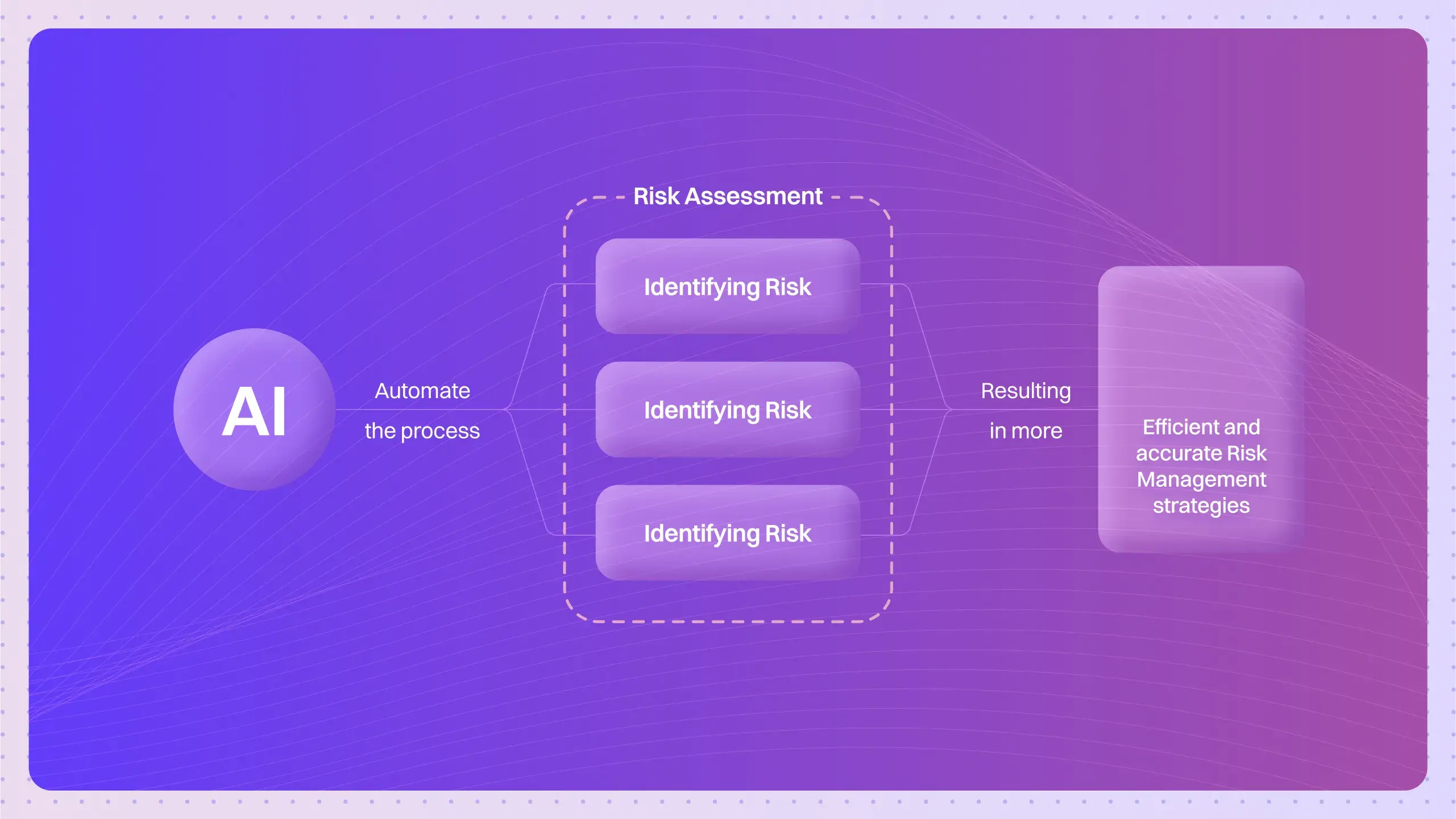

What are the stages of a mature MLOps Pipeline?

It’s best viewed as a continuous, automated loop.

- Development: A data scientist experiments and develops a candidate model. This part is often manual.

- Automated Trigger: The data scientist commits their code. This action, or the arrival of new data, triggers the automated pipeline.

- Training Pipeline: The system automatically pulls data, processes it, and trains the model.

- Evaluation Pipeline: The new model is tested against predefined business and statistical metrics.

- Deployment Pipeline: If the model passes, it’s deployed. This might be a “shadow deployment” first for live testing, followed by a full rollout.

- Production & Monitoring: The model serves predictions, and its performance is tracked 24/7.

- Retraining Loop: Monitoring systems detect drift or decay, which automatically triggers the entire pipeline to run again, creating a new, better model from fresh data.
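The “shadow deployment” step in the list above can be sketched in a few lines. This is an illustration under stated assumptions, not how any particular serving platform does it: `serve_with_shadow`, `agreement_rate`, and the two toy models are hypothetical names. The candidate sees real traffic, but only the production model's answer is ever returned.

```python
def serve_with_shadow(request, production_model, candidate_model, shadow_log):
    """Answer with the production model; record the candidate's answer on the side."""
    live = production_model(request)
    try:
        shadow = candidate_model(request)
        shadow_log.append({"request": request, "live": live, "shadow": shadow})
    except Exception as exc:
        # A crashing candidate must never affect the live response.
        shadow_log.append({"request": request, "live": live, "error": repr(exc)})
    return live


def agreement_rate(shadow_log):
    """Share of logged requests where both models gave the same answer."""
    compared = [e for e in shadow_log if "shadow" in e]
    if not compared:
        return 0.0
    return sum(e["live"] == e["shadow"] for e in compared) / len(compared)


# Toy models: flag a transaction amount as suspicious above a threshold.
production_model = lambda amount: amount >= 10
candidate_model = lambda amount: amount >= 8

shadow_log = []
responses = [serve_with_shadow(a, production_model, candidate_model, shadow_log)
             for a in (5, 9, 12)]
print(responses, agreement_rate(shadow_log))
```

A full rollout would then be gated on what the shadow log shows, for example a minimum agreement rate or a better offline score on the logged requests.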

What technical mechanisms enable an MLOps Pipeline?

The core isn’t about general coding; it’s about specialized frameworks that understand ML’s unique needs.

CI/CD for Machine Learning is the foundation. This integrates tools like GitHub Actions or Jenkins with ML frameworks. A pipeline run isn’t just triggered by a code change; it can be triggered by a data change, a scheduled time, or an alert from a monitoring system.
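Here is one way the "more than just a code change" trigger logic might look. It is a sketch only: the `Event` type, the event kinds, the `training/` and `features/` path prefixes, and the `min_new_rows` threshold are all assumptions made up for this example, not part of GitHub Actions or Jenkins.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Event:
    kind: str      # "code_push", "new_data", "schedule", or "monitoring_alert"
    payload: dict


def should_run_pipeline(event: Event, min_new_rows: int = 1000) -> bool:
    """Decide whether an incoming event should start a training run."""
    if event.kind == "code_push":
        # Only retrain when training or feature code changed, not docs.
        paths = event.payload.get("changed_paths", [])
        return any(p.startswith(("training/", "features/")) for p in paths)
    if event.kind == "new_data":
        # Wait until enough fresh rows have accumulated.
        return event.payload.get("new_rows", 0) >= min_new_rows
    if event.kind in ("schedule", "monitoring_alert"):
        return True
    return False


print(should_run_pipeline(Event("code_push", {"changed_paths": ["README.md"]})))
print(should_run_pipeline(Event("new_data", {"new_rows": 5000})))
```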

Feature Store Architecture is another key piece. Tools like Feast or Tecton create a single source of truth for features. This solves the dangerous problem of “training-serving skew,” where the data used for training is processed differently than the data used for live predictions.
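The core idea behind avoiding training-serving skew fits in a few lines: define each feature once and call that same definition from both paths. The sketch below is not the Feast or Tecton API; `build_features`, `training_rows`, and `serving_features` are hypothetical names, and the raw record layout is an assumption.

```python
import math
from datetime import datetime


def build_features(raw: dict) -> dict:
    """Single source of truth for feature logic, shared by training and serving."""
    ts = datetime.fromisoformat(raw["timestamp"])
    return {
        "log_amount": math.log1p(raw["amount"]),
        "hour_of_day": ts.hour,
        "is_weekend": int(ts.weekday() >= 5),
    }


def training_rows(history: list[dict]) -> list[dict]:
    # Offline path: transform a batch of historical records.
    return [build_features(r) for r in history]


def serving_features(request: dict) -> dict:
    # Online path: transform one live request with the exact same code.
    return build_features(request)


record = {"timestamp": "2024-03-09T14:30:00", "amount": 99.0}
print(training_rows([record])[0] == serving_features(record))
```

Skew creeps in when the offline path is a SQL query and the online path is a hand-written reimplementation. A feature store exists largely to make the shared definition the default.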

Finally, a Model Registry and Versioning system is critical. MLflow provides a model registry, while DVC versions datasets and model files alongside your code in Git. Together they act as version control for your models: you can track which model was trained on which data with which code, and you get a central, governable hub for all production-ready models.
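To show what a registry actually keeps track of, here is a toy in-memory version. It is a sketch and deliberately not MLflow's API: `ModelRegistry`, `ModelVersion`, the stage names, and the example commit and data tags are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class ModelVersion:
    name: str
    version: int
    metrics: dict
    data_version: str   # which dataset snapshot the model was trained on
    code_commit: str    # which code revision produced it
    stage: str = "staging"


class ModelRegistry:
    def __init__(self):
        self._versions = {}  # model name -> list of ModelVersion

    def register(self, name, metrics, data_version, code_commit):
        versions = self._versions.setdefault(name, [])
        mv = ModelVersion(name, len(versions) + 1, metrics, data_version, code_commit)
        versions.append(mv)
        return mv

    def promote(self, name, version):
        """Make one version the production model and archive the previous one."""
        for mv in self._versions[name]:
            if mv.stage == "production":
                mv.stage = "archived"
        target = self._versions[name][version - 1]
        target.stage = "production"
        return target

    def production(self, name):
        return next((mv for mv in self._versions.get(name, [])
                     if mv.stage == "production"), None)


registry = ModelRegistry()
registry.register("fraud", {"auc": 0.91}, data_version="2024-01", code_commit="abc123")
registry.register("fraud", {"auc": 0.93}, data_version="2024-02", code_commit="def456")
registry.promote("fraud", 1)
registry.promote("fraud", 2)
print(registry.production("fraud"))
```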

Quick Test: Can you spot the risk?

An e-commerce company trains a best-selling products model once a quarter. After a viral social media trend causes a new product to explode in popularity, their “recommended for you” section keeps pushing last season’s items.

Which part of their MLOps process is broken?

The answer is the lack of an automated retraining trigger based on performance monitoring. A mature pipeline would have detected the concept drift (what’s popular has changed) and automatically kicked off a retraining run.
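A performance-based trigger for this scenario could be as small as the sketch below. It assumes click-through rate is the metric being watched; `PerformanceMonitor`, the 20% relative-drop threshold, and the 100-event window are hypothetical choices for illustration, not recommended values.

```python
from collections import deque


class PerformanceMonitor:
    """Track a rolling click-through rate and flag when it falls too far below baseline."""

    def __init__(self, baseline_rate, max_relative_drop=0.2, window=100):
        self.baseline_rate = baseline_rate
        self.max_relative_drop = max_relative_drop
        self.outcomes = deque(maxlen=window)  # old outcomes fall off automatically

    def record(self, clicked: bool) -> None:
        self.outcomes.append(1 if clicked else 0)

    def current_rate(self) -> float:
        return sum(self.outcomes) / len(self.outcomes) if self.outcomes else 0.0

    def needs_retraining(self) -> bool:
        if len(self.outcomes) < self.outcomes.maxlen:
            return False  # not enough evidence yet
        floor = self.baseline_rate * (1 - self.max_relative_drop)
        return self.current_rate() < floor


monitor = PerformanceMonitor(baseline_rate=0.10)
for i in range(100):          # healthy period: 10 clicks per 100 impressions
    monitor.record(i % 10 == 0)
print(monitor.needs_retraining())

for i in range(100):          # after the trend shifts: 5 clicks per 100
    monitor.record(i % 20 == 0)
print(monitor.needs_retraining())
```

With a check like this wired to the pipeline, the quarterly schedule stops being the only thing that can start a retraining run.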

Questions That Move the Conversation

How do you measure the success of an MLOps Pipeline?

Success is measured by both business and operational metrics. Does the model achieve its business KPI (e.g., increased sales, reduced fraud)? Operationally, you track deployment frequency, model failure rate in production, and the time it takes to retrain and deploy a new model.
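The operational side is easy to compute once every pipeline run is logged. A rough sketch, assuming a hypothetical run record with `triggered_at`, `deployed_at`, and `failed_in_production` fields:

```python
from datetime import datetime
from statistics import median


def operational_metrics(runs: list[dict], period_days: int) -> dict:
    """Summarize deployment frequency, production failure rate, and lead time."""
    deployed = [r for r in runs if r["deployed_at"] is not None]
    failed = [r for r in deployed if r["failed_in_production"]]
    lead_hours = [(r["deployed_at"] - r["triggered_at"]).total_seconds() / 3600
                  for r in deployed]
    return {
        "deployments_per_week": len(deployed) / (period_days / 7),
        "production_failure_rate": len(failed) / len(deployed) if deployed else 0.0,
        "median_lead_time_hours": median(lead_hours) if lead_hours else None,
    }


runs = [
    {"triggered_at": datetime(2024, 1, 1, 8), "deployed_at": datetime(2024, 1, 1, 10),
     "failed_in_production": False},
    {"triggered_at": datetime(2024, 1, 5, 8), "deployed_at": datetime(2024, 1, 5, 12),
     "failed_in_production": True},
    {"triggered_at": datetime(2024, 1, 9, 8), "deployed_at": None,
     "failed_in_production": False},
]
metrics = operational_metrics(runs, period_days=14)
print(metrics)
```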

What challenges do organizations face when implementing MLOps Pipelines?

The biggest hurdles are both cultural and technical. Culturally, teams have to shift from a research-focused mindset to an engineering one. Technically, the challenges include stitching together a complex toolchain, managing infrastructure costs, and finding talent with hybrid data science and software engineering skills.

How do MLOps Pipelines handle model versioning?

They version everything together: the code used for training, the dataset snapshot used, the model parameters, and the final model artifact itself. Tools like DVC and MLflow are built for this, so any past model can be traced back to its inputs and rebuilt.
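"Versioning everything together" boils down to fingerprinting each input and storing the fingerprints as one record. The sketch below uses content hashes from Python's standard library; `build_lineage` and the record fields are hypothetical, and real tools store far richer metadata.

```python
import hashlib
import json


def sha256_hex(data: bytes) -> str:
    return hashlib.sha256(data).hexdigest()


def build_lineage(code: bytes, dataset: bytes, params: dict, model_artifact: bytes) -> dict:
    """Tie code, data, parameters, and the resulting model into one record."""
    record = {
        "code_sha256": sha256_hex(code),
        "data_sha256": sha256_hex(dataset),
        "params": params,
        "model_sha256": sha256_hex(model_artifact),
    }
    # The run id is derived from the record itself, so identical inputs
    # always produce the same id and any change produces a new one.
    canonical = json.dumps(record, sort_keys=True).encode("utf-8")
    record["run_id"] = sha256_hex(canonical)[:12]
    return record


run_a = build_lineage(b"def train(): ...", b"id,label\n1,0\n", {"lr": 0.1}, b"weights-v1")
run_b = build_lineage(b"def train(): ...", b"id,label\n1,1\n", {"lr": 0.1}, b"weights-v1")
print(run_a["run_id"], run_b["run_id"])
```

Here only the dataset differs between the two runs, and that alone is enough to give them different ids.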

What role does data validation play in an MLOps Pipeline?

It’s the first line of defense. Before any training occurs, an automated step checks the incoming data for correctness—schema, statistical properties, and anomalies. If the data is bad, the pipeline fails early, preventing a “garbage in, garbage out” model from ever being built.
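A fail-early validation step might look like the following sketch. The schema, the column names, and the acceptable range for the mean are assumptions made up for this example; in practice they would come from profiling known-good historical data.

```python
from statistics import mean

# Hypothetical schema for an incoming batch of transactions.
EXPECTED_SCHEMA = {"user_id": int, "amount": float, "country": str}


def validate_batch(rows, schema=EXPECTED_SCHEMA, amount_mean_range=(5.0, 500.0)):
    """Return a list of problems; an empty list means the batch passed."""
    if not rows:
        return ["batch is empty"]
    problems = []
    for i, row in enumerate(rows):
        missing = schema.keys() - row.keys()
        if missing:
            problems.append(f"row {i}: missing columns {sorted(missing)}")
            continue
        for col, expected_type in schema.items():
            if not isinstance(row[col], expected_type):
                problems.append(f"row {i}: {col} is not {expected_type.__name__}")
    if problems:
        return problems  # skip statistical checks on structurally broken data
    avg = mean(r["amount"] for r in rows)
    low, high = amount_mean_range
    if not low <= avg <= high:
        problems.append(f"mean amount {avg:.2f} outside [{low}, {high}]")
    if any(r["amount"] < 0 for r in rows):
        problems.append("negative amounts found")
    return problems


good = [{"user_id": 1, "amount": 20.0, "country": "DE"},
        {"user_id": 2, "amount": 35.5, "country": "US"}]
bad = [{"user_id": 1, "amount": "20", "country": "DE"}]
print(validate_batch(good))
print(validate_batch(bad))
```

The training stage only starts when the returned list is empty.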

How do MLOps Pipelines support regulatory compliance and model governance?

By providing an immutable, automated audit trail. The pipeline logs every step: who triggered the run, what data was used, how the model performed in tests, and where it was deployed. This lineage is crucial for compliance in fields like finance and healthcare.
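One common way to make a log tamper-evident is to chain entries together by hash, so that editing any past entry breaks every hash after it. The sketch below illustrates that technique; it is an assumption about how such a trail could be built, not a description of any specific MLOps product, and `AuditLog` is a hypothetical class.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(body: dict) -> str:
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()


class AuditLog:
    def __init__(self):
        self.entries = []

    def append(self, actor: str, action: str, details: dict) -> None:
        prev_hash = self.entries[-1]["hash"] if self.entries else GENESIS
        body = {"actor": actor, "action": action, "details": details,
                "prev_hash": prev_hash}
        self.entries.append({**body, "hash": _digest(body)})

    def verify(self) -> bool:
        """Recompute every hash and check that each entry points at the one before it."""
        prev_hash = GENESIS
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if entry["prev_hash"] != prev_hash or _digest(body) != entry["hash"]:
                return False
            prev_hash = entry["hash"]
        return True


log = AuditLog()
log.append("alice", "trigger_run", {"dataset": "2024-02"})
log.append("pipeline", "evaluate", {"auc": 0.93})
log.append("pipeline", "deploy", {"target": "production"})
print(log.verify())
```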

Can MLOps Pipelines work with both cloud and on-premise infrastructure?

Absolutely. Using containerization technologies like Docker and orchestration platforms like Kubernetes, pipelines can be designed to run agnostically across different environments, whether it’s a public cloud, a private data center, or a hybrid setup.

What is the role of feature stores in an MLOps Pipeline?

They are a central library for features. They prevent teams from re-calculating the same features over and over. More importantly, they keep the features used for training consistent with the features available for live predictions. A mismatch between the two is a major source of model failure.

How do MLOps Pipelines handle model monitoring and retraining?

They monitor for two types of drift: data drift (the input data’s statistical properties have changed) and concept drift (the relationship between inputs and outputs has changed). When predefined thresholds are crossed, the monitoring system sends an alert that automatically triggers the retraining pipeline.
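For data drift, one widely used statistic is the Population Stability Index (PSI), which compares how a feature is distributed across bins in the training data versus live data. The sketch below is a plain-Python illustration; the bin count and the 0.25 alert threshold are a common rule of thumb used here as an assumption, and `should_retrain` is a hypothetical helper.

```python
import math


def population_stability_index(expected, actual, bins=10):
    """PSI between a baseline sample and a live sample of one numeric feature."""
    if not expected or not actual:
        raise ValueError("both samples must be non-empty")
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # avoid zero width when all values are equal

    def fractions(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1  # clamp out-of-range values
        # A small floor keeps empty bins from causing log(0).
        return [max(c / len(values), 1e-6) for c in counts]

    e, a = fractions(expected), fractions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


def should_retrain(expected, actual, threshold=0.25):
    return population_stability_index(expected, actual) > threshold


baseline = [i / 100 for i in range(100)]         # feature values seen in training
drifted = [0.5 + i / 200 for i in range(100)]    # live values squeezed into the upper half
print(population_stability_index(baseline, baseline))
print(should_retrain(baseline, drifted))
```

Concept drift cannot be detected from inputs alone. It needs ground-truth labels or a business metric, which is why pipelines usually watch model performance as well as input distributions.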

What security considerations are important in MLOps Pipelines?

Security is critical. This includes managing secrets and API keys, scanning code and container dependencies for vulnerabilities, controlling access to sensitive data, and ensuring the model endpoints are protected from attacks.

How do MLOps Pipelines integrate with existing DevOps practices?

MLOps is an extension of DevOps, not a replacement. It adopts core DevOps principles like CI/CD, automation, and monitoring, but adds specialized tools and practices to handle the unique challenges posed by data and models.

The MLOps pipeline is rapidly becoming the non-negotiable standard for any organization serious about deploying AI. It’s the infrastructure that makes machine learning reliable, scalable, and ultimately, valuable.

Did I miss a crucial point? Have a better analogy to make this stick? Let me know.