Modern AI development is not just about creating agents; it’s about deploying, scaling, and governing them efficiently across distributed environments.

In Lyzr Agent Studio, every core capability, from agents to orchestration, is built as an independent, API-ready service.

Lyzr enables teams to build within the platform or consume each service externally, giving developers control over architecture without dependency on a single ecosystem.

Each module, whether Agents, Knowledge Base, Responsible AI (RAI), or Orchestration, is structured to function as a callable API microservice. This modular design forms the backbone of Lyzr’s “Everything as a Service” model.

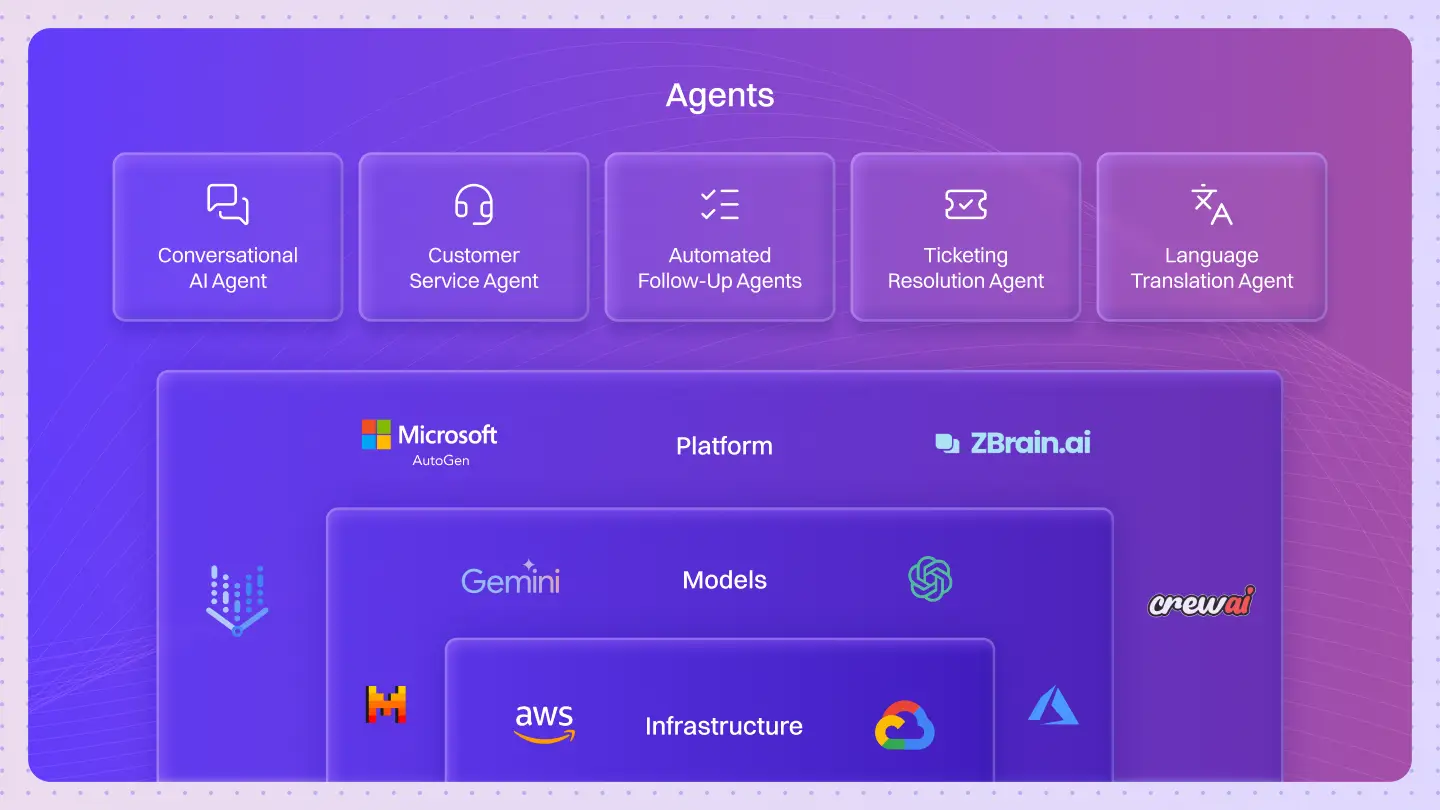

Agents as a Service

Every agent created in Lyzr is automatically deployed as a production-ready service. Once configured, the agent is immediately accessible through a REST API endpoint, eliminating the need for external hosting, infrastructure setup, or containerization.

Technical Overview

When an agent is created in Lyzr Studio, the platform automatically provisions:

- A hosted execution environment with runtime isolation for each agent.

- A registered API endpoint via Lyzr’s internal gateway.

- Context persistence and memory management for multi-turn interactions.

- Tool integrations and plug-ins for external data calls or actions.
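As a minimal sketch of what calling such a provisioned agent could look like, the snippet below builds (but does not send) an HTTP request. The path follows the `/v1/agents/{agent_id}/invoke` pattern listed under Unified API Design; the base URL, the bearer-token header, and the `message` and `session_id` payload fields are assumptions made for illustration, not a documented Lyzr schema.

```python
import json
import urllib.request

# Assumption: placeholder host; substitute the base URL for your own account.
BASE_URL = "https://api.example.invalid"


def build_invoke_request(agent_id: str, api_key: str, message: str,
                         session_id: str) -> urllib.request.Request:
    """Build (but do not send) a POST request for one agent turn."""
    # Hypothetical payload fields: the real request schema may differ.
    # Reusing the same session_id across calls is how this sketch models
    # multi-turn context persistence.
    payload = {"message": message, "session_id": session_id}
    return urllib.request.Request(
        url=f"{BASE_URL}/v1/agents/{agent_id}/invoke",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            # Assumption: token-based auth is sent as a bearer token.
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


if __name__ == "__main__":
    req = build_invoke_request("agent-123", "YOUR_API_KEY", "Hello", "session-1")
    print(req.get_method(), req.full_url)
    # To send it against a real deployment: urllib.request.urlopen(req)
```

Keeping request construction separate from sending makes the call easy to inspect and test before pointing it at a live endpoint.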

Why it matters

Traditional agent deployment often involves container orchestration, hosting infrastructure, and authentication setup.

With Lyzr, developers skip those layers: they create an agent and get a live API endpoint instantly.

This model suits use cases like:

- Deploying customer-facing support assistants

- Integrating internal analytics or automation bots

- Embedding smart assistants into existing SaaS systems

Core Capabilities

| Capability | Description |
| --- | --- |
| Instant API Exposure | Agents are automatically exposed through HTTPS endpoints after creation. |
| Runtime Memory | Each agent maintains a vector-based contextual memory for continuity. |
| Tool Invocation | Agents can execute integrated or third-party tools natively. |
| Scalability | Auto-managed environments handle scaling and concurrency internally. |
| Observability | Execution logs and performance metrics are accessible through the Studio. |

Lyzr’s agent service converts what was once a deployment process into a single configuration step.

Knowledge Base as a Service

Contextual retrieval is critical for accuracy in AI interactions. Lyzr’s Knowledge Base as a Service acts as an intelligent, API-accessible data retrieval layer designed for Retrieval-Augmented Generation (RAG) use cases.

Technical Architecture

The knowledge module is built as a multi-stage retrieval system consisting of:

- Ingestion Layer: Supports files, URLs, and text input in multiple formats (PDF, DOCX, CSV, TXT).

- Semantic Chunking: Splits documents into context-preserving units for efficient embedding.

- Vector Embedding Engine: Encodes text into dense vector representations for semantic similarity search.

- Vector Index: Low-latency vector store optimized for high-performance retrieval.

- Query Layer: Handles semantic searches, returning the top-k relevant results in milliseconds.
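The stages above can be sketched end to end in a few lines. This is a toy illustration rather than Lyzr's implementation: the `chunk`, `embed`, and `VectorIndex` names are hypothetical, the "embedding" is a sparse bag-of-words count standing in for a dense model, and the index is a plain in-memory list instead of a distributed vector store.

```python
import math
import re
from collections import Counter


def chunk(text: str, max_words: int = 40) -> list[str]:
    """Split text into sentence-aligned chunks of roughly max_words words."""
    sentences = re.split(r"(?<=[.!?])\s+", text.strip())
    chunks, current = [], []
    for sentence in sentences:
        words = sentence.split()
        # Close the current chunk before it would grow past the limit.
        if current and len(current) + len(words) > max_words:
            chunks.append(" ".join(current))
            current = []
        current.extend(words)
    if current:
        chunks.append(" ".join(current))
    return chunks


def embed(text: str) -> Counter:
    """Toy embedding: sparse bag-of-words counts (stand-in for a dense model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class VectorIndex:
    """In-memory index; a production store would use approximate search."""

    def __init__(self) -> None:
        self.items: list[tuple[str, Counter]] = []

    def ingest(self, document: str) -> None:
        for piece in chunk(document):
            self.items.append((piece, embed(piece)))

    def query(self, question: str, k: int = 3) -> list[str]:
        """Return the k chunks most similar to the question."""
        q = embed(question)
        ranked = sorted(self.items, key=lambda item: cosine(q, item[1]), reverse=True)
        return [text for text, _ in ranked[:k]]


if __name__ == "__main__":
    index = VectorIndex()
    index.ingest("Refunds are processed within five days. Contact billing for refunds.")
    index.ingest("The office is closed on public holidays.")
    print(index.query("how are refunds processed", k=1))
```

The point of the sketch is the shape of the pipeline: ingestion feeds chunking, chunks are embedded once at write time, and each query is embedded and ranked against the stored vectors.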

Why it matters

Developers often spend significant time setting up vector databases and managing embeddings.

Lyzr abstracts that complexity, offering an optimized, ready-to-query RAG pipeline through an API endpoint.

This module can serve context to:

- Agents built within Lyzr

- External LLMs and AI systems

- Search assistants and enterprise knowledge bots

Core Capabilities

| Capability | Description |
| --- | --- |
| Multi-format Support | Ingest PDFs, DOCX, URLs, text, and structured data. |
| Optimized Retrieval | Sub-300 ms response time for typical retrieval queries. |
| Vector Storage | High-performance, distributed vector index. |
| Interoperability | API can serve context to any external agent or system. |
| Scalability | Handles large document corpora without degradation. |

The result is a plug-in knowledge layer that adds enterprise-grade retrieval capabilities to any AI agent, without requiring you to rebuild infrastructure.

Responsible AI (RAI) as a Service

Responsible AI (RAI) in Lyzr is not an optional add-on; it’s a modular policy enforcement layer available as a standalone service.

It provides runtime control and compliance for AI agents, ensuring safety and accountability across all interactions.

System Architecture

RAI operates as an evaluation and policy enforcement engine that can be attached to any AI system, whether built on Lyzr or a third-party platform.

Each RAI instance includes:

- Content Moderation Modules: Identify sensitive or restricted content in text outputs.

- PII Protection Layer: Automatically detects and redacts personally identifiable information.

- Bias Evaluation Framework: Monitors model outputs for tone, sentiment, and fairness deviations.

- Audit Logging Mechanism: Maintains structured logs for compliance and review.

Policies can be global or agent-specific and are configurable through JSON-based templates.
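To make the idea of a JSON-based policy concrete, here is a small sketch of a policy template and an evaluator that applies it. Every field name in the template (`policy_id`, `scope`, `pii`, `moderation`) is hypothetical, and the regex-based PII detection and term blocklist are simple stand-ins for whatever detection the service actually uses.

```python
import json
import re

# Hypothetical policy template: field names are illustrative, not Lyzr's schema.
POLICY_JSON = """
{
  "policy_id": "default-policy",
  "scope": "global",
  "pii": {"redact": ["email", "phone"]},
  "moderation": {"blocked_terms": ["confidential"]}
}
"""

# Deliberately simple patterns; real PII detection needs far more coverage.
PII_PATTERNS = {
    "email": re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"),
    "phone": re.compile(r"\+?\d[\d\s().-]{7,}\d"),
}


def evaluate(text: str, policy: dict) -> dict:
    """Redact PII, check a term blocklist, and return output plus an audit record."""
    findings = []
    for kind in policy["pii"]["redact"]:
        text, count = PII_PATTERNS[kind].subn(f"[REDACTED_{kind.upper()}]", text)
        if count:
            findings.append({"type": f"pii.{kind}", "count": count})
    lowered = text.lower()
    blocked = [t for t in policy["moderation"]["blocked_terms"] if t in lowered]
    if blocked:
        findings.append({"type": "moderation.blocked_term", "terms": blocked})
    return {
        "policy_id": policy["policy_id"],
        "allowed": not blocked,
        "output": text,
        "audit": findings,
    }


if __name__ == "__main__":
    policy = json.loads(POLICY_JSON)
    result = evaluate("Mail jane@example.com or call +1 415 555 0100.", policy)
    print(json.dumps(result, indent=2))
```

Returning the audit findings alongside the filtered output mirrors the split described above between enforcement (moderation, PII redaction) and audit logging.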

Why it matters

For developers working on production AI systems, governance and safety often become secondary implementation layers.

RAI as a Service integrates that functionality at runtime, letting teams enforce compliance directly within the AI workflow.

This means organizations can maintain:

- Consistent output standards

- Data privacy compliance

- Transparent audit trails

Core Capabilities

| Capability | Description |
| --- | --- |
| Platform-Agnostic | Works with any LLM or AI system, inside or outside Lyzr. |
| Customizable Policies | Define moderation, bias control, and safety filters via templates. |
| Minimal Overhead | Adds less than 100 ms processing latency. |
| Centralized Configuration | Unified policy management across all connected agents. |
| Audit Visibility | Provides complete traceability of filtered outputs. |

This service enables teams to integrate safety, compliance, and auditability into AI pipelines without rewriting model logic.

Orchestration as a Service

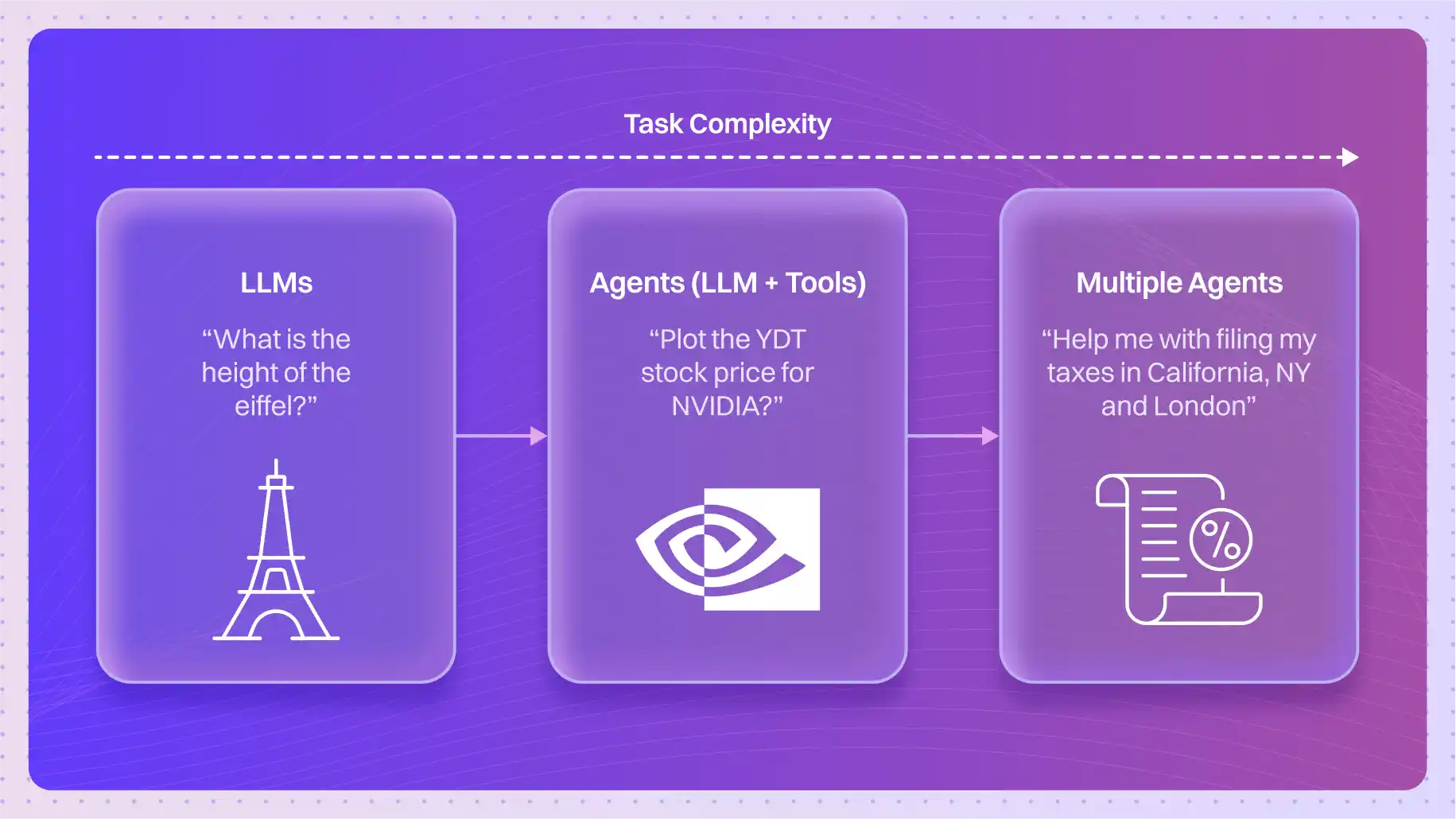

As AI systems scale, individual agents must collaborate.

Lyzr’s Orchestration as a Service is built to manage that collaboration through both visual workflows (DAGs) and Manager Agents that coordinate sub-agents programmatically.

Architecture Overview

The orchestration module functions as a Directed Acyclic Graph (DAG) engine.

Each node represents a discrete agent, API call, or task; edges define dependency flow.

Key components include:

- Task Scheduler – Manages execution order and concurrency.

- Dependency Resolver – Ensures non-cyclic, dependency-driven execution.

- Error Handling Layer – Performs retries and fallback logic per node.

- Data Aggregation Unit – Merges and routes intermediate outputs between agents.
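The components above map naturally onto a small DAG runner. The following is a minimal sketch under stated assumptions: `run_dag` and its parameters are hypothetical names, tasks run sequentially (a real scheduler would also run independent nodes concurrently), and Python's standard `graphlib.TopologicalSorter` stands in for the dependency resolver.

```python
from graphlib import TopologicalSorter
from typing import Callable, Optional

# A task receives a dict of its upstream results and returns its own result.
Task = Callable[[dict], object]


def run_dag(tasks: dict[str, Task], deps: dict[str, set[str]],
            fallbacks: Optional[dict[str, object]] = None,
            max_retries: int = 2) -> dict[str, object]:
    """Run tasks in dependency order with per-node retries and fallbacks."""
    fallbacks = fallbacks or {}
    results: dict[str, object] = {}
    # Dependency resolution: raises graphlib.CycleError if the graph has a cycle.
    graph = {name: deps.get(name, set()) for name in tasks}
    for name in TopologicalSorter(graph).static_order():
        # Data aggregation: hand each node only the outputs it depends on.
        upstream = {d: results[d] for d in deps.get(name, set())}
        for attempt in range(max_retries + 1):
            try:
                results[name] = tasks[name](upstream)
                break
            except Exception:
                if attempt == max_retries:
                    # Retries exhausted: use the fallback or propagate the error.
                    if name not in fallbacks:
                        raise
                    results[name] = fallbacks[name]
    return results


if __name__ == "__main__":
    tasks = {
        "fetch": lambda up: "raw data",
        "summarize": lambda up: up["fetch"].upper(),
        "enrich": lambda up: 1 / 0,  # always fails, so the fallback is used
    }
    deps = {"summarize": {"fetch"}, "enrich": {"fetch"}}
    print(run_dag(tasks, deps, fallbacks={"enrich": "n/a"}))
```

Each node here is a plain callable; in practice a node could wrap an agent invocation or any other API call.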

Orchestration Models

| Model | Description | Ideal For |
| --- | --- | --- |
| Manager Agent | A controlling agent that delegates and merges responses from sub-agents. | Use cases requiring reasoning or result synthesis. |
| Workflow DAG | A visual flow defining sequential or parallel task execution. | Automated pipelines or multi-step processes. |
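For contrast with a fixed workflow, the Manager Agent model decides at request time which sub-agents to involve. The sketch below is only an illustration of that delegate-and-merge shape: `ManagerAgent` is a hypothetical class, and keyword matching stands in for the reasoning a real manager agent would perform.

```python
import re
from typing import Callable

# A sub-agent takes the user request and returns a text reply.
SubAgent = Callable[[str], str]


class ManagerAgent:
    """Delegates a request to matching sub-agents and merges their replies."""

    def __init__(self, sub_agents: dict[str, tuple[set[str], SubAgent]]) -> None:
        # Maps agent name -> (trigger keywords, callable that answers).
        self.sub_agents = sub_agents

    def handle(self, request: str) -> str:
        words = set(re.findall(r"[a-z]+", request.lower()))
        # Delegation: pick every sub-agent whose keywords appear in the request.
        selected = [name for name, (keywords, _) in self.sub_agents.items()
                    if words & keywords]
        if not selected:
            return "No sub-agent matched the request."
        # Merging: combine the replies into one labelled response.
        replies = [f"{name}: {self.sub_agents[name][1](request)}" for name in selected]
        return "\n".join(replies)


if __name__ == "__main__":
    manager = ManagerAgent({
        "billing": ({"invoice", "refund"}, lambda r: "refund policy is 30 days"),
        "tech": ({"error", "crash"}, lambda r: "restart the app"),
    })
    print(manager.handle("I need a refund after the crash"))
```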

Why it matters

Multi-agent orchestration typically requires external schedulers or workflow engines.

Lyzr eliminates that need by embedding orchestration capabilities within the same environment, accessible through API endpoints.

Developers can:

- Combine agents from different systems

- Trigger multi-step operations from a single API call

- Build dynamic, conditional execution paths

Core Capabilities

| Capability | Description |
| --- | --- |
| Concurrent Execution | Executes dependent or parallel tasks with automatic coordination. |
| Retry and Fallback Logic | Node-level fault tolerance with configurable retries. |
| Cross-Agent Communication | Enables data exchange between agents mid-execution. |
| API-First Design | Each workflow or manager agent is callable externally. |
| Visual + Programmatic Design | Supports both drag-and-drop and API-driven orchestration creation. |

This architecture allows teams to construct multi-agent systems that are both dynamic and modular, without managing workflow engines separately.

Unified API Design

Every service within Lyzr follows a consistent REST design model.

Endpoints are authenticated, stateless, and structured for interoperability.

| Service | Endpoint Pattern | Function |
| --- | --- | --- |
| Agents as a Service | /v1/agents/{agent_id}/invoke | Deploy and execute agents. |
| Knowledge Base as a Service | /v1/knowledge/{kb_id}/query | Retrieve contextual data. |
| RAI as a Service | /v1/rai/{policy_id}/evaluate | Enforce compliance and moderation. |
| Orchestration as a Service | /v1/flows/{flow_id}/execute | Execute orchestrated agent workflows. |

All endpoints use:

- Token-based authentication for secure access

- JSON schema for consistent request/response formats

- Rate-limiting and error handling for production reliability
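Because the four endpoint patterns share one shape, a client can treat them uniformly. The sketch below builds URLs from the patterns in the table above and computes a wait time for rate-limited calls. The base URL, the `endpoint` and `retry_delay` helpers, and the assumption that rate limiting surfaces as an HTTP 429 with an optional `Retry-After` value are all illustrative, not documented behavior.

```python
from typing import Optional
from urllib.parse import quote

# Path templates taken from the endpoint table; the placeholder name is unified
# to {resource_id} here purely for convenience.
ENDPOINTS = {
    "agent": "/v1/agents/{resource_id}/invoke",
    "knowledge": "/v1/knowledge/{resource_id}/query",
    "rai": "/v1/rai/{resource_id}/evaluate",
    "flow": "/v1/flows/{resource_id}/execute",
}


def endpoint(service: str, resource_id: str,
             base_url: str = "https://api.example.invalid") -> str:
    """Build the full URL for one service call (base_url is a placeholder)."""
    path = ENDPOINTS[service].format(resource_id=quote(resource_id, safe=""))
    return base_url.rstrip("/") + path


def retry_delay(attempt: int, retry_after: Optional[str] = None,
                base: float = 0.5, cap: float = 30.0) -> float:
    """Seconds to wait before retrying a rate-limited call.

    Assumes the server may send a Retry-After value in whole seconds;
    otherwise falls back to capped exponential backoff.
    """
    if retry_after is not None and retry_after.isdigit():
        return min(float(retry_after), cap)
    return min(base * (2 ** attempt), cap)


if __name__ == "__main__":
    for service in ENDPOINTS:
        print(endpoint(service, "demo-id"))
    print([retry_delay(n) for n in range(4)])
```

Centralizing the path templates in one mapping means a change to a pattern touches a single line rather than every call site.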

This unified design allows developers to plug Lyzr services into any stack, whether backend APIs, mobile apps, or enterprise systems, without translation layers.

Conclusion: Modular AI Infrastructure for Developers

Lyzr’s service-based architecture redefines how AI systems are deployed and managed.

By decoupling each core function (agent runtime, contextual retrieval, responsible AI enforcement, and orchestration), it allows developers to compose intelligent systems at scale.

Key advantages for engineering teams:

- Zero deployment and hosting overhead

- Fully API-driven integration for external systems

- Consistent design and authentication models

- Built-in Responsible AI enforcement

- Scalable orchestration for multi-agent systems

Whether used as a complete platform or as standalone APIs, Lyzr offers developers a robust, production-ready foundation for building intelligent, compliant, and connected AI systems.
