Relying on full automation for high-stakes decisions is a dangerous gamble.

Human in the Loop (HITL) is a system design approach where human judgment and intervention are integrated into automated AI processes to improve performance, ensure safety, and maintain control over critical decisions.

Think of a driving instructor riding with a student in a car fitted with a second brake pedal.

The AI is the student, learning and handling most of the driving.

The human instructor is there to watch, guide, and slam on the brakes to prevent a crash or correct a bad habit.

Understanding this isn’t just an academic exercise. It’s fundamental to building safe, ethical, and genuinely effective AI. When the stakes are high, you need a human ready to take the wheel.

What is Human in the Loop?

It’s a partnership.

Not just oversight.

Not just a human checking a machine’s homework.

Human in the Loop (HITL) creates a continuous feedback cycle between a person and an AI system.

The AI handles the heavy lifting.

It sifts through massive datasets.

It identifies patterns.

It makes initial recommendations or predictions.

But at critical points, the system stops and asks for human help.

This could be when the AI’s confidence in its own answer is low.

Or when the decision carries significant ethical or financial weight.

The human provides input, makes a correction, or validates the AI’s conclusion.

This feedback doesn’t just solve the immediate problem.

It’s used to retrain and refine the AI model, making it smarter for the next time.

It’s a symbiotic relationship where both the human and the machine learn and improve together.
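The cycle described above can be sketched in a few lines of Python. This is a minimal illustration, not a reference implementation: the `Prediction` and `HITLRouter` names, the `high_stakes` flag, and the 0.85 threshold are all assumptions made for the example.

```python
from dataclasses import dataclass, field
from typing import List, Tuple


@dataclass
class Prediction:
    item_id: str
    label: str
    confidence: float          # model's probability for `label`, in [0, 1]
    high_stakes: bool = False  # set by a business rule, not by the model


@dataclass
class HITLRouter:
    # Hypothetical cutoff; in practice this is tuned per use case.
    confidence_threshold: float = 0.85
    # (item_id, model_label, human_label) triples kept for later retraining.
    feedback_log: List[Tuple[str, str, str]] = field(default_factory=list)

    def needs_review(self, pred: Prediction) -> bool:
        """Pause for a human when confidence is low or the stakes are high."""
        return pred.high_stakes or pred.confidence < self.confidence_threshold

    def record_feedback(self, pred: Prediction, human_label: str) -> None:
        """Store the human's answer so it can feed the next training run."""
        self.feedback_log.append((pred.item_id, pred.label, human_label))


router = HITLRouter()
routine = Prediction("doc-1", "approve", confidence=0.97)
unsure = Prediction("doc-2", "approve", confidence=0.61)
critical = Prediction("doc-3", "approve", confidence=0.99, high_stakes=True)

for pred in (routine, unsure, critical):
    print(pred.item_id, "-> human" if router.needs_review(pred) else "-> auto")

# A reviewer overrides the uncertain prediction; the correction is logged.
router.record_feedback(unsure, human_label="deny")
```

The routine case goes straight through, while the low-confidence and high-stakes cases stop for a person. The logged corrections are the raw material for the retraining step.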

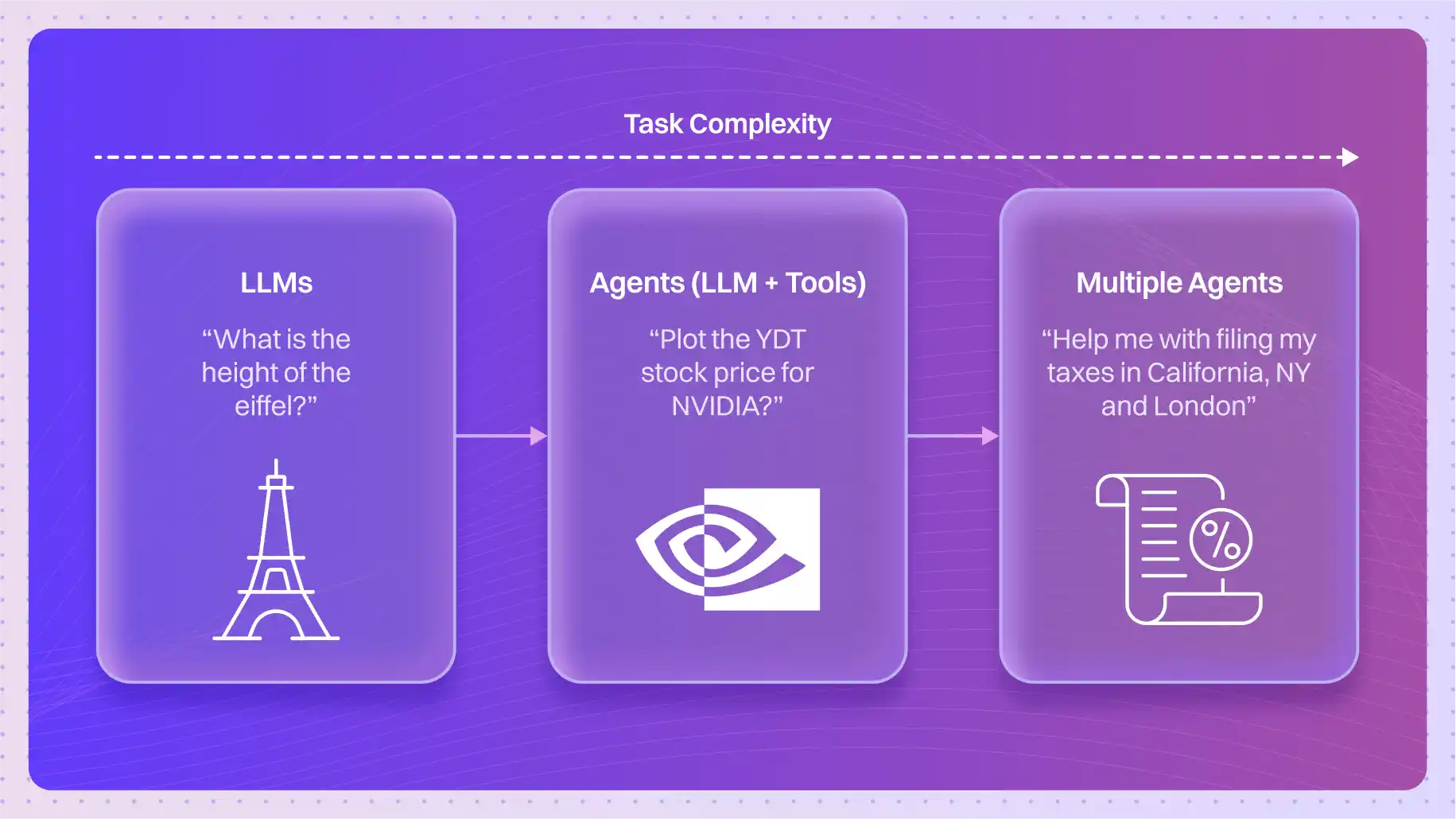

How does Human in the Loop differ from fully autonomous systems?

The core difference is control.

A fully autonomous system operates entirely on its own.

Once you press “go,” it makes decisions without any human intervention.

Think of a factory robot performing a single, repetitive task the same way every time.

There’s no room for nuance, and no one to stop it if the context changes unexpectedly.

A purely manual process is the opposite.

All work, all decisions, all judgment calls are made by people. It’s slow, expensive, and doesn’t scale well.

Human in the Loop carves out the middle ground.

- Unlike fully autonomous AI systems, HITL maintains human oversight and the power to intervene throughout the process. The human isn’t just a spectator; they are an active participant.

- Unlike purely manual processes, HITL automates the routine, data-heavy tasks, freeing up human experts to focus only on the complex, ambiguous, or ethically sensitive decisions where their judgment is most valuable.

It’s the best of both worlds: the speed and scale of a machine, guided by the wisdom and intuition of a human.

What are the benefits of Human in the Loop systems?

The advantages go far beyond just catching errors.

- Higher Accuracy: AI models can struggle with edge cases and outliers. Human intuition and contextual understanding can correctly classify these exceptions, leading to a more robust and accurate overall system.

- Improved Safety: In high-stakes fields like medicine or autonomous vehicles, a wrong AI decision can have catastrophic consequences. A human in the loop acts as a critical safety valve, able to veto or correct a potentially harmful automated action.

- Ethical Oversight: AI can inherit and amplify biases present in its training data. Humans can intervene to ensure decisions are fair, just, and aligned with ethical principles, especially in areas like hiring or loan applications.

- Continuous Learning: Every human correction is a learning opportunity for the AI. Companies like OpenAI use Reinforcement Learning from Human Feedback (RLHF) to constantly align their models with human values and preferences. This makes the AI progressively better over time.

- Greater Trust: People are more likely to trust and adopt AI systems when they know there is human oversight and accountability. This is crucial for deploying AI in sensitive public or enterprise domains.

What are common Human in the Loop implementation methods?

You see it in action every day.

One of the most well-known examples is content moderation on social media platforms.

AI flags potentially harmful content, but human moderators make the final call, navigating the complexities of context, satire, and free speech.

Another is in the medical field.

An AI might analyze thousands of medical images to flag potential tumors, but a human radiologist confirms the diagnosis.

Here are some real-world implementations:

- Google’s Search Quality Raters: A large team of human raters evaluates the quality of search results. Their ratings do not rank individual pages directly; they are used to judge whether changes to the ranking algorithms make results more relevant and helpful.

- OpenAI’s RLHF Process: This is the core of how models like ChatGPT were refined. Humans ranked different AI-generated responses, teaching the model what constitutes a good, helpful, and harmless answer.

- Amazon Mechanical Turk: This is a crowdsourcing marketplace that essentially provides “human intelligence as a service.” Businesses use it to get human input on tasks machines can’t do well, like labeling images for a computer vision model or transcribing audio with complex dialects.

When is Human in the Loop necessary in AI applications?

It’s necessary whenever the cost of failure is high.

Ask yourself this question:

“What happens if the AI gets this wrong?”

If the answer involves financial loss, physical harm, serious legal trouble, or ethical violations, you need a human in the loop.

Essential domains include:

- Healthcare: Diagnosing diseases, interpreting medical scans, and recommending treatment plans.

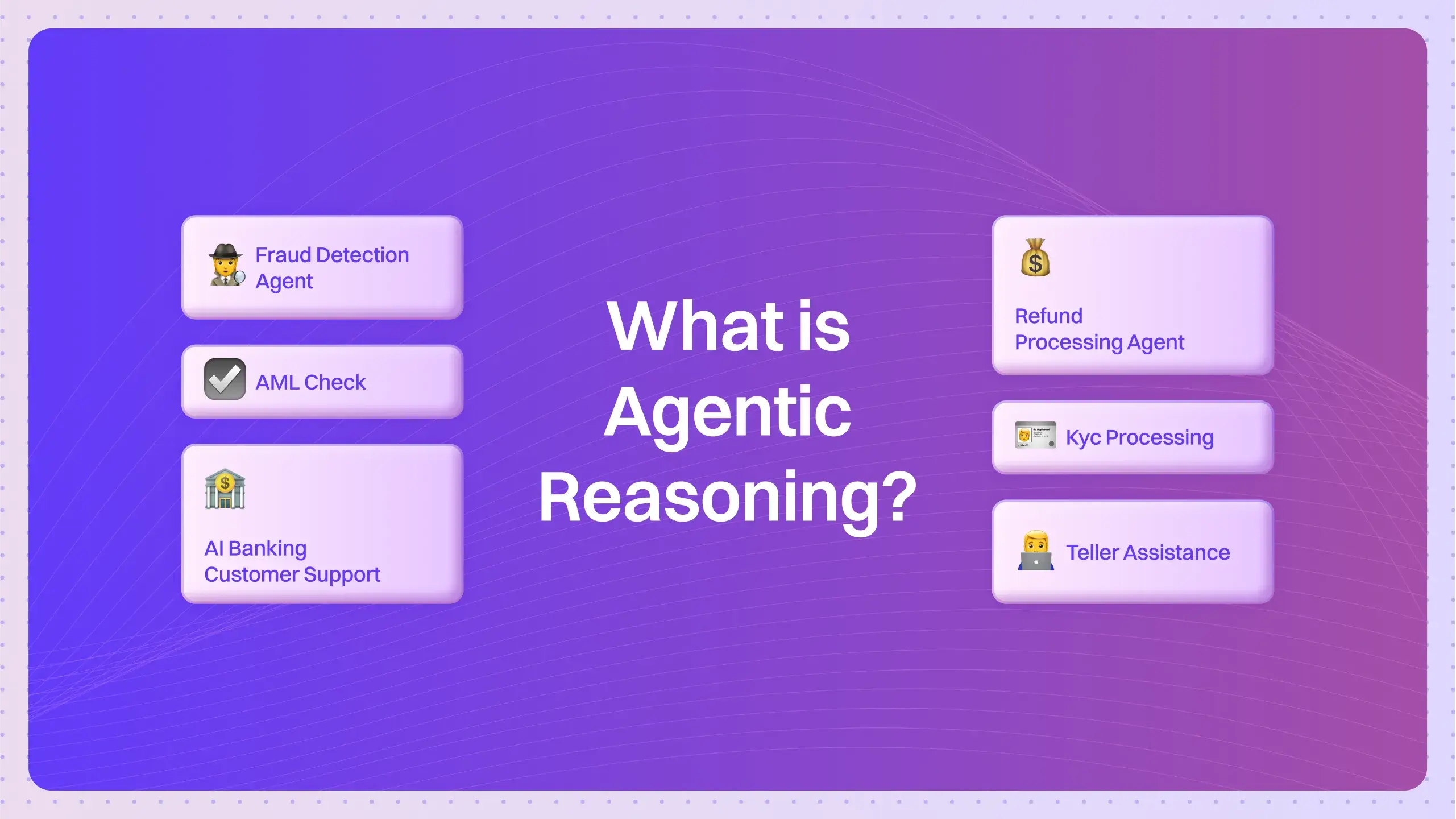

- Finance: Approving loans, detecting complex fraud, and making major investment decisions.

- Legal: Reviewing contracts for critical clauses and conducting e-discovery for litigation.

- Autonomous Systems: Piloting vehicles, drones, or any machine operating in a dynamic, unpredictable environment.

- Customer Service: Handling highly emotional or complex customer complaints that an automated chatbot can’t resolve.

In these areas, full automation isn’t just risky; it’s irresponsible.

What technical mechanisms are used for Human in the Loop?

The core mechanisms are less about general-purpose coding and more about specialized evaluation and feedback systems.

Developers use specific frameworks to make the human-AI partnership seamless and efficient.

- Active Learning Frameworks: This is a key mechanism. The AI model estimates how uncertain it is about each prediction. When it encounters a data point it’s unsure about, it flags that point and requests a label from a human. This focuses human effort where it’s needed most, making the entire training process more efficient.

- Hybrid Intelligence Architectures: These are system designs built from the ground up to combine machine learning with human expertise. The goal is to create a workflow where the AI and human can pass tasks back and forth smoothly, each contributing their unique strengths to achieve a result that neither could accomplish alone.

- Human-AI Collaboration Interfaces: This is the practical, user-facing part. It’s the dashboard, the flagging tool, or the validation screen that the human expert actually uses. A well-designed interface is critical for enabling fast, accurate, and low-friction feedback loops and intervention protocols.
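As a rough illustration of the active learning idea, here is a minimal uncertainty-sampling sketch. It assumes the model exposes class probabilities for each unlabeled item and uses entropy as the uncertainty score. The function names, item IDs, and probabilities are made up for the example, and other uncertainty measures would work just as well.

```python
import math
from typing import Dict, List


def entropy(probs: List[float]) -> float:
    """Shannon entropy of a probability vector; higher means less certain."""
    return -sum(p * math.log(p) for p in probs if p > 0)


def select_for_labeling(predictions: Dict[str, List[float]], budget: int) -> List[str]:
    """Return the `budget` item IDs the model is least sure about."""
    ranked = sorted(predictions, key=lambda k: entropy(predictions[k]), reverse=True)
    return ranked[:budget]


# Hypothetical class probabilities for four unlabeled images.
pool = {
    "img_001": [0.98, 0.01, 0.01],
    "img_002": [0.40, 0.35, 0.25],
    "img_003": [0.55, 0.44, 0.01],
    "img_004": [0.90, 0.05, 0.05],
}

queue = select_for_labeling(pool, budget=2)
print(queue)  # ['img_002', 'img_003']
```

Only the two most ambiguous images reach the human labeler; the confident predictions never take up reviewer time.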

Quick Test: Can you spot the risk?

Imagine a bank deploys a fully autonomous AI system to approve or deny mortgage applications. The system was trained on historical loan data. It denies an application for a qualified candidate from a minority neighborhood because the AI identified a pattern of historical defaults from that specific zip code, confusing correlation with causation.

How could a Human in the Loop approach have prevented this biased outcome?

A HITL system could have flagged this application as an edge case: one where the applicant’s strong individual financial profile conflicted with a negative geographical pattern. A human loan officer would then review the case, recognize the potential for bias, and make a fair decision based on the applicant’s merit, preventing discrimination and providing critical feedback to retrain the model.
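One way to picture that edge-case check is a simple routing rule. The sketch below is purely illustrative: the `Application` fields, the two scores, and both thresholds are hypothetical, and a real lender would need a properly validated fairness process rather than a single rule.

```python
from dataclasses import dataclass


@dataclass
class Application:
    applicant_score: float  # hypothetical 0-1 score from individual financials only
    area_risk: float        # hypothetical 0-1 risk derived from zip-code history
    model_decision: str     # "approve" or "deny"


def needs_human_review(app: Application,
                       strong_profile: float = 0.7,
                       high_area_risk: float = 0.6) -> bool:
    """Flag denials where a strong individual profile conflicts with a
    negative geographic signal, so a loan officer decides instead."""
    conflict = (app.applicant_score >= strong_profile
                and app.area_risk >= high_area_risk)
    return conflict and app.model_decision == "deny"


qualified_but_denied = Application(applicant_score=0.88, area_risk=0.75,
                                   model_decision="deny")
weak_and_denied = Application(applicant_score=0.30, area_risk=0.75,
                              model_decision="deny")

print(needs_human_review(qualified_but_denied))  # True
print(needs_human_review(weak_and_denied))       # False
```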

Deep Dive FAQs

What industries benefit most from Human in the Loop approaches?

Healthcare, finance, legal services, e-commerce (for fraud detection and recommendations), and transportation (especially autonomous vehicles) are leading the way. Any industry dealing with unstructured data and high-stakes decisions is a prime candidate.

How does Human in the Loop impact AI safety and alignment?

It’s one of the most powerful tools for both. HITL allows for real-time course correction, preventing unsafe actions. More importantly, continuous human feedback (as in RLHF) is one of the main methods used to “align” large language models with human values, steering them toward behavior we find helpful and not harmful.

What are the challenges of implementing effective Human in the Loop systems?

Key challenges include scalability (it can be slow and expensive to have humans review everything), maintaining consistency among human reviewers, and the potential for human fatigue or bias to creep into the process. Designing efficient interfaces is also non-trivial.
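Reviewer consistency, at least, can be measured. A common statistic for two reviewers is Cohen’s kappa, which corrects raw agreement for the agreement expected by chance. The sketch below is a plain-Python version with made-up moderation labels, offered as one possible check rather than a prescribed one.

```python
from collections import Counter
from typing import Sequence


def cohens_kappa(a: Sequence[str], b: Sequence[str]) -> float:
    """Chance-corrected agreement between two reviewers on the same items."""
    if len(a) != len(b) or len(a) == 0:
        raise ValueError("both reviewers must label the same non-empty item list")
    n = len(a)
    observed = sum(x == y for x, y in zip(a, b)) / n
    counts_a, counts_b = Counter(a), Counter(b)
    # Probability that both pick the same label if they labeled independently.
    expected = sum(counts_a[l] * counts_b[l] for l in set(a) | set(b)) / (n * n)
    if expected == 1.0:  # both reviewers used one identical label throughout
        return 1.0
    return (observed - expected) / (1 - expected)


# Hypothetical labels from two content moderators on six posts.
reviewer_1 = ["ok", "ok", "harmful", "ok", "harmful", "ok"]
reviewer_2 = ["ok", "harmful", "harmful", "ok", "harmful", "ok"]

kappa = cohens_kappa(reviewer_1, reviewer_2)
print(round(kappa, 3))  # 0.667
```

A kappa near 1 means reviewers agree well beyond chance. A value near 0 suggests the guidelines are ambiguous or the reviewers need calibration.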

How does Reinforcement Learning from Human Feedback (RLHF) work as a Human in the Loop approach?

RLHF is a specific, advanced form of HITL. First, humans write a high-quality dataset of desired outputs, which is used to fine-tune the model with ordinary supervised learning. Then, humans rank different outputs from the AI model. This ranking data is used to train a “reward model” that predicts which outputs people prefer. Finally, the main AI model is fine-tuned using reinforcement learning to maximize the score from this reward model, effectively steering it toward producing outputs that humans prefer.
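The reward-model step is the least intuitive, so here is a small sketch of the kind of objective often used for it. It assumes a Bradley-Terry style pairwise loss, `-log(sigmoid(r_preferred - r_rejected))`, which is a common choice but not the only one. The reward values are invented, and a real reward model would be a neural network rather than hand-picked numbers.

```python
import math


def pairwise_ranking_loss(reward_preferred: float, reward_rejected: float) -> float:
    """-log(sigmoid(margin)), computed in a numerically stable way.

    The loss is small when the reward model scores the human-preferred
    response above the rejected one, and large when it gets the order wrong.
    """
    margin = reward_preferred - reward_rejected
    if margin >= 0:
        return math.log1p(math.exp(-margin))
    return -margin + math.log1p(math.exp(margin))


# Hypothetical scores for a pair where humans preferred response A over B.
agrees_with_humans = pairwise_ranking_loss(reward_preferred=2.0, reward_rejected=0.0)
disagrees_with_humans = pairwise_ranking_loss(reward_preferred=0.0, reward_rejected=2.0)

print(agrees_with_humans < disagrees_with_humans)  # True
```

Minimizing this loss over many ranked pairs pushes the reward model to score responses the way the human rankers did.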

What is the role of Human in the Loop in ethical AI development?

It’s central. HITL provides a mechanism for ethical oversight, allowing humans to intervene when an AI is about to make a biased, unfair, or discriminatory decision. It moves the responsibility for ethical outcomes from a purely algorithmic process back to an accountable human agent.

How does Human in the Loop affect AI system scalability?

This is a trade-off. Adding human review slows down a process that would otherwise be fully automated. However, the goal of many HITL systems is to use human input strategically (for example, via active learning) only on the most difficult or uncertain cases, preserving scalability while still reaping the benefits of human judgment.

What skills are required for humans participating in Human in the Loop systems?

This depends on the task. For general tasks like image labeling, it requires attention to detail. For specialized tasks like medical diagnosis or legal review, it requires deep domain expertise. All roles require consistency and an understanding of the AI’s goals and limitations.

How can organizations measure the effectiveness of their Human in the Loop processes?

Metrics include the rate of AI model improvement, the reduction in critical errors, the time-to-decision for complex cases, and the overall accuracy of the hybrid system compared to a fully automated or fully manual process.
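A few of these metrics are easy to compute once each case records the ground truth, the model’s answer, and any human override. The sketch below assumes exactly that record layout. The `Case` fields and the sample data are hypothetical, and real ground truth is often only available after the fact.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class Case:
    truth: str
    model_label: str
    human_label: Optional[str] = None  # set only if a human reviewed the case


def final_label(case: Case) -> str:
    """The hybrid system's answer: the human's label when there is one."""
    return case.human_label if case.human_label is not None else case.model_label


def summarize(cases: List[Case]) -> Dict[str, float]:
    n = len(cases)
    return {
        "automated_accuracy": sum(c.model_label == c.truth for c in cases) / n,
        "hybrid_accuracy": sum(final_label(c) == c.truth for c in cases) / n,
        "review_rate": sum(c.human_label is not None for c in cases) / n,
    }


cases = [
    Case("A", "A"),
    Case("B", "A", human_label="B"),  # human caught a model error
    Case("A", "A"),
    Case("B", "B"),
    Case("A", "B", human_label="A"),  # human caught a model error
]

report = summarize(cases)
print(report)
```

Comparing `hybrid_accuracy` with `automated_accuracy` at a given `review_rate` shows how much accuracy each unit of reviewer effort is buying.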

What is the future of Human in the Loop as AI capabilities advance?

The human’s role will likely shift. Instead of focusing on simple data labeling, humans will act more as high-level supervisors, teachers, and ethicists for increasingly capable AI systems. The focus will move from correcting simple mistakes to shaping complex AI behavior and goals.

How does Human in the Loop compare to Human over the Loop approaches?

Human in the Loop implies active, continuous participation. Human over the Loop (or on-the-loop) typically refers to a more passive oversight role, where the human monitors the AI’s overall performance and intervenes only in case of a major failure or system-level alert.
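The difference is easiest to see as two control flows. In this deliberately simplified sketch, `approve` and `is_alarm` are hypothetical callbacks standing in for a human decision and a monitoring alert.

```python
from typing import Callable, List


def run_in_the_loop(actions: List[str], approve: Callable[[str], bool]) -> List[str]:
    """In the loop: nothing executes until a human approves it."""
    return [action for action in actions if approve(action)]


def run_on_the_loop(actions: List[str], is_alarm: Callable[[str], bool]) -> List[str]:
    """On the loop: actions execute by default; the supervising human
    halts the whole run only when an alert fires."""
    executed = []
    for action in actions:
        if is_alarm(action):
            break  # human steps in and stops the system
        executed.append(action)
    return executed


planned = ["read", "delete", "write"]

print(run_in_the_loop(planned, approve=lambda a: a != "delete"))   # ['read', 'write']
print(run_on_the_loop(planned, is_alarm=lambda a: a == "delete"))  # ['read']
```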

The conversation isn’t about humans versus machines. It’s about designing systems where humans and machines do better work together.

As AI becomes more integrated into our lives, the human in the loop won’t just be a feature; it will be a requirement for responsible innovation.