A massive, powerful AI model is a brilliant mind trapped in a slow, expensive body. It’s useless if you can’t deploy it where it’s needed most.

Knowledge Distillation is a technique where a smaller AI model (the student) is trained to mimic the behavior and performance of a larger, more powerful model (the teacher), allowing for more efficient deployment while preserving most of the original capabilities.

This is like a master chef (the teacher model) training an apprentice (the student model). The master doesn’t just give the apprentice a recipe book (the hard data labels). Instead, the master shows the apprentice how to cook, explaining the nuances, the instincts, the feel of the process. The apprentice learns to prepare similar quality dishes without needing the master’s years of experience or a massive, five-star kitchen.

This isn’t just a nice-to-have optimization. It’s the key to getting state-of-the-art AI out of the lab and onto your phone, into your car, and inside your applications without breaking the bank or draining your battery.

What is knowledge distillation in AI?

It’s a form of model compression. But instead of just squishing the model, you’re transferring its intelligence. You start with a large, cumbersome, but highly accurate “teacher” model. This model might have billions of parameters and require a cluster of GPUs to run.

Then, you take a much smaller, more nimble “student” model. The student’s goal isn’t just to learn from the raw data. Its primary goal is to learn how to think like the teacher. It’s trained to replicate the teacher’s outputs, capturing the nuances of its decision-making process.

How does knowledge distillation work?

The magic is in the “soft labels.” Standard training uses “hard labels.” The data says “this is a cat,” so training pushes the model to output cat = 1 and 0 for every other class, such as dog; any probability it assigns elsewhere counts against it.

But the teacher model is more sophisticated. It might look at a picture of a chihuahua and output dog = 0.85, cat = 0.10, rat = 0.05. This output, a probability distribution, is the “soft label.”

It tells the student model not just what something is, but what it’s similar to. It reveals that a chihuahua shares some features with a cat or a rat. This is rich, nuanced information that is completely lost with hard labels. The student model learns this richer representation of the data, making it surprisingly powerful for its small size.
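To make the “lost information” point concrete, here is a small sketch in plain Python (an illustrative choice; nothing here comes from a specific library). It scores two hypothetical students that are equally confident the chihuahua is a dog but spread their remaining probability differently. Against the hard label they get exactly the same loss; against the teacher’s soft label, the student whose “mistakes” resemble the teacher’s gets the lower loss.

```python
import math

def cross_entropy(target, predicted):
    """H(target, predicted) = -sum_i target_i * log(predicted_i)."""
    return -sum(t * math.log(p) for t, p in zip(target, predicted) if t > 0)

# Class order: [dog, cat, rat]
hard_label = [1.0, 0.0, 0.0]      # the dataset just says "dog"
soft_label = [0.85, 0.10, 0.05]   # the teacher's output for the chihuahua

# Two hypothetical students, equally sure it is a dog,
# but with different ideas about which wrong classes are plausible.
student_a = [0.85, 0.10, 0.05]    # shares the teacher's similarity structure
student_b = [0.85, 0.01, 0.14]    # thinks a chihuahua looks more like a rat

for name, student in [("A", student_a), ("B", student_b)]:
    hard_loss = cross_entropy(hard_label, student)  # identical for A and B
    soft_loss = cross_entropy(soft_label, student)  # lower for A than for B
    print(name, round(hard_loss, 4), round(soft_loss, 4))
```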

What are the benefits of knowledge distillation?

The practical upsides are huge.

- Reduced Model Size: Distilled models are significantly smaller, making them easier to store and deploy.

- Faster Inference: Fewer parameters mean faster calculations. This is critical for real-time applications like live video analysis or instant language translation.

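- Lower Computational Cost: Smaller, faster models need less energy and cheaper hardware to run in production, which directly impacts your cloud computing bill. Training the student still requires running the teacher to produce its soft labels, so the savings show up mainly at inference time.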

- Edge Deployment: It makes running sophisticated AI on resource-constrained devices like smartphones, IoT sensors, and cars not just possible, but practical.

Hugging Face’s DistilBERT, for example, is 40% smaller and 60% faster than its teacher (BERT-base) while retaining about 97% of its language-understanding performance on the GLUE benchmark.

When should knowledge distillation be used?

It’s a strategic choice for specific scenarios. You should use it when:

- You need to deploy a model on devices with limited memory or processing power.

- Your application requires very low latency for its predictions.

- The operational cost of running a massive teacher model in production is too high.

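- You have a powerful but proprietary or black-box model and want to create a smaller, open model that approximates its behavior. If the teacher exposes only its final answers rather than its probabilities, the student gets less signal to learn from.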

How does knowledge distillation differ from other compression techniques?

It’s a fundamentally different philosophy.

- Pruning is like performing surgery on a model, carefully removing parameters or connections that are deemed least important.

- Quantization is like reducing the precision of the numbers in the model, using fewer bits to represent each weight (for example, 8-bit integers instead of 32-bit floats), which shrinks the size.

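These methods shrink the existing model. Knowledge distillation trains a separate, smaller model, often with a different architecture, teaching it the wisdom of the larger one. It transfers behavior, not just structure. The approaches are also complementary: a distilled student can still be pruned or quantized afterward.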

What are the limitations of knowledge distillation?

It’s not a free lunch. The student rarely outperforms its teacher. There’s usually a performance gap, and the goal is to make that gap as small as possible. The process itself can be complex: choosing the right student architecture and tuning the distillation process requires expertise, and the teacher still has to be run over the training data to produce soft labels. If the teacher model has biases, it will dutifully teach those same biases to the student.

How is knowledge distillation used in AI agent systems?

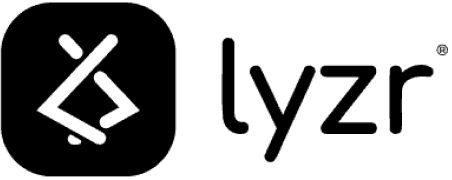

It allows for more agile and efficient agents. An AI agent might rely on a massive, slow “planner” model to think through complex, multi-step strategies. But for immediate, reflexive actions, waiting for the planner is too slow.

Knowledge distillation can be used to train a small, fast “executor” agent. This student agent learns the reactive patterns of the large planner. When a quick decision is needed, the executor can act instantly, mimicking the likely decision of its much larger teacher, while the planner works on the next big-picture strategy in the background.

What technical mechanisms are used for knowledge distillation?

The core idea of soft labels can be enhanced with more advanced techniques. It’s about transferring more than just the final output.

- Temperature Scaling: This is a key trick. The teacher’s raw output scores (logits) are divided by a “temperature” T before the softmax turns them into probabilities. A temperature above 1 makes the distribution smoother, forcing the teacher to reveal more about the relationships between classes and giving the student more subtle signals to learn from. The student’s outputs are softened with the same temperature during training.

- Attention Transfer: Modern models like transformers use “attention mechanisms” to decide which parts of the input data are most important. Instead of just matching the final prediction, you can train the student to mimic the teacher’s attention patterns. The student learns not just what to predict, but where to look.

- Adversarial Distillation: This introduces a third model, a “discriminator,” that tries to tell the teacher’s outputs from the student’s. The student is trained to mimic the teacher so closely that the discriminator can’t tell them apart. This competitive setup pushes the student to capture the teacher’s behavior more precisely.
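The temperature and soft-label ideas are usually combined into a single training loss. The sketch below follows the widely used formulation from Hinton, Vinyals and Dean (2015): a soft-target term toward the teacher’s softened outputs, scaled by T², plus ordinary cross-entropy on the true label. The soft term is written here as KL divergence, which has the same gradient with respect to the student as cross-entropy against the teacher’s soft targets. It is plain Python on a single example for readability; `distillation_loss`, `alpha`, and the default `temperature=4.0` are hypothetical choices, not any library’s API, and a real training loop would compute this on batched tensors with automatic differentiation.

```python
import math

def softmax(logits, temperature=1.0):
    """Softmax of logits / temperature, shifted by the max for numerical stability."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def kl_divergence(p, q):
    """KL(p || q) for discrete distributions; terms where p_i == 0 contribute nothing."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)

def distillation_loss(student_logits, teacher_logits, true_class,
                      temperature=4.0, alpha=0.5):
    """Hypothetical helper: alpha * soft (teacher) term + (1 - alpha) * hard (label) term."""
    # Soft term: both models are softened with the SAME temperature, and the
    # student is pulled toward the teacher's distribution. Scaling by T^2 keeps
    # this term's gradients roughly comparable in size as T changes.
    p_teacher = softmax(teacher_logits, temperature)
    p_student = softmax(student_logits, temperature)
    soft = kl_divergence(p_teacher, p_student) * temperature ** 2
    # Hard term: ordinary cross-entropy against the ground-truth class at T = 1.
    hard = -math.log(softmax(student_logits)[true_class])
    return alpha * soft + (1 - alpha) * hard

# Illustrative logits for the classes [dog, cat, rat]; not from any real model.
teacher_logits = [5.0, 2.8, 2.1]
student_logits = [3.0, 0.5, 1.5]
print(distillation_loss(student_logits, teacher_logits, true_class=0))
```

Setting `alpha=1.0` trains purely on the teacher’s signal, while `alpha=0.0` reduces to standard hard-label training.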

Quick Check: What’s the right tool for the job?

You’re a startup with a groundbreaking fraud detection model. It’s incredibly accurate but takes two seconds to process a transaction—far too slow for real-time payment approval. Your server costs are already high. Do you just buy a bigger server, or do you use knowledge distillation?

Answer: Knowledge distillation. Buying a bigger server might make it faster, but it won’t solve the core efficiency problem and will increase your costs. By distilling your large model into a smaller, faster one, you can achieve real-time speeds and reduce your operational expenses, making your product commercially viable.

Deep Dive: Questions That Go Deeper

How does temperature affect knowledge distillation?

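The temperature T divides the logits before the softmax, and T = 1 gives the model’s ordinary probabilities. A higher temperature creates a softer, flatter distribution, emphasizing the knowledge in less likely predictions. As the temperature approaches 0, the distribution sharpens toward a one-hot, “hard” label. Finding the right temperature is a key part of tuning the process.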
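A quick way to see this is to apply several temperatures to the same logits. The logits below are made up for illustration. The sketch prints each softened distribution and its entropy (a measure of how flat it is), which grows as T rises, while the top-ranked class never changes, because dividing by a positive T preserves the order of the logits.

```python
import math

def softmax(logits, temperature=1.0):
    """Softmax of logits / temperature, shifted by the max for numerical stability."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def entropy(p):
    """Shannon entropy in nats: higher means a flatter, 'softer' distribution."""
    return -sum(pi * math.log(pi) for pi in p if pi > 0)

logits = [5.0, 2.8, 2.1]  # hypothetical teacher logits for [dog, cat, rat]
for t in (0.5, 1.0, 2.0, 5.0):
    probs = softmax(logits, t)
    print(f"T={t}: {[round(p, 3) for p in probs]}  entropy={entropy(probs):.3f}")
```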

Can knowledge distillation work across different model architectures?

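Yes. This is one of its most powerful features. When the student only has to match the teacher’s outputs, a massive Transformer-based teacher can distill its knowledge into a much simpler CNN or LSTM-based student, as long as both predict over the same outputs and the student has enough capacity to learn the target function. Techniques that match internal signals, such as attention transfer, are harder to apply across architectures, because the two models’ internal representations may not line up.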

What metrics should be used to evaluate distilled models?

You evaluate them on two fronts: efficiency (model size, inference speed, power consumption) and performance (accuracy, F1-score, etc., on a test set). The goal is to find the best trade-off between the two.
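As a rough illustration of reading both fronts together, the hypothetical helper below turns a teacher/student comparison into a few numbers: how much quality the student keeps, how much smaller it is, and how much faster it responds. Accuracy stands in for whatever task metric you actually use (F1 and so on), and every number in the example is made up purely to show the arithmetic.

```python
def accuracy(predictions, labels):
    """Fraction of predictions that match the labels."""
    return sum(p == y for p, y in zip(predictions, labels)) / len(labels)

def tradeoff_report(labels, teacher_preds, student_preds,
                    teacher_params, student_params,
                    teacher_latency_ms, student_latency_ms):
    """Hypothetical helper summarizing efficiency gains against the quality kept."""
    teacher_acc = accuracy(teacher_preds, labels)
    student_acc = accuracy(student_preds, labels)
    return {
        "teacher_accuracy": teacher_acc,
        "student_accuracy": student_acc,
        # Share of the teacher's quality the student keeps (1.0 = no loss).
        "accuracy_retained": student_acc / teacher_acc,
        # Fraction of parameters removed (0.75 = 75% smaller).
        "size_reduction": 1 - student_params / teacher_params,
        # How many times faster the student answers one request.
        "speedup": teacher_latency_ms / student_latency_ms,
    }

# Made-up toy numbers, purely to show the arithmetic.
labels        = [0, 1, 1, 0, 1, 0, 1, 1, 0, 1]
teacher_preds = [0, 1, 1, 0, 1, 0, 1, 0, 0, 1]  # 9 of 10 correct
student_preds = [0, 1, 0, 0, 1, 0, 1, 0, 0, 1]  # 8 of 10 correct
report = tradeoff_report(labels, teacher_preds, student_preds,
                         teacher_params=100_000_000, student_params=25_000_000,
                         teacher_latency_ms=40.0, student_latency_ms=10.0)
print(report)
```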

How does ensemble distillation differ from single-teacher distillation?

Instead of learning from one teacher, the student learns from an ensemble (a group) of teacher models. This often results in a more robust student, as it learns to capture the consensus knowledge of multiple experts.
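One simple way to build the ensemble’s soft target is to average the teachers’ probability distributions, optionally giving more trusted teachers a higher weight. This is a sketch under that assumption (averaging logits or learning the weights are other options); `average_soft_labels` is a hypothetical name, and the teacher outputs are invented for illustration. The student is then trained against `target` exactly as it would be against a single teacher’s soft label.

```python
def average_soft_labels(teacher_distributions, weights=None):
    """Combine several teachers' probability vectors into one soft target.

    With no weights given, every teacher counts equally. Weights should sum
    to 1 so the result is still a valid probability distribution.
    """
    n = len(teacher_distributions)
    if weights is None:
        weights = [1.0 / n] * n
    num_classes = len(teacher_distributions[0])
    return [sum(w * dist[c] for w, dist in zip(weights, teacher_distributions))
            for c in range(num_classes)]

# Hypothetical outputs of three teachers for the classes [dog, cat, rat].
teachers = [
    [0.85, 0.10, 0.05],
    [0.70, 0.25, 0.05],
    [0.90, 0.02, 0.08],
]
target = average_soft_labels(teachers)
print([round(p, 4) for p in target])
```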

Can knowledge distillation improve model robustness to adversarial attacks?

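Sometimes. By learning from the smoother, softer output distributions of a teacher, the student model can develop smoother decision boundaries, which can make it more resistant to small, malicious perturbations in the input data. Treat this as a side benefit rather than a defense, though: “defensive distillation,” an early method built on this idea, was later shown to be bypassed by stronger attacks.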

What are the trade-offs between model size and performance in distilled models?

It’s a direct trade-off. Generally, the smaller you make the student model, the larger the performance drop will be compared to the teacher. The art is in designing a student architecture that is as small as possible while still being large enough to capture the essential knowledge.

How is offline distillation different from online distillation?

In offline distillation, the teacher model is pre-trained and frozen. In online distillation, there is no frozen teacher: the teacher and student (or a group of peer models) are trained simultaneously and learn from each other’s predictions as training progresses, creating a more dynamic and collaborative learning process.

What role does knowledge distillation play in federated learning?

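In federated learning, raw data stays on local devices and only model updates travel over the network. A large global model (teacher) can distill its knowledge into a smaller student model that gets sent to the local devices for training; because the student has fewer parameters, each round of downloads and uploads is cheaper. Some approaches go further and have devices share predictions on a common dataset instead of model weights. The privacy benefit comes from keeping data local, which distillation preserves rather than provides.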

Knowledge distillation is the great equalizer for AI. It takes the immense power of foundation models and repackages it into a form that can run almost anywhere, turning raw capability into practical, accessible intelligence.