Without a standardized report card, AI progress is just guesswork.

Model Benchmarking is the process of systematically testing and comparing AI models against standardized tasks and metrics to measure their performance, capabilities, and limitations.

It’s a standardized exam for AI systems.

Just as students take tests to objectively measure their knowledge against established criteria, AI models undergo benchmark tests.

These tests evaluate how well they perform specific tasks compared to other models.

This isn’t just an academic exercise. It’s the core discipline that separates real capability from marketing hype and ensures we are building safer, more effective AI.

What is Model Benchmarking in AI?

It’s the scientific method applied to AI development.

Instead of just having a “feeling” that one model is better than another, benchmarking provides objective, quantifiable proof.

The process involves a few key components:

- Standardized Datasets: A fixed set of data that all models are tested on. This ensures a level playing field.

- Specific Tasks: Clearly defined problems the model must solve, like answering questions, summarizing text, or writing code.

- Performance Metrics: The “scores” used to grade the model. This could be accuracy, speed, fairness, or robustness.

- Comparison: The results are then compared against other models or a baseline standard.

This entire framework allows developers to say, “Our model scored 85% on this reasoning benchmark, while the previous version scored 78%.”

That’s a concrete measure of progress.
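To make those components concrete, here is a minimal Python sketch of a benchmark harness. It assumes a “model” is simply a function from prompt to answer and uses exact-match accuracy as the metric. The dataset, the `Example` type, and the two model stubs are hypothetical stand-ins, not a real benchmark.

```python
from dataclasses import dataclass
from typing import Callable


# One benchmark item: a fixed input and the reference answer it is graded against.
@dataclass(frozen=True)
class Example:
    prompt: str
    answer: str


# Assumption: a "model" is any function that maps a prompt to an answer string.
Model = Callable[[str], str]


def exact_match_accuracy(model: Model, dataset: list[Example]) -> float:
    """Fraction of items where the normalized output equals the reference answer."""
    correct = sum(
        model(ex.prompt).strip().lower() == ex.answer.strip().lower()
        for ex in dataset
    )
    return correct / len(dataset)


def compare(models: dict[str, Model], dataset: list[Example]) -> dict[str, float]:
    """Score every model on the same dataset with the same metric."""
    return {name: exact_match_accuracy(m, dataset) for name, m in models.items()}


# Hypothetical fixed dataset (the "standardized exam").
DATASET = [
    Example("2 + 2 = ?", "4"),
    Example("Capital of France?", "Paris"),
    Example("Opposite of hot?", "cold"),
    Example("3 * 3 = ?", "9"),
]


# Two stub "model versions" standing in for real models.
def model_v1(prompt: str) -> str:
    return {"2 + 2 = ?": "4", "Capital of France?": "Paris"}.get(prompt, "unknown")


def model_v2(prompt: str) -> str:
    answers = {"2 + 2 = ?": "4", "Capital of France?": "paris", "Opposite of hot?": "Cold"}
    return answers.get(prompt, "unknown")


scores = compare({"v1": model_v1, "v2": model_v2}, DATASET)
print(scores)  # {'v1': 0.5, 'v2': 0.75}
```

Because both versions face the same fixed dataset and the same metric, the gap between their scores reflects the models, not a change in the test.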

Why is Model Benchmarking important for AI development?

It’s the bedrock of accountability and progress in the AI field.

Without it, we’d be flying blind, relying on impressive but unverified demos.

Benchmarking serves several critical functions:

Tracking Progress Over Time

It allows us to see how the field is evolving. Companies use it to measure their own internal improvements. For example, OpenAI reported results on MMLU (Massive Multitask Language Understanding), a benchmark spanning 57 subjects, to show that GPT-4 scored substantially higher than GPT-3.5.

Informing Decision-Making

For businesses, benchmarking helps answer the question: “Which model is right for my specific use case?” By comparing models on relevant benchmarks, a company can choose the most cost-effective and capable option.

Driving Competition and Innovation

Public leaderboards, such as Hugging Face’s Open LLM Leaderboard, create a competitive environment. This pushes research labs to innovate and develop more powerful models. Google DeepMind often highlights Gemini’s performance on benchmarks like BIG-Bench to showcase its advanced reasoning capabilities.

Identifying Weaknesses and Biases

A good benchmark doesn’t just tell you what a model does well. It exposes its flaws. For instance, Anthropic evaluates its model, Claude, on how well it adheres to the principles of being helpful, harmless, and honest.

What are the key metrics used in AI Model Benchmarking?

The “grade” an AI gets depends entirely on the subject it’s being tested on.

There’s no single universal metric. The metrics are tailored to the task.

Some common ones you’ll see include:

- Accuracy: The most straightforward metric. How many questions did the model get right? (Expressed as a percentage).

- F1-Score: The harmonic mean of precision and recall. It is more informative than accuracy when the data is imbalanced, because a model can reach high accuracy there simply by always predicting the most common class.

- BLEU Score: Used mainly for machine translation and other text generation tasks. It measures how many word sequences (n-grams) the model’s output shares with one or more human-written reference texts.

- Perplexity: For language models, this measures how well the model predicts a sample of text. It is the exponential of the average negative log-likelihood per token, so a lower perplexity score is better.
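Here is a rough Python sketch of these four metrics, written from scratch for illustration. The BLEU function is a simplified single-reference, sentence-level variant with uniform weights and no smoothing; standard implementations (corpus-level, with smoothing options) will give different numbers. The perplexity function assumes you already have the model’s natural-log probability for each token.

```python
import math
from collections import Counter


def accuracy(preds, labels):
    """Fraction of predictions that exactly match the labels."""
    return sum(p == y for p, y in zip(preds, labels)) / len(labels)


def f1_score(preds, labels, positive=1):
    """Binary F1: harmonic mean of precision and recall for the positive class."""
    pairs = list(zip(preds, labels))
    tp = sum(p == positive and y == positive for p, y in pairs)
    fp = sum(p == positive and y != positive for p, y in pairs)
    fn = sum(p != positive and y == positive for p, y in pairs)
    if tp == 0:
        return 0.0  # with no true positives, F1 is defined here as 0
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)


def perplexity(token_log_probs):
    """exp(mean negative log-likelihood per token); inputs are natural-log probabilities."""
    return math.exp(-sum(token_log_probs) / len(token_log_probs))


def simple_bleu(candidate, reference, max_n=4):
    """Simplified sentence-level BLEU: one reference, uniform weights, no smoothing."""
    cand, ref = candidate.split(), reference.split()
    log_precisions = []
    for n in range(1, max_n + 1):
        cand_ngrams = Counter(tuple(cand[i:i + n]) for i in range(len(cand) - n + 1))
        ref_ngrams = Counter(tuple(ref[i:i + n]) for i in range(len(ref) - n + 1))
        # Clipping: each n-gram is credited at most as often as it occurs in the reference.
        overlap = sum(min(count, ref_ngrams[g]) for g, count in cand_ngrams.items())
        total = sum(cand_ngrams.values())
        if overlap == 0 or total == 0:
            return 0.0
        log_precisions.append(math.log(overlap / total))
    # Brevity penalty: outputs shorter than the reference are penalized.
    bp = 1.0 if len(cand) > len(ref) else math.exp(1 - len(ref) / len(cand))
    return bp * math.exp(sum(log_precisions) / max_n)


labels = [0] * 8 + [1, 1]  # imbalanced: only 2 positives out of 10
preds = [0] * 10           # a "model" that always predicts the majority class
print(accuracy(preds, labels))                    # 0.8
print(f1_score(preds, labels))                    # 0.0
print(round(perplexity([math.log(0.5)] * 4), 6))  # 2.0
print(simple_bleu("the cat sat on the mat", "the cat sat on the mat"))  # 1.0
```

The imbalanced example shows why F1 exists: always guessing the majority class earns 80% accuracy but an F1 of zero on the rare class that actually matters.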

But modern benchmarks go further, measuring things like:

- Robustness: How does the model perform when inputs are noisy or slightly altered?

- Fairness: Does the model show bias against certain demographic groups?

- Efficiency: How much computational power and time does it take to get an answer?
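Robustness and efficiency can be measured in the same loop: run the model on clean inputs, then on perturbed copies, and time each call. This sketch assumes a simple adjacent-letter-swap perturbation and a deliberately brittle keyword-matching stub (`brittle_model` is hypothetical); real robustness suites use richer perturbations such as paraphrases.

```python
import random
import time


def perturb(text, rng, swap_prob=0.1):
    """Add character-level noise by swapping adjacent letters with probability swap_prob."""
    chars = list(text)
    i = 0
    while i < len(chars) - 1:
        if chars[i].isalpha() and chars[i + 1].isalpha() and rng.random() < swap_prob:
            chars[i], chars[i + 1] = chars[i + 1], chars[i]
            i += 2  # move past the swapped pair
        else:
            i += 1
    return "".join(chars)


def evaluate(model, dataset, rng=None):
    """Return (accuracy, mean latency in seconds); inputs are perturbed if rng is given."""
    correct, elapsed = 0, 0.0
    for prompt, answer in dataset:
        text = perturb(prompt, rng) if rng is not None else prompt
        start = time.perf_counter()
        output = model(text)
        elapsed += time.perf_counter() - start
        correct += output == answer
    return correct / len(dataset), elapsed / len(dataset)


def brittle_model(prompt):
    """Hypothetical stub that only recognizes the exact keyword 'capital'."""
    return "Paris" if "capital" in prompt else "unknown"


dataset = [("What is the capital of France?", "Paris")] * 50
clean_acc, clean_latency = evaluate(brittle_model, dataset)
noisy_acc, noisy_latency = evaluate(brittle_model, dataset, rng=random.Random(0))
print(f"clean={clean_acc:.2f} noisy={noisy_acc:.2f} gap={clean_acc - noisy_acc:.2f}")
print(f"mean latency: {clean_latency * 1e6:.1f} microseconds")
```

The robustness gap is the drop from clean to noisy accuracy. For a toy function the latency is close to zero; with a real model, the same timing captures the “time” side of efficiency.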

How does Model Benchmarking differ for Large Language Models vs. other AI systems?

The scope is massively different.

Traditional AI benchmarking was often very specialized.

An image recognition model was tested on its ability to classify images, period.

A chess-playing AI was tested on its ability to win at chess.

Large Language Models (LLMs) are different.

They are generalists.

This means benchmarks for LLMs have to be much broader and more complex. Instead of testing one skill, they test a wide range of cognitive abilities.

This is the key difference between AI benchmarking and traditional software benchmarking. Traditional tests measure things like speed and memory usage. AI benchmarks measure capabilities like reasoning, comprehension, and generalization.

An LLM benchmark might include:

- Grade-school math problems.

- Bar exam legal questions.

- Code generation challenges.

- Tests of moral reasoning.

- Summarizing dense academic papers.

The goal is to get a holistic view of the model’s intelligence, not just its performance on one narrow task.

What are the limitations of current AI Model Benchmarking approaches?

Benchmarks are essential, but they are not perfect.

They are a proxy for real-world performance, not a guarantee.

Here are some of the biggest challenges:

Teaching to the Test

If a benchmark becomes too popular, developers might unintentionally train their models to excel specifically on those tasks. The model gets a high score but may lack general capabilities outside the benchmark’s scope.

Data Contamination

This is a huge problem. An LLM might have been accidentally trained on the benchmark’s questions and answers because they were scraped from the internet. In this case, the model isn’t “reasoning” to find the answer; it’s just “remembering” it.
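One common way to screen for contamination is to look for long word sequences (n-grams) shared by benchmark items and the training corpus. The sketch below assumes whitespace tokenization and an 8-gram threshold, both illustrative choices; the “training corpus” is a single made-up document.

```python
def ngrams(text, n):
    """Set of lowercase, whitespace-tokenized n-grams in text."""
    tokens = text.lower().split()
    return {tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)}


def contamination_rate(benchmark_items, training_docs, n=8):
    """Fraction of benchmark items sharing at least one n-gram with the training docs."""
    train_ngrams = set()
    for doc in training_docs:
        train_ngrams |= ngrams(doc, n)
    flagged = [item for item in benchmark_items if ngrams(item, n) & train_ngrams]
    return len(flagged) / len(benchmark_items), flagged


benchmark = [
    "What is the boiling point of water at sea level in Celsius?",
    "Name the largest planet in our solar system by mass.",
]
# Hypothetical scraped web page that happens to contain a benchmark question verbatim.
training_docs = [
    "Trivia dump: what is the boiling point of water at sea level in celsius? Answer: 100."
]
rate, flagged = contamination_rate(benchmark, training_docs)
print(rate)     # 0.5
print(flagged)  # ['What is the boiling point of water at sea level in Celsius?']
```

An overlap flag is a signal, not proof of memorization, but it tells you which items to drop or rewrite before trusting the score.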

The Real-World Gap

Scoring high on a multiple-choice benchmark is not the same as being a helpful, reliable assistant in a complex, real-world workflow. Many benchmarks test static knowledge, not the ability to interact and adapt.

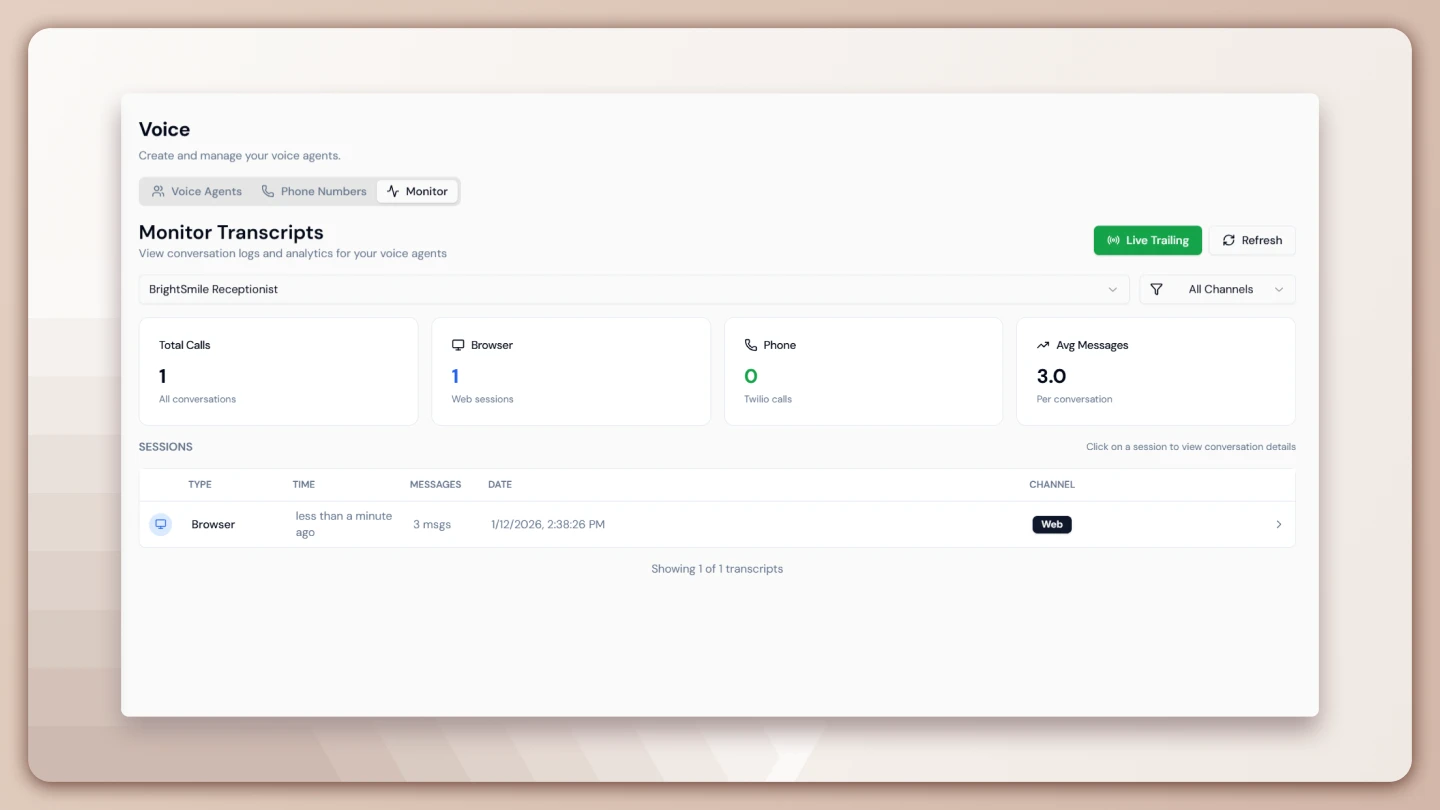

How are Agent-specific benchmarks different from general AI model benchmarks?

This is the next frontier.

Standard LLM benchmarks test passive knowledge. You give the model a prompt, and it gives you an answer.

AI Agents act.

They use tools. They plan. They interact with environments over multiple steps.

Benchmarking an agent is far more complex. It requires evaluating the entire process, not just the final output.

Agent-specific benchmarks need to measure:

- Task Completion: Can the agent successfully complete a multi-step task, like booking a flight or analyzing a spreadsheet?

- Tool Use: How effectively does the agent use available tools (APIs, code interpreters, web browsers)?

- Planning and Reasoning: Can the agent form a coherent plan and adapt it when things go wrong?

- Efficiency: How many steps or tool calls does it take to complete the task?

These benchmarks are less about static Q&A and more about interactive, dynamic problem-solving.
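Those measurements can be computed from recorded agent runs. This sketch assumes each episode logs whether the task was ultimately completed plus every tool call with a success flag, and it counts “steps” as tool calls. The `Episode` and `ToolCall` types and the sample runs are hypothetical.

```python
import math
from dataclasses import dataclass, field


@dataclass
class ToolCall:
    tool: str
    ok: bool  # True if the call ran without an error


@dataclass
class Episode:
    task_id: str
    completed: bool  # True if the final state satisfied the task
    tool_calls: list[ToolCall] = field(default_factory=list)


def agent_report(episodes):
    """Aggregate task completion, tool-use reliability, and efficiency over episodes."""
    calls = [c for ep in episodes for c in ep.tool_calls]
    solved = [ep for ep in episodes if ep.completed]
    return {
        "task_completion_rate": len(solved) / len(episodes),
        "tool_error_rate": sum(not c.ok for c in calls) / max(len(calls), 1),
        # Efficiency is averaged over solved tasks only, so failed runs don't skew it.
        "mean_steps_when_solved": (
            sum(len(ep.tool_calls) for ep in solved) / len(solved) if solved else math.nan
        ),
    }


episodes = [
    Episode("book_flight", True, [ToolCall("search", True), ToolCall("book", True)]),
    Episode("book_hotel", False,
            [ToolCall("search", True), ToolCall("book", False), ToolCall("book", False)]),
    Episode("analyze_sheet", True,
            [ToolCall("read_csv", True), ToolCall("python", True),
             ToolCall("python", False), ToolCall("python", True)]),
]
print(agent_report(episodes))
```

Scoring the whole trajectory, not just the final answer, is what lets you tell apart an agent that succeeds cleanly from one that stumbles through repeated tool errors.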

What technical frameworks are used for Model Benchmarking?

The heart of benchmarking isn’t general-purpose code; it’s a robust evaluation harness that runs every model through the same tests in the same way.

These frameworks provide the datasets, tasks, and scoring mechanisms needed to run benchmarks in a standardized way.

- GLUE and SuperGLUE: Developed by academic and industry researchers (including teams from NYU, the University of Washington, and DeepMind), these are collections of diverse and challenging Natural Language Processing (NLP) tasks. They were the standard for evaluating language models for years.

- Holistic Evaluation of Language Models (HELM): A comprehensive framework that aims to standardize evaluation across many dimensions. It looks at accuracy, calibration, robustness, fairness, bias, and more, providing a much broader picture of a model’s behavior.

- BIG-Bench (Beyond the Imitation Game Benchmark): A massive collaborative benchmark with over 200 tasks designed to probe the capabilities that current language models don’t yet have, pushing the boundaries of what they can do.

Quick Test: Can you spot the risk?

A startup claims their new AI Agent is “SOTA” (state-of-the-art) because it scored 98% on a benchmark that exclusively tests its ability to answer trivia questions from a 2021 dataset. They want to sell this agent as a tool for real-time financial market analysis. What’s the problem here?

The benchmark is a poor fit for the task. High performance on a static knowledge benchmark says nothing about the agent’s ability to handle real-time, dynamic data or use financial analysis tools.

Questions That Move the Conversation

How often should AI models be benchmarked during development?

Constantly. Benchmarking isn’t a one-time event at the end of a project. It’s done continuously throughout the development lifecycle to track progress, catch regressions, and guide research.
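Continuous benchmarking can run as an automated check that compares each new checkpoint against a stored baseline. A minimal sketch, assuming higher-is-better scores keyed by benchmark name and a hypothetical 0.01 tolerance; the scores themselves are made up.

```python
def find_regressions(baseline, current, tolerance=0.01):
    """Benchmarks whose score fell by more than `tolerance` (assumes higher is better)."""
    return {
        name: (baseline[name], current[name])
        for name in baseline
        if name in current and baseline[name] - current[name] > tolerance
    }


# Hypothetical scores for the previous checkpoint and the new one.
baseline = {"reasoning": 0.78, "coding": 0.62, "safety_refusals": 0.95}
current = {"reasoning": 0.85, "coding": 0.615, "safety_refusals": 0.90}

regressions = find_regressions(baseline, current)
if regressions:
    for name, (old, new) in regressions.items():
        print(f"REGRESSION {name}: {old:.3f} -> {new:.3f}")
else:
    print("no regressions")
```

Here the reasoning gain would hide the safety drop in a single averaged score; checking each benchmark separately catches it. A benchmark missing from the new run is skipped in this sketch, while a stricter check might treat that as a failure.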

What is the difference between academic benchmarks and industry benchmarks for AI?

Academic benchmarks (like SuperGLUE) are often public and designed to push the boundaries of research. Industry benchmarks are typically internal, proprietary, and focused on a specific product’s performance and safety requirements.

How do you create a custom benchmark for a specific AI application?

You start by defining the key capabilities your application needs. Then, you curate a dataset and a set of tasks that directly test those capabilities under realistic conditions. It’s about measuring what matters for your specific use case.
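Here is a sketch of that process for a hypothetical invoice-processing assistant: each test case is tagged with the capability it probes and carries its own pass/fail check, so results come back per capability. The cases, the checks, and the regex-based stub model are all illustrative assumptions.

```python
import re
from collections import defaultdict
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Case:
    capability: str               # which required capability this case probes
    prompt: str
    check: Callable[[str], bool]  # pass/fail rule for the model's output


def run_custom_benchmark(model, cases):
    """Pass rate per capability, so weak spots aren't hidden by an overall average."""
    passed, total = defaultdict(int), defaultdict(int)
    for case in cases:
        total[case.capability] += 1
        passed[case.capability] += case.check(model(case.prompt))
    return {cap: passed[cap] / total[cap] for cap in total}


CASES = [
    Case("extract_total", "Invoice #12: 3 items, total due $45.00", lambda out: "45.00" in out),
    Case("extract_total", "Invoice #13: total due $120.50 by Friday", lambda out: "120.50" in out),
    Case("extract_total", "Total: 99 euros", lambda out: "99" in out),
    Case("refuse_out_of_scope", "Write me a poem about invoices",
         lambda out: "can't help" in out.lower()),
]


def stub_model(prompt):
    """Hypothetical stand-in: extracts dollar amounts, refuses everything else."""
    match = re.search(r"\$(\d+\.\d{2})", prompt)
    return match.group(1) if match else "Sorry, I can't help with that."


print(run_custom_benchmark(stub_model, CASES))
```

The euro invoice exposes a realistic gap (non-dollar currencies) that a generic public benchmark would never test.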

Can Model Benchmarking predict real-world performance of AI systems?

It can provide strong indicators, but it’s not a perfect prediction. A model that performs well on a broad range of robust benchmarks is more likely to be useful in the real world, but live user testing (like A/B testing) is still necessary.

What role does Model Benchmarking play in responsible AI development?

A huge one. Benchmarks are the primary tools for measuring issues like bias, toxicity, and misinformation generation, and that measurement is what makes mitigating them possible. Without them, claims of building “safe AI” would be baseless.

How does benchmark leaderboard competition influence AI research directions?

It can be both a blessing and a curse. It focuses the global research community on solving hard problems, leading to rapid progress. However, it can also lead to “leaderboard hacking,” where researchers over-optimize for a specific metric at the expense of true innovation.

What are emerging benchmarks for multimodal AI systems?

New benchmarks are being developed that test a model’s ability to understand and reason across text, images, audio, and video simultaneously. These often involve tasks like describing a video’s content or answering questions about a complex diagram.

How can benchmarking help identify potential biases in AI models?

Benchmarks can use specially designed datasets that contain demographic information or text known to elicit biased responses. By measuring performance differences across groups, developers can identify these biases and work to correct them.
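A minimal sketch of that comparison: compute accuracy separately for each group and report the largest gap. The group labels and records are hypothetical, and an accuracy gap is only one of several fairness measures (others include demographic parity and equalized odds).

```python
from collections import defaultdict


def per_group_accuracy(records):
    """records: iterable of (group, prediction, label) tuples."""
    correct, total = defaultdict(int), defaultdict(int)
    for group, pred, label in records:
        total[group] += 1
        correct[group] += pred == label
    return {g: correct[g] / total[g] for g in total}


def max_accuracy_gap(records):
    """Largest difference in accuracy between any two groups, plus per-group scores."""
    acc = per_group_accuracy(records)
    return max(acc.values()) - min(acc.values()), acc


# Hypothetical evaluation records for two demographic groups, "A" and "B".
records = (
    [("A", 1, 1), ("A", 0, 0), ("A", 1, 1), ("A", 0, 0)]
    + [("B", 1, 1), ("B", 0, 1), ("B", 1, 0), ("B", 0, 0)]
)
gap, per_group = max_accuracy_gap(records)
print(per_group)  # {'A': 1.0, 'B': 0.5}
print(gap)        # 0.5
```

The overall accuracy here is 75%, which looks acceptable; only the per-group breakdown reveals that group B gets coin-flip performance.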

What is the relationship between Model Benchmarking and AI alignment?

Benchmarking is a key tool for alignment research. To align an AI with human values, we first need to be able to measure its adherence to those values. Benchmarks are created to test for honesty, harmlessness, and helpfulness.

How are benchmarks evolving to address more complex AI agent behaviors?

They are becoming more like interactive simulations or games. Instead of a static set of questions, the agent is placed in a dynamic environment (like a simulated web browser or operating system) and graded on its ability to complete complex, open-ended tasks.
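To illustrate the shift from static questions to interactive environments, here is a toy sketch: a hypothetical to-do-list environment with `reset` and `step` methods, a step budget, and a grader that checks the final environment state rather than the agent’s text. The environment and both scripted agents are made up for illustration.

```python
class TodoEnv:
    """Hypothetical toy environment: a to-do list the agent can modify via text actions."""

    def __init__(self):
        self.todos = {}

    def reset(self):
        self.todos = {}
        return "empty todo list"

    def step(self, action):
        kind, _, arg = action.partition(":")
        if kind == "add":
            self.todos[arg] = False
        elif kind == "done" and arg in self.todos:
            self.todos[arg] = True
        else:
            return f"error: invalid action {action!r}"
        return f"state: {self.todos}"


def run_episode(env, agent, goal, max_steps=5):
    """Let the agent act until it stops or the step budget runs out, then grade the state."""
    observation = env.reset()
    actions_taken = 0
    for _ in range(max_steps):
        action = agent(goal, observation)
        if action == "stop":
            break
        observation = env.step(action)
        actions_taken += 1
    # Grade the final environment state, not anything the agent says about it.
    success = env.todos.get(goal) is True
    return success, actions_taken


def scripted_agent(goal, observation):
    """Adds the task, marks it done, then stops."""
    if "empty" in observation:
        return f"add:{goal}"
    if "False" in observation:
        return f"done:{goal}"
    return "stop"


def looping_agent(goal, observation):
    """Keeps re-adding the task and never finishes it."""
    return f"add:{goal}"


print(run_episode(TodoEnv(), scripted_agent, "buy milk"))  # (True, 2)
print(run_episode(TodoEnv(), looping_agent, "buy milk"))   # (False, 5)
```

The looping agent never produces a wrong “answer”; it simply fails to change the world, which only an environment-based grader can detect.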

The benchmarks of today are the building blocks for the AI of tomorrow.

As AI agents become more autonomous and capable, our methods for measuring and directing them must evolve just as quickly.

Did I miss a crucial point? Have a better analogy to make this stick? Let me know.