Executive Summary

The current generation of Generative AI faces a reliability crisis. While Large Language Models (LLMs) like GPT-5 and Claude 4.1 are capable of brilliance, they are probabilistic by nature. In an enterprise environment, “mostly correct” is often as dangerous as “completely wrong.”

- A model with 99.9% accuracy sounds impressive. But in a complex enterprise workflow requiring 1,000 sequential steps, that 0.1% error rate compounds catastrophically: $0.999^{1000} \approx 0.368$, a success rate of only about 36.8%. For critical functions such as banking, healthcare, and customer support, this is unacceptable.

- Enter the Lyzr Six Sigma Agent. Drawing inspiration from the manufacturing philosophy that revolutionized industrial quality, the Lyzr Six Sigma Agent is the first AI architecture engineered for 3.4 defects per million opportunities (DPMO). By moving away from a “Single Genius” model to a “Massively Decomposed” architecture, we achieve verifiable, deterministic outcomes at a fraction of the cost of traditional agents.
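The compounding arithmetic behind the 1,000-step figure can be checked in a few lines. This is a minimal sketch that assumes every step succeeds or fails independently with the same accuracy; the function name is illustrative, not part of any Lyzr API.

```python
def workflow_success_rate(step_accuracy: float, steps: int) -> float:
    """Probability that every one of `steps` independent steps succeeds."""
    return step_accuracy ** steps


rate = workflow_success_rate(0.999, 1000)
print(f"{rate:.1%}")  # 36.8%
```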

1. Introduction: The Reliability Problem

The race for “Smarter” AI has hit a wall of diminishing returns. No matter how much you prompt-engineer a single model, it will occasionally hallucinate, misinterpret instructions, or fail to follow a strict format.

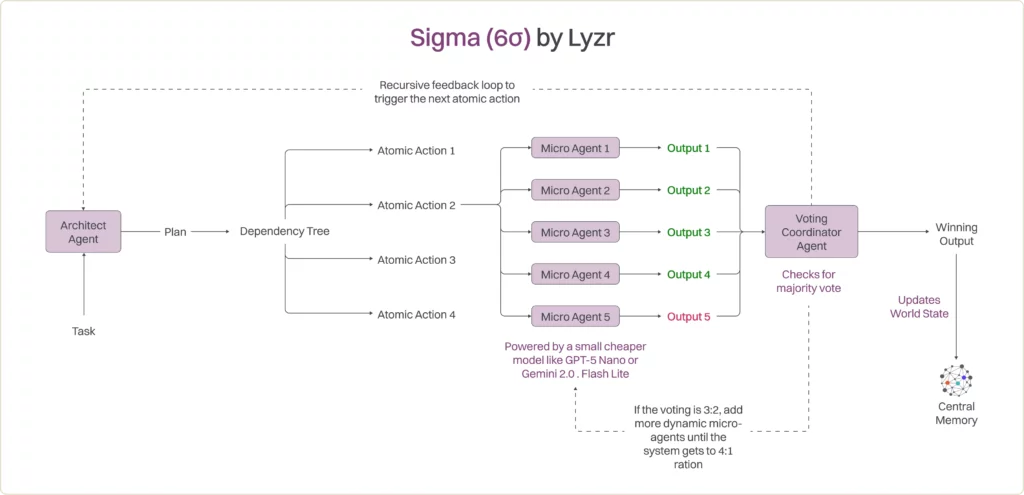

The Old Way (Monolithic Agents): You give a complex goal to a massive, expensive model (e.g., GPT-5). You hope it figures out the 50 sub-steps correctly. If it fails at step 14, the entire process collapses.

The Lyzr Way (Six Sigma Architecture): We assume individual models will fail. Instead of trying to fix the model, we fix the system. We break tasks down into atomic units so simple that small, fast models can solve them, and then we use Consensus Voting to mathematically eliminate errors.

2. Architecture & Workflow

The system operates on a principle called Reliability via Redundancy. It mimics a high-reliability organization (like a nuclear power plant control room) rather than a creative writer.

Step-by-Step Walkthrough: The “Billing Dispute” Example

Scenario: An enterprise customer support system needs to resolve a complex billing dispute where a user claims they were overcharged.

Phase 1: The Architect (Decomposition)

The request enters the system. A high-level Architect Agent (running on a reasoning model) analyzes the goal. It does not try to solve it. It only breaks it down into a Dependency Tree.

- Complex Goal: “Resolve billing dispute for User X.”

- Plan: 1. Verify User Identity -> 2. Pull Invoice Data -> 3. Compare Invoice to Contract Rate -> 4. Calculate Difference -> 5. Process Refund if valid.
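One way to picture the Dependency Tree is as a mapping from each step to the steps it depends on, which can then be ordered for execution. The sketch below uses Python's standard `graphlib` module; the step identifiers are hypothetical names chosen to mirror the plan above, and the document does not specify how the Architect actually represents its plan.

```python
from graphlib import TopologicalSorter

# Each step maps to the set of steps that must be verified before it.
# Step names are illustrative only.
plan = {
    "verify_user_identity": set(),
    "pull_invoice_data": {"verify_user_identity"},
    "compare_invoice_to_contract_rate": {"pull_invoice_data"},
    "calculate_difference": {"compare_invoice_to_contract_rate"},
    "process_refund_if_valid": {"calculate_difference"},
}

# static_order() yields steps so that dependencies always come first.
execution_order = list(TopologicalSorter(plan).static_order())
for position, step in enumerate(execution_order, start=1):
    print(position, step)
```

A real plan would typically branch (for example, one comparison node per invoice item), which is where a dependency graph earns its keep over a flat list.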

Phase 2: Atomic Action & Micro-Agent Execution

The system picks the first atomic leaf node: “Compare Invoice Item A ($50) to Contract Rate ($40).” Instead of asking one AI, the system spins up 5 independent Micro-Agents (using cheaper, faster models like GPT-4o-mini or Llama-3-8B).

Phase 3: Verification (The Voting Coordinator)

The Voting Coordinator Agent collects the outputs. It sees a 4-to-1 consensus: four Micro-Agents answer “YES” (the invoice exceeds the contract rate) and one, Agent 3, dissents.

- Decision: It accepts “YES” as the ground truth.

- Action: It discards the error from Agent 3. This specific step is now “Verified.”
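The tallying step can be sketched as a simple majority count. The vote list below is a made-up illustration of the 4-to-1 case described above (with Agent 3 as the dissenter); the function name and return shape are assumptions, not the actual Voting Coordinator interface.

```python
from collections import Counter


def tally(votes: list[str]) -> tuple[str, int, int]:
    """Return (winning answer, votes for it, votes against it)."""
    counts = Counter(votes)
    winner, winner_votes = counts.most_common(1)[0]
    return winner, winner_votes, len(votes) - winner_votes


# Hypothetical outputs from five Micro-Agents; Agent 3 (index 2) dissents.
votes = ["YES", "YES", "NO", "YES", "YES"]
answer, agreeing, dissenting = tally(votes)
print(answer, f"{agreeing}-to-{dissenting}")  # YES 4-to-1
```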

Phase 4: World State Update

The verified fact (“User was overcharged”) is written to the Central Memory. The system loops back to the Architect’s plan to trigger the next step (“Calculate Difference”).
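The loop between the plan and the Central Memory can be sketched as follows. This is a simplified illustration: `run_plan`, the `verify` callback, and the dictionary standing in for Central Memory are all hypothetical, and the stub simply returns a canned answer where a real system would run the Micro-Agents and the vote.

```python
from typing import Callable


def run_plan(steps: list[str], verify: Callable[[str, dict], str]) -> dict:
    """Execute steps in order, recording each verified result in memory."""
    memory: dict[str, str] = {}  # stand-in for the Central Memory
    for step in steps:
        # Each step can read every fact verified so far.
        memory[step] = verify(step, memory)
    return memory


def stub_verify(step: str, memory: dict) -> str:
    # Placeholder for "spin up Micro-Agents and take the consensus".
    return f"verified with {len(memory)} prior facts"


state = run_plan(["compare_invoice", "calculate_difference"], stub_verify)
print(state)
```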

3. The Math: Why “Six Sigma”?

Why does this architecture drive the error rate toward zero? It comes down to the probability of concurrent failure.

The “Single Genius” Failure Mode – If a monolithic model has a 1% error rate ($0.01$), it will fail 1 out of 100 times.

$P(\text{Error}) = 10^{-2}$

The “Consensus” Success Mode – For the Lyzr architecture, we deliberately use smaller, cheaper models (“Micro-Agents”). Even if we assume these models are “dumber” and have a 5% error rate, the voting mechanism saves us. Assuming the agents err independently, the system fails only if at least 3 of 5 agents make the exact same error at the exact same time.

We calculate the probability using the binomial probability formula:

$P(X \geq 3) = \binom{5}{3}(0.05)^3(0.95)^2 + \binom{5}{4}(0.05)^4(0.95)^1 + \binom{5}{5}(0.05)^5$

$P(\text{System Error}) = 0.001128 + 0.000030 + 0.0000003 \approx 0.00116$

Result:

- Single Genius Agent Error Rate: 1.00% (1 in 100)

- 5-Micro Agents Consensus Error Rate: 0.12% (about 1 in 860)

Even though the individual Micro-Agents are 5x worse than the Genius (5% vs 1%), the voting system is ~9x more reliable.
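The binomial calculation can be reproduced with the standard library. The sketch assumes the agents fail independently and treats any wrong majority as a system error (the worst case, where all wrong agents agree); `majority_error` is an illustrative helper, not a Lyzr function.

```python
from math import comb


def majority_error(n_agents: int, p_error: float) -> float:
    """P(a strict majority of n independent agents is wrong)."""
    k_min = n_agents // 2 + 1
    return sum(
        comb(n_agents, k) * p_error**k * (1 - p_error) ** (n_agents - k)
        for k in range(k_min, n_agents + 1)
    )


single_genius = 0.01
consensus = majority_error(5, 0.05)
print(f"{consensus:.6f}")                   # 0.001158
print(f"{single_genius / consensus:.1f}x")  # 8.6x
```

If agents share a systematic blind spot (for example, the same misleading prompt), their errors are correlated and the real failure rate will be higher than this independent-error estimate.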

Achieving True Six Sigma (Dynamic Scaling)

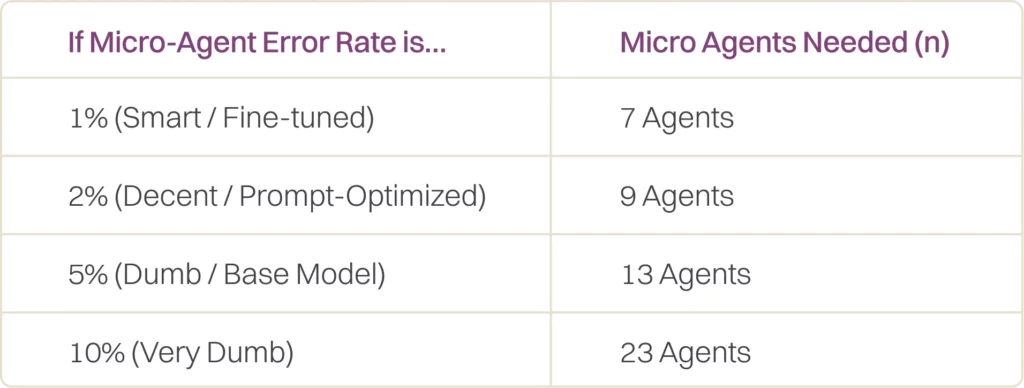

An error rate of about 1 in 860 (0.12%) is roughly 3-Sigma reliability. To achieve true Six Sigma (3.4 defects per million), the probability of failure must drop to $0.0000034$. Based on binomial calculations, we scale the number of agents according to their individual error rate (their “dumbness”).
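The scaling rule can be sketched by searching for the smallest odd panel size whose majority-error probability falls below the Six Sigma target. This assumes independent errors and a simple majority vote; the function names and the `max_agents` cap are illustrative choices.

```python
from math import comb

SIX_SIGMA_TARGET = 3.4e-6  # 3.4 defects per million opportunities


def majority_error(n_agents: int, p_error: float) -> float:
    """P(a strict majority of n independent agents is wrong)."""
    k_min = n_agents // 2 + 1
    return sum(
        comb(n_agents, k) * p_error**k * (1 - p_error) ** (n_agents - k)
        for k in range(k_min, n_agents + 1)
    )


def min_agents_for_six_sigma(p_error: float, max_agents: int = 99) -> int:
    """Smallest odd number of agents that meets the target error rate."""
    for n in range(1, max_agents + 1, 2):
        if majority_error(n, p_error) <= SIX_SIGMA_TARGET:
            return n
    raise ValueError("target not reachable within max_agents")


print(min_agents_for_six_sigma(0.05))  # 13
print(min_agents_for_six_sigma(0.01))  # 7
```

Under these assumptions, a 5% per-agent error rate needs 13 voters, which matches the 13-agent total used in the escalation strategy.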

Dynamic Six Sigma Strategy: To optimize costs, the system employs a “Dynamic Six Sigma” strategy. It begins with just 5 Micro-Agents.

- If the vote is 5-0 or 4-1 -> Pass (High Confidence).

- If the vote is contested (e.g., 3-2) -> Trigger 8 more agents (Total 13) to resolve the split with Six Sigma certainty.
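The escalation rule can be sketched as below. The `ask_agent` callback is a hypothetical stand-in for invoking one Micro-Agent, and the scripted answers exist only to exercise the contested path; the thresholds (5 initial agents, 8 extra, pass on 4-1 or better) come from the strategy above.

```python
from collections import Counter
from typing import Callable


def dynamic_vote(
    ask_agent: Callable[[int], str], initial: int = 5, extra: int = 8
) -> tuple[str, int]:
    """Return (winning answer, number of agents used)."""
    votes = [ask_agent(i) for i in range(initial)]
    top_count = Counter(votes).most_common(1)[0][1]
    if top_count < initial - 1:  # anything weaker than 4-1 is contested
        votes += [ask_agent(i) for i in range(initial, initial + extra)]
    winner = Counter(votes).most_common(1)[0][0]
    return winner, len(votes)


# Scripted example: a 3-2 split in the first round forces escalation.
scripted = ["YES", "NO", "YES", "NO", "YES"] + ["YES"] * 8
answer, agents_used = dynamic_vote(lambda i: scripted[i])
print(answer, agents_used)  # YES 13
```

Because most votes are expected to be uncontested, the extra eight agents are paid for only on the minority of steps where the first round splits.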

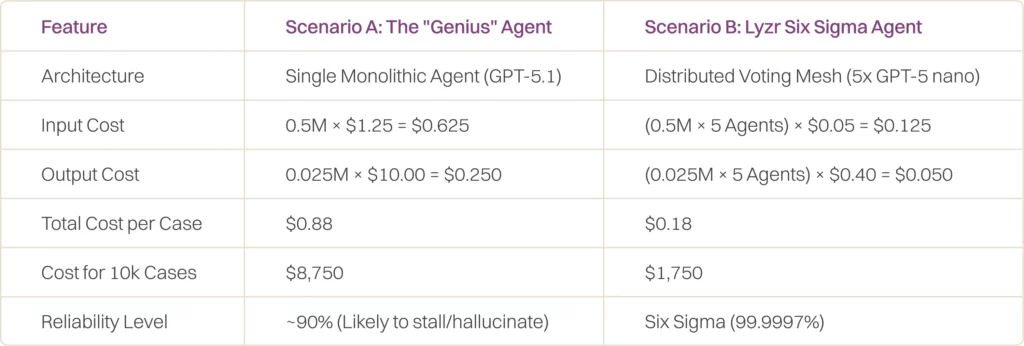

4. Commercial Comparison: Better AND Cheaper

A common misconception in enterprise AI is that “Reliability costs more.” Executives assume that to get Zero Errors, they must pay a premium. The Lyzr Six Sigma architecture proves the opposite: Reliability is cheaper if you engineer it correctly. Based on the latest GPT-5 pricing (November 2025), we observe a 25:1 cost ratio between the “Reasoning” model (GPT-5.1) and the “Atomic” model (GPT-5 nano).

Cost Simulation: Complex Customer Support Workflow

Scenario: An automated agent resolving a mortgage application discrepancy.

- Total Steps: 50 Steps (Verification, Doc Review, Calculation, Email Drafting).

- Context per Step: 10,000 Tokens (Application PDF, Policy Docs).

- Output per Step: 500 Tokens (Reasoning & Decision).

- Total Volume: 500,000 Input Tokens / 25,000 Output Tokens per case.

Result

The Lyzr Six Sigma Agent executes the exact same workflow at 80% lower cost ($0.18 vs. $0.88) with 99.9997% accuracy.
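A back-of-the-envelope check is consistent with the 80% figure. The sketch below assumes every step runs exactly five Micro-Agents on the same token volume, uses the stated 25:1 price ratio, and ignores the cost of the Architect and Voting Coordinator; it is an illustration of the ratio, not a reconstruction of the dollar amounts above.

```python
STEPS = 50
INPUT_TOKENS_PER_STEP = 10_000
OUTPUT_TOKENS_PER_STEP = 500

total_input = STEPS * INPUT_TOKENS_PER_STEP    # 500,000 input tokens
total_output = STEPS * OUTPUT_TOKENS_PER_STEP  # 25,000 output tokens


def relative_cost(n_micro_agents: int, price_ratio: float) -> float:
    """Cost of the voting panel as a fraction of one reasoning-model call."""
    return n_micro_agents / price_ratio


savings = 1 - relative_cost(n_micro_agents=5, price_ratio=25)
print(total_input, total_output)  # 500000 25000
print(f"{savings:.0%} cheaper")   # 80% cheaper
```

Steps that escalate to 13 agents cost 13/25 of the reasoning-model price under the same assumptions, so the overall saving depends on how often votes are contested.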

5. Summary

The Lyzr Six Sigma Agent represents the maturity of the AI industry. We are moving from the “Magic Demo” phase to the “Reliable Operations” phase. By treating AI as a probabilistic component in a deterministic system, we provide enterprises the safety rail they have been waiting for to deploy AI in mission-critical workflows.