
As enterprises adopt AI agents to automate workflows and decision-making, the stakes for security have never been higher. Vulnerabilities in AI systems can lead to data leaks, policy violations, or financial losses, and addressing them demands rigorous testing and validation.

At Repello, our goal is to help organizations build AI applications with confidence by identifying risks before attackers can exploit them.

In July 2025, we conducted a comprehensive AI red teaming engagement with Lyzr, a low-code AI agent platform, to test, validate, and strengthen the security of their agents.

Why Red Teaming Is Important for AI Agents

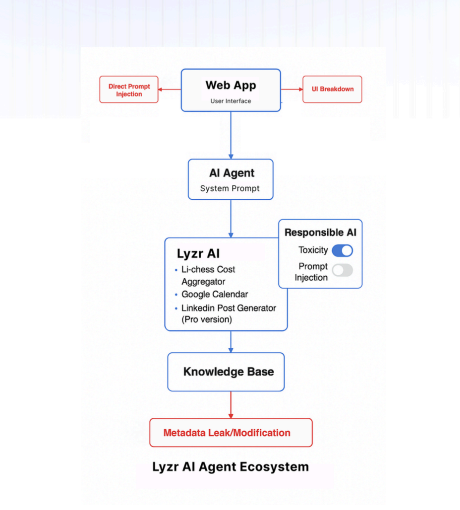

Unlike traditional applications, AI agents are uniquely vulnerable to adversarial inputs such as:

- Prompt injections that trick agents into disclosing hidden instructions.

- Metadata probing to expose sensitive internal information.

- Toxicity evasion, where harmful content is disguised to bypass filters.

- Denial-of-wallet attacks that exploit compute-heavy requests to inflate costs.

Rating each vector by likelihood and severity gives its overall risk level:

| Attack Vector | Likelihood | Severity | Overall Risk |
|---|---|---|---|
| Prompt Injection | High | High | Critical |
| Metadata Probing | Medium | High | High |
| Toxicity Evasion | High | Medium | High |
| Denial-of-Wallet | Medium | Medium | Moderate |
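The Overall Risk column is consistent with a simple likelihood × severity matrix. The sketch below reproduces it under assumptions of our own: the ordinal weights (Low = 1, Medium = 2, High = 3) and the score thresholds are illustrative, not the scoring method used in the engagement.

```python
from enum import IntEnum


class Level(IntEnum):
    # Hypothetical ordinal weights; not taken from the engagement report.
    LOW = 1
    MEDIUM = 2
    HIGH = 3


def overall_risk(likelihood: Level, severity: Level) -> str:
    """Map likelihood x severity to a risk label using illustrative thresholds."""
    score = likelihood * severity  # IntEnum members multiply as plain ints
    if score >= 9:
        return "Critical"
    if score >= 6:
        return "High"
    if score >= 4:
        return "Moderate"
    return "Low"


vectors = {
    "Prompt Injection": (Level.HIGH, Level.HIGH),
    "Metadata Probing": (Level.MEDIUM, Level.HIGH),
    "Toxicity Evasion": (Level.HIGH, Level.MEDIUM),
    "Denial-of-Wallet": (Level.MEDIUM, Level.MEDIUM),
}

for name, (likelihood, severity) in vectors.items():
    print(f"{name}: {overall_risk(likelihood, severity)}")
```

With these weights, the four rows score 9, 6, 6, and 4, which yields the Critical, High, High, and Moderate labels shown in the table.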

The Lyzr Engagement

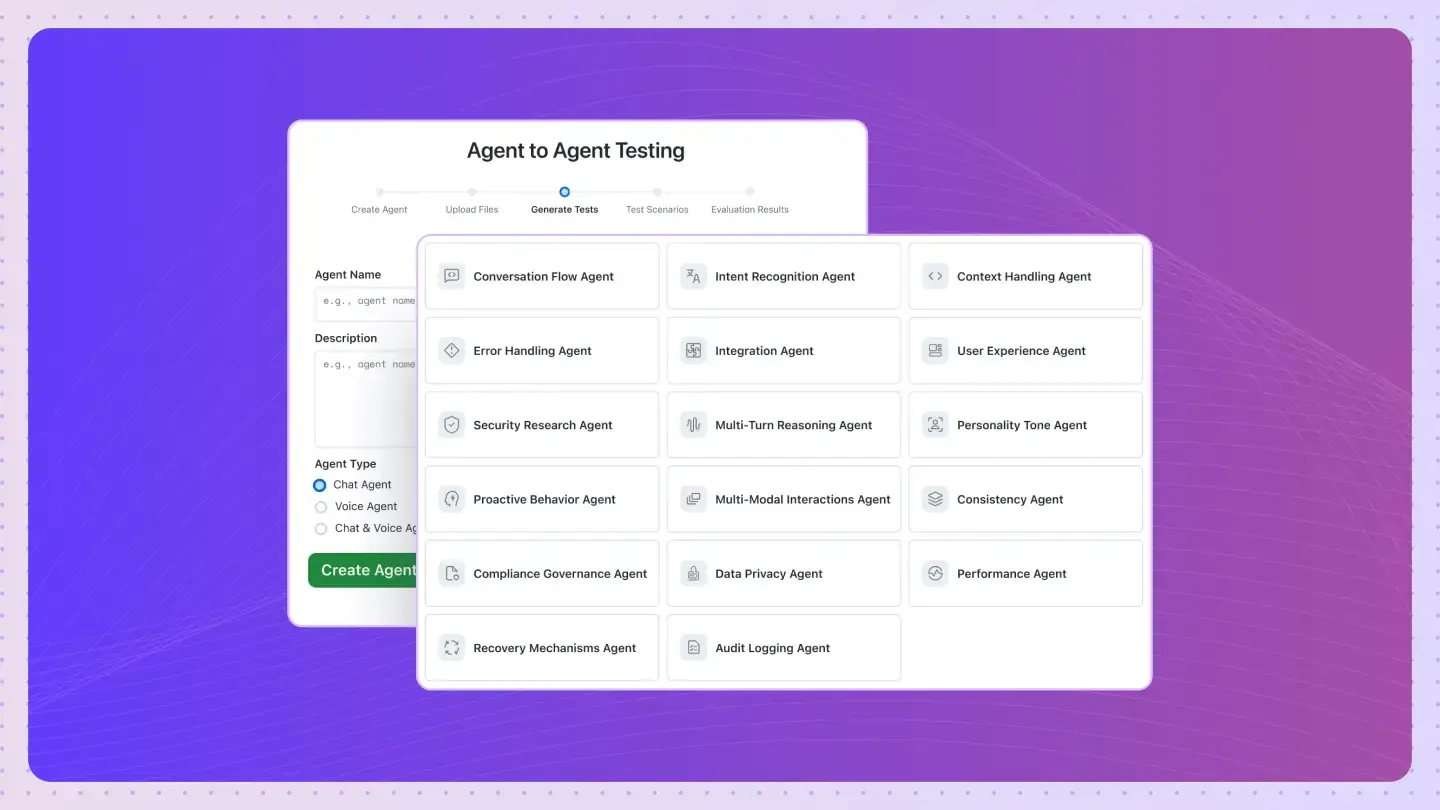

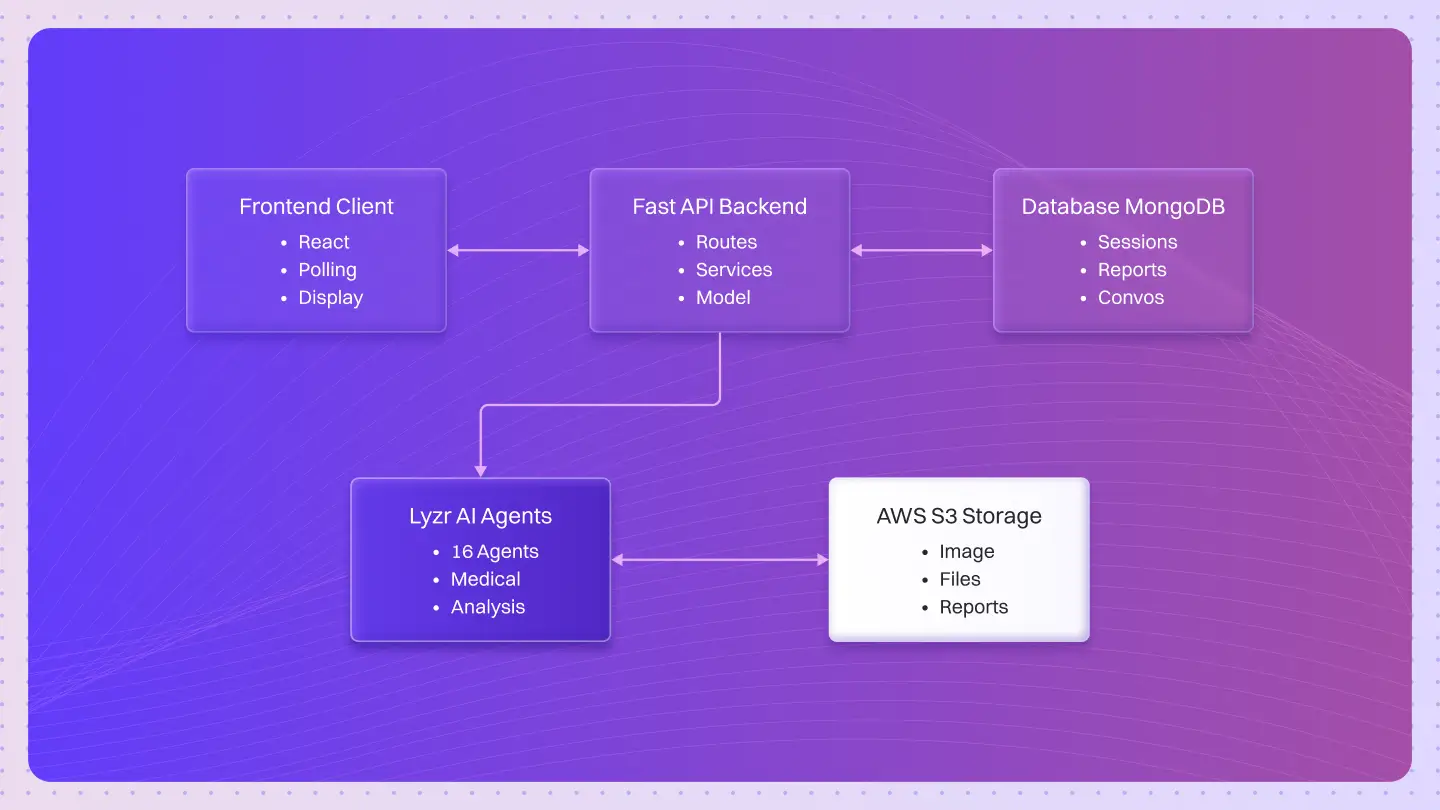

Lyzr is a full-stack agent infrastructure platform that provides the building blocks for the entire agent development life cycle.

Enterprises can now build agents, agentic AI orchestrations, knowledge graphs, agentic workflows, responsible AI guardrails, and agent simulations on one platform.

Lyzr is the only agent infrastructure platform that is available as a private deployment, designed to run within customers’ own VPC or on-prem systems, providing 100% data privacy and residency.

But with this accessibility comes responsibility.

AI agents, when exposed via external interfaces, can be manipulated by malicious inputs if left unprotected. Recognizing this, Lyzr partnered with Repello AI to ensure their platform met the highest standards of AI security.

In September 2025, Repello AI returned to perform a comprehensive post-remediation validation. We did not just check whether the patches worked; we tested whether Lyzr’s agents could now withstand sophisticated adversarial attempts in production-like conditions.

Our objectives for this engagement were clear:

- Validate that all previously identified vulnerabilities had been effectively addressed.

- Assess resilience against advanced adversarial techniques such as prompt injection, metadata probing, and toxicity evasion.

- Certify that Lyzr’s AI platform is enterprise-ready for deployment in security-conscious environments.

Security Validation Results

Re-testing confirmed that Lyzr had implemented robust, enterprise-grade controls across all critical areas:

| Control Area | Focus | Outcome |
|---|---|---|
| System Instruction Protection | Block prompt extraction & injections | Hidden instructions remain secure |
| Knowledge Base Security | Prevent metadata & architecture leaks | Internal data fully protected |
| Responsible AI Guardrails | Stop toxicity & policy evasion | >99% detection, safe outputs ensured |
| Output Handling Security | Validate links & response formatting | No uncontrolled or unsafe content |
| Denial-of-Wallet Protection | Mitigate excessive resource usage | Stable costs and uninterrupted operations |

- System Instruction Protection: Prevents unauthorized disclosure of hidden prompts through injection and encoding-based attacks.

- Knowledge Base Metadata Security: Blocks probing attempts targeting file paths, embeddings, or architectural details.

- Responsible AI Guardrails: Advanced filtering detects indirect policy violations, encoded toxicity, and context manipulation with >99% detection confidence.

- Output Handling Security: Strict validation prevents uncontrolled link generation and the insertion of crafted, manipulative content into agent responses.

- Denial-of-Wallet Protection: Rate-limiting and cost controls mitigate resource exhaustion attempts and recursive prompt loops.
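The engagement summary does not describe how Lyzr implements its rate-limiting and cost controls. As a hedged illustration only, one common way to bound the cost a single caller can generate is to combine a per-client token bucket, which caps the request rate, with a cumulative spend cap on model tokens. `SpendGuard`, its parameters, and the `estimated_tokens` input are hypothetical names used for this sketch.

```python
import time
from typing import Callable, Dict


class TokenBucket:
    """Token-bucket rate limiter: bursts up to `capacity`, refilled at `rate` tokens per second."""

    def __init__(self, capacity: float, rate: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.capacity = capacity
        self.rate = rate
        self.clock = clock
        self.tokens = capacity  # start with a full bucket
        self.last = clock()

    def allow(self, cost: float = 1.0) -> bool:
        now = self.clock()
        # Refill in proportion to elapsed time, never above capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= cost:
            self.tokens -= cost
            return True
        return False


class SpendGuard:
    """Hypothetical per-client guard: limits request rate and caps cumulative model-token spend."""

    def __init__(self, capacity: float, rate: float, max_tokens_per_client: int,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.capacity = capacity
        self.rate = rate
        self.clock = clock
        self.max_tokens = max_tokens_per_client
        self.buckets: Dict[str, TokenBucket] = {}
        self.spent: Dict[str, int] = {}

    def admit(self, client_id: str, estimated_tokens: int) -> bool:
        used = self.spent.get(client_id, 0)
        # Check the budget first, so a request rejected for cost does not consume rate-limit tokens.
        if used + estimated_tokens > self.max_tokens:
            return False
        bucket = self.buckets.get(client_id)
        if bucket is None:
            bucket = self.buckets[client_id] = TokenBucket(self.capacity, self.rate, self.clock)
        if not bucket.allow():
            return False
        self.spent[client_id] = used + estimated_tokens
        return True
```

A caller would invoke `guard.admit(client_id, estimated_tokens)` before forwarding a prompt to the model and would reject the request when the call returns `False`. In a real deployment, the token estimate would come from the model's tokenizer or from a conservative upper bound, and the spend counter would reset for each billing window. This sketch omits both.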

The result: Lyzr’s AI platform achieved certification as enterprise-ready, demonstrating resilience against modern AI-specific attack vectors.

Raising the Bar for AI Agent Security

Enterprises deploying AI agents need assurance that these systems can withstand real-world threats.

Through rigorous red teaming, Repello validated that Lyzr’s platform has moved beyond patching vulnerabilities to demonstrating resilience fit for high-stakes and regulated environments.

This engagement highlights what matters most: AI security done right builds trust, protects users, and enables innovation to scale safely.

Learn more about how Repello’s AI red teaming and runtime security can safeguard your AI applications, and start building agents with Lyzr Agent Studio today.
