You are building on a fault line.

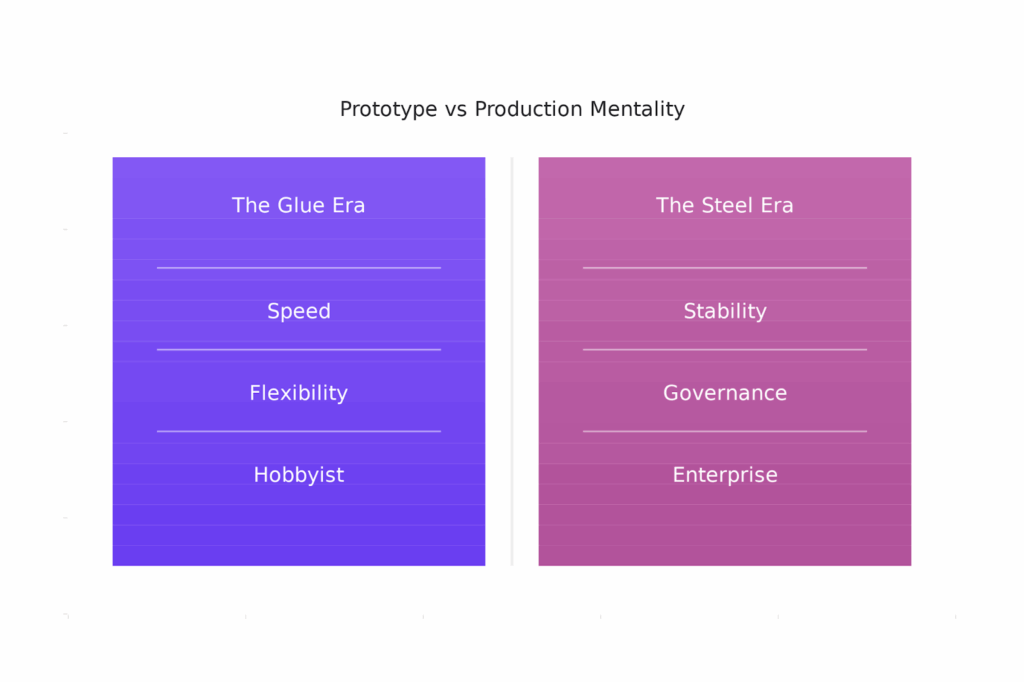

For the last two years, the Generative AI industry has been held together by “glue code.”

When Large Language Models first exploded onto the scene, the immediate imperative was speed.

Developers needed a way to stick disparate components such as prompts, parsers, and vector stores together to get a prototype working before the weekend was over.

We used libraries that acted as digital duct tape to string together linear sequences. It was messy and fragile, but it worked for the demo.

But 2025 has brought a harsh reality check. As we graduated from chatbots that write haikus to autonomous agents that process insurance claims, the glue began to crack. The failure rate for AI projects has reached a staggering 42%, putting billions in enterprise spending at risk.

The conventional wisdom, the advice you will hear in every Reddit thread and GitHub discussion, has always been simple. “Just use open-source libraries to build your own orchestration because it gives you maximum control.”

It is time to challenge that wisdom. The tool that built your prototype is not the tool that will scale your production. The reliance on brittle chains and abstraction-heavy libraries has created a “Valley of Death” for enterprise AI.

You do not need more glue. You need a steel frame. You need a LangChain alternative that is engineered specifically for the rigors, security, and determinism of the enterprise AI environment.

This is the story of the Great Decoupling, the moment when professional engineering teams stop building chains and start building agents.

The Crisis of Complexity: The High Cost of “Free” Glue

If you are an engineering leader or a senior developer, you have likely felt the shift. The excitement of the first “Hello World” with an LLM has been replaced by the dread of the “Dependency Hell” loop.

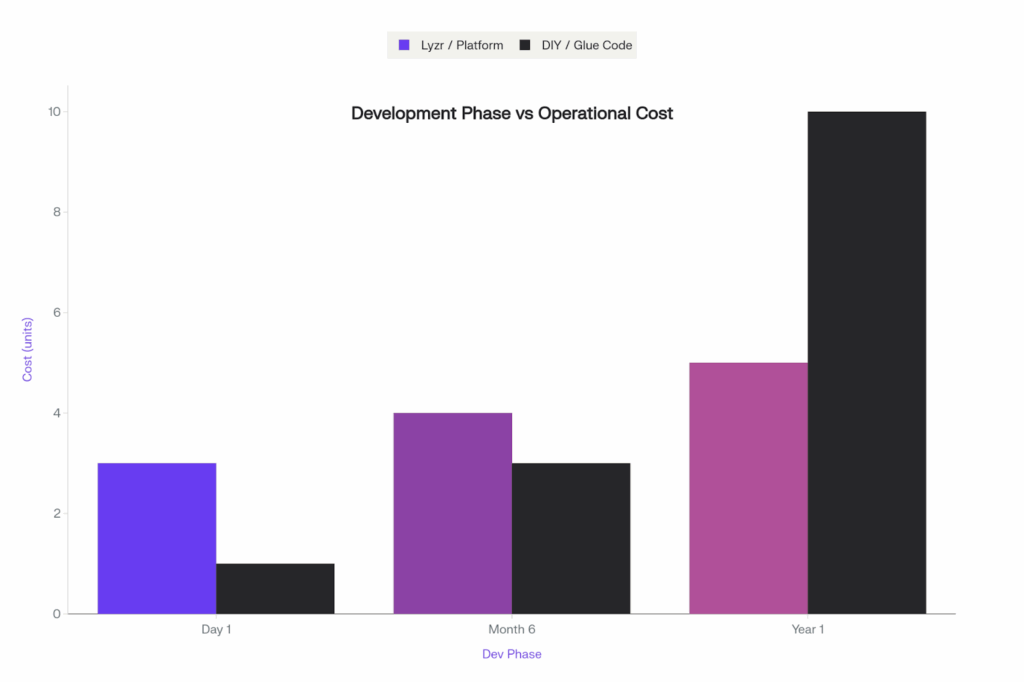

The “glue code” approach, popularized by frameworks like LangChain, served a vital purpose in democratizing access to AI. But in production, its layers of abstraction have become a liability.

The Abstraction Trap and Debugging Nightmares

A recurring theme in modern DevOps surveys regarding GenAI is the concept of “abstraction debt.” When you use a general-purpose orchestration library, you are often wrapping your logic in layers of code you didn’t write and cannot easily see. While this offers the convenience of swapping OpenAI for Anthropic with a single line of code, it creates a “black box” effect during debugging.

Consider the scenario where it is 3:00 AM and your production RAG pipeline has stalled.

It is throwing a generic error deep within a library stack trace that is both voluminous and cryptic. To fix it, you aren’t debugging your business logic.

You are peeling back layers of framework code to find out why a RunnableSequence isn’t passing a variable correctly. This is why developers are increasingly voicing frustration. Reports from the community highlight that frameworks often offer three different ways to do the same thing, leading to massive cognitive load and architectural inconsistency within teams.

When a framework tries to be everything to everyone by supporting every vector store, every model, and every tool via a unified wrapper, it inevitably suffers from bloat. For an enterprise where software reliability is paramount, this lack of transparency is an unacceptable risk.

The Instability of the “Chain”

In the fast-moving world of AI, stability is a currency. Yet, the open-source ecosystem is often defined by breaking changes. A minor update to an underlying library can deprecate core functionalities, forcing your team to spend valuable sprint cycles refactoring code that worked perfectly yesterday.

This phenomenon is known as the “Maintenance Tax.”

Data suggests that 62% of enterprises today lack a clear starting point for scaling their AI specifically because their initial experiments are too fragile to move out of the sandbox. They are stuck in “Proof of Concept Purgatory,” unable to cross the bridge to production because the bridge is made of duct tape.

The macro-economic implication is severe. Forrester predicts that three out of four firms that attempt to build aspirational agentic architectures on their own will fail. The DIY approach, once seen as a badge of engineering prowess, is becoming a path to technical bankruptcy. The complexity of orchestrating dozens of agents, managing their memory, ensuring their security, and integrating them with legacy systems is simply too high for a custom build using generalist libraries.

The Core Insight: From Linear Chains to Agentic Reasoning

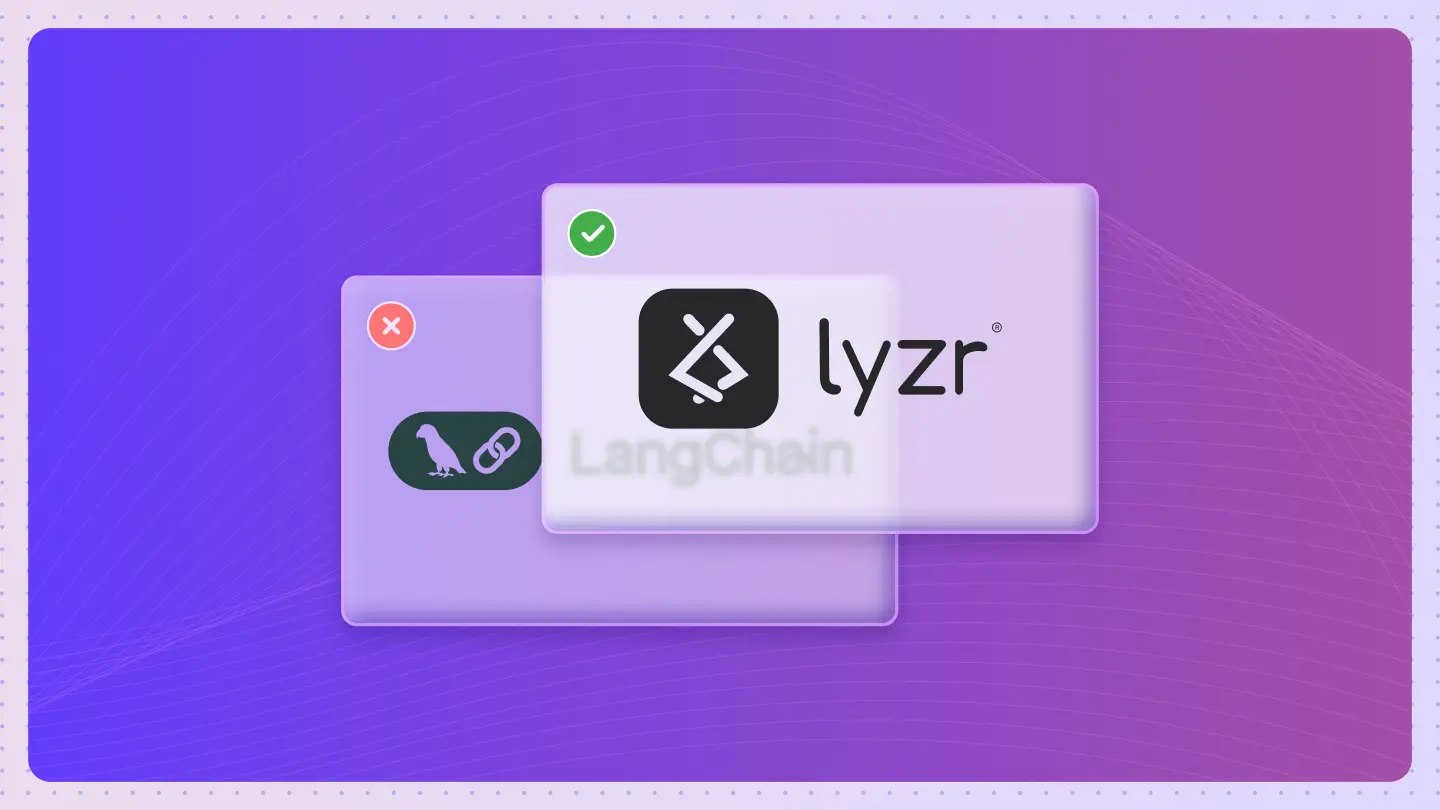

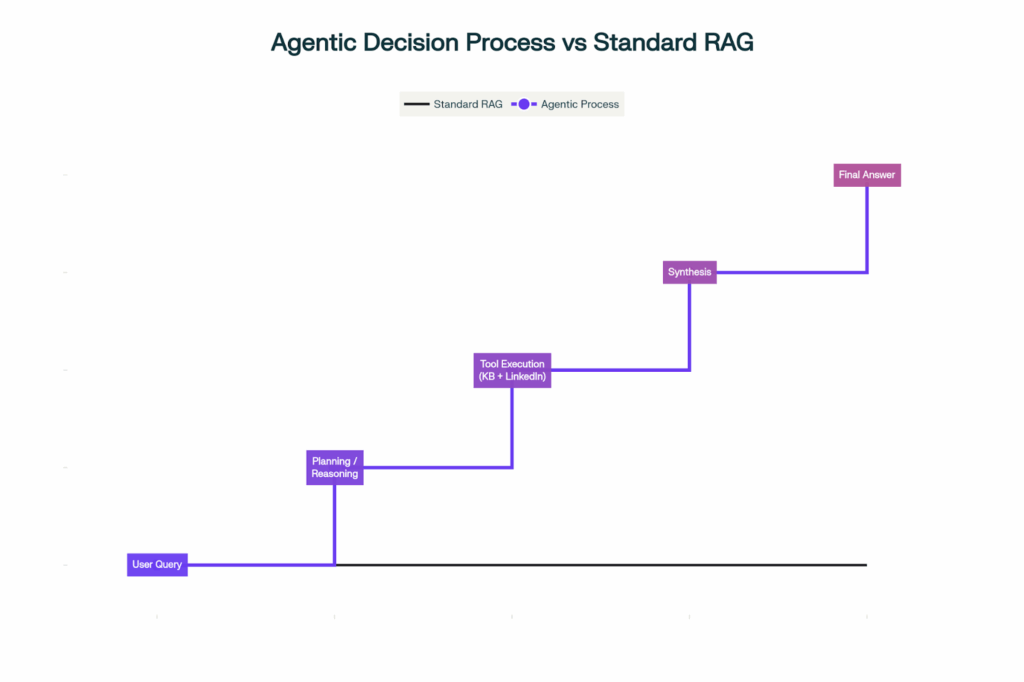

The fundamental flaw in the “old way” is the linear mindset. A “Chain” implies a rigid sequence where Step A leads to Step B, which leads to Step C. If Step B fails, the chain breaks. But the real world of enterprise business processes is non-linear. It loops. It requires judgment. It needs to pause, wait for human input, retry, and pivot based on new data. This requires a shift from Chains to Automata.
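The difference is easiest to see side by side. The framework-free Python sketch below is illustrative only and is not how Lyzr implements orchestration; the state names, the `flaky_extract` and `human_review` callables, and the `max_retries` parameter are hypothetical. `run_chain` aborts the whole run as soon as any step raises, while `run_automaton` loops back to retry, routes uncertain output to a human, and can stop in a paused state.

```python
from enum import Enum, auto
from typing import Callable, Optional


class StepFailed(Exception):
    """Raised by a step that could not complete."""


def run_chain(steps: list[Callable[[str], str]], data: str) -> str:
    # A linear chain: A -> B -> C. Any exception aborts the whole run.
    for step in steps:
        data = step(data)
    return data


class State(Enum):
    EXTRACT = auto()
    VALIDATE = auto()
    AWAIT_HUMAN = auto()
    DONE = auto()
    FAILED = auto()


def run_automaton(doc: str,
                  flaky_extract: Callable[[str], str],
                  human_review: Optional[Callable[[str], bool]],
                  max_retries: int = 3) -> tuple[State, list[str]]:
    """A tiny state machine that retries, pauses for a human, then finishes."""
    state, attempts, extracted, trace = State.EXTRACT, 0, "", []
    while state not in (State.DONE, State.FAILED):
        trace.append(state.name)
        if state is State.EXTRACT:
            try:
                extracted = flaky_extract(doc)
                state = State.VALIDATE
            except StepFailed:
                attempts += 1
                # Loop back to the same state instead of breaking the run.
                state = State.EXTRACT if attempts < max_retries else State.FAILED
        elif state is State.VALIDATE:
            # Uncertain output (marked with "?") goes to a human, not onward.
            state = State.AWAIT_HUMAN if "?" in extracted else State.DONE
        elif state is State.AWAIT_HUMAN:
            if human_review is None:
                # Pause here; a real system would persist the state and resume later.
                break
            state = State.DONE if human_review(extracted) else State.FAILED
    return state, trace
```

Calling `run_automaton` with `human_review=None` returns `State.AWAIT_HUMAN`: the run is neither finished nor failed, which is the "pause and wait for human input" behavior a linear chain cannot express.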

This is where Lyzr AI enters the conversation. Lyzr represents a divergence in design philosophy. It is not a library of loose components designed for hobbyists. It is an enterprise operating system for agents. It replaces the fragility of infinite dependencies with the stability of Deterministic Orchestration.

The Distinction: The Calculator vs. The Analyst

To understand the shift, we must look at the difference between Standard RAG and Agentic RAG.

Standard RAG functions like a calculator. You ask a question, and the system fetches the top three documents that match your keywords and summarizes them. It is static, single-pass, and unintelligent. If the answer isn’t in the top three documents, it fails.

Agentic RAG functions like a junior analyst. You ask a question, and the agent analyzes your intent. It decides which documents to look at. It might search, realize the data is incomplete, search a different database, cross-reference the two, and then perform a calculation before answering. It plans, reasons, and acts.
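The calculator/analyst contrast can be reduced to control flow. The Python sketch below is a hypothetical illustration, not Lyzr's retrieval code: keyword overlap stands in for vector similarity, and the `required` set of lowercase terms stands in for an LLM judging whether the evidence is sufficient. `standard_rag` retrieves once from one store; `agentic_rag` searches, checks what is still missing, rewrites its query, and moves on to another source.

```python
import re


def tokenize(text: str) -> set[str]:
    """Lowercase word set; a crude stand-in for an embedding."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def keyword_search(query: str, docs: list[str], k: int = 3) -> list[str]:
    """Rank documents by shared keywords and keep the top k that match at all."""
    q = tokenize(query)
    ranked = sorted(docs, key=lambda d: len(q & tokenize(d)), reverse=True)
    return [d for d in ranked[:k] if q & tokenize(d)]


def standard_rag(query: str, docs: list[str]) -> str:
    """The calculator: one retrieval pass over one store, then stop."""
    hits = keyword_search(query, docs)
    return " | ".join(hits) if hits else "I don't know"


def agentic_rag(query: str, sources: dict[str, list[str]],
                required: set[str]) -> tuple[str, list[str]]:
    """The analyst: search, check what is missing, re-plan, search elsewhere.

    `required` (lowercase terms) is a hypothetical stand-in for an LLM
    deciding whether the evidence gathered so far is enough to answer.
    """
    evidence: list[str] = []
    log: list[str] = []
    missing = set(required)
    current = query
    for name, docs in sources.items():
        hits = keyword_search(current, docs)
        log.append(f"searched {name} for {current!r}: {len(hits)} hit(s)")
        evidence.extend(hits)
        missing = required - tokenize(" ".join(evidence))
        if not missing:
            return " | ".join(evidence), log
        # Re-plan: rewrite the query around what is still missing.
        current = " ".join(sorted(missing))
    return "Need clarification: missing " + ", ".join(sorted(missing)), log
```

When every source is exhausted, the agentic version asks for clarification instead of returning a partial answer, which mirrors the "Handling Uncertainty" behavior described for the analyst.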

| Feature | Standard RAG (The Calculator) | Agentic RAG (The Analyst) |
| --- | --- | --- |
| Core Logic | Linear Retrieval (A -> B -> C) | Agentic Reasoning (Observe -> Plan -> Act) |
| Handling Uncertainty | Returns “I don’t know” or hallucinates | Retries search with new queries or asks for clarification |
| Data Source | Static Vector Store | Dynamic access to Tools and APIs |
| Ideal Use Case | Simple FAQ Bots | Complex Enterprise Workflows |

This is the “aha!” moment for CTOs. You don’t need a tool that helps you string prompts together. You don’t need a better calculator. You need a digital employee.

The New Approach in Practice: Building Agentic RAG in Minutes

In the past, building an “Agentic” system required a team of senior Python engineers, weeks of configuring vector databases, and complex graph theory to manage state. Lyzr Agent Studio has democratized this capability. It transforms what was once a codebase heavy with boilerplate into a visual, intuitive process. It is the shift from “Pro-Code” fragility to “Low-Code” stability.

Here is how an enterprise can build a production-ready Agentic RAG system in minutes rather than months.

- The Persona Definition (No More Blank Scripts)

Instead of writing a class definition in Python, you start in the Studio by defining the agent’s role. You give it a specific Role, such as “Senior Legal Analyst,” and a clear Goal, like “Review procurement contracts and flag compliance risks against company policy.” You simply type natural language instructions. You might say, “You are thorough and conservative. Always cross-reference the liability clauses with our standard insurance policy.” Lyzr’s architecture handles the “System Prompt” engineering for you, ensuring the agent adheres to these constraints without you needing to tweak temperature settings or token limits manually.

- The “One-Click” Knowledge Base

In a traditional setup, adding data is a chore. You have to chunk the text, select an embedding model, initialize a vector store like Pinecone or Milvus, and write the retrieval logic. In Lyzr Agent Studio, this is a drag-and-drop operation. You upload your “Procurement Policy.pdf” and “Master Services Agreement.docx,” and the automation handles the chunking, embedding, and indexing in a secure, private vector store. Crucially, you are not just building a search bar. You are equipping the agent with a “Knowledge Tool.” The agent now knows it has access to these documents and will autonomously decide when to read them during a conversation.

- Adding “Arms and Legs” (Tool Integration)

A brain in a jar is useless because an agent needs to act. In the “old way,” connecting an LLM to the internet or your email required complex API wrappers and authentication handling. In the Studio, you select from a library of pre-built tools. You can enable Perplexity Search to give the agent the ability to fact-check contract clauses against real-time laws. You can connect Gmail or Outlook to allow the agent to draft the summary email to the vendor. With a simple toggle, your “Legal Analyst” can now look up the vendor on LinkedIn to verify their identity, check the latest regulations on the web, and draft an email, all without a single line of Python.

- The “Agentic” Difference in Action

Once deployed, the difference becomes obvious. If you ask a Standard RAG system, “Is this contract risky?”, it will passively reply, “Here is a summary of the indemnity clause.” The Lyzr agentic response, however, is different. It might say, “I’ve reviewed the contract. The indemnity clause limits liability to $10k, but our policy requires $1M. I also checked the vendor on LinkedIn and they seem to be a shell company with no employees. Recommendation: Reject.” This behavior, reasoning across documents and external tools, is the hallmark of Agentic RAG.
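The Studio steps above are point-and-click, but it can help to see roughly what a builder like this automates behind the scenes. The following Python sketch is hypothetical and is not the Lyzr SDK: `AgentSpec`, `Tool`, `chunk_text`, and `make_knowledge_tool` are illustrative names. It renders the persona into a system prompt, splits uploaded text into overlapping chunks, exposes those chunks as a search tool, and only lets the agent call tools whose toggle is on. Keyword overlap stands in for embeddings, and the document text is assumed to be already extracted from the PDF or DOCX.

```python
from dataclasses import dataclass, field
from typing import Callable


def chunk_text(text: str, size: int = 200, overlap: int = 50) -> list[str]:
    """Split text into fixed-size character windows that overlap by `overlap`."""
    if not 0 <= overlap < size:
        raise ValueError("overlap must satisfy 0 <= overlap < size")
    if not text:
        return []
    step = size - overlap
    return [text[i:i + size] for i in range(0, max(len(text) - overlap, 1), step)]


@dataclass
class Tool:
    name: str
    description: str
    run: Callable[[str], str]
    enabled: bool = False  # hypothetical equivalent of the Studio toggle


@dataclass
class AgentSpec:
    role: str
    goal: str
    instructions: str
    tools: dict[str, Tool] = field(default_factory=dict)

    def system_prompt(self) -> str:
        """Render the persona and the enabled tools into one system prompt."""
        enabled = [f"- {t.name}: {t.description}"
                   for t in self.tools.values() if t.enabled]
        return "\n".join([f"You are a {self.role}.", f"Goal: {self.goal}",
                          self.instructions, "Tools available:", *enabled])

    def call_tool(self, name: str, arg: str) -> str:
        """Run a tool only if it exists and has been switched on."""
        tool = self.tools.get(name)
        if tool is None or not tool.enabled:
            raise PermissionError(f"tool {name!r} is not enabled for this agent")
        return tool.run(arg)


def make_knowledge_tool(docs: dict[str, str]) -> Tool:
    """Chunk each document and expose keyword search over the chunks as a tool."""
    chunks = [(src, c) for src, text in docs.items() for c in chunk_text(text)]

    def search(query: str) -> str:
        words = set(query.lower().split())
        best = max(chunks, default=None,
                   key=lambda sc: len(words & set(sc[1].lower().split())))
        return "no documents indexed" if best is None else f"[{best[0]}] {best[1]}"

    return Tool("knowledge", "Search the uploaded policy and contract files",
                search, enabled=True)


analyst = AgentSpec(
    role="Senior Legal Analyst",
    goal="Review procurement contracts and flag compliance risks against company policy.",
    instructions="You are thorough and conservative. Always cross-reference "
                 "the liability clauses with our standard insurance policy.",
    tools={
        "knowledge": make_knowledge_tool(
            {"Procurement Policy.pdf": "Vendors must carry liability coverage of at least 1M."}),
        "web_search": Tool("web_search", "Check current regulations",
                           lambda q: "stubbed result"),  # toggle left off
    },
)
```

Because `web_search` is registered but not enabled, it never appears in the system prompt and `call_tool` refuses it, so flipping a toggle changes what the agent can do without touching the persona.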

Lyzr: The Alternative You are Looking For

The shift from LangChain to Lyzr is not just technical; it is strategic. It aligns with the massive economic shift that Sequoia Capital calls Service-as-Software. In the old SaaS model, you bought a tool to help a human work faster. In the new Agentic model, you buy the work itself. You don’t want a “CRM Helper”; you want an “AI SDR” that prospects, sends emails, and books meetings.

The “Private Environment” Advantage

For an enterprise to embrace this, one thing is non-negotiable: Data Sovereignty. In an era where data leakage to public model providers is a C-level concern, Lyzr promotes a “Data Residency” model. Unlike many SaaS wrappers that force you to pipe your data through their servers, Lyzr SDKs and the Agent Studio are designed to run locally in your cloud.

Local Execution: Your agents run within your Virtual Private Cloud (AWS, Azure, GCP). Your data never leaves your perimeter.

Compliance: The platform is SOC2 and GDPR compliant out of the box.

AI-Only Data Lake: Lyzr creates a secure environment where vector stores and agent memories reside solely within your infrastructure.

This is a critical differentiator. A developer using an open-source library is responsible for building their own security wrappers. Lyzr integrates these guardrails natively.

Centralized Command with Enterprise Hub

Scale brings chaos. Managing one agent is easy, but managing a thousand is a governance crisis. The Lyzr Enterprise Hub acts as the command center. It allows IT administrators to manage secret keys, eliminating the risk of .env files on laptops, while monitoring agent performance and strictly controlling access. Crucially, it includes a “Kill Switch.” If an autonomous agent begins to behave unexpectedly, perhaps entering a loop or spending excessive tokens, admins can sever its access instantly. This level of control is absent in the “glue code” ecosystem and is essential for moving from pilot to production.
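A kill switch of this kind can be approximated with a guard that every agent action passes through. The sketch below is hypothetical and does not reflect the Enterprise Hub's actual API; `KillSwitch`, `max_tokens`, and `max_repeats` are illustrative names, and counting repeated identical actions is a deliberately crude loop heuristic.

```python
from collections import Counter
from dataclasses import dataclass, field


class AgentHalted(RuntimeError):
    """Raised once an agent has been cut off."""


@dataclass
class KillSwitch:
    """Hypothetical guard: halts an agent on a token budget, a loop, or an admin call."""
    max_tokens: int
    max_repeats: int = 3
    tokens_used: int = 0
    revoked: bool = False
    reason: str = ""
    _actions: Counter = field(default_factory=Counter)

    def revoke(self, reason: str) -> None:
        # Admin-triggered (or automatic): sever access immediately.
        self.revoked, self.reason = True, reason

    def check(self, action: str, tokens: int) -> None:
        """Call before every tool or model call; raises if the agent must stop."""
        if self.revoked:
            raise AgentHalted(self.reason)
        self.tokens_used += tokens
        self._actions[action] += 1
        if self.tokens_used > self.max_tokens:
            self.revoke(f"token budget exceeded ({self.tokens_used} > {self.max_tokens})")
        elif self._actions[action] > self.max_repeats:
            self.revoke(f"loop suspected: {action!r} repeated {self._actions[action]} times")
        if self.revoked:
            raise AgentHalted(self.reason)
```

In a real deployment the `revoked` flag would need to live in shared storage so that an administrator's `revoke` call reaches agents running in other processes; here it is an in-memory field to keep the sketch self-contained.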

Empirical Evidence: The Shift in Action

The true test of any framework is not how well it performs in a hackathon, but how it survives the crushing pressure of enterprise operations. While LangChain is ubiquitous in the prototyping phase, Lyzr is proving its value where it counts: in the P&L.

Case Study 1: AirAsia – The 95% Efficiency Leap

AirAsia, a global aviation leader, faced a massive bottleneck in their support operations. Handling customer queries across multiple markets and languages was leading to slow response times and high operational costs. By deploying Lyzr’s agentic workflow, they didn’t just “assist” their support staff; they transformed the process.

The Result: A 95% reduction in agent response time. The system automated the retrieval and synthesis of support data, allowing queries to be resolved in seconds rather than minutes. This is the power of a stable, low-latency agentic architecture.
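To put a 95% reduction in concrete terms, here is the arithmetic with a hypothetical 10-minute (600 s) baseline; the case study does not state the original figure:

$$t_{\text{new}} = (1 - 0.95)\,t_{\text{old}} = 0.05 \times 600\ \text{s} = 30\ \text{s}$$

By the same formula, a one-minute baseline would fall to 3 seconds.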

Case Study 2: Keka HR – Halving the Workload

Keka HR, a leading HR tech innovator, struggled with the sheer volume of recruitment screening. Recruiters were drowning in resumes and initial screening calls, leaving little time for high-value candidate engagement. They deployed Jazon, Lyzr’s autonomous SDR and interaction architect, adapted for hiring.

The Result: A 50% time saving for recruiters. The AI Hiring Assistant now handles the initial outreach, screening, and scheduling, effectively acting as a digital employee that never sleeps.

Case Study 3: HFS Research – The Knowledge Engine

HFS Research, a premier analyst firm, had a “library problem.” They possessed over 4,000 high-value research assets (PDFs, reports, audio), but clients couldn’t find the specific insights they needed using keyword search. They built an AI-Driven Knowledge Engine using Lyzr. Unlike a standard search bar, this Agentic RAG system ingests complex queries and synthesizes answers from across the document corpus.

The Result: “Instant insights.” The system transformed static documents into an interactive consultant, unlocking the value trapped in their archives.

These examples align with the findings of Lyzr’s State of AI Agents 2025 report: 80% of enterprises now prefer AI hosted inside their own AWS cloud due to compliance risks. Lyzr is built to deliver this “Private Agent” capability at scale.

Comparisons: Why Lyzr Wins the “Alternative” Debate

When you are searching for a “LangChain alternative,” you are typically trying to solve one of three specific problems: Complexity, Reliability, or Security. Let’s look at how Lyzr stacks up against the field.

| Feature | LangGraph | Microsoft AutoGen | Lyzr AI |
| --- | --- | --- | --- |
| Primary Focus | Low-level graph control | Multi-agent conversation research | Enterprise Production & Governance |
| Complexity | High: Requires deep graph theory knowledge | Medium: Easy to start, hard to control loops | Low: “Task-Agent-Pipeline” abstraction |
| Reliability | Brittle if nodes are undefined | Prone to “conversational loops” | High: Deterministic execution |
| Security | Developer must build wrappers | Basic local execution | Native: SOC2, ISO 27001, Secret Management |

- Solving Complexity: Lyzr vs. LangGraph

LangGraph is powerful, but it is academic. It requires you to manually define nodes and edges, demanding a steep learning curve. Lyzr Agent Studio uses an intuitive “Task-Agent-Pipeline” structure. You define what needs to be done and who does it, and the platform handles the graph topology.

- Solving Reliability: Lyzr vs. AutoGen

Microsoft AutoGen is excellent for research but notoriously difficult to control in production, often entering infinite loops of compliments or arguments. Lyzr focuses on Deterministic Orchestration, using “Reflexion” modules to ensure agents adhere to Standard Operating Procedures.

- Solving Security: Lyzr vs. CrewAI

CrewAI is popular for role-playing agents but lacks native enterprise governance. Lyzr adds the Enterprise Hub layer (monitoring, secret key management, and data residency enforcement) that large companies treat as non-negotiable.
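The reliability point rests on keeping self-correction bounded. Lyzr's Reflexion module internals are not described here, so the Python sketch below shows only the generic Reflexion-style pattern (generate, evaluate, write a verbal reflection, retry with it) under a hard attempt cap. The `generate` callable stands in for an LLM call, and expressing SOP rules as named predicates is an assumption made for illustration.

```python
from typing import Callable

# A hypothetical SOP rule: (rule name, predicate over the agent's draft).
Rule = tuple[str, Callable[[str], bool]]


def reflexion_loop(task: str,
                   generate: Callable[[str, list[str]], str],
                   sop: list[Rule],
                   max_attempts: int = 3) -> tuple[str, list[str]]:
    """Generate, check against SOP rules, reflect on failures, retry (bounded)."""
    reflections: list[str] = []
    for attempt in range(1, max_attempts + 1):
        draft = generate(task, reflections)
        violations = [name for name, ok in sop if not ok(draft)]
        if not violations:
            return draft, reflections
        # Verbal self-reflection carried into the next attempt's context.
        reflections.append(f"Attempt {attempt} violated: {', '.join(violations)}")
    # Hard stop: escalate instead of looping forever.
    return "ESCALATE_TO_HUMAN", reflections
```

The attempt cap is the design choice that matters: an agent that cannot satisfy the SOP ends in a known, escalated state rather than in an open-ended conversation.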

Organizational General Intelligence (OGI)

We are standing at the precipice of a new era. The goal is no longer just “Generative AI”; it is Organizational General Intelligence.

OGI is the state where AI agents equipped with long-term memory, self-reflection, and tool access collaborate seamlessly across an organization. In this future, your “Marketing Team” isn’t just people; it’s a mix of humans and agents like Skott (Lyzr’s AI Marketer) working in tandem.

The failure rate of 2025 is a signal. It is telling you that the tools of the prototyping era are finished. If you want to build a toy, use glue. If you want to build the future of your enterprise, build on steel.

Build your first private AI Agent today and turn your workforce into a scalable, autonomous engine.

Frequently Asked Questions

1. Is Lyzr a direct replacement for LangChain?

Yes, for enterprise use cases. Lyzr replaces the orchestration and application layers of LangChain with a more robust, production-ready platform. It abstracts away the low-level complexity of chains in favor of secure Agents, Tasks, and Pipelines.

2. Can I build an agent without writing code?

Yes. Lyzr Agent Studio is a low-code/no-code platform that allows you to build, test, and deploy complex RAG and Agentic workflows using a visual interface in minutes.

3. What is “Agentic RAG” and how is it different?

Standard RAG just fetches data based on keywords. Agentic RAG uses an AI agent to reason about the query, decide which data to fetch, plan a response, and even use external tools to verify information. It is “Active” reasoning vs. “Passive” retrieval.

4. Can I host Lyzr agents on my own servers?

Absolutely. Lyzr is “Privacy-First.” All agents, vector stores, and data processing can be deployed within your own Virtual Private Cloud (VPC) or on-premise infrastructure, ensuring 100% data sovereignty.

5. What is the failure rate for AI projects mentioned?

In 2025, the failure rate for AI projects hit 42%, primarily due to the complexity of moving from prototype to production using brittle frameworks. Lyzr is designed specifically to solve this “Valley of Death.”

6. Does Lyzr support multi-agent workflows?

Yes. Lyzr Automata is built for multi-agent orchestration. You can create a “mesh” of agents that communicate, hand off tasks, and collaborate to solve complex problems.
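The handoff pattern in this answer can be sketched in plain Python. This is a hypothetical illustration, not the Lyzr Automata API: `Message`, `run_mesh`, and the `max_hops` limit are made-up names, and each agent is a plain function standing in for a model-backed agent that either finishes the task or names the next agent.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Message:
    sender: str
    content: str
    handoff_to: Optional[str] = None  # None means the task is finished


Agent = Callable[[str], Message]


def run_mesh(agents: dict[str, Agent], start: str, task: str,
             max_hops: int = 5) -> list[Message]:
    """Route a task between agents until one finishes or the hop limit is hit."""
    transcript: list[Message] = []
    current, payload = start, task
    for _ in range(max_hops):
        msg = agents[current](payload)
        transcript.append(msg)
        if msg.handoff_to is None:
            return transcript
        if msg.handoff_to not in agents:
            raise KeyError(f"unknown agent {msg.handoff_to!r}")
        # Hand off: the next agent receives the previous agent's output.
        current, payload = msg.handoff_to, msg.content
    raise RuntimeError(f"no agent finished within {max_hops} hops")
```

Keeping the full transcript makes each handoff auditable, and the hop limit turns a runaway exchange between agents into an explicit error.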

7. What models does Lyzr support?

Lyzr is model-agnostic. You can use OpenAI, Anthropic, Gemini, or open-source models (like Llama 3) via integrations. You are not locked into a single model provider.

8. How does Lyzr handle data security?

Lyzr is SOC2, GDPR, and ISO 27001 compliant. It includes native features like PII redaction, secret key management via the Enterprise Hub, and local execution to prevent data leakage.

9. What is the “State of AI Agents 2025” report?

It is a comprehensive industry report by Lyzr highlighting key trends, such as the finding that 80% of enterprises now prefer private cloud hosting for their AI agents over public SaaS solutions.

10. How quickly can I get started?

With Lyzr Agent Studio, you can build your first Agentic RAG system in under 5 minutes by using pre-built templates and the drag-and-drop builder.
