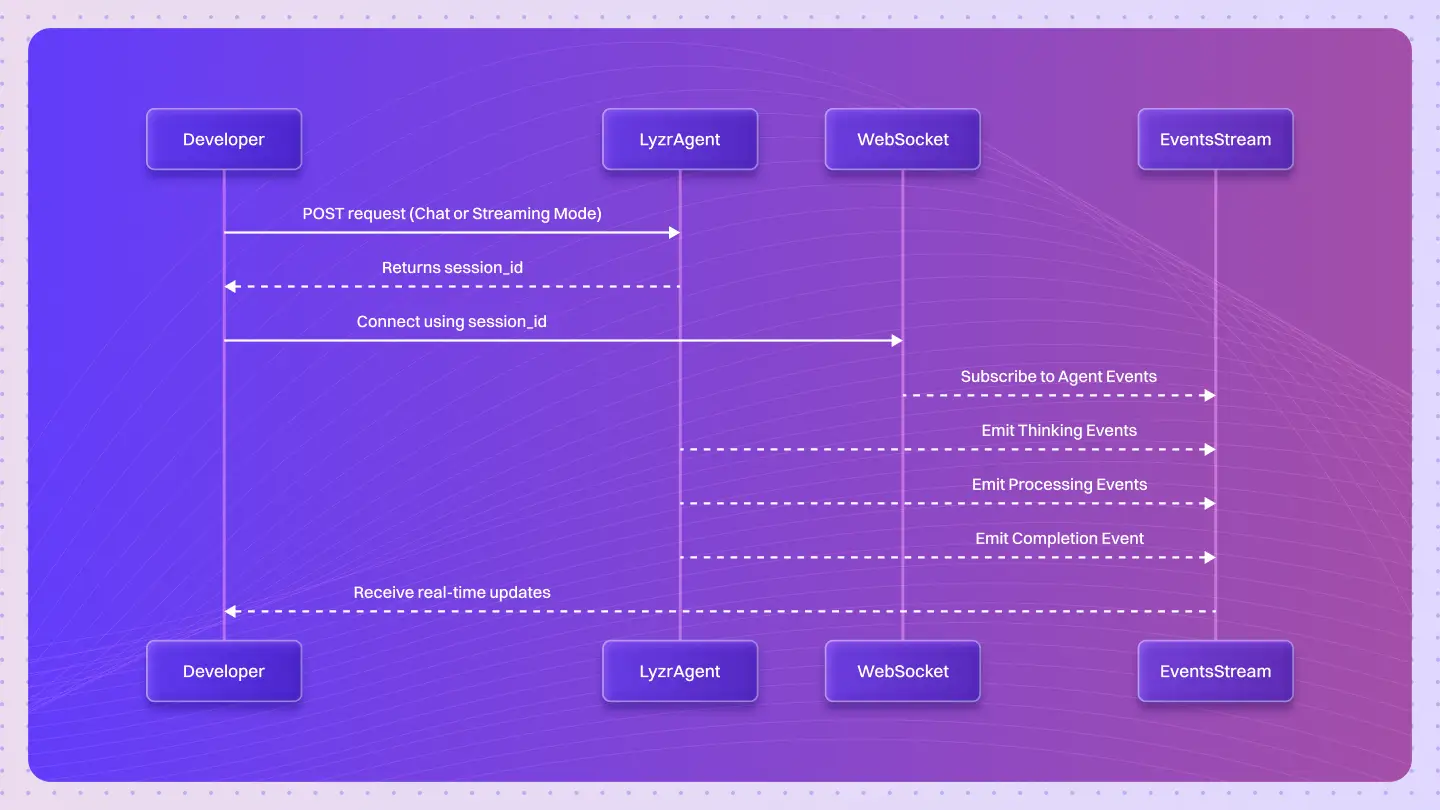

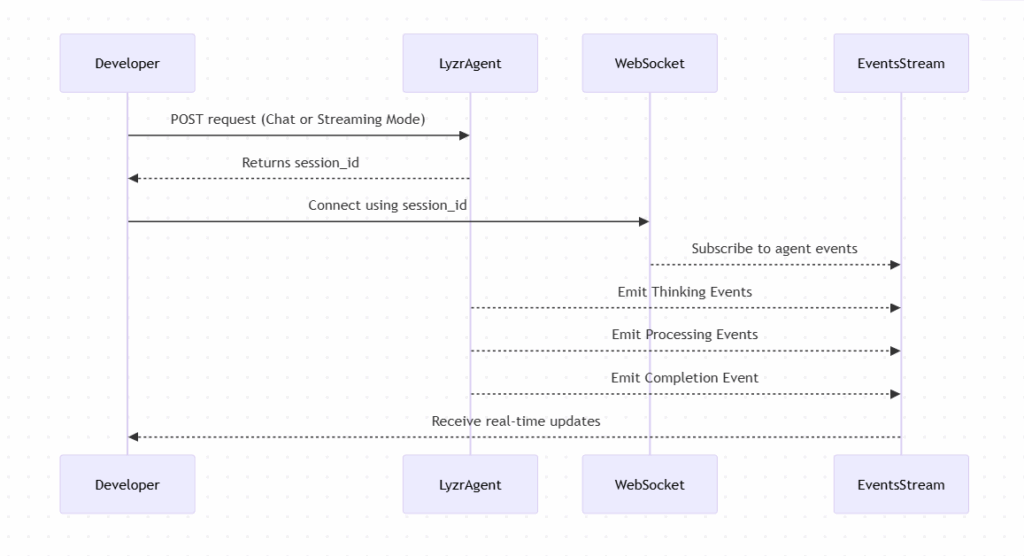

When an AI agent runs a request, it goes through multiple steps (reasoning, processing, generating a response), but these steps often remain hidden from developers.

How do you know what the agent is doing at each stage? How can you detect errors or monitor progress in real time?

Lyzr Studio solves this with Agent Events.

Every time an agent is invoked, it emits a stream of events that reveal its internal workflow. From intermediate reasoning steps to final responses, and even error handling, these events give developers a detailed view of agent execution.

A single session can produce dozens of events, each providing structured insights into the agent’s reasoning, model responses, and lifecycle updates.

With this visibility, developers can monitor performance, debug faster, and even provide users with live progress updates.

In this blog, we’ll get into how Agent Events work, how to capture them, and best practices for using them to gain full observability into your AI agents.

How Agents Are Invoked

Every agent run starts with an invocation. In Lyzr Studio, agents can be invoked in two main ways: Chat Mode and Streaming Mode.

In Chat Mode, the agent processes a single request and returns a complete response. It's like sending a message and waiting for the full reply.

In Streaming Mode, the agent sends responses incrementally as they are generated, which is useful for long-running tasks or when you want to display partial results to users in real time.

Each invocation generates a unique session_id that identifies that interaction. This ID is critical because it links the agent's actions to the events you'll receive later. Without it, there's no way to track what the agent is doing in real time.

For instance, a single session might produce:

- Thinking events capturing intermediate reasoning steps,

- Processing events showing progress updates, and

- Completion events when the final response is ready.

By capturing the session_id at the start, you can subscribe to all these events and get a live view of the agent’s execution.
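
A minimal sketch of holding on to the session_id at invocation time. Everything here other than the name session_id is an illustrative assumption: the `Invocation` fields `agent_id` and `message`, the `build_invocation` helper, and the choice to mint the ID on the caller's side are not taken from the Lyzr Studio request schema, and your setup may instead return the ID in the invocation response.

```python
import uuid
from dataclasses import dataclass, asdict
from typing import Optional


@dataclass(frozen=True)
class Invocation:
    """Hypothetical request body; only session_id is named in this post."""
    agent_id: str
    session_id: str
    message: str


def build_invocation(agent_id: str, message: str,
                     session_id: Optional[str] = None) -> Invocation:
    # Reuse a supplied session_id, otherwise create a fresh one for this interaction.
    return Invocation(agent_id=agent_id,
                      session_id=session_id or uuid.uuid4().hex,
                      message=message)


invocation = build_invocation("my-agent", "Summarize this ticket")
payload = asdict(invocation)          # what you would send to the agent
session_id = invocation.session_id    # keep this for the event subscription
```

Keeping the ID on an immutable object makes it harder to accidentally subscribe with a different value than the one used to invoke the agent.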

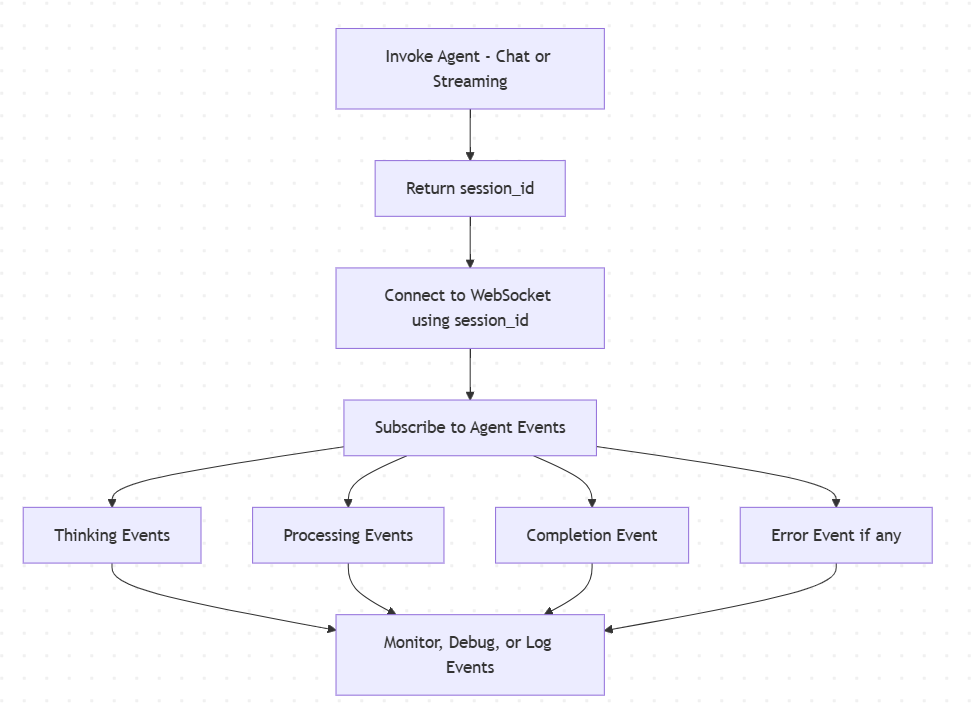

Receiving Agent Events

Once an agent is invoked, the next step is capturing its runtime events. In Lyzr Studio, these events are delivered in real time through a WebSocket connection, allowing developers to monitor agent behavior as it happens.

To receive events, you connect to the WebSocket endpoint using the session_id generated during invocation. This session_id links the incoming events to the correct agent session. Using an incorrect or expired session_id will prevent events from being streamed, so it’s important to capture and reuse the correct ID.

During the session, the WebSocket connection delivers various types of events:

- Thinking events – intermediate reasoning steps (Manager agents only)

- Processing events – structured progress updates from the LLM and orchestrator

- Completion events – indicate the final response is ready

- Error events – capture any failures during inference or integrations

It’s important to keep the WebSocket client open for the duration of the session. Closing the connection too early can result in missing events, especially for long-running tasks.
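
The subscription loop can be sketched independently of any particular WebSocket client. The example below assumes each message arrives as a JSON string carrying a session_id field, as in the payload described in this post. `consume_events` and `fake_stream` are hypothetical names, and the stream is simulated so the sketch runs offline.

```python
import asyncio
import json
from typing import AsyncIterator, Callable


async def consume_events(
    messages: AsyncIterator[str],
    session_id: str,
    on_event: Callable[[dict], None],
) -> int:
    """Read raw JSON messages until the stream ends; return how many were handled."""
    handled = 0
    async for raw in messages:
        event = json.loads(raw)
        # Ignore anything that belongs to a different session.
        if event.get("session_id") != session_id:
            continue
        on_event(event)
        handled += 1
    return handled


async def fake_stream() -> AsyncIterator[str]:
    # Stand-in for a live WebSocket connection.
    yield json.dumps({"session_id": "s1", "event_type": "thinking", "status": "in_progress"})
    yield json.dumps({"session_id": "other", "event_type": "processing", "status": "in_progress"})
    yield json.dumps({"session_id": "s1", "event_type": "llm_generation", "status": "completed"})


seen = []
count = asyncio.run(consume_events(fake_stream(), "s1", seen.append))
```

The loop keeps reading until the stream itself ends, which matches the advice to leave the client open for the whole session. With the third-party websockets package, an open connection can be iterated with `async for` in the same way and passed in as `messages`; the endpoint URL is not given in this post, so it is left out here.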

By subscribing to these events, developers can:

- Monitor agent execution in real time

- Debug and trace agent behavior step by step

- Provide live progress updates to users

Next, we'll look at the event payload and its fields, and then at the event lifecycle, which shows how these events fit together to give a complete picture of agent execution.

Event Payload and Fields

Each agent event in Lyzr Studio is delivered as a JSON object, providing structured information about the agent’s execution. Understanding the key fields is essential for monitoring, debugging, or logging agent behavior in real time.

Here are the most important fields:

| Field | Description |
| --- | --- |
| feature | Component generating the event (e.g., llm_generation) |
| level | Logging level (DEBUG, INFO, ERROR) |
| status | Process status (in_progress, completed, failed) |
| event_type | Type of event (e.g., thinking, processing, llm_generation) |
| run_id | Unique identifier for this agent run |
| session_id | Identifier linking the event to the agent session |
| model | LLM model used (e.g., gpt-4o-mini) |

Here’s a sample event payload for reference:

    {
      "feature": "llm_generation",
      "level": "DEBUG",
      "status": "in_progress",
      "event_type": "llm_generation",
      "run_id": "0ecb09b6-77d7-4709-a7ce-a43d3f5d748c",
      "session_id": "68d0bbdef8c41f367a3f3a11-6fwxvvb1xxr",
      "model": "gpt-4o-mini"
    }

Focusing on these fields first helps developers quickly identify and track relevant events, making real-time observability practical and actionable.
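
The fields listed above can be mapped onto a small typed record so the rest of your code does not pass raw dictionaries around. This is a sketch: the `AgentEvent` class and `parse_event` helper are hypothetical names, and treating `model` as optional is an assumption, since the post does not say whether every event carries it.

```python
import json
from dataclasses import dataclass, fields
from typing import Optional


@dataclass(frozen=True)
class AgentEvent:
    feature: str
    level: str
    status: str
    event_type: str
    run_id: str
    session_id: str
    model: Optional[str] = None  # assumed optional: not every event need involve an LLM


def parse_event(raw: str) -> AgentEvent:
    data = json.loads(raw)
    known = {f.name for f in fields(AgentEvent)}
    # Drop unknown keys so extra payload fields do not break parsing.
    return AgentEvent(**{k: v for k, v in data.items() if k in known})


raw = """{
  "feature": "llm_generation",
  "level": "DEBUG",
  "status": "in_progress",
  "event_type": "llm_generation",
  "run_id": "0ecb09b6-77d7-4709-a7ce-a43d3f5d748c",
  "session_id": "68d0bbdef8c41f367a3f3a11-6fwxvvb1xxr",
  "model": "gpt-4o-mini"
}"""
event = parse_event(raw)
```

A payload that lacks one of the required fields raises a `TypeError` here, which surfaces schema surprises early instead of letting them pass silently.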

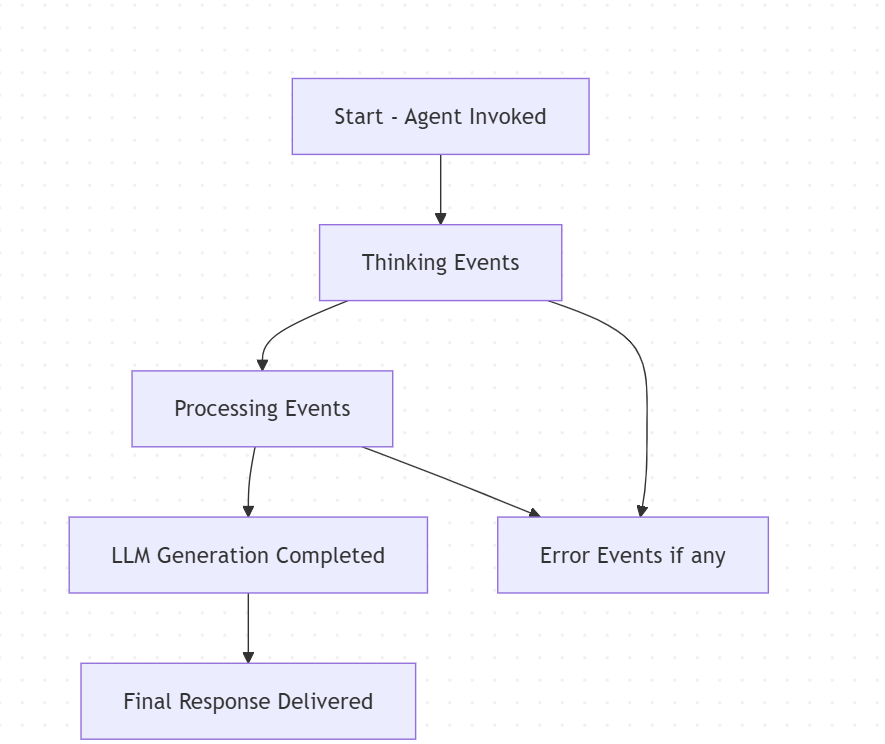

Event Lifecycle

When an agent runs, it doesn’t just jump from start to finish. It goes through several stages, and each stage emits events that provide visibility into what’s happening behind the scenes. Understanding this lifecycle is key to monitoring, debugging, and optimizing agent behavior.

A typical sequence of events during an agent invocation includes:

- Start – The agent is invoked and the session begins.

- Thinking Events – Intermediate reasoning steps, especially for Manager agents.

- Processing Events – Updates from the orchestrator or LLM, such as memory usage, KB retrieval, or tool calls.

- LLM Generation Completed – The agent finishes generating its response.

- Final Response Delivered – The completed output is returned to the caller.

- Error Events – Emitted at whatever stage a failure occurs during inference or integration, rather than only at the end.

This workflow can be visualized as follows:

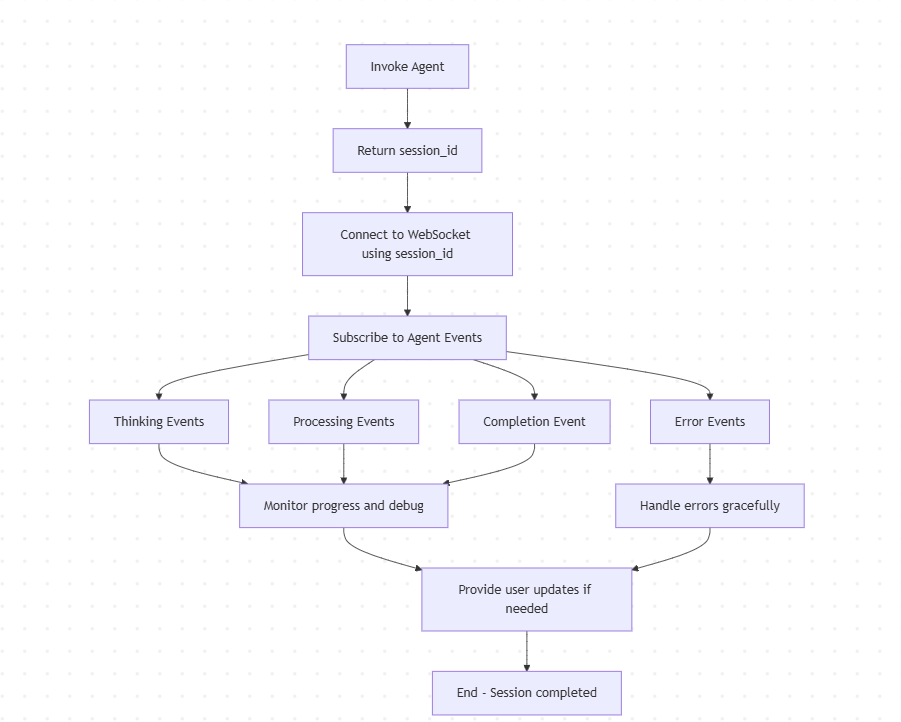
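
One way to follow the lifecycle in code is to collapse the event stream into the ordered list of stages it passed through. The mapping from payload fields to stages below is an assumption made for illustration. The post does not specify which event_type and status combinations mark each stage, and "Final Response Delivered" is left out because it is the return to the caller rather than an event. `Stage`, `stage_for`, and `trace` are hypothetical names.

```python
from enum import Enum


class Stage(Enum):
    STARTED = "started"
    THINKING = "thinking"
    PROCESSING = "processing"
    GENERATION_COMPLETED = "generation_completed"
    FAILED = "failed"


def stage_for(event: dict) -> Stage:
    # Assumed mapping from payload fields to lifecycle stages.
    if event.get("status") == "failed" or event.get("level") == "ERROR":
        return Stage.FAILED
    if event.get("event_type") == "llm_generation" and event.get("status") == "completed":
        return Stage.GENERATION_COMPLETED
    if event.get("event_type") == "thinking":
        return Stage.THINKING
    return Stage.PROCESSING


def trace(events: list) -> list:
    """Collapse an event stream into the distinct stages it moved through, in order."""
    stages = [Stage.STARTED]
    for event in events:
        stage = stage_for(event)
        if stages[-1] is not stage:
            stages.append(stage)
    return stages


events = [
    {"event_type": "thinking", "status": "in_progress"},
    {"event_type": "thinking", "status": "in_progress"},
    {"event_type": "processing", "status": "in_progress"},
    {"event_type": "llm_generation", "status": "in_progress"},
    {"event_type": "llm_generation", "status": "completed"},
]
stages = trace(events)
```

A compact trace like this is easier to show in a progress indicator than the raw stream, where one session can produce dozens of events.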

Best Practices

Working with agent events effectively requires more than just capturing them. Following a few best practices ensures monitoring, debugging, and user updates remain reliable and useful.

Start by reusing the same session_id for both agent invocation and WebSocket subscription. This keeps the event stream linked to the correct session and prevents missed events.

Next, handle error events gracefully. Agents may encounter failures during reasoning, processing, or integrations. Catching these errors without breaking downstream applications is essential.
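
A sketch of what handling errors gracefully might look like: agent-side failures are logged and reported to the caller, and an exception raised by your own handler is contained rather than propagated. Detecting failures through `status == "failed"` or `level == "ERROR"` is an assumption based on the field values listed in this post, and `safe_dispatch` is a hypothetical helper name.

```python
import logging

logger = logging.getLogger("agent_events")


def safe_dispatch(event: dict, handler) -> bool:
    """Run handler on an event; return False instead of raising if anything goes wrong."""
    try:
        if event.get("status") == "failed" or event.get("level") == "ERROR":
            logger.error("agent error in %s (run %s)",
                         event.get("feature"), event.get("run_id"))
            return False
        handler(event)
        return True
    except Exception:
        # A buggy handler should not take down the loop that reads events.
        logger.exception("handler failed for event_type=%s", event.get("event_type"))
        return False


def broken_handler(event: dict) -> None:
    raise ValueError("downstream bug")


ok = safe_dispatch({"event_type": "processing", "status": "in_progress"}, lambda e: None)
agent_failed = safe_dispatch({"feature": "llm_generation", "status": "failed"}, lambda e: None)
handler_failed = safe_dispatch({"event_type": "thinking", "status": "in_progress"}, broken_handler)
```

Returning a boolean lets the caller decide what to do next, such as showing a fallback message to the user, without wrapping every call site in its own try block.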

Use events strategically. They can help with:

- Real-time monitoring

- Debugging agent behavior

- Providing users with live progress updates

Finally, maintain persistent WebSocket connections for long-running agents. Closing the connection too early can result in lost events and incomplete monitoring.
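
For long-running agents, one common way to keep a subscription alive is to reconnect with exponential backoff when the connection drops. The sketch below is a general pattern, not something this post describes as built into Lyzr Studio. `backoff_delays` and `run_with_reconnect` are hypothetical helpers, and `ConnectionError` stands in for whatever exception your WebSocket client raises on a dropped connection.

```python
import asyncio
from typing import Awaitable, Callable


def backoff_delays(attempts: int, base: float = 0.5, cap: float = 8.0) -> list:
    """Exponential delays: base, 2*base, 4*base, ... each capped at `cap` seconds."""
    return [min(cap, base * (2 ** i)) for i in range(attempts)]


async def run_with_reconnect(
    listen: Callable[[], Awaitable[None]],
    attempts: int = 5,
    base: float = 0.5,
) -> bool:
    """Call listen() until it returns cleanly; retry on ConnectionError with backoff."""
    for delay in backoff_delays(attempts, base):
        try:
            await listen()
            return True
        except ConnectionError:
            await asyncio.sleep(delay)
    return False


calls = {"n": 0}


async def flaky_listen() -> None:
    # Simulated listener: drops twice, then finishes normally.
    calls["n"] += 1
    if calls["n"] < 3:
        raise ConnectionError("dropped")


recovered = asyncio.run(run_with_reconnect(flaky_listen, base=0.0))
```

Whether events emitted while the client was disconnected can be recovered after reconnecting is not covered in this post, so treat reconnection as a way to limit gaps rather than eliminate them.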

Following these practices helps developers get the most out of agent events, ensuring AI agents operate transparently, reliably, and efficiently.

Wrapping Up

Agent events in Lyzr Studio give real-time visibility into every stage of an AI agent, from invocation to final response and error handling. This allows developers to monitor execution, debug issues, and provide users with live progress updates.

Following best practices like reusing session_id, maintaining persistent connections, and handling errors ensures this visibility is reliable and actionable.

Start building with Lyzr Agent Studio to capture every step of your agent’s workflow and make AI agents fully observable and easier to manage.
