Most enterprise research systems are designed to retrieve documents, not deliver answers. They rely on structured filters and keyword matches, which work well for straightforward lookups. But as the volume of content grows and the nature of questions becomes more layered, that approach starts to show its limits.

Users want to know what’s changed, what’s most recent, what a specific analyst has written, or how a position has evolved across different reports. Traditional search isn’t built for that level of reasoning or context.

That’s the challenge HFS Research set out to solve.

With a repository of over 4,000 research assets, spanning IT services and enterprise technology, they needed a system that could do more than index and retrieve. It had to understand different types of queries, navigate specialized knowledge bases, and return precise, cited answers.

With Lyzr, HFS built a multi-agent research assistant that could interpret intent, pull from the right sources, and generate responses with the kind of clarity and depth today’s users expect. Let’s see how.

Why traditional search couldn’t handle the questions

Research platforms often rely on full-text search or basic metadata filters to surface results. This works for exact-match queries, but breaks down when users expect the system to interpret intent, weigh relevance, and return synthesized answers. HFS began seeing the limitations of this approach across five common query patterns:

- Overview-style queries. Example: “What are the key research themes on GenAI in 2024?”

  These required scanning across multiple documents, summarizing key ideas, and organizing them contextually. Traditional search engines returned isolated files, with no ability to group related insights or highlight dominant themes.

- Deep-dive questions. Example: “What is HFS’s position on cloud-native modernization for large enterprises?”

  These needed reasoning over long-form documents, sometimes across multiple sections or papers. Full-text search lacked the semantic understanding to surface specific arguments or evolving viewpoints.

- Author-specific lookups. Example: “What is written about IT operations in the last 12 months?”

  Results depended entirely on consistent metadata. In practice, documents had missing or inconsistent author tags, leading to poor recall and irrelevant matches.

- Recency-sensitive queries. Example: “What’s the most recent point of view on automation trends?”

  The system couldn’t reliably sort based on publication date or weight newer content more heavily. Without dynamic sorting or freshness scoring, users often received older research first.

- Organizational or support queries. Example: “Who leads research for BFSI at HFS?”

  These were out of scope for document search engines, which returned keyword matches from unrelated reports.

From a system design perspective, the architecture assumed a single index could handle all content types and intents. But research consumption is far more varied.

Some queries need summarization. Others need document comparison. Some require structural metadata, like author timelines or type hierarchies. Keyword matching alone couldn’t parse those distinctions.

With over 4,000 documents spanning PDFs, HTML pages, and transcripts, the platform needed more than indexing. It needed intent classification, metadata enrichment, and multi-source reasoning, all of which required an architectural shift.

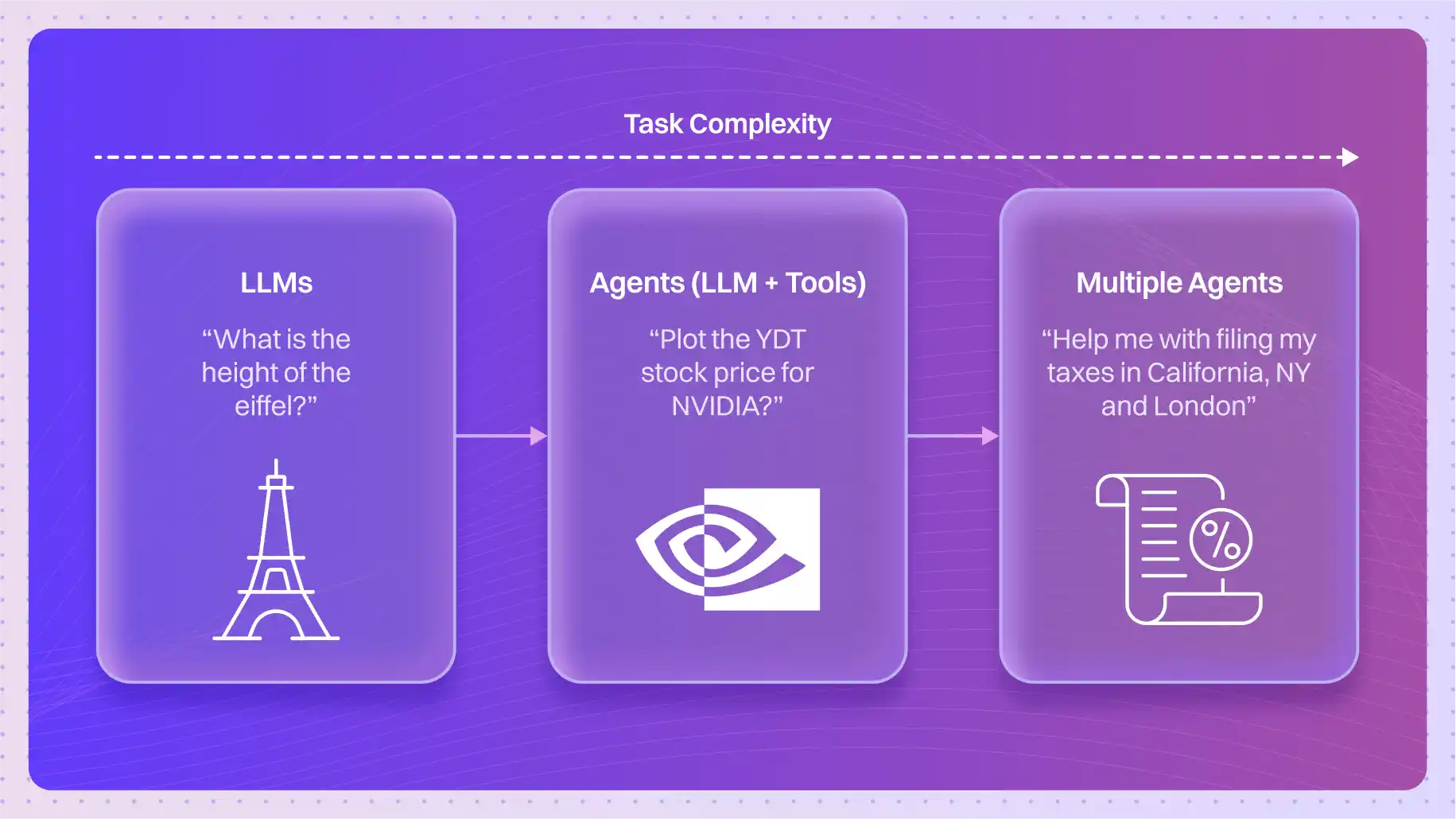

Building a system that knows where to look

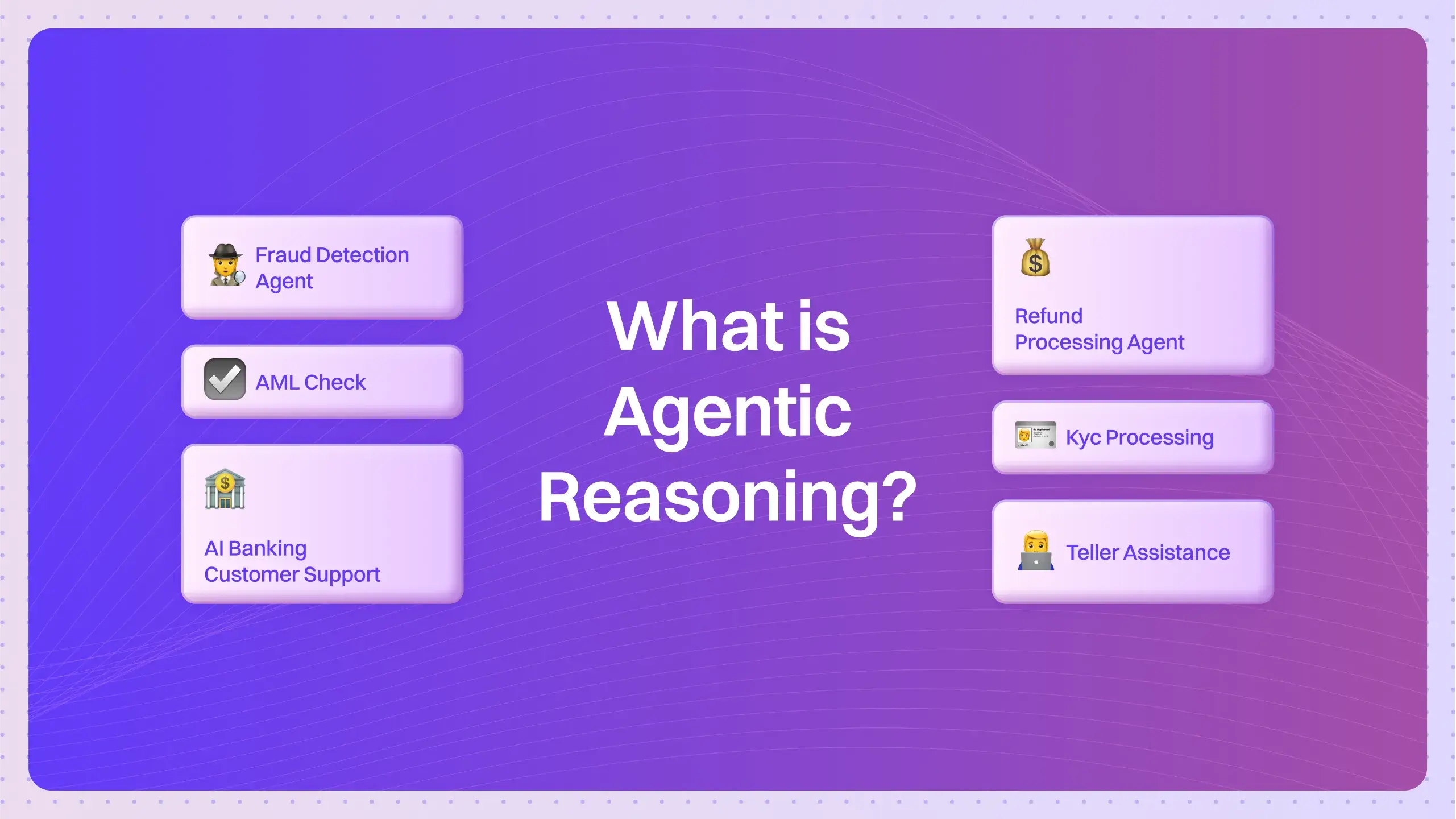

Instead of trying to force every query through one system, HFS and Lyzr took a different route. They broke the problem down. Different types of questions needed different types of logic, and different sources of truth. So the solution was to create a multi-agent architecture, where each agent handled a specific task, and each knowledge base served a distinct function.

At the center was a conversational agent that received the user’s question and classified its intent. Was this a request for a summary? A deep dive into a specific topic? Something author-specific? Or a question about the organization itself? Once the intent was clear, the query was routed to the right agent or knowledge base.

Here’s how the architecture was structured:

| Knowledge Base | Purpose | Examples of Queries Handled |
|---|---|---|
| Metadata & Summary KB | Quick filtering using document-level metadata and AI-generated summaries | “GenAI reports published in 2024”, “Recent automation themes” |
| Full Research KB | In-depth content analysis of full documents | “HFS’s stance on cloud-native modernization” |
| Author-Document Map KB | Handles author-specific questions using a custom mapping | “Reports written by Reetika on IT operations” |
| Offset KB | Non-research content: team info, support, org structure | “Who leads BFSI research at HFS?” |

Each knowledge base was optimized for its specific use case.

For example, the Metadata & Summary KB combined HFS’s existing metadata with AI-generated tags and abstracts, enabling fast filtering. The Full Research KB indexed entire documents to support deeper semantic retrieval. And the Author-Document Map KB solved a critical pain point: inaccurate or missing author metadata.

This modular setup ensured queries were handled based on what they needed—not what the system could tolerate. Instead of returning generic matches, agents could extract, rank, and prioritize content from the most relevant source.
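To make the routing idea concrete, here is a minimal sketch of mapping a classified intent to one of the knowledge bases in the table above. It is not the HFS or Lyzr implementation: the `Intent` enum, the `CUES` keyword lists, and the `classify_intent` and `route` functions are all hypothetical, and simple keyword matching stands in for whatever classifier the conversational agent actually uses.

```python
from enum import Enum


class Intent(Enum):
    OVERVIEW = "overview"
    DEEP_DIVE = "deep_dive"
    AUTHOR = "author"
    ORGANIZATION = "organization"


# Knowledge base names come from the table; the mapping itself is illustrative.
KB_FOR_INTENT = {
    Intent.OVERVIEW: "Metadata & Summary KB",
    Intent.DEEP_DIVE: "Full Research KB",
    Intent.AUTHOR: "Author-Document Map KB",
    Intent.ORGANIZATION: "Offset KB",
}

# Hypothetical keyword cues standing in for a real intent classifier.
CUES = [
    (Intent.ORGANIZATION, ("who leads", "org chart", "support team")),
    (Intent.AUTHOR, ("written by", "authored by", "reports by")),
    (Intent.DEEP_DIVE, ("position on", "stance on", "point of view on")),
]


def classify_intent(query: str) -> Intent:
    """Return the first intent whose cue appears in the query, else OVERVIEW."""
    text = query.lower()
    for intent, cues in CUES:
        if any(cue in text for cue in cues):
            return intent
    return Intent.OVERVIEW


def route(query: str) -> str:
    """Name the knowledge base that should handle the query."""
    return KB_FOR_INTENT[classify_intent(query)]


if __name__ == "__main__":
    for q in ("Who leads BFSI research at HFS?", "GenAI reports published in 2024"):
        print(q, "->", route(q))
```

Keeping the intent-to-KB mapping in a plain dictionary means a new knowledge base can be added without touching the classifier, which is one plausible reason to separate the two steps.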

The system also handled query routing conditionally. If a question started broad but needed depth, it could escalate the response path from the Metadata KB to the Full Research KB automatically. This tiered approach improved both response time and accuracy, delivering fast answers when possible, and deep analysis when needed.
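The tiered escalation described here could be sketched roughly as follows. The article does not say how the system decides that a broad question needs more depth, so the confidence score and the `min_confidence` threshold are assumptions, and `Answer`, `answer_with_escalation`, and the two lookup callables are hypothetical names.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Answer:
    text: str
    confidence: float  # 0.0 to 1.0; how this is scored is an assumption
    source_kb: str


def answer_with_escalation(
    query: str,
    fast_lookup: Callable[[str], Answer],
    deep_lookup: Callable[[str], Answer],
    min_confidence: float = 0.7,
) -> Answer:
    """Try the fast tier first; escalate to the deep tier if it is not confident."""
    first = fast_lookup(query)
    if first.confidence >= min_confidence:
        return first
    return deep_lookup(query)
```

In this sketch `fast_lookup` would wrap the Metadata & Summary KB and `deep_lookup` the Full Research KB, so the slower full-document path only runs when the quick path falls short.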

Solving for scale: what had to be fixed before it worked

The 4,000-plus documents came in various formats (PDFs, HTML pages, embedded transcripts), with no central metadata file, no uniform tagging, and no structured index to support intelligent retrieval. Some documents had incomplete author fields. Others lacked research type, publish dates, or even consistent titles. This made it nearly impossible to build a usable knowledge graph or support reliable filtering.

Manual metadata creation was ruled out early: it would have taken weeks of human effort, and that didn’t fit the timeline.

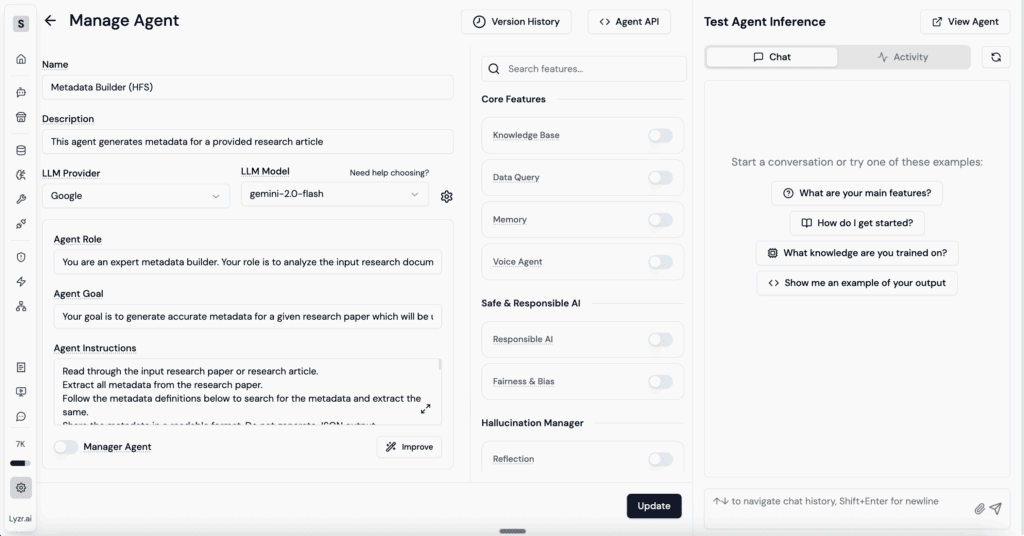

Instead, Lyzr built a Metadata Generator Agent. This agent could:

- Parse input content across formats (PDF, web pages, etc.)

- Auto-detect and tag fields across 29 different parameters, including author names, publish dates, research themes, document types, enterprise verticals, and more

- Fill missing fields using AI, drawing on the context of surrounding documents

- Feed the enriched metadata directly into the knowledge graph and vector store

The result: a complete, consistent metadata layer generated over a weekend, ready to support conditional routing, relevance scoring, and personalized retrieval.
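As a rough illustration of the gap-filling step, the sketch below detects empty metadata fields and fills them through a pluggable inference function. Everything here is an assumption for illustration: the field key names (only five of the 29 parameters are named in this article), the `enrich` and `missing_fields` helpers, and `toy_infer`, which is a trivial stand-in for the AI step rather than the Metadata Generator Agent's actual logic.

```python
from typing import Callable, Optional

# Five of the 29 parameters named in the article; the key names are hypothetical.
FIELDS = ("author", "publish_date", "research_themes", "document_type", "vertical")


def missing_fields(record: dict) -> list[str]:
    """List the fields that are absent or empty in a metadata record."""
    return [name for name in FIELDS if not record.get(name)]


def enrich(record: dict, text: str, infer: Callable[[str, str], Optional[str]]) -> dict:
    """Return a copy of record with gaps filled by infer(field, text).

    Existing values are never overwritten; fields that infer cannot
    resolve simply stay missing.
    """
    enriched = dict(record)
    for name in missing_fields(record):
        value = infer(name, text)
        if value:
            enriched[name] = value
    return enriched


def toy_infer(field: str, text: str) -> Optional[str]:
    """Placeholder for the AI step: reads 'Field: value' lines from the text."""
    prefix = field.replace("_", " ") + ":"
    for line in text.splitlines():
        if line.lower().startswith(prefix):
            return line[len(prefix):].strip()
    return None
```

Passing the inference step in as a function keeps the gap detection testable on its own, and lets the placeholder be swapped for a model call without changing the surrounding code.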

Under the hood: how we built it

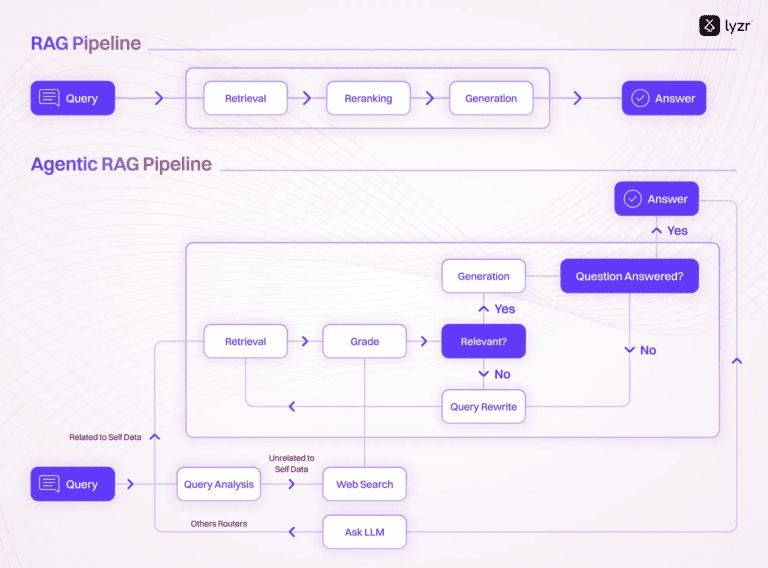

Once the architecture was in place, the focus shifted to how queries would actually flow through it.

The goal wasn’t just to retrieve documents. It was to interpret the question, understand the user’s intent, and return a complete, cited response. That meant designing a query pipeline that could adapt in real time.

The entire system is designed to respond like a reasoning engine, not just a retriever. And that starts the moment a query is submitted.

Each part of the architecture plays a distinct role in breaking down the question, pulling the right data, and composing a well-grounded answer.

Let’s walk through the flow:

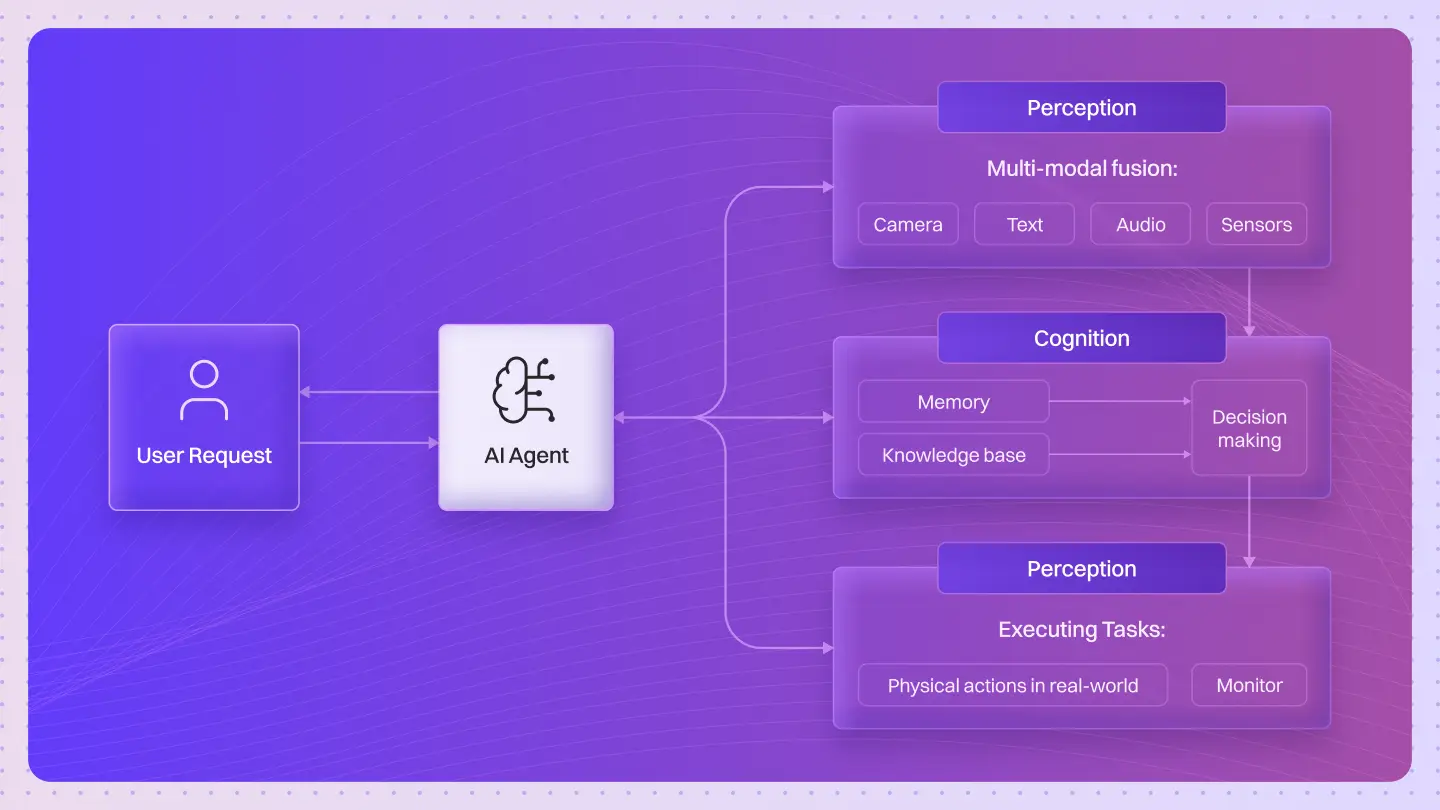

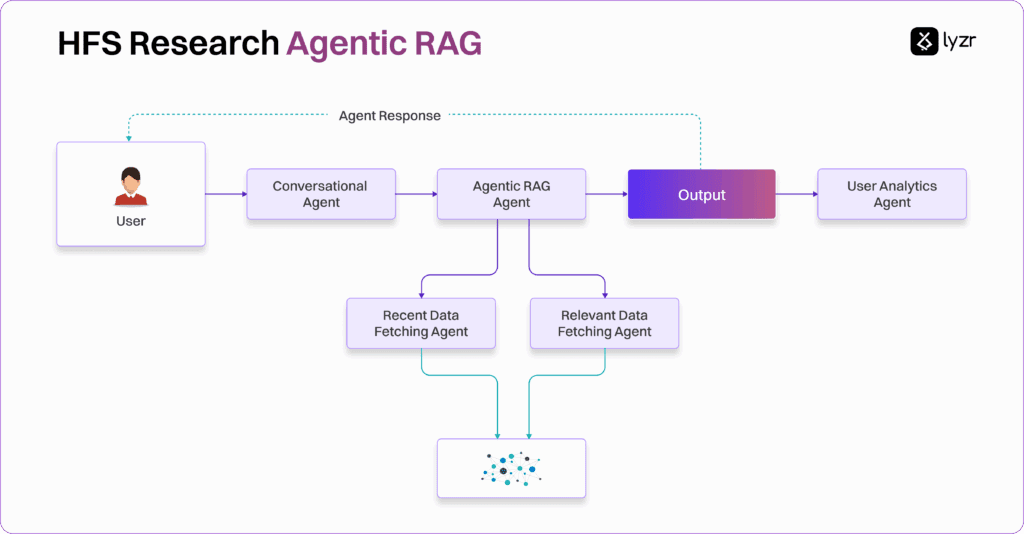

- Conversational Agent takes the input: The user interacts with a natural language interface, where the conversational agent receives the query. It parses the language, identifies the structure of the question, and forwards it to the core reasoning layer.

- Agentic RAG Agent takes over reasoning: The Agentic RAG Agent is the heart of the system. It determines how to handle the query, whether it needs recent information, specific authorship, deeper context, or metadata-based filtering. It also decides how to orchestrate the agents downstream.

- Data Fetching Agents run in parallel: The Agentic RAG Agent activates two specialized agents:

  - Recent Data Fetching Agent checks the graph database for the most recent documents relevant to the topic. This is key for time-sensitive insights.

  - Relevant Data Fetching Agent pulls semantically matched passages based on embeddings stored in the vector DB, ensuring context and meaning are captured, even when keywords differ.

- Fused retrieval from graph + vector layers: Both fetching agents pull from a shared data layer, one optimized for freshness, the other for semantic relevance. This layered retrieval ensures that the system doesn’t just return what’s most recent or what matches best; it balances both.

- Output Generation with citations: The Agentic RAG Agent synthesizes the results into a single, coherent answer. It adds citations, ranks passages, and ensures that the final response is concise and verifiable.

- User Analytics Agent logs behavior: Once the response is delivered, a separate User Analytics Agent captures usage patterns, preferences, and feedback to improve future query handling.
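The parallel fetch, fusion, and citation steps in this flow can be sketched as below. This is a simplified illustration under stated assumptions, not the production pipeline: the `Passage` type, the exponential-decay `freshness` score with a 180-day half-life, and the 0.4 recency weight are all invented for the example, and `fetch_recent` and `fetch_relevant` are hypothetical callables standing in for the graph database and vector DB lookups.

```python
from concurrent.futures import ThreadPoolExecutor
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class Passage:
    doc_id: str
    title: str
    published: date
    similarity: float  # semantic match score in [0, 1]


def freshness(published: date, today: date, half_life_days: float = 180.0) -> float:
    """Score that halves every half_life_days; 1.0 for a document published today."""
    age_days = max((today - published).days, 0)
    return 0.5 ** (age_days / half_life_days)


def fuse(recent, relevant, today, weight_recency=0.4):
    """Merge both result lists, drop duplicates, and rank by a blended score."""
    by_id = {}
    for p in list(recent) + list(relevant):
        # Keep the highest-similarity copy when both agents return the same document.
        if p.doc_id not in by_id or p.similarity > by_id[p.doc_id].similarity:
            by_id[p.doc_id] = p

    def score(p):
        recency = freshness(p.published, today)
        return weight_recency * recency + (1 - weight_recency) * p.similarity

    return sorted(by_id.values(), key=score, reverse=True)


def retrieve(query, fetch_recent, fetch_relevant, today):
    """Run both fetchers concurrently, then fuse their results."""
    with ThreadPoolExecutor(max_workers=2) as pool:
        recent_future = pool.submit(fetch_recent, query)
        relevant_future = pool.submit(fetch_relevant, query)
        return fuse(recent_future.result(), relevant_future.result(), today)


def cite(passages):
    """Format ranked passages as numbered citations."""
    return [
        f"[{i}] {p.title} ({p.published.isoformat()})"
        for i, p in enumerate(passages, start=1)
    ]
```

The blended score is one simple way to balance recency against semantic match; raising `weight_recency` favors newer documents, while lowering it favors closer matches regardless of age.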

The result is a system that doesn’t just fetch; it decides how to fetch. Whether the user wants a summary, deep research, or the latest view on a topic, the system adapts its approach dynamically, powered by agents with specialized responsibilities.

Rethinking research access, one agent at a time

What HFS built wasn’t just a smarter search engine. It was a complete shift in how enterprise knowledge gets discovered, interpreted, and delivered.

By breaking queries down by intent, routing them through specialized agents, and grounding responses in source content, the system became more than a research portal. It became a reasoning layer for the entire knowledge base.

This architecture is now live, answering complex research queries, surfacing fresh insight, and helping users get to decisions faster. And it’s built to scale, across formats, teams, and topics.

If your organization is sitting on a deep pool of content but struggling to make it discoverable, usable, or actionable, this approach is now real, and repeatable.

Want to see how it could work for you? Let’s set up a demo.
