Large Language Models (LLMs) have quickly become the backbone of enterprise AI systems. But generic models, trained on internet-scale data, often fall short when applied to domain-specific problems. Whether it’s responding to customer queries in fintech or automating policy checks in insurance, businesses require models that understand their context.

That’s where fine-tuning comes in. And when paired with agentic frameworks like Lyzr and the scalable infrastructure of AWS, it unlocks a new level of intelligence—specialized, automated, and secure.

This blog unpacks how to fine-tune Meta’s LLaMA models on AWS using Amazon SageMaker, and then put those models to work through Lyzr agents: autonomous, decision-making AI entities orchestrated via a developer-friendly framework.

Why Fine-Tune LLaMA Models on AWS?

Before diving into the how, let’s clarify the why.

1. Domain Adaptation

Pretrained LLMs like LLaMA are trained on broad corpora. They know “a little about a lot.” But they don’t know your business.

Fine-tuning adapts the model to your domain, be it legal document drafting, financial analytics, or healthcare reporting, by teaching it from examples specific to your data, tone, and processes.

2. Scalable Training Infrastructure

AWS provides purpose-built infrastructure through Amazon SageMaker, EC2, and EFS, enabling businesses to fine-tune even massive models (e.g., LLaMA 70B) with distributed training across GPUs and CPUs.

3. Built-In Security and Compliance

Data-sensitive industries require isolated environments. With AWS, you can run all workloads inside your Virtual Private Cloud (VPC), enforce IAM-based access control, and support compliance with frameworks and regulations such as HIPAA, SOC 2, and GDPR.

Understanding the LLaMA Fine-Tuning Process on AWS

Fine-tuning LLaMA models using SageMaker is more than just a few API calls—it’s a structured pipeline that includes data prep, environment configuration, training optimization, and secure deployment.

Prerequisites

Before starting, ensure the following:

- An active AWS account

- Permissions to use SageMaker, S3, and optional services like CloudWatch

- IAM roles configured for model training and access

- A prepared dataset in an instruction-tuning format (e.g., question-answer pairs, prompts and completions)

Step 1: Choose the Right LLaMA Model

Amazon SageMaker JumpStart supports different versions of LLaMA:

| Model Name | Parameters | Ideal Use Cases |
|---|---|---|
| LLaMA 3 – 8B | 8 Billion | QA bots, knowledge agents, light workflows |
| LLaMA 3 – 70B | 70 Billion | Research agents, deep logic automation |

Choose based on your workload and cost-performance ratio.
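As a rough aid for weighing workload against cost, the sketch below estimates the memory needed just to hold each model's weights at a given precision. The helper name `weight_memory_gb` and the bytes-per-parameter table are illustrative assumptions, not part of any AWS tooling. Real usage will be higher once activations, the KV cache, and framework overhead are counted.

```python
# Rough estimate of the memory needed to hold model weights only.
# Assumptions: 1 GB = 1e9 bytes; activations, KV cache, and overhead are ignored.
BYTES_PER_PARAM = {"fp32": 4, "bf16": 2, "int8": 1}


def weight_memory_gb(num_params: float, precision: str = "bf16") -> float:
    """Return the approximate size of the weights in gigabytes."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


if __name__ == "__main__":
    for name, params in [("LLaMA 3 8B", 8e9), ("LLaMA 3 70B", 70e9)]:
        sizes = {p: weight_memory_gb(params, p) for p in BYTES_PER_PARAM}
        print(name, sizes)
```

Comparing these figures with the GPU memory of a candidate instance type gives a quick first check on whether a model is likely to fit before you commit to a training or hosting budget.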

Step 2: Prepare Your Dataset

Use an instruction-tuning data format such as:

- JSON:

    {"prompt": "Summarize this article", "completion": "The article discusses..."}

- CSV / JSONL for parallel or streaming jobs

Ensure data quality:

- Remove samples whose completions are factually wrong or unverifiable, since the model learns to reproduce them

- Cover edge cases and domain-specific phrasing

- Maintain balanced class distribution for classification tasks
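The checks above can be partly automated. Here is a minimal sketch, assuming the `prompt`/`completion` field names from the JSON example, that drops incomplete records and exact duplicates and serializes the result as JSONL. The function names are hypothetical, and the exact fields a given JumpStart model expects may differ, so check the model's documentation before training.

```python
import json


def validate_samples(samples):
    """Keep samples with a non-empty prompt and completion; drop exact duplicates."""
    seen = set()
    clean = []
    for sample in samples:
        prompt = str(sample.get("prompt") or "").strip()
        completion = str(sample.get("completion") or "").strip()
        if not prompt or not completion:
            continue  # skip incomplete records
        key = (prompt, completion)
        if key in seen:
            continue  # skip exact duplicates
        seen.add(key)
        clean.append({"prompt": prompt, "completion": completion})
    return clean


def to_jsonl(samples):
    """Serialize samples as JSONL text: one JSON object per line."""
    return "".join(json.dumps(s, ensure_ascii=False) + "\n" for s in samples)


def write_jsonl(samples, path):
    """Write the JSONL text to a local file, ready to upload to S3."""
    with open(path, "w", encoding="utf-8") as f:
        f.write(to_jsonl(samples))
```

A typical call would be `write_jsonl(validate_samples(raw_records), "train.jsonl")`, followed by uploading the file to the S3 path used for training. Automated checks like these catch structural problems only; factual accuracy and edge-case coverage still need human review.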

Step 3: Launch the Fine-Tuning Job

a. Using SageMaker Studio UI:

- Go to JumpStart

- Select your LLaMA model (e.g., meta-textgeneration-llama-3-8b)

- Provide the S3 path to your training data

- Configure compute instance (e.g., ml.g5.12xlarge for 8B)

- Submit training job

b. Using Python SDK:

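    from sagemaker.jumpstart.estimator import JumpStartEstimator

    # Select the model and accept its end-user license agreement (EULA).
    estimator = JumpStartEstimator(
        model_id="meta-textgeneration-llama-3-8b",
        environment={"accept_eula": "true"},
    )

    # Enable instruction tuning and train for five epochs.
    estimator.set_hyperparameters(
        instruction_tuned="True",
        epoch="5",
    )

    # The "training" channel points at the S3 location of the dataset.
    estimator.fit({"training": "s3://your-bucket/path-to-data"})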

Step 4: Optimize the Training Process

Optimization techniques ensure cost-effective training while preserving performance.

1. Low-Rank Adaptation (LoRA)

Freezes the pretrained weights and trains small low-rank matrices added to selected layers, typically the attention projections. This cuts the number of trainable parameters, saving compute and memory.
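To see where the savings come from, consider the arithmetic. For a weight matrix with `d_in` inputs and `d_out` outputs, LoRA trains two small matrices of rank `r` instead of the full matrix. The sketch below compares the two counts; the 4096 x 4096 layer size and rank 8 are illustrative values, and the function names are hypothetical.

```python
def full_param_count(d_in: int, d_out: int) -> int:
    """Parameters updated when the whole weight matrix is trained."""
    return d_in * d_out


def lora_param_count(d_in: int, d_out: int, rank: int) -> int:
    """Parameters in the two low-rank factors: (d_out x rank) and (rank x d_in)."""
    return rank * (d_in + d_out)


if __name__ == "__main__":
    d_in, d_out, rank = 4096, 4096, 8  # illustrative sizes
    full = full_param_count(d_in, d_out)
    lora = lora_param_count(d_in, d_out, rank)
    print(f"full: {full:,}  lora: {lora:,}  ratio: {lora / full:.4%}")
```

With these example values, the low-rank factors hold 65,536 trainable parameters against 16,777,216 for the full matrix, which is under half a percent.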

2. Int8 Quantization

Stores weights in 8-bit precision instead of 16 or 32 bits. This lowers memory usage, usually with only a small loss in accuracy, and is especially useful for inference-time efficiency.
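To make the idea concrete, here is a minimal sketch of symmetric (absmax) 8-bit quantization in plain Python. It illustrates the general technique only and is not how SageMaker or any specific library implements it; the function names are hypothetical.

```python
def quantize_int8(values):
    """Symmetric absmax quantization: map floats to integers in [-127, 127]."""
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(v / scale))) for v in values]
    return quantized, scale


def dequantize_int8(quantized, scale):
    """Recover approximate float values from the 8-bit integers."""
    return [q * scale for q in quantized]


if __name__ == "__main__":
    weights = [0.5, -1.0, 0.25, 0.0]  # toy values, not real model weights
    q, scale = quantize_int8(weights)
    restored = dequantize_int8(q, scale)
    worst = max(abs(a - b) for a, b in zip(weights, restored))
    print("quantized:", q)
    print("max round-trip error:", worst)
```

Each value is stored as one byte plus a shared scale factor, and the round-trip error per value is bounded by about half the scale. That bound is why accuracy usually degrades only slightly, though the effect on a real model should be measured on your own evaluation set.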

3. Fully Sharded Data Parallelism (FSDP)

Shards model parameters, gradients, and optimizer states across GPUs, so no single device has to hold the full training state. Enables training of massive models like LLaMA 70B.
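A back-of-the-envelope calculation shows why sharding matters at this scale. The sketch below assumes full fine-tuning with mixed precision and an Adam-style optimizer, at roughly 16 bytes of training state per parameter (2 for weights, 2 for gradients, 12 for optimizer state and a full-precision weight copy). That figure is a common rule of thumb rather than a measured value, activations are ignored, and the function names are hypothetical.

```python
# Assumption: ~16 bytes of training state per parameter
# (bf16 weights + bf16 gradients + fp32 optimizer state and weight copy).
DEFAULT_BYTES_PER_PARAM = 16


def training_state_gb(num_params: float, bytes_per_param: int = DEFAULT_BYTES_PER_PARAM) -> float:
    """Total training state in gigabytes (1 GB = 1e9 bytes), excluding activations."""
    return num_params * bytes_per_param / 1e9


def per_gpu_state_gb(num_params: float, num_gpus: int,
                     bytes_per_param: int = DEFAULT_BYTES_PER_PARAM) -> float:
    """Training state held by each GPU when everything is sharded evenly."""
    return training_state_gb(num_params, bytes_per_param) / num_gpus


if __name__ == "__main__":
    for gpus in (1, 8, 64):
        print(f"{gpus:>2} GPUs: {per_gpu_state_gb(70e9, gpus):.1f} GB per GPU")
```

Under these assumptions, a 70B model carries about 1,120 GB of training state, which no single GPU can hold. Sharding it evenly across 64 GPUs brings the per-device share down to 17.5 GB before activations.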

Step 5: Deploy the Model

After training, deploy the fine-tuned model to a secure SageMaker endpoint:

aws sagemaker create-endpoint-config ...

aws sagemaker create-endpoint ...

Or use the SageMaker console to manage versions, monitor logs, and enable autoscaling.
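Once the endpoint is live, you can call it from application code. The sketch below uses the `invoke_endpoint` call of boto3's `sagemaker-runtime` client. The request schema (`inputs` plus `parameters`), the generation settings, and the helper names are assumptions for illustration; the exact payload and response shape depend on the serving container, so check your model's documentation.

```python
import json


def build_payload(prompt, max_new_tokens=256, temperature=0.6, top_p=0.9):
    """Build a text-generation request body (assumed schema)."""
    return {
        "inputs": prompt,
        "parameters": {
            "max_new_tokens": max_new_tokens,
            "temperature": temperature,
            "top_p": top_p,
        },
    }


def invoke(endpoint_name, prompt, client=None):
    """Send a prompt to a SageMaker endpoint and return the parsed JSON response."""
    if client is None:
        # Imported lazily so the helper can be exercised with a stub client.
        import boto3

        client = boto3.client("sagemaker-runtime")
    response = client.invoke_endpoint(
        EndpointName=endpoint_name,
        ContentType="application/json",
        Body=json.dumps(build_payload(prompt)),
    )
    return json.loads(response["Body"].read())


# Example call (requires AWS credentials; "my-llama-endpoint" is a placeholder):
# result = invoke("my-llama-endpoint", "Summarize the key exclusions in this policy.")
```

Passing the client as an argument keeps the function easy to test without AWS access and lets you reuse a single client across many requests.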

Lyzr + LLaMA + AWS

Once your fine-tuned model is ready, the next step is orchestration. That’s where the Lyzr Agent Framework comes in.

What is Lyzr?

Lyzr is a full-stack agent platform that allows developers to build, run, and manage intelligent agents that:

- Perform multi-step tasks autonomously

- Integrate APIs, databases, and internal tools

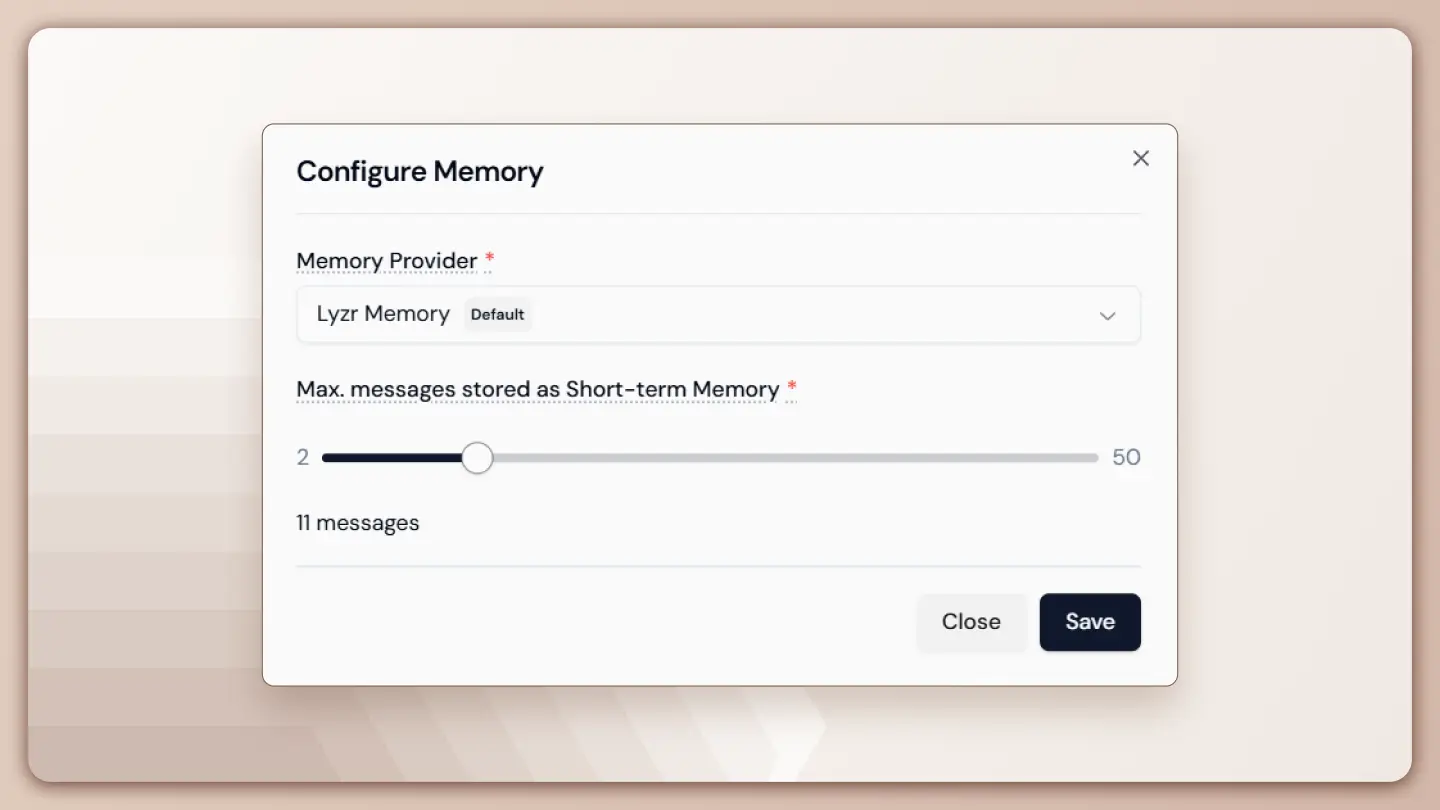

- Maintain memory and follow long-term context

- Operate within enterprise-safe environments (VPC, IAM, etc.)

How Lyzr Integrates with AWS and LLaMA

| Component | Purpose |
|---|---|
| SageMaker | Hosts fine-tuned LLaMA model |
| Lyzr Agent Framework | Orchestrates AI agents with LLaMA as the backbone |
| AWS Services | Provides infrastructure, storage, monitoring, etc. |

Two integration paths:

- Use SageMaker endpoints with Lyzr’s Python SDK

- Use Amazon Bedrock for serverless orchestration with Lyzr (coming soon)

Step-by-Step: Deploy Lyzr Agents with Your Fine-Tuned Model

| Step | Action |

|---|---|

| Model Preparation | Fine-tune LLaMA and deploy via SageMaker |

| Endpoint Registration | Add model endpoint in Lyzr Studio or via SDK |

| Agent Definition | Assign roles, objectives, instructions |

| Tool Integration | Connect APIs, databases, and external systems |

| Workflow Setup | Define task triggers and multi-agent flows |

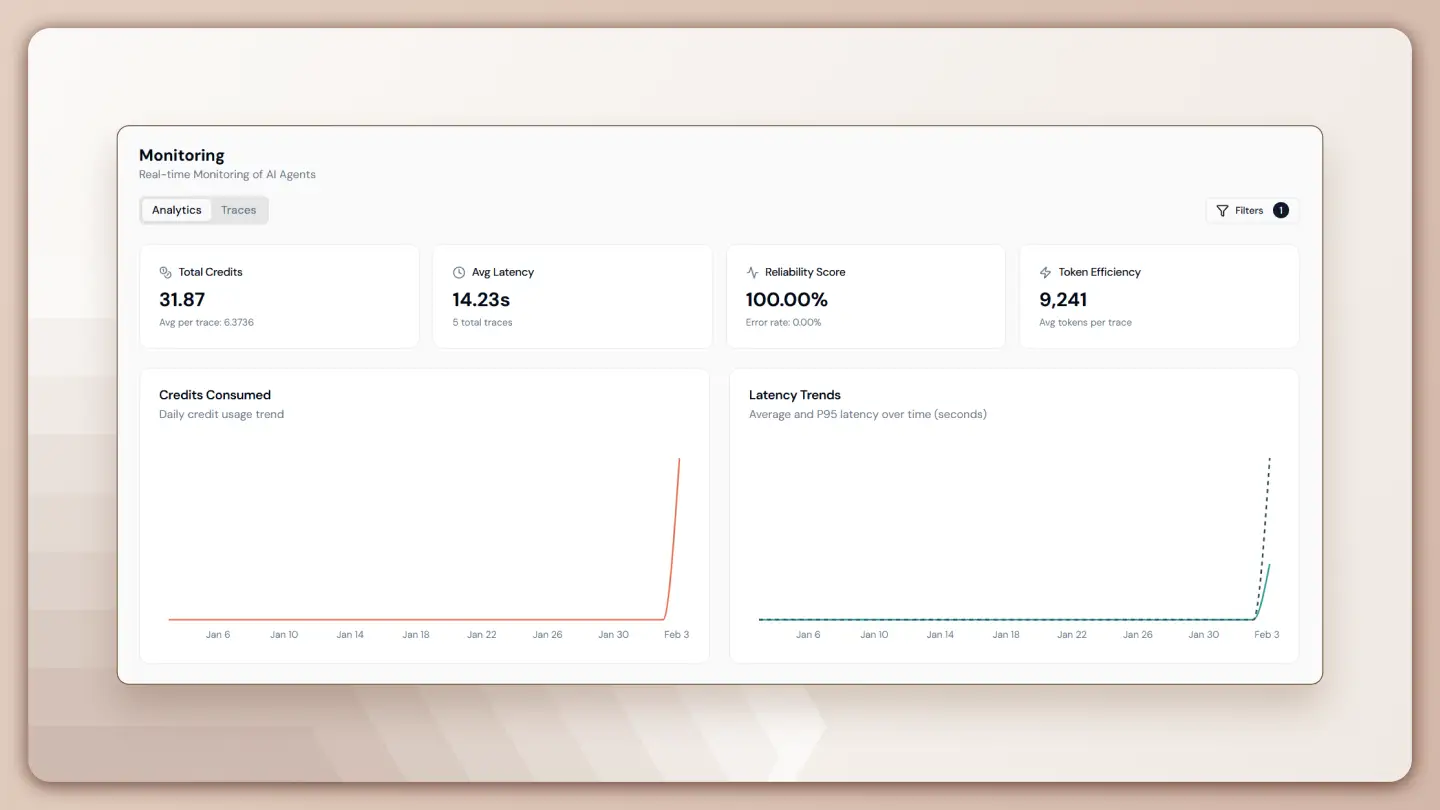

| Monitoring & Scaling | Use AWS CloudWatch + Lyzr dashboards for metrics |

Sample agent SDK code:

from lyzr import Agent

support_agent = Agent(

name="customer_support_agent",

model_endpoint="https://sagemaker-endpoint-url",

instructions="Use LLaMA to answer customer queries about insurance policies."

)

Real-World Use Cases

1. Customer Support Automation

A LLaMA model fine-tuned on your product documents and CRM conversations allows Lyzr agents to:

- Answer policy-related questions

- Escalate edge cases to humans

- Automatically retrieve and summarize customer history

2. Sales Enablement

Sales agents can:

- Generate outbound emails using fine-tuned tone

- Qualify leads based on CRM data

- Maintain follow-up cadences with minimal manual intervention

3. Knowledge Work & Research

With retrieval-augmented generation (RAG) capabilities, Lyzr agents using LLaMA can:

- Parse PDFs, web pages, or reports

- Generate summaries and extract insights

- Help internal teams make informed decisions

Key Tips for Success

1. Data Quality Matters

- Use clean, diverse, and context-rich datasets.

- Reduce overfitting by including both general and edge cases.

- Format samples for instruction tuning to teach behavior.

2. Optimize for Budget

- Start with the 8B model and scale to 70B only if necessary.

- Use LoRA, Int8, and FSDP to manage costs.

3. Plan Security from Day One

- Always deploy in VPC.

- Set IAM roles per service (SageMaker, Lambda, Redshift).

- Use Lyzr’s compliance logging and audit tracking for enterprise readiness.

Conclusion: Intelligent Agents, Fine-Tuned for Action

Fine-tuning LLaMA models on AWS allows businesses to build highly specialized language capabilities. When paired with Lyzr’s agent framework, these models evolve beyond simple inference—they become part of an intelligent workflow engine.

Whether you’re handling tickets in a support desk, summarizing legal contracts, or automating lead generation, the LLaMA + Lyzr + AWS stack offers flexibility, security, and operational depth to do it at scale.

Now’s the time to move beyond generic AI. With Lyzr and AWS, build agents that know your world and act in it.
