External APIs look fast, until you have to explain where your data went.

That’s the wall many enterprises are hitting. The model works and the use case is proven, but legal, compliance, and infosec teams are still in the room, asking hard questions about control, cost, and accountability.

GPT-4 might be great at writing, but it’s not solving procurement friction, egress bills, or the headache of non-native integrations.

That’s why enterprises are moving to Lyzr on AWS Bedrock. It’s not just a technical shift; it’s a structural one. Inference stays inside your VPC. Costs stay predictable. And control stays exactly where it should be: with the enterprise.

The Real Enterprise Friction Points

Most AI providers solve for developer excitement, not enterprise realities. The issues creep in after deployment:

| Pain Point | OpenAI Enterprise Challenge | Lyzr + AWS Bedrock Advantage |
| --- | --- | --- |
| Data Egress Fees | Unpredictable costs for data transfer outside the cloud | Zero egress fees: inference happens within the AWS VPC |
| Compliance Overhead | Data leaves your infrastructure; custom controls required | AWS-native controls: IAM, CloudTrail, KMS |
| Integration Complexity | External APIs mean custom integration work and team retraining | GPT-compatible interface; reuse existing code |
| Service & Support | Fragmented support outside the cloud ecosystem | Full AWS-native integration, observable and auditable |
| Procurement | New vendor contracts, security reviews, billing friction | Covered under the AWS Enterprise Discount Program (EDP) |
| Monitoring & Governance | Limited transparency into external agent operations | Native observability via CloudWatch, GuardDuty, and CloudTrail |

OpenAI is great for experimentation. But Lyzr is built for production-grade AI inside the architecture you already trust.

AWS-Native Advantage: Inference Inside the VPC

Lyzr leverages AWS Bedrock to run AI agents directly inside your environment. That means:

- Inference never leaves your network

- No custom security review required

- Data stays private, auditable, and governed

Lyzr on AWS: How It Works

✅ Data stays within your cloud

✅ IAM, VPC, and CloudTrail policies apply

✅ Easily auditable for internal compliance

✅ Compatible with AWS-native observability tooling
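To make "easily auditable" concrete, here is a minimal Python sketch of the kind of check a compliance team might run: it reads the JSON body of one CloudTrail log file (shape `{"Records": [...]}`) and counts Bedrock model calls per IAM principal. It assumes Bedrock runtime calls are recorded with `eventSource` set to `bedrock.amazonaws.com` and event names such as `InvokeModel` or `Converse`; verify those values against your own trail. The function name is hypothetical.

```python
import json
from collections import Counter

# Assumed CloudTrail event names for Bedrock inference calls; confirm in your trail.
BEDROCK_INVOKE_EVENTS = {
    "InvokeModel",
    "InvokeModelWithResponseStream",
    "Converse",
    "ConverseStream",
}


def summarize_bedrock_calls(log_text: str) -> Counter:
    """Count Bedrock invocations per (principal ARN, event name) in one log file."""
    records = json.loads(log_text).get("Records", [])
    counts: Counter = Counter()
    for record in records:
        # Assumption: Bedrock API calls are logged under this event source.
        if record.get("eventSource") != "bedrock.amazonaws.com":
            continue
        # Skip control-plane calls (e.g. listing models); keep inference only.
        if record.get("eventName") not in BEDROCK_INVOKE_EVENTS:
            continue
        # userIdentity.arn identifies who made the call; fall back if absent.
        principal = record.get("userIdentity", {}).get("arn", "unknown")
        counts[(principal, record["eventName"])] += 1
    return counts
```

In practice the log text would come from the trail's S3 bucket (CloudTrail delivers gzip-compressed JSON files), so decompress before calling the function.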

Real-World Outcomes: Proof That It Works

Case 1: Fortune 500 Financial Institution

- Initial Setup: OpenAI GPT-4 agents for client onboarding and report summarization

- Problem: Unpredictable egress fees, slow procurement

- Migration: Moved to Lyzr + AWS Bedrock using Claude + Titan

- Result:

  - 45% reduction in total costs in the first year

  - Zero procurement friction: rolled into existing AWS billing

  - Better auditability for regulatory compliance

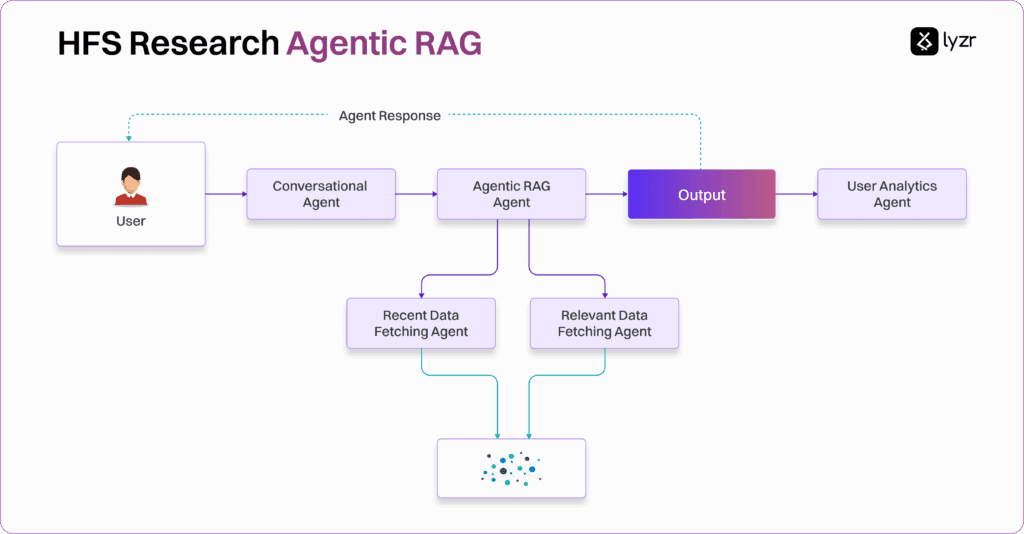

Case 2: HFS Research

- Use Case: Ingest and analyze 4,000+ PDFs with metadata enrichment

- OpenAI Limitation: API rate limits, latency, external processing

- Lyzr Setup: Agents running inside VPC with full access to S3, RDS, and internal auth

- Result:

  - Completed at 2x speed

  - Entire pipeline observable via CloudWatch

  - Compliance team approved in one review cycle

Understanding the Total Cost of Ownership

The sticker price of OpenAI API calls is just the beginning. Enterprises incur real, compounding costs when adopting AI across departments.

| Cost Component | OpenAI Enterprise | Lyzr + AWS Bedrock |
| --- | --- | --- |
| Inference/API Fees | High per-call fees with unpredictable scale | Standard AWS pricing with EDP coverage |
| Data Transfer/Egress | Additional cost when data leaves the VPC | No egress: data stays internal |
| Compliance & Governance | Extra tooling and approvals required | Uses existing AWS controls |
| Integration Time | Custom work, team retraining | Minimal: GPT-compatible API |
| Security & Monitoring | External model behavior is hard to monitor | API activity logged in CloudTrail; access governed by IAM and KMS |
| Vendor Management | New contract, billing, procurement | Included in the AWS procurement framework |
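The table lists cost categories rather than figures. As a minimal sketch of how a team might turn it into a first-year comparison, the Python below totals one value per table row and computes the percentage reduction. The class, function, and every number are hypothetical placeholders, not data from the case studies; substitute your own estimates.

```python
from dataclasses import dataclass, astuple


@dataclass
class AnnualCosts:
    """First-year cost per TCO table row, in USD (hypothetical structure)."""
    inference: float
    egress: float
    compliance: float
    integration: float
    monitoring: float
    vendor_management: float

    def total(self) -> float:
        # Sum every component; each field corresponds to one table row.
        return sum(astuple(self))


def percent_reduction(before: AnnualCosts, after: AnnualCosts) -> float:
    """Relative first-year saving of `after` versus `before`, in percent."""
    baseline = before.total()
    if baseline == 0:
        raise ValueError("baseline total must be non-zero")
    return 100.0 * (baseline - after.total()) / baseline


# Placeholder inputs for illustration only.
current = AnnualCosts(inference=100_000, egress=10_000, compliance=20_000,
                      integration=30_000, monitoring=10_000, vendor_management=10_000)
proposed = AnnualCosts(inference=90_000, egress=0, compliance=5_000,
                       integration=5_000, monitoring=5_000, vendor_management=0)

print(f"{percent_reduction(current, proposed):.1f}% lower first-year TCO")
```

Keeping each row as its own field makes it easy to see which category (for example, egress or vendor management) drives the difference, rather than comparing only inference prices.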

Drop-In Migration: GPT-Compatible by Design

One of the biggest concerns with switching from OpenAI: “Will we have to rewrite everything?”

Short answer: No.

Lyzr maintains GPT-compatible APIs, meaning:

- Existing prompts and workflows continue working

- Client SDKs are backward-compatible

- Minimal config changes required
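"GPT-compatible" means callers can keep sending OpenAI-style requests. The source does not show how Lyzr implements this, so the following is only a rough Python sketch of what such a translation layer could look like: it maps an OpenAI Chat Completions-style payload onto the keyword arguments of Amazon Bedrock's Converse API (`modelId`, `messages`, `system`, `inferenceConfig`). The function name and the `MODEL_MAP` entry are hypothetical, and it assumes each message's `content` is a plain string.

```python
from typing import Any

# Hypothetical mapping from model names callers already use to Bedrock model
# IDs; which IDs you can call depends on your account and region.
MODEL_MAP = {
    "gpt-4": "anthropic.claude-3-5-sonnet-20240620-v1:0",
}

# OpenAI sampling parameters and their Converse inferenceConfig names.
PARAM_MAP = (("max_tokens", "maxTokens"), ("temperature", "temperature"), ("top_p", "topP"))


def openai_to_converse(request: dict[str, Any]) -> dict[str, Any]:
    """Translate an OpenAI-style chat request into Converse keyword arguments."""
    system_blocks: list[dict[str, str]] = []
    messages: list[dict[str, Any]] = []
    for msg in request["messages"]:
        role, text = msg["role"], msg["content"]  # assumes string content
        if role == "system":
            # Converse takes system prompts as a separate top-level field.
            system_blocks.append({"text": text})
            continue
        if role not in ("user", "assistant"):
            raise ValueError(f"unsupported role: {role}")
        if messages and messages[-1]["role"] == role:
            # Merge consecutive same-role turns so user/assistant alternate.
            messages[-1]["content"].append({"text": text})
        else:
            messages.append({"role": role, "content": [{"text": text}]})

    kwargs: dict[str, Any] = {
        # Unmapped names pass through, so callers may also send Bedrock IDs.
        "modelId": MODEL_MAP.get(request["model"], request["model"]),
        "messages": messages,
    }
    if system_blocks:
        kwargs["system"] = system_blocks
    config = {dst: request[src] for src, dst in PARAM_MAP if src in request}
    if config:
        kwargs["inferenceConfig"] = config
    return kwargs
```

With boto3, the result could be passed straight through as `boto3.client("bedrock-runtime").converse(**kwargs)`; existing prompts stay unchanged, and only the transport layer differs.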

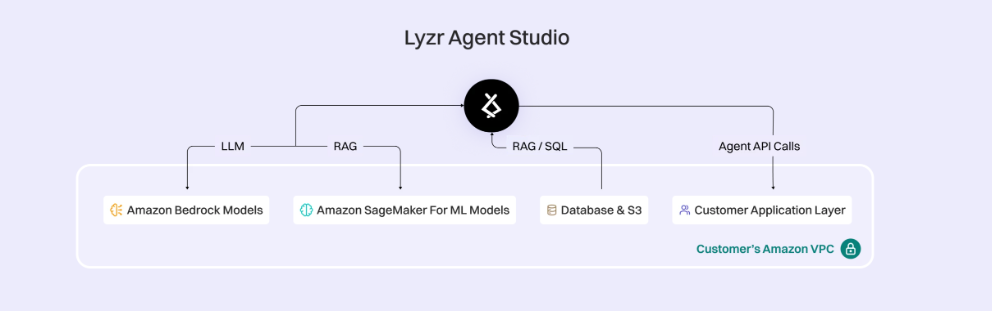

Inside the Architecture: A Fully Private AI Agent System

Let’s take a closer look at how a typical Lyzr agent operates within an enterprise AWS setup:

The Future: Voice Agents With Nova Sonic

Launching in H1 2025, Nova Sonic brings the next evolution: voice-first agents that operate entirely inside your AWS infrastructure.

No audio data leaves your environment. Agents can:

- Accept voice input from apps or call centers

- Process it using in-VPC transcription and models

- Respond with real-time generated speech

Use Case Example: Voice Agent for Compliance Teams

- The agent listens to an incoming customer query via the internal phone system

- Transcribes the audio in real time using a Bedrock-hosted Whisper variant

- Runs a prompt chain locally using a Lyzr agent

- Responds with voice output, generated and delivered entirely within the VPC

This will enable voice interfaces in sectors like banking, insurance, and healthcare, without giving up data control.

Conclusion: It’s Not About the Hype, It’s About the Stack

OpenAI opened the door to what’s possible. But Lyzr + AWS Bedrock is how enterprises walk through it, safely and at scale.

Why Enterprises Choose Lyzr + Bedrock:

- ✅ 45% lower cost in the first year, as in Case 1

- ✅ No procurement friction

- ✅ Full data sovereignty

- ✅ Observability, IAM, and native compliance

- ✅ Voice agents coming next with Nova Sonic

OpenAI is optimized for public-scale experimentation. Lyzr + Bedrock is optimized for enterprise-scale execution.

Choose the stack that works for your systems, your policies, and your future.
