Performance is pointless without trust.

Trustworthy AI Agents are artificial intelligence systems designed to be reliable, safe, transparent, fair, and aligned with human values, ensuring they can be dependably deployed in real-world applications with minimal risk of harmful outcomes.

Think of a certified airplane pilot.

They don’t just know how to fly.

They undergo rigorous training and continuous monitoring, and they adhere to strict safety protocols.

They communicate clearly. They prioritize safety above all else.

Trustworthy AI Agents are built with the same philosophy.

They have built-in safeguards, transparency mechanisms, and ethical guidelines to ensure they operate reliably and safely, even when things get weird.

This isn’t an optional add-on.

It’s the very foundation required to move AI from the lab into our daily lives, from our hospitals to our highways.

What are Trustworthy AI Agents?

They are AI systems engineered with a conscience.

A Trustworthy AI Agent isn’t just about getting the right answer.

It’s about getting the right answer in the right way, for the right reasons.

This breaks down into several key pillars:

- Reliable & Robust: The agent performs consistently and predictably, even when faced with unexpected or adversarial inputs.

- Safe: It operates without causing unintended harm to people, property, or itself.

- Transparent: Its decision-making process is understandable to humans. We can look “under the hood” to see why it did what it did.

- Fair: The agent makes decisions without reinforcing harmful biases or discriminating against certain groups.

- Accountable: There are clear mechanisms to determine who is responsible when the agent makes a mistake.

- Value-Aligned: Its goals and behaviors are aligned with human values and ethical principles.

An agent that is only accurate but fails on these other points is not trustworthy. It’s a liability.

How do Trustworthy AI Agents differ from traditional AI systems?

The difference is in the definition of “good.”

Traditional AI Systems:

- Their primary goal is performance.

- Success is measured by metrics like accuracy, speed, and efficiency.

- The focus is on optimizing for a single objective.

Trustworthy AI Agents:

- They prioritize a holistic set of principles, including reliability, safety, fairness, and transparency.

- Performance is still important, but it’s balanced against these other critical factors.

A performance-optimized agent might be 99% accurate in diagnosing a disease from scans.

But if that 1% failure happens in an unpredictable, biased, or catastrophic way, it’s not trustworthy.

A trustworthy agent might be slightly less accurate, say 98%, but it incorporates robust safeguards.

It might flag ambiguous cases for human review or provide a clear explanation for its diagnosis.

This ensures its behavior is safer and more predictable across all scenarios, not just the easy ones.
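One way to picture "flag ambiguous cases for human review" is a simple confidence gate. The sketch below is illustrative only: the `Decision` class, the `decide` function, and the 0.9 threshold are assumptions made for this example, not part of any specific product or library.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass
class Decision:
    label: str         # predicted label, or "needs_review" when deferred
    confidence: float  # probability the model gave its top label
    explanation: str   # short reason a human can read


def decide(probabilities: Dict[str, float], review_threshold: float = 0.9) -> Decision:
    """Return the top label, or defer to a human when confidence is low."""
    top_label, top_prob = max(probabilities.items(), key=lambda item: item[1])
    if top_prob < review_threshold:
        reason = f"top label '{top_label}' at {top_prob:.2f} is below {review_threshold:.2f}"
        return Decision("needs_review", top_prob, reason)
    return Decision(top_label, top_prob, f"'{top_label}' at {top_prob:.2f} meets the threshold")


# Hypothetical model outputs for two scans.
clear_case = decide({"benign": 0.97, "malignant": 0.03})
ambiguous_case = decide({"benign": 0.55, "malignant": 0.45})
print(clear_case.label, "|", ambiguous_case.label)
```

The clear case is labeled automatically, while the ambiguous one is routed to a person along with the reason it was deferred. In practice the threshold would be tuned per use case, and raw model probabilities may need calibration before they can be trusted this way.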

What are the core principles of Trustworthy AI?

It’s a framework for responsible innovation.

Many industry and government bodies have converged on a similar set of core principles that define what makes an AI system trustworthy. While the wording may vary, they consistently include:

- Reliability and Robustness: It performs consistently and predictably, even under stress.

- Safety: It has built-in guardrails to prevent physical, psychological, or financial harm.

- Fairness and Non-Discrimination: It treats all individuals and groups equitably.

- Transparency and Explainability: We can understand its reasoning.

- Accountability and Governance: Clear lines of responsibility for the AI’s actions are established.

- Privacy and Data Governance: It respects user privacy and handles data responsibly.

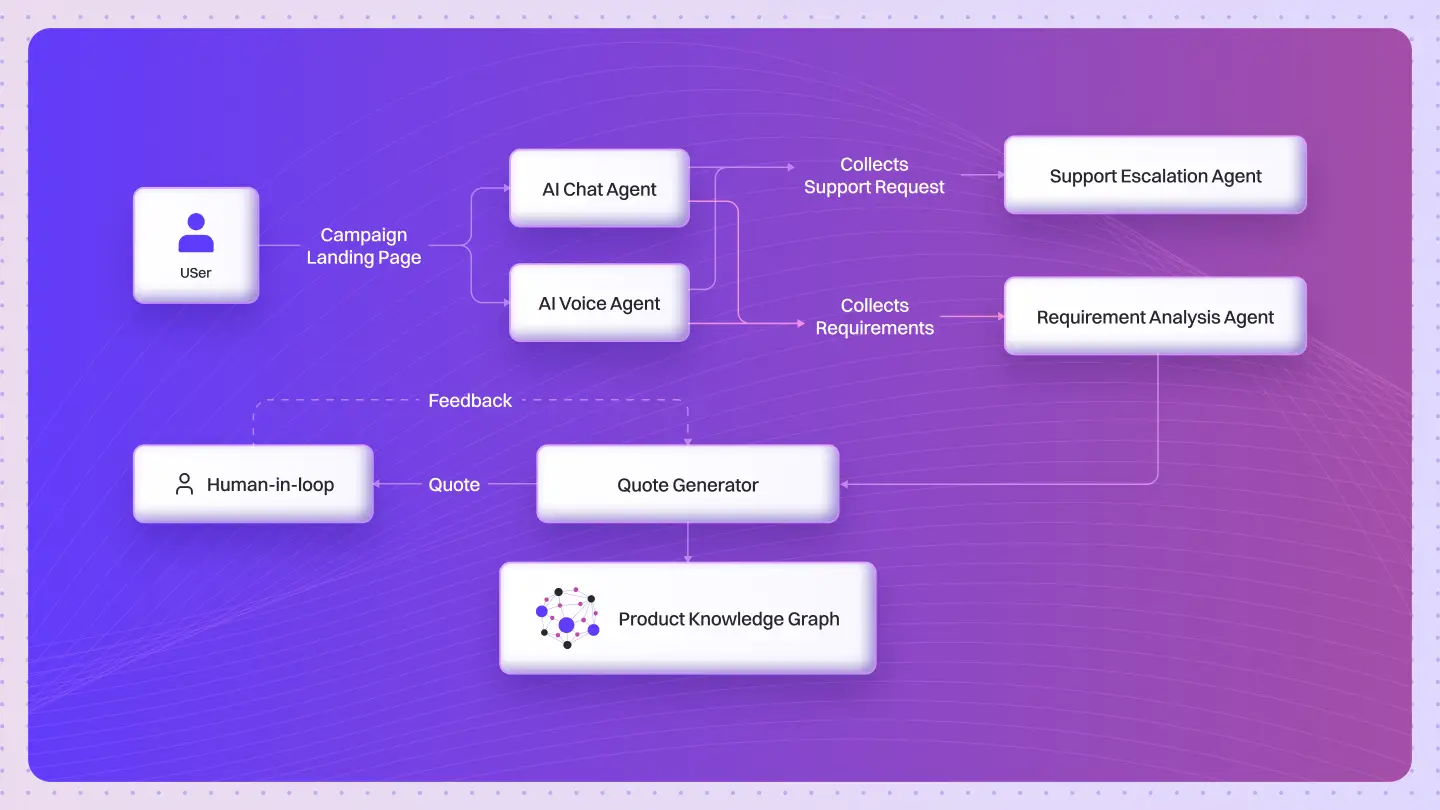

- Human-in-the-Loop: It is designed to augment human capabilities, not replace human oversight in critical domains.

These principles are not just a checklist.

They represent a deep engineering and ethical commitment throughout the AI lifecycle.

Why is trustworthiness critical for AI agent deployment?

Because without trust, AI will never reach its potential.

Powerful technology requires strong guardrails.

When AI agents are deployed in high-stakes fields like medicine, finance, or transportation, the consequences of failure are severe.

Trustworthiness is critical for:

- User Adoption: People will not use or rely on systems they do not trust.

- Risk Management: It helps organizations identify and mitigate potential harms before they occur.

- Regulatory Compliance: Governments worldwide are introducing regulations that require AI systems to be safe and fair.

- Preventing Harm: Ensuring AI does not perpetuate societal biases or make catastrophic errors.

Companies like Google DeepMind, Anthropic, and Microsoft aren’t just pursuing performance.

They have entire teams dedicated to AI safety and alignment.

Anthropic’s “Constitutional AI” approach, for example, trains models to follow an explicit, written set of principles. This isn’t just good ethics; it’s good business and essential engineering.

What key technical mechanisms ensure AI trustworthiness?

Trust is not achieved by accident; it is engineered.

Developers use a growing toolkit of specialized techniques to build and validate these principles directly into AI agents. The focus is less on general-purpose coding and more on making systems verifiable and safe.

Formal Verification:

This uses mathematical proofs to show that a system, or a formal model of it, adheres to specified rules. One example is proving that a self-driving car’s controller will never accelerate when a red light is detected. The guarantee covers only the properties and assumptions that were formalized, which is why the technique is reserved for critical safety properties.
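To make the idea concrete, here is a toy stand-in: an exhaustive check of a tiny, fully discrete controller. Real formal verification relies on theorem provers, model checkers, or SMT solvers and handles far larger state spaces. The `controller` function, the state variables, and the speed range below are all hypothetical, chosen only so every state can be enumerated.

```python
from itertools import product

LIGHTS = ("red", "yellow", "green")
SPEEDS = range(0, 31)      # discretized speed, hypothetical units
OBSTACLE = (False, True)


def controller(light, speed, obstacle):
    """Hypothetical driving policy over a small discrete state space."""
    if obstacle or light == "red":
        return "brake" if speed > 0 else "hold"
    if light == "yellow":
        return "hold"
    return "accelerate" if speed < 30 else "hold"


def find_counterexample(policy):
    """Check every state; return one that violates the safety rule, else None.

    Safety rule: the policy never returns "accelerate" when the light is red.
    """
    for light, speed, obstacle in product(LIGHTS, SPEEDS, OBSTACLE):
        if light == "red" and policy(light, speed, obstacle) == "accelerate":
            return (light, speed, obstacle)
    return None


def buggy_controller(light, speed, obstacle):
    """Same policy with a deliberate bug: always speeds up from a crawl."""
    if speed < 5:
        return "accelerate"
    return controller(light, speed, obstacle)


print(find_counterexample(controller))
print(find_counterexample(buggy_controller))
```

Because the state space is finite and fully enumerated, a result of `None` means the rule holds for every state of this model. The buggy variant yields a concrete violating state, which is the kind of counterexample a model checker reports.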

Interpretability Methods:

These are techniques that shine a light inside the AI’s “black box.” Methods like SHAP (SHapley Additive exPlanations) or LIME (Local Interpretable Model-agnostic Explanations) help us understand which input features an agent weighed most heavily in making a specific decision.
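The idea behind Shapley-based explanations can be shown without any library. The sketch below computes exact Shapley values for a small hypothetical scoring function by replacing "missing" features with a baseline value. It is not the SHAP library's API, and real tools use approximations because the exact computation grows exponentially with the number of features.

```python
from itertools import combinations
from math import factorial


def shapley_values(model, instance, baseline):
    """Exact Shapley attribution of model(instance) - model(baseline) per feature."""
    n = len(instance)

    def value(present):
        # Features in `present` take the instance value; the rest use the baseline.
        x = [instance[i] if i in present else baseline[i] for i in range(n)]
        return model(x)

    attributions = []
    for i in range(n):
        others = [j for j in range(n) if j != i]
        phi = 0.0
        for size in range(n):
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                present = set(subset)
                phi += weight * (value(present | {i}) - value(present))
        attributions.append(phi)
    return attributions


def risk_score(x):
    """Hypothetical model: a weighted sum of three input features."""
    return 0.5 * x[0] + 2.0 * x[1] - 1.0 * x[2]


instance = [4.0, 1.0, 3.0]
baseline = [0.0, 0.0, 0.0]
phi = shapley_values(risk_score, instance, baseline)
print(phi)
```

For a linear model like this one, each attribution works out to the feature's weight times its distance from the baseline, and the attributions sum to the gap between the instance's score and the baseline's score. That additive property is what makes the explanation easy to read.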

Robustness Testing:

This goes beyond standard testing. It involves actively trying to break the AI.

This includes:

- Adversarial Testing: Feeding the agent subtly modified inputs designed to fool it.

- Distributional Shift Testing: Checking how the agent performs when the data it sees in the real world starts to differ from its training data.
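Both tests can be sketched on a toy model. The example below assumes a hypothetical two-feature linear classifier and an invented "ground truth" that stops matching the model once the first feature grows past 5. Every name and number is an assumption made for illustration. For a linear model, the worst-case bounded perturbation has a closed form, so no attack library is needed.

```python
import random

# Hypothetical linear classifier: predicts 1 when x0 - 2*x1 > 0.
WEIGHTS = [1.0, -2.0]


def score(x):
    return sum(w * v for w, v in zip(WEIGHTS, x))


def predict(x):
    return 1 if score(x) > 0 else 0


def sign(value):
    return (value > 0) - (value < 0)


def worst_case_perturbation(x, epsilon):
    """Move each feature by epsilon in the direction that favors the other class."""
    push = -1 if predict(x) == 1 else 1
    return [v + push * epsilon * sign(w) for v, w in zip(x, WEIGHTS)]


def is_robust(x, epsilon):
    """True if no per-feature change of at most epsilon flips this linear model."""
    return predict(worst_case_perturbation(x, epsilon)) == predict(x)


def true_label(x):
    # Assumed ground truth: the linear rule only holds while x0 < 5.
    return 1 if x[0] > 2 * x[1] and x[0] < 5 else 0


def accuracy(samples):
    return sum(predict(x) == true_label(x) for x in samples) / len(samples)


rng = random.Random(0)
in_distribution = [[rng.uniform(0, 4), rng.uniform(0, 2)] for _ in range(200)]
shifted = [[rng.uniform(3, 9), rng.uniform(0, 2)] for _ in range(200)]

point = [1.0, 0.2]
print(is_robust(point, 0.1), is_robust(point, 0.3))
print(accuracy(in_distribution), accuracy(shifted))
```

The same input survives a small perturbation but flips under a larger one. The model also looks perfect on data resembling its training range and degrades once the inputs drift, which is exactly what a test on in-distribution data alone would miss.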

Quick Test: Spot the Trust Gap

A lender deploys a new AI agent to approve or deny loan applications. It is 99.5% accurate compared to historical human decisions. However, an audit reveals it denies loans to applicants from a specific zip code at a rate 50% higher than average, even when their financial profiles are identical to approved applicants.

Where is the trust gap?

The system is accurate but fails the principle of Fairness. Its performance metric hides a harmful, embedded bias. A trustworthy AI would have been developed with fairness-aware algorithms and audited for bias before deployment.

Diving Deeper into Trustworthy AI

How is trustworthiness measured in AI agents?

It’s measured with a broader set of metrics beyond just accuracy. This includes fairness metrics (e.g., demographic parity, equalized odds), robustness scores against adversarial attacks, explainability scores, and formal audits against established responsible AI frameworks like the NIST AI Risk Management Framework.
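As a rough sketch of what two of these fairness metrics compute, the example below measures demographic parity (the gap in approval rates between groups) and equalized odds (the larger of the gaps in true positive and false positive rates). The data is invented and the function names are assumptions for this example. Libraries that offer these metrics may define them slightly differently.

```python
def _rate(flags):
    flags = list(flags)
    return sum(flags) / len(flags) if flags else 0.0


def group_rates(y_true, y_pred, groups):
    """Per-group selection rate, true positive rate, and false positive rate."""
    rates = {}
    for g in sorted(set(groups)):
        rows = [(t, p) for t, p, gg in zip(y_true, y_pred, groups) if gg == g]
        rates[g] = {
            "selection": _rate(p for _, p in rows),
            "tpr": _rate(p for t, p in rows if t == 1),
            "fpr": _rate(p for t, p in rows if t == 0),
        }
    return rates


def gap(rates, key):
    values = [r[key] for r in rates.values()]
    return max(values) - min(values)


def demographic_parity_difference(rates):
    return gap(rates, "selection")


def equalized_odds_difference(rates):
    return max(gap(rates, "tpr"), gap(rates, "fpr"))


# Invented audit sample: 1 = approved (y_pred) or creditworthy (y_true).
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
y_true = [1, 1, 0, 0, 1, 1, 0, 0]
y_pred = [1, 1, 1, 0, 1, 0, 0, 0]

rates = group_rates(y_true, y_pred, groups)
print(demographic_parity_difference(rates))
print(equalized_odds_difference(rates))
```

A value of 0 on either metric would mean the groups are treated identically by that measure. Here group A is approved three times as often as group B, even though both groups contain the same share of creditworthy applicants.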

What role does transparency play in Trustworthy AI?

Transparency is fundamental. It allows for accountability. If we don’t know why an AI agent made a decision, we can’t debug it, we can’t contest it, and we can’t trust it. It’s the difference between a doctor saying “you need surgery” and a doctor showing you the X-ray and explaining exactly why.

How do developers balance performance and safety in Trustworthy AI Agents?

It’s a deliberate trade-off. Sometimes, adding a safety guardrail or a fairness constraint might slightly lower a raw performance metric. The principle of trustworthy AI dictates that safety and fairness are non-negotiable requirements, not features to be optimized away for a minor gain in accuracy.

What are the regulatory approaches to ensuring AI trustworthiness?

Governments are stepping in. The EU’s AI Act is a landmark example, classifying AI systems by risk level and imposing strict requirements on high-risk applications. Other regions are developing similar frameworks, moving trustworthy AI from a best practice to a legal requirement.

How does value alignment contribute to trustworthy AI?

Value alignment is the process of ensuring an AI agent’s goals are aligned with human values. This is crucial for autonomous agents. We need to ensure that when an agent pursues its objective, it does so in a way that is beneficial and not harmful to humans, even if it finds a shortcut we didn’t anticipate.

What testing methodologies are used to verify AI trustworthiness?

Beyond standard software testing, it involves red teaming (where experts try to break the AI’s safety rules), bias and fairness audits, simulations of edge-case scenarios, and formal verification methods.

How can bias be detected and mitigated in AI agents?

Bias can be detected using specialized auditing tools that analyze an agent’s decisions across different demographic groups. Mitigation strategies include curating more diverse and representative training data, using fairness-aware machine learning algorithms, and implementing post-processing techniques to adjust model outputs.
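One simple post-processing idea is to pick a separate score cutoff per group so that approval rates line up. The sketch below uses invented scores and hypothetical function names. It illustrates the mechanics only, and whether group-specific thresholds are appropriate depends heavily on the domain.

```python
def selection_rates(decisions, groups):
    """Share of positive decisions within each group."""
    rates = {}
    for g in sorted(set(groups)):
        picked = [d for d, gg in zip(decisions, groups) if gg == g]
        rates[g] = sum(picked) / len(picked)
    return rates


def group_thresholds(scores, groups, target_rate):
    """Per group, find the score cutoff that approves roughly target_rate of it."""
    thresholds = {}
    for g in sorted(set(groups)):
        ranked = sorted((s for s, gg in zip(scores, groups) if gg == g), reverse=True)
        k = max(1, round(target_rate * len(ranked)))
        thresholds[g] = ranked[k - 1]
    return thresholds


# Invented model scores for two groups of four applicants each.
scores = [0.9, 0.8, 0.4, 0.3, 0.6, 0.5, 0.2, 0.1]
groups = ["A"] * 4 + ["B"] * 4

single_cutoff = [s >= 0.7 for s in scores]
cutoffs = group_thresholds(scores, groups, target_rate=0.5)
adjusted = [s >= cutoffs[g] for s, g in zip(scores, groups)]

print(selection_rates(single_cutoff, groups))
print(selection_rates(adjusted, groups))
```

With one global cutoff, nobody in group B is approved. With per-group cutoffs, both groups are approved at the same rate. The ranking inside each group is unchanged, so the adjustment leaves the underlying model untouched.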

What are the industry standards for Trustworthy AI development?

Standards are emerging rapidly. The NIST AI Risk Management Framework (RMF) in the U.S. and ISO/IEC standards (like ISO/IEC 42001) provide guidelines for managing risks and implementing trustworthy AI principles in a structured, repeatable way.

The future of AI isn’t just about creating more powerful intelligence.

It’s about creating intelligence we can fundamentally trust.