Zero-Shot AI Agents are autonomous systems built on large language models that can understand and perform tasks they weren’t explicitly trained on, using general reasoning abilities rather than specific training examples.

Think of a Zero-Shot AI Agent as a human expert who can solve a problem they’ve never seen before by applying general knowledge and reasoning skills. If you explain the rules of a new board game to a smart friend, they can play it immediately without practice. That’s how Zero-Shot AI Agents approach new tasks. They don’t need practice games.

This capability is a significant step forward. It removes the bottleneck of collecting large, task-specific datasets, making AI more flexible and immediately useful for a wider range of unique problems.

What is a Zero-Shot AI Agent?

It’s an AI that can improvise. A Zero-Shot Agent leverages the vast, generalized knowledge embedded within its foundation model (like GPT-4 or Claude). When given a novel task, it uses its understanding of language, logic, and concepts to reason its way to a solution.

It doesn’t look for a specific memory of how to perform that exact task. Instead, it understands the intent behind the request and formulates a plan from first principles. This allows it to handle tasks that its creators never anticipated.

How do Zero-Shot AI Agents work?

They operate on pure instruction and reasoning. The process is surprisingly intuitive:

- Instruction: The agent receives a task in natural language, like “Summarize the customer feedback from these three emails and identify the most common complaint.”

- Reasoning: The agent’s core LLM analyzes the request. It understands concepts like “summarize,” “customer feedback,” and “common complaint” from its general training.

- Planning: It breaks the task down into logical steps. “First, I need to read email one. Then email two. Then email three. Then I need to list the complaints from each. Finally, I will count them to find the most common one and write a summary.”

- Execution: The agent performs these steps, often using tools if needed, to arrive at the final answer.

It does all of this without ever having been explicitly trained on a “summarize customer feedback” dataset.
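The four steps above can be sketched as a small loop. This is only an illustration under stated assumptions: `fake_llm` is a hypothetical stand-in that returns canned text so the example runs offline, and the `PLAN:` and `STEP:` prefixes are made-up conventions, not part of any real model API.

```python
from typing import Callable, Dict, List


def fake_llm(prompt: str) -> str:
    """Hypothetical stand-in for a real model call; returns canned text."""
    if prompt.startswith("PLAN:"):
        return "1. Read each email\n2. List the complaints\n3. Count and summarize"
    # Otherwise treat the prompt as a "STEP:" request and pretend it was done.
    return "done: " + prompt[len("STEP:"):].strip()


def plan(llm: Callable[[str], str], instruction: str) -> List[str]:
    """Planning: ask the model for numbered steps and parse them into a list."""
    raw = llm(f"PLAN: {instruction}")
    steps = []
    for line in raw.splitlines():
        line = line.strip()
        if line:
            # Drop a leading "1." style prefix if present.
            steps.append(line.split(".", 1)[-1].strip())
    return steps


def run_agent(llm: Callable[[str], str], instruction: str) -> Dict[str, List[str]]:
    """Instruction -> plan -> execute each step in order."""
    steps = plan(llm, instruction)
    results = [llm(f"STEP: {step}") for step in steps]
    return {"steps": steps, "results": results}


outcome = run_agent(
    fake_llm,
    "Summarize the customer feedback from these three emails.",
)
for step, result in zip(outcome["steps"], outcome["results"]):
    print(step, "->", result)
```

In a real agent, the stand-in would be replaced by a call to an actual model, and the execution step might invoke tools instead of calling the model again.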

What makes Zero-Shot Agents different from other AI systems?

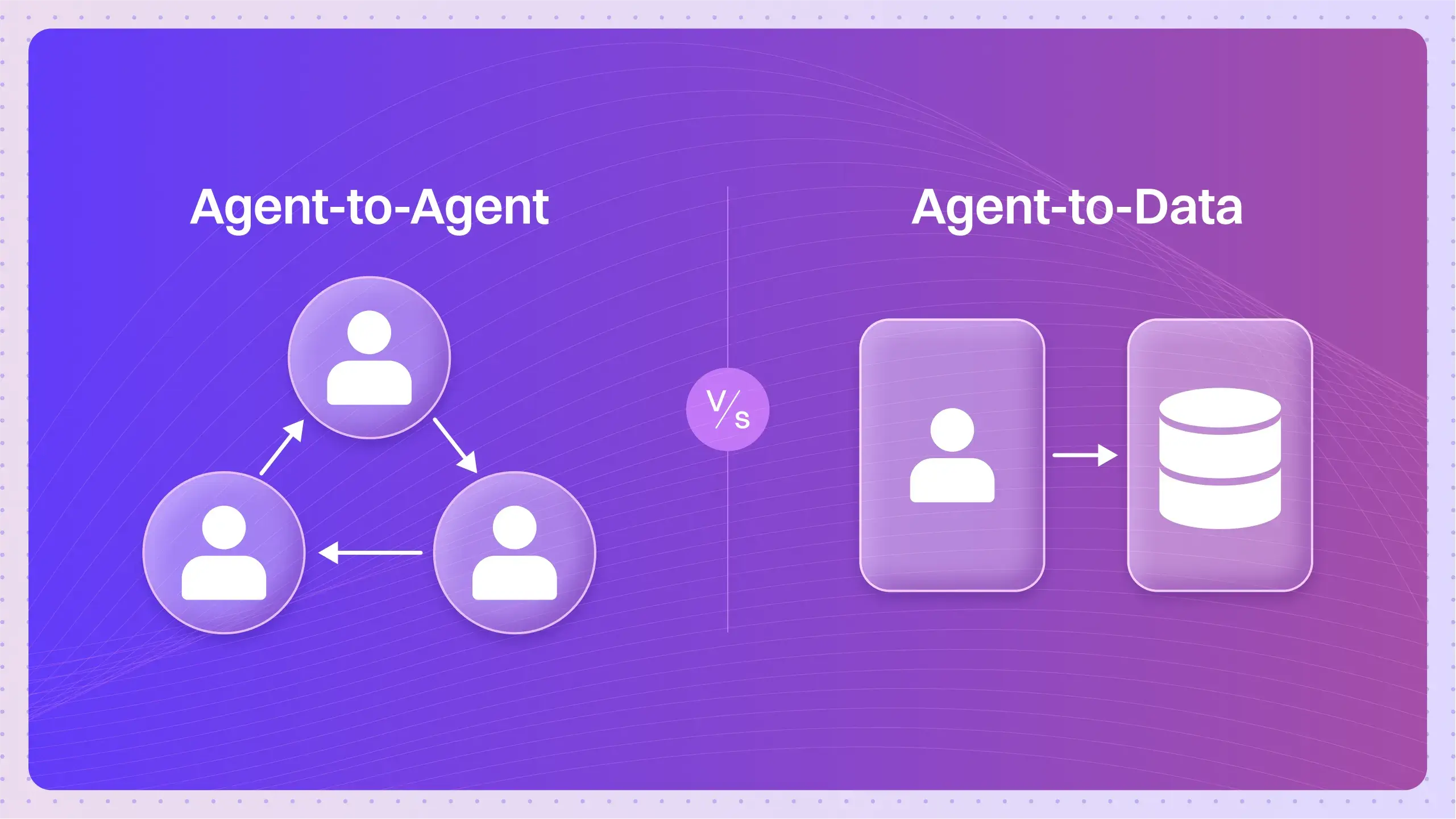

The key difference is the need for examples.

Traditional machine learning systems typically require hundreds or thousands of labeled examples to learn a task. You’d need a large dataset of emails, each labeled with its “common complaint,” to train one.

Few-Shot Agents are a step up. You can show them a few examples (the “few shots”) in the prompt to teach them the pattern you want them to follow.

Zero-Shot Agents need zero examples. They are designed to understand the instruction alone. This makes them incredibly powerful for dynamic environments where new tasks appear constantly. You don’t need to stop and gather data or provide examples; you just tell the agent what to do.
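The difference between the zero-shot and few-shot styles is easiest to see in the prompt text itself. The sketch below builds both; the function names and the `Input:`/`Output:` layout are illustrative assumptions, not a required format.

```python
from typing import List, Tuple


def zero_shot_prompt(instruction: str, text: str) -> str:
    """Instruction only: no worked examples are included."""
    return f"{instruction}\n\nInput: {text}\nOutput:"


def few_shot_prompt(
    instruction: str, examples: List[Tuple[str, str]], text: str
) -> str:
    """Same instruction, preceded by a few input/output pairs to imitate."""
    shots = "\n\n".join(f"Input: {x}\nOutput: {y}" for x, y in examples)
    return f"{instruction}\n\n{shots}\n\nInput: {text}\nOutput:"


instruction = "Classify the complaint into one category."
zero = zero_shot_prompt(instruction, "The app keeps crashing")
few = few_shot_prompt(
    instruction,
    [("Package arrived late", "shipping"), ("I was charged twice", "billing")],
    "The app keeps crashing",
)
print(zero)
print("-----")
print(few)
```

The zero-shot version contains a single `Input:` block, the one to be answered. The few-shot version adds one block per example before it.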

Take OpenAI’s GPT-4. You can ask it to perform a coding task in a new or obscure framework it wasn’t specifically trained on. It draws on its general knowledge of programming principles to work out a solution on the fly. That is a zero-shot capability in action.

What are the capabilities and limitations of Zero-Shot AI Agents?

Their main capability is extreme flexibility. They can adapt to new problems instantly, making them ideal for:

- General-purpose assistants.

- Creative problem-solving.

- Handling unique, one-off tasks.

However, they have limitations.

- Reliability: For highly specialized, niche tasks, a fine-tuned model trained on specific data will almost always outperform a zero-shot agent.

- Ambiguity: Their performance is highly dependent on the quality of the instruction. A vague or poorly worded prompt can lead to incorrect or nonsensical results.

- Complexity: Without a solid plan, they can struggle with extremely complex, multi-step tasks that require deep domain expertise.

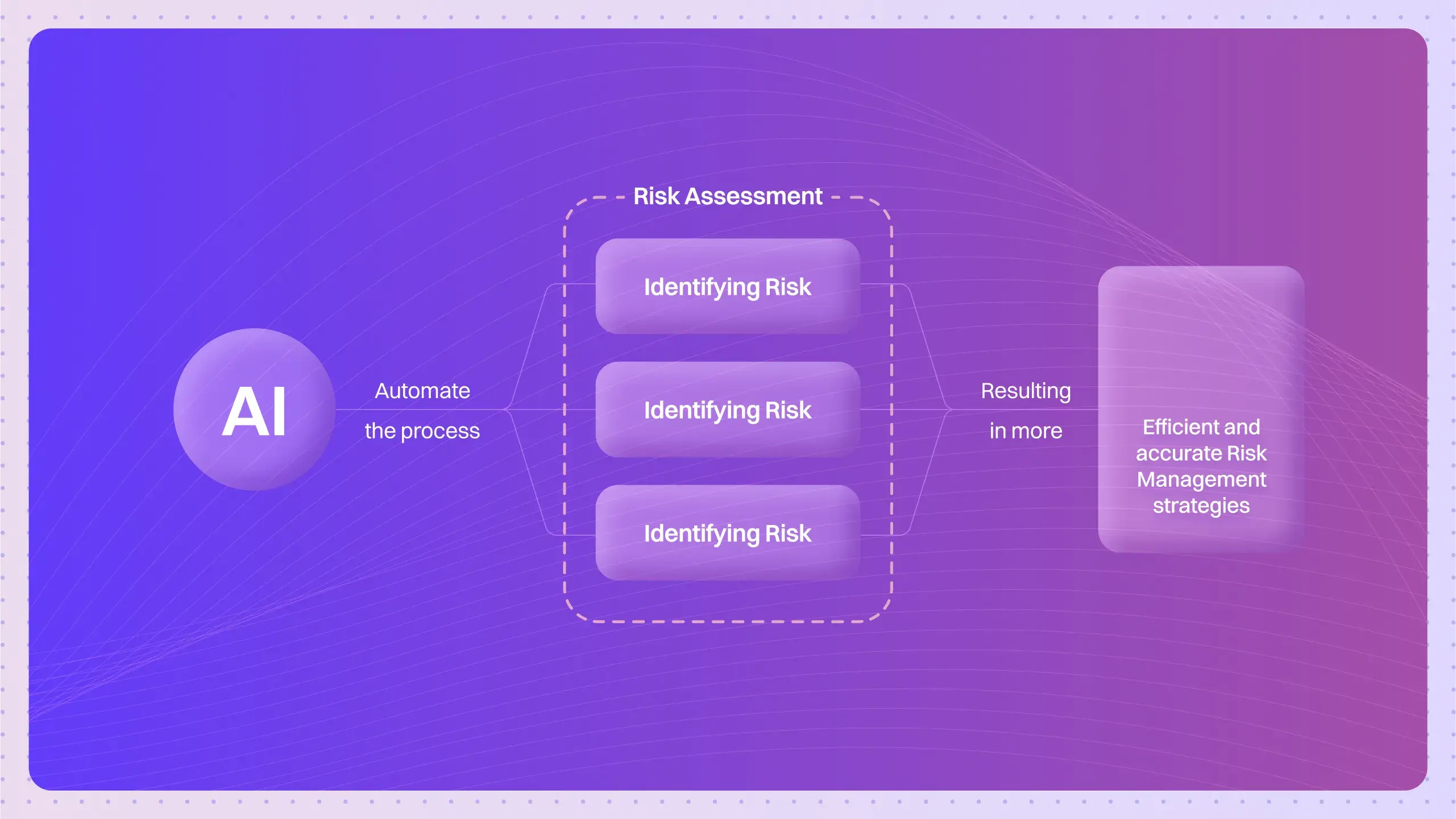

What technical mechanisms enable Zero-Shot AI Agent capabilities?

This isn’t just about a bigger model; it’s about how the model is prompted and structured to think. The core is not general-purpose code; it is a set of robust frameworks for reasoning.

- In-context learning: The LLM’s ability to learn from the information provided solely within the prompt itself, without updating its internal weights. This allows it to adapt its behavior based on the immediate instruction.

- Chain-of-thought reasoning: A prompting technique that encourages the agent to “think step-by-step.” Breaking a problem down into a sequence of intermediate thoughts and actions often improves accuracy substantially on complex, novel tasks.

- Task decomposition: The agent’s ability to look at a large, complex goal and break it down into smaller, manageable subtasks that it can execute sequentially.
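As a rough illustration of the chain-of-thought item, the sketch below appends a step-by-step instruction to a prompt and then pulls the final answer out of the model's reply. The suffix wording and the `Answer:` marker are assumptions chosen for this example, and the sample response is hand-written rather than produced by a model.

```python
from typing import Optional

# Assumed wording; any phrasing that asks for intermediate steps would do.
COT_SUFFIX = (
    "Think through the problem step by step. "
    "Then give the final result on its own line, prefixed with 'Answer:'."
)


def with_chain_of_thought(instruction: str) -> str:
    """Append the step-by-step instruction to a plain zero-shot prompt."""
    return f"{instruction}\n\n{COT_SUFFIX}"


def extract_answer(response: str) -> Optional[str]:
    """Return the text after the last 'Answer:' line, or None if absent."""
    for line in reversed(response.splitlines()):
        if line.strip().lower().startswith("answer:"):
            return line.split(":", 1)[1].strip()
    return None


prompt = with_chain_of_thought(
    "Identify the most common complaint in these three emails."
)

# Hand-written example of what a step-by-step reply might look like.
sample_response = (
    "Email 1 mentions late delivery.\n"
    "Email 2 mentions late delivery and a damaged box.\n"
    "Email 3 mentions late delivery.\n"
    "Answer: late delivery"
)
print(extract_answer(sample_response))
```

Separating the reasoning from a clearly marked final line makes the result easier to consume in later steps, while the intermediate text stays available for inspection.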

Quick Test: Can you write a zero-shot prompt?

Imagine you have an agent that can read documents. You want it to act as a compliance officer for a new, internal marketing document. You can’t provide it with examples of “good” or “bad” documents.

How would you prompt it to check for potential issues using a zero-shot approach?

A good prompt would rely on general principles: “Review the attached marketing document. Act as a compliance officer. Identify any statements that make specific, unproven promises or guarantees. Also, flag any language that could be considered pressuring or overly aggressive. List these statements and explain why they are problematic.”

This prompt gives the agent a role, a clear goal, and relies on its general understanding of concepts like “unproven promises” and “aggressive language.”
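If you write prompts like this often, the same ingredients (role, task, general criteria, output format) can be assembled from a small template. The helper below is a hypothetical sketch; its name and parameters are not from any library.

```python
from typing import List


def build_zero_shot_prompt(
    role: str, task: str, checks: List[str], output_format: str
) -> str:
    """Assemble a zero-shot prompt from a role, a task, and general criteria.

    No example documents are included; the criteria are stated as principles.
    """
    lines = [f"Act as {role}.", task, "", "Check for:"]
    lines += [f"- {check}" for check in checks]
    lines += ["", output_format]
    return "\n".join(lines)


compliance_prompt = build_zero_shot_prompt(
    role="a compliance officer",
    task="Review the attached marketing document.",
    checks=[
        "statements that make specific, unproven promises or guarantees",
        "language that could be considered pressuring or overly aggressive",
    ],
    output_format="List each flagged statement and explain why it is problematic.",
)
print(compliance_prompt)
```

Keeping the criteria in a list makes it easy to add or remove a check without rewriting the whole prompt.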

Questions That Push the Boundaries

Why are Zero-Shot capabilities important for AI agents?

They make AI scalable and accessible. Businesses don’t need to invest in massive data collection and training for every new task. This lowers the barrier to entry and allows for much faster deployment of AI solutions.

Can Zero-Shot AI Agents truly handle any arbitrary task?

No. Their capabilities are limited by the knowledge contained in their foundation model. They can’t reliably perform tasks that require information or skills completely outside of their training data, such as predicting the outcome of a real-world physical experiment that has never been run.

What are the primary challenges in developing effective Zero-Shot AI Agents?

The main challenges are reliability and safety. Ensuring an agent will consistently interpret instructions correctly and not take harmful or unintended actions when faced with a novel problem is a major area of research.

How do prompt engineering techniques affect Zero-Shot Agent performance?

Dramatically. The prompt is the only guidance the agent has. A well-structured, clear, and unambiguous prompt can be the difference between success and total failure.

What role does reasoning play in Zero-Shot AI Agent functionality?

It is the absolute core of their functionality. Without the ability to reason, infer, and plan, an LLM is just a text-completion engine. Reasoning is what allows it to understand a novel task and figure out how to do it.

How do Zero-Shot AI Agents compare to Few-Shot and Fine-Tuned approaches?

- Zero-Shot: No examples needed. Fast and flexible, but potentially less reliable.

- Few-Shot: A few examples are provided in the prompt. Better for guiding the model on specific formats or patterns.

- Fine-Tuned: The model is further trained on a task-specific dataset. Typically the highest reliability for that one task, but slower, more expensive, and less flexible.

What are the most promising real-world applications of Zero-Shot AI Agents?

Personalized assistants, complex data analysis where queries are always unique, creative content generation, and dynamic problem-solving tools for professionals like programmers, lawyers, and researchers.

What safety considerations are unique to Zero-Shot AI Agents?

Their unpredictability. Because they can tackle novel tasks, they might interpret an instruction in an unforeseen and potentially dangerous way. Setting clear constraints and maintaining human oversight are critical.
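One simple way to picture “clear constraints” and “human oversight” is a gate in front of the agent's tools. The sketch below is a minimal illustration, assuming a hypothetical set of tool names and an `approve` callback that stands in for a human reviewer; it is not a complete safety mechanism.

```python
from typing import Callable, Dict

# Hypothetical policy: which tools may run freely and which need sign-off.
ALLOWED = {"read_document", "summarize"}
NEEDS_APPROVAL = {"send_email"}


def guarded_call(
    tool_name: str,
    tools: Dict[str, Callable[[str], str]],
    arg: str,
    approve: Callable[[str, str], bool] = lambda name, arg: False,
) -> str:
    """Run a tool only if policy allows it; deny by default."""
    if tool_name in NEEDS_APPROVAL:
        # Sensitive tools run only when the reviewer callback says yes.
        if not approve(tool_name, arg):
            return "blocked: human approval required"
    elif tool_name not in ALLOWED:
        return "blocked: tool not allowed"
    return tools[tool_name](arg)


# Toy tools so the example runs on its own.
demo_tools: Dict[str, Callable[[str], str]] = {
    "read_document": lambda path: f"read {path}",
    "send_email": lambda body: f"sent {body}",
    "delete_files": lambda path: f"deleted {path}",
}

print(guarded_call("read_document", demo_tools, "report.txt"))
print(guarded_call("send_email", demo_tools, "hello"))
print(guarded_call("delete_files", demo_tools, "/tmp"))
```

The default `approve` callback refuses everything, so a sensitive action only runs when a reviewer explicitly opts in.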

How can businesses effectively implement Zero-Shot AI Agents?

Start with low-risk, high-value tasks. Use them as assistants to augment human experts, not replace them. Invest heavily in clear prompt design and establish robust testing and validation processes.

What future developments can we expect in Zero-Shot Agent technology?

We can expect improvements in their reasoning and planning abilities, allowing them to handle more complex, multi-step tasks. We’ll also see better tools for constraining their behavior to ensure they operate safely and reliably.

The move toward zero-shot capabilities is shifting AI from a set of specialized tools toward a more general-purpose problem solver.