What is Model Inference?

Model inference is the process of using a trained machine learning model to make predictions on new data. It lets applications derive insights and outcomes from that data, often in real time, which supports timely decision-making.

How does Model Inference operate for accurate predictions?

Model inference operates as a core function in machine learning workflows. It applies pre-trained models to unseen data to generate outputs, leveraging patterns learned during training. Here’s how it works:

- Data Input: New data with the same features and format as the training set is fed into the model. Applying the same preprocessing used during training keeps the inputs compatible.

- Model Computation: The model uses learned parameters to perform computations and predict outputs.

- Prediction Generation: The result may be a classification label, a regression value, or a set of class probabilities.

- Real-Time Processing: For applications needing instant responses, models are optimized to minimize latency.

- Post-Processing: Outputs are transformed or interpreted for practical use, ensuring relevance to the application.
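The steps above can be sketched in a few lines of Python. This is a minimal illustration, not a production pipeline: the weights, bias, and feature statistics are hypothetical values standing in for a trained logistic-regression model, and the function names are chosen only for this example.

```python
import math

# Hypothetical parameters standing in for a trained logistic-regression model.
WEIGHTS = [0.8, -0.5]
BIAS = 0.1
# Hypothetical feature statistics saved at training time and reused here.
FEATURE_MEANS = [10.0, 2.0]
FEATURE_STDS = [4.0, 1.0]


def preprocess(raw):
    """Data input: scale features the same way they were scaled in training."""
    return [(x - m) / s for x, m, s in zip(raw, FEATURE_MEANS, FEATURE_STDS)]


def compute(features):
    """Model computation: apply the learned parameters to get a probability."""
    z = sum(w * x for w, x in zip(WEIGHTS, features)) + BIAS
    return 1.0 / (1.0 + math.exp(-z))


def postprocess(probability, threshold=0.5):
    """Post-processing: turn the probability into an application-level label."""
    label = "positive" if probability >= threshold else "negative"
    return {"label": label, "probability": round(probability, 3)}


def predict(raw):
    """Run the full inference path for one raw input."""
    return postprocess(compute(preprocess(raw)))


print(predict([14.0, 1.0]))
```

Keeping preprocessing, computation, and post-processing as separate functions makes it easier to check that the inference path applies the same transformations as training.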

Key benefits:

- Enhanced decision-making

- Real-time processing capabilities

- Support for diverse use cases, from real-time requests to bulk scoring

Techniques such as model optimization and hardware acceleration help keep predictions fast and resource-efficient while preserving accuracy.
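One common form of model optimization is weight quantization, which stores parameters at lower precision to save memory and speed up computation. The article does not name a specific technique, so the sketch below is an assumption chosen for illustration: a simple symmetric int8 scheme with one shared scale factor, using made-up weights and hypothetical function names.

```python
def quantize_int8(weights):
    """Map float weights to integers in [-127, 127] plus one shared scale."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float weights from the quantized integers."""
    return [q * scale for q in quantized]


# Made-up weights for illustration only.
weights = [0.42, -1.27, 0.05, 0.9]
quantized, scale = quantize_int8(weights)
restored = dequantize(quantized, scale)

# The rounding error per weight is bounded by half of the scale factor.
max_error = max(abs(a - b) for a, b in zip(weights, restored))
print(quantized, scale, max_error)
```

The trade-off is a small, bounded loss of precision in exchange for smaller parameters, which is why quantized models are usually re-evaluated before deployment.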

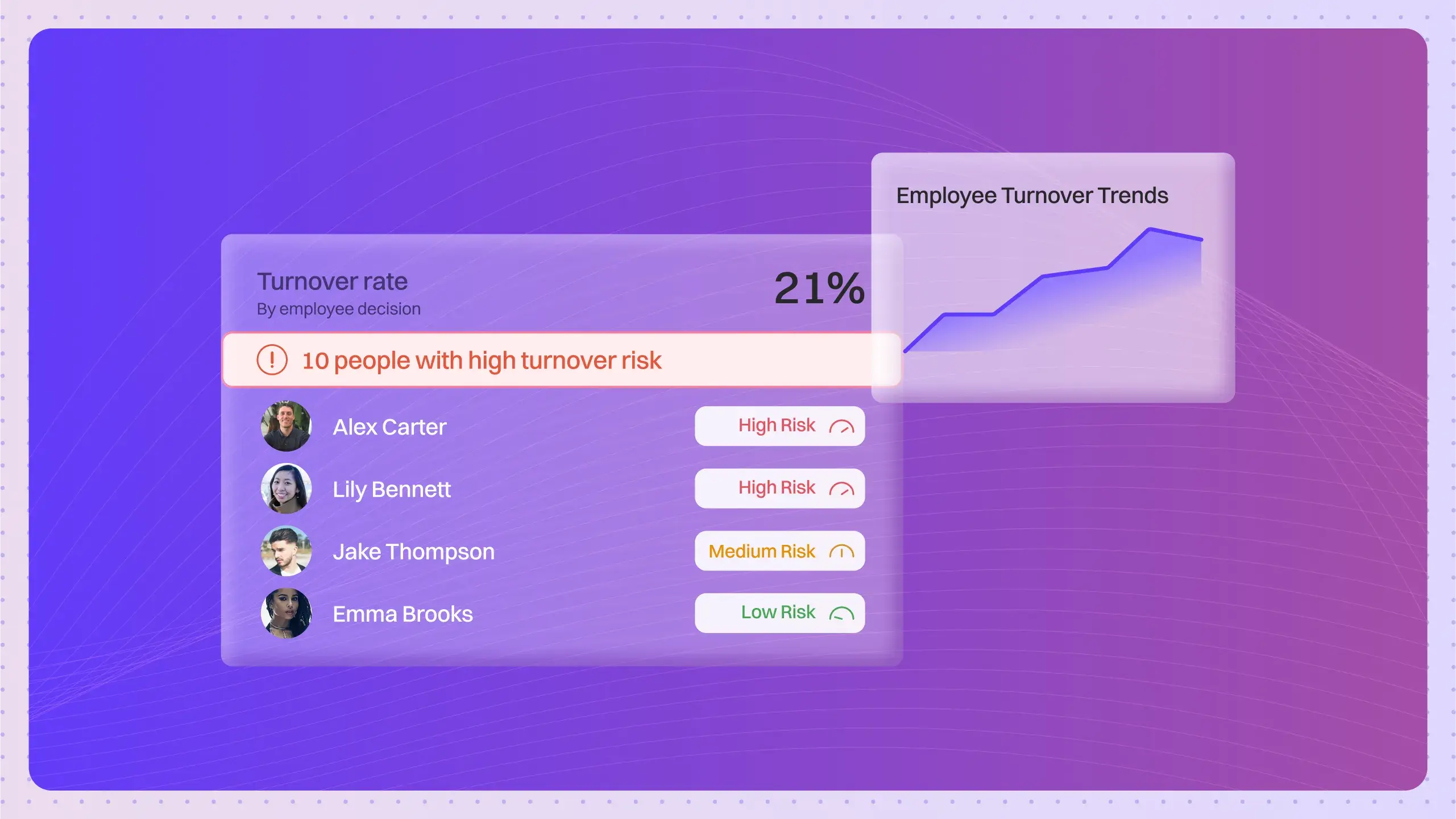

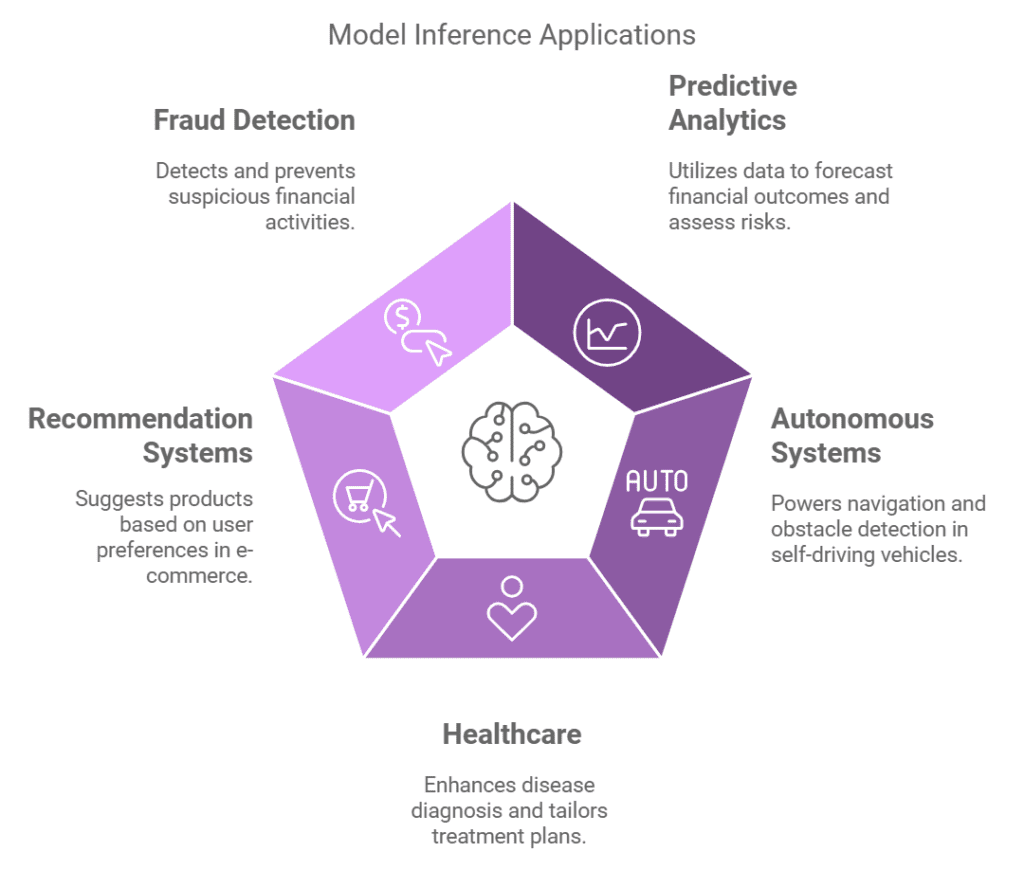

Common uses and applications of Model Inference

Model inference is vital in many industries, powering applications that require swift and accurate predictions. Here are some notable applications:

- Predictive Analytics: Applied in finance for credit scoring and risk modeling.

- Autonomous Systems: Used in self-driving cars for navigation and obstacle detection.

- Healthcare: Supports disease diagnosis and personalized treatment recommendations.

- Recommendation Systems: Drives product suggestions in e-commerce and streaming platforms.

- Fraud Detection: Identifies suspicious activities in banking and online transactions.

- Voice Assistants: Enables real-time responses in devices like Alexa or Siri.

By enabling accurate and efficient predictions, model inference drives innovation and improves operational efficiency across sectors.

What are the advantages of Model Inference?

Implementing model inference offers several benefits, including:

- Real-Time Predictions: Enables dynamic responses, improving user experiences.

- Reliable Accuracy: Delivers consistent outcomes by applying patterns learned during training.

- Scalability: Handles high data volumes with minimal performance degradation.

- Versatility: Supports diverse applications, from healthcare to entertainment.

- Cost Efficiency: Reduces manual intervention and automates decision-making.

Are there any drawbacks or limitations associated with Model Inference?

While model inference is highly beneficial, it has its challenges:

- Latency Issues: Real-time predictions can suffer delays in high-demand scenarios.

- Data Dependency: Inference accuracy depends on the quality of the training data and on how closely new inputs resemble it.

- Model Drift: Over time, models may become less accurate without retraining.

- Integration Complexity: Real-time systems require efficient infrastructure to support inference.

Addressing these limitations is critical for ensuring robust and reliable performance.
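Model drift, for instance, can be watched for by comparing live inputs against the data the model was trained on. The sketch below is one simple heuristic, not a standard drift test: it measures how far the mean of a live feature has moved from the training mean, in units of the training standard deviation. The function names and the threshold of 2.0 are assumptions made for this example.

```python
import statistics


def mean_shift(training_values, live_values):
    """Shift of the live mean from the training mean, in training std units."""
    train_mean = statistics.mean(training_values)
    train_std = statistics.stdev(training_values)
    live_mean = statistics.mean(live_values)
    if train_std == 0:
        # A constant training feature: any change at all counts as a shift.
        return 0.0 if live_mean == train_mean else float("inf")
    return abs(live_mean - train_mean) / train_std


def needs_retraining(training_values, live_values, threshold=2.0):
    """Flag the feature when the shift exceeds the (hypothetical) threshold."""
    return mean_shift(training_values, live_values) > threshold


training = [10, 12, 11, 9, 13]
print(needs_retraining(training, [11, 10, 12]))
print(needs_retraining(training, [20, 21, 19]))
```

A check like this only looks at one feature's mean, so in practice it would be one signal among several, alongside tracking prediction accuracy where labels are available.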

Real-Life Examples of Model Inference

- Netflix: Uses model inference to recommend shows based on user viewing habits, leveraging past interactions for highly personalized suggestions.

- Tesla: Applies real-time inference processes in autonomous vehicles, analyzing road conditions and making split-second decisions for safety.

How does Model Inference compare to similar concepts?

Inference can run in two modes: real-time (online) and batch. Real-time inference scores each request as it arrives and excels where immediate results are critical. Batch inference scores data in bulk on a schedule, which makes it better suited to offline analysis where throughput matters more than response time. Real-time systems therefore prioritize low latency, especially for applications that need constantly updated predictions.
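The difference between scoring one request at a time and scoring stored records in bulk can be sketched as follows. The linear model, its weights, and the function names are hypothetical and exist only to show the two call patterns.

```python
def score(features, weights=(0.6, 0.4)):
    """Hypothetical linear model: a weighted sum of two features."""
    return sum(w * x for w, x in zip(weights, features))


def predict_online(request):
    """Real-time path: score a single request and return immediately."""
    return score(request)


def predict_batch(records, batch_size=2):
    """Batch path: score stored records in fixed-size chunks."""
    results = []
    for start in range(0, len(records), batch_size):
        chunk = records[start:start + batch_size]
        results.extend(score(record) for record in chunk)
    return results


records = [(1.0, 2.0), (3.0, 4.0), (5.0, 6.0)]
print(predict_online(records[0]))
print(predict_batch(records))
```

Both paths use the same model and produce the same predictions. What differs is when the work happens and whether the caller is waiting on the result.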

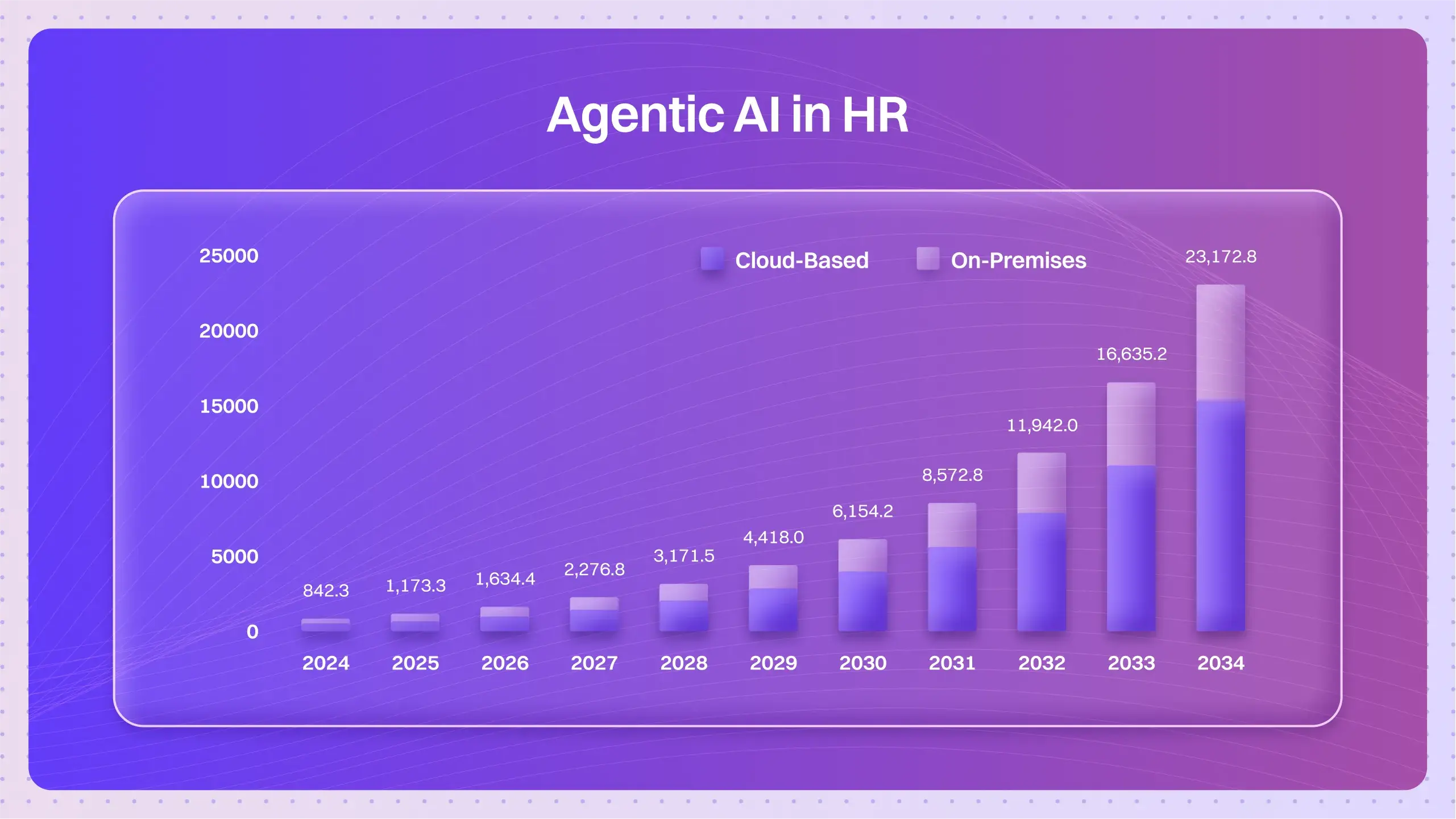

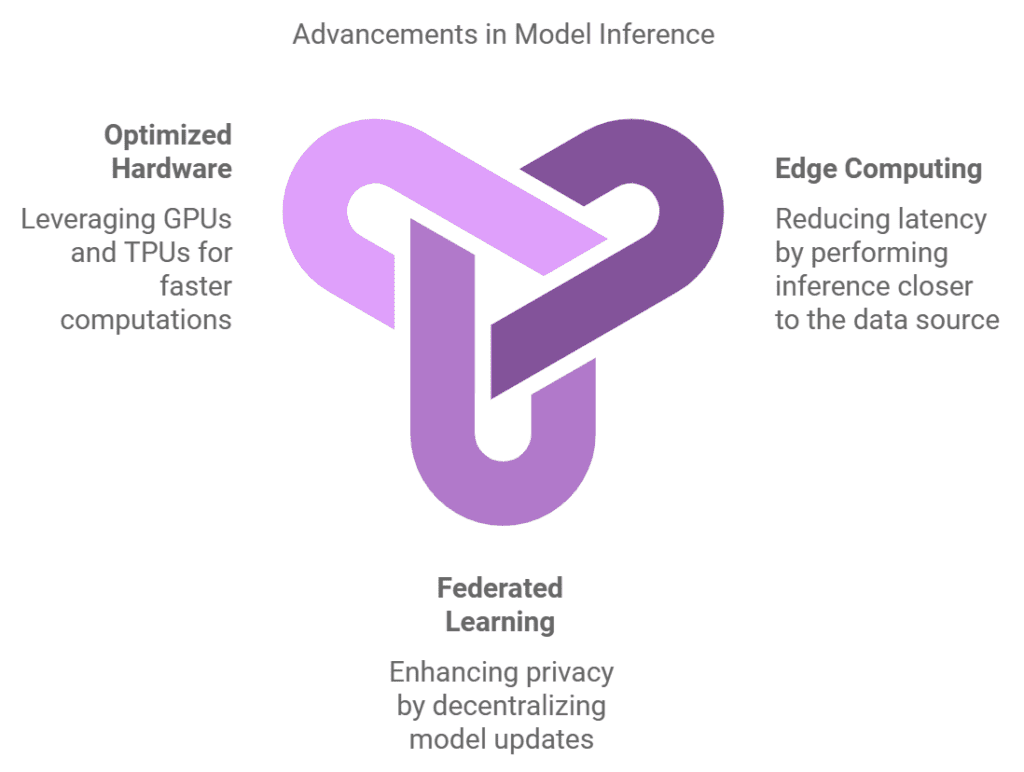

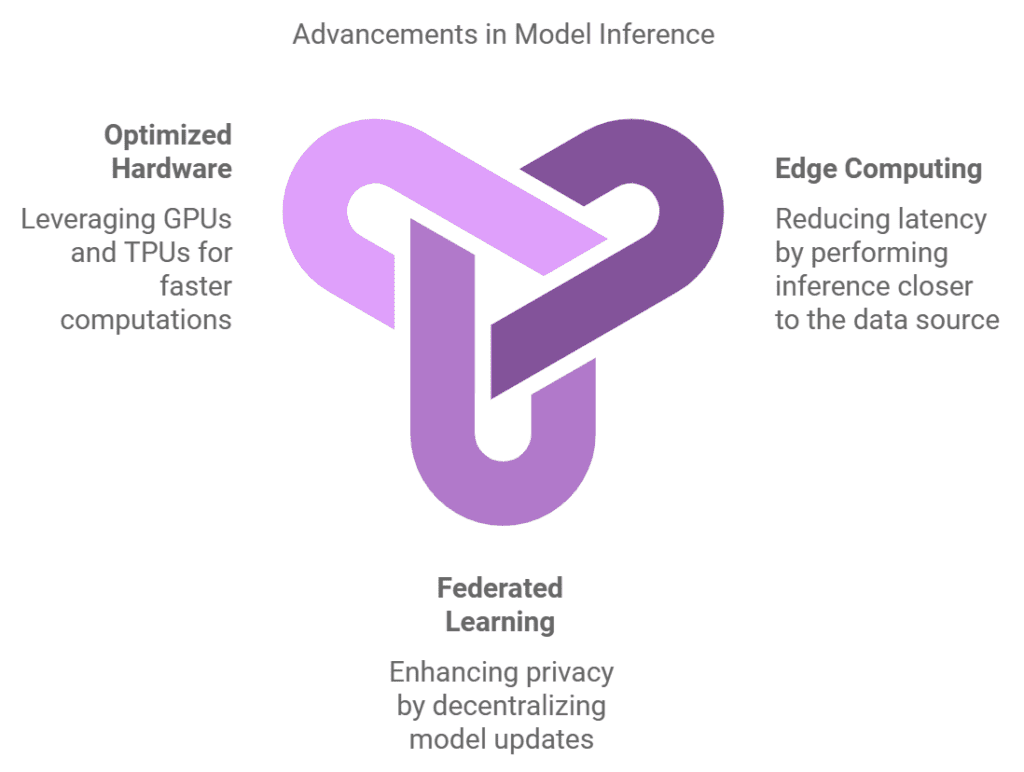

Future Trends in Model Inference

The future of model inference includes advancements in:

- Edge Computing: Reducing latency by performing inference closer to the data source.

- Federated Learning: Enhancing privacy by training and updating models across decentralized devices, so raw data stays where inference takes place.

- Optimized Hardware: Leveraging GPUs and TPUs for faster computations.

These trends will expand the scope and efficiency of real-time inference applications.

Best Practices for Model Inference

To ensure successful implementation:

- Update Models Regularly: Limit the effects of model drift by retraining with fresh data.

- Optimize Infrastructure: Use GPUs and cloud services to handle high workloads.

- Tune Hyperparameters: Refine hyperparameters when retraining, and adjust serving settings such as batch size, to improve performance.

- Test in Real Scenarios: Evaluate the model with live data to measure reliability.

Case Study

Google Photos: By applying model inference for image recognition, Google Photos reportedly improved search accuracy by 30%, enhancing user experience. This highlights the effectiveness of inference in consumer-focused applications.

Related Terms

- Predictive Modeling: The process of using models to predict future outcomes.

- Real-Time Processing: Systems that provide immediate responses to inputs.

Understanding these terms provides a broader context for model inference applications.

Step-by-Step Instructions for Implementing Model Inference

- Data Preparation: Preprocess input data to match the training set format.

- Model Deployment: Deploy the trained model on suitable infrastructure.

- Integrate System: Link the model to applications requiring predictions.

- Monitor Performance: Continuously evaluate inference accuracy and speed.

- Refine Outputs: Optimize the system based on feedback.
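The monitoring step can start with something as simple as timing each prediction call. The sketch below assumes a hypothetical `predict_fn` callable and reports median and 95th-percentile latency. It uses only the Python standard library and needs at least two measurements.

```python
import statistics
import time


def timed_predictions(predict_fn, inputs):
    """Run predict_fn on each input and record per-call latency in ms."""
    outputs, latencies_ms = [], []
    for item in inputs:
        start = time.perf_counter()
        outputs.append(predict_fn(item))
        latencies_ms.append((time.perf_counter() - start) * 1000.0)
    return outputs, latencies_ms


def latency_summary(latencies_ms):
    """Median and 95th-percentile latency from the recorded measurements."""
    cuts = statistics.quantiles(latencies_ms, n=100, method="inclusive")
    return {"p50_ms": statistics.median(latencies_ms), "p95_ms": cuts[94]}


# A stand-in model: doubling the input takes the place of a real prediction.
outputs, latencies = timed_predictions(lambda x: x * 2, list(range(20)))
print(latency_summary(latencies))
```

Tracking a high percentile alongside the median matters because occasional slow requests are what users of a real-time system tend to notice.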

Frequently Asked Questions

Q1: What is model inference?

A1: Model inference is the process of using a trained model to predict outcomes from new data.

Q2: Why is real-time inference important?

A2: Real-time inference enables immediate responses, enhancing decision-making in dynamic applications.

Q3: What techniques improve inference efficiency?

A3: Techniques like hardware acceleration, model optimization, and request batching reduce latency and improve scalability.

Q4: What types of models support inference?

A4: Any trained model can be used for inference. Neural networks, decision trees, and ensemble methods are among the most common.

Q5: How is inference different from training?

A5: Training builds the model, while inference applies it to new data for predictions.
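The split between training and inference can be seen in a small worked example. The sketch below fits a straight line by ordinary least squares (training) and then applies the fitted parameters to an unseen input (inference). The data and function names are made up for illustration.

```python
def train(xs, ys):
    """Training: estimate slope and intercept by ordinary least squares."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    variance = sum((x - mean_x) ** 2 for x in xs)
    slope = covariance / variance
    return slope, mean_y - slope * mean_x


def infer(params, x):
    """Inference: apply the already-learned parameters to a new input."""
    slope, intercept = params
    return slope * x + intercept


# Made-up training data that follows y = 2x + 1.
params = train([1, 2, 3, 4], [3, 5, 7, 9])
print(infer(params, 10))
```

Training runs once over the whole dataset to produce `params`. Inference is the cheap, repeated step that reuses them for each new input.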