What is Dimensionality Reduction?

Dimensionality reduction is a technique used in data science to simplify datasets by reducing the number of features or variables while retaining the most important information. Techniques such as PCA (Principal Component Analysis) help improve model efficiency, reduce complexity, and make data easier to visualize and analyze.

How Does Dimensionality Reduction Operate?

Dimensionality reduction involves condensing high-dimensional datasets into fewer dimensions, preserving critical information. Here’s how it functions:

- Data Analysis: High-dimensional data is evaluated to identify redundancy or irrelevant features.

- Feature Transformation: Techniques like PCA project the original features onto a new coordinate system whose axes are ordered by how much variance they capture, so the first few axes carry most of the information.

- Visualization: Tools such as t-SNE reduce dimensions for easier data visualization in 2D or 3D.

- Noise Removal: Discarding low-variance components or irrelevant features can filter out noise, which often improves model performance.

- Model Training: Fewer dimensions speed up computation and can help models generalize better.
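The transformation step above can be sketched in a few lines. This is a minimal illustration of PCA via eigendecomposition of the covariance matrix, assuming NumPy is available; the function name `pca_fit_transform` and the synthetic data are made up for this example and are not part of any library.

```python
import numpy as np

def pca_fit_transform(X, n_components):
    """Project X (n_samples, n_features) onto its top principal components."""
    # Center each feature so variance is measured around the mean.
    mean = X.mean(axis=0)
    X_centered = X - mean
    # Covariance matrix of the features (n_features x n_features).
    cov = np.cov(X_centered, rowvar=False)
    # eigh is meant for symmetric matrices; eigenvalues come back ascending.
    eigvals, eigvecs = np.linalg.eigh(cov)
    order = np.argsort(eigvals)[::-1]          # re-sort to descending variance
    eigvals, eigvecs = eigvals[order], eigvecs[:, order]
    components = eigvecs[:, :n_components]     # new coordinate axes
    explained_ratio = eigvals[:n_components] / eigvals.sum()
    return X_centered @ components, components, explained_ratio

rng = np.random.default_rng(0)
# Synthetic data (an assumption for illustration): the third feature is
# almost a copy of the first, so it is redundant.
base = rng.normal(size=(200, 2))
X = np.column_stack([
    base[:, 0],
    base[:, 1],
    base[:, 0] + 0.05 * rng.normal(size=200),
])

Z, components, ratio = pca_fit_transform(X, n_components=2)
print(Z.shape)      # (200, 2)
print(ratio.sum())  # close to 1.0, since one of the three features was redundant
```

Because the third feature adds almost no new information, two components are enough to retain nearly all of the variance in this toy dataset.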

Benefits:

- Improved Model Efficiency: Reducing features speeds up training times.

- Easier Visualization: Data reduced to two or three dimensions can be plotted and inspected directly.

- Reduced Overfitting: Fewer, more informative features leave the model less room to fit noise.

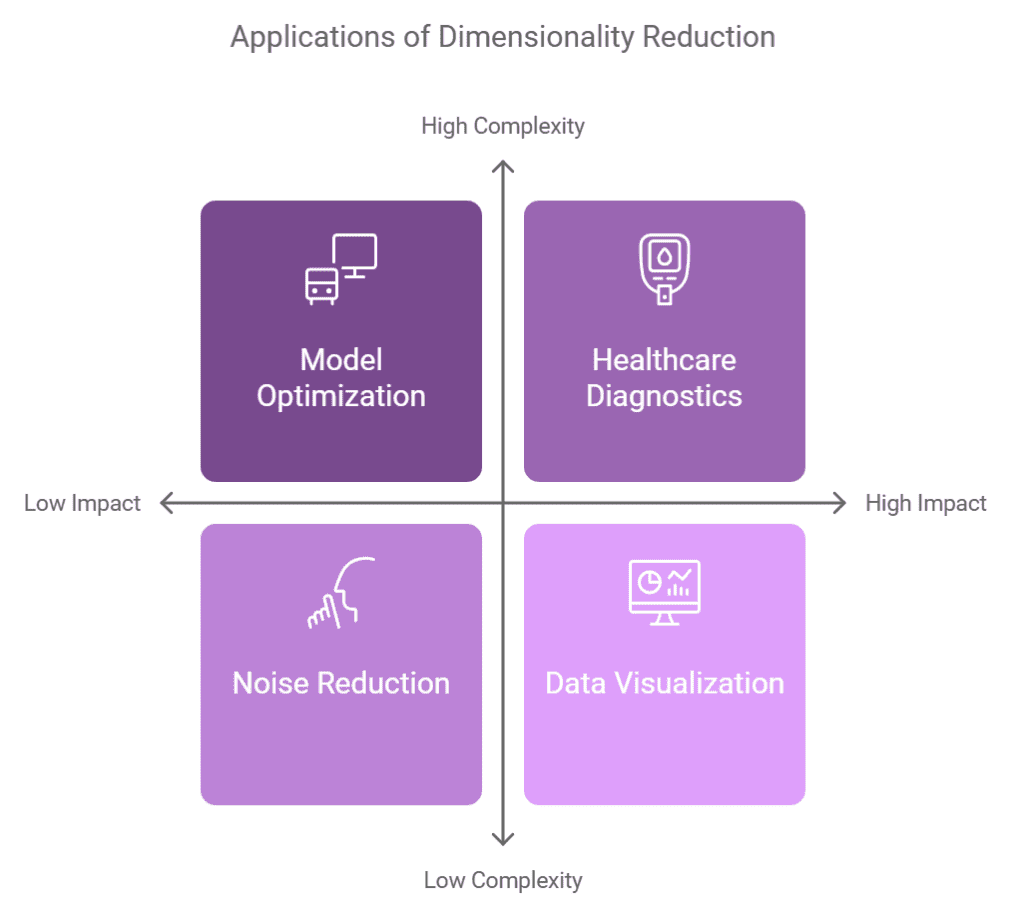

Common Uses of Dimensionality Reduction

Dimensionality reduction is applied across various domains to manage and analyze complex datasets:

- Data Visualization: Transform high-dimensional datasets into visual formats for better interpretation.

- Noise Reduction: Remove irrelevant data, improving the signal-to-noise ratio.

- Model Optimization: Speed up training by reducing computational complexity.

- Clustering: Enhance the performance of clustering algorithms like K-means.

- Healthcare: Identify key variables in patient data for diagnostics.
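The noise reduction use case can be illustrated with a small sketch: project noisy data onto its leading principal component and map it back to the original feature space. The sensor scenario, noise level, and variable names below are assumptions chosen for illustration, and NumPy is assumed to be available.

```python
import numpy as np

rng = np.random.default_rng(4)

# Hypothetical signal: 10 sensors that all follow one underlying trend.
trend = rng.normal(size=(200, 1))
X_clean = trend @ np.ones((1, 10))
X_noisy = X_clean + 0.3 * rng.normal(size=X_clean.shape)

# PCA via SVD on the centered data; rows of Vt are the principal directions.
mean = X_noisy.mean(axis=0)
_, _, Vt = np.linalg.svd(X_noisy - mean, full_matrices=False)

# Keep only the first component, then map back to the original 10 features.
k = 1
X_denoised = (X_noisy - mean) @ Vt[:k].T @ Vt[:k] + mean

noisy_error = np.mean((X_noisy - X_clean) ** 2)
denoised_error = np.mean((X_denoised - X_clean) ** 2)
print(noisy_error, denoised_error)  # the second value should be smaller
```

This works here because the signal lives in a single direction while the noise is spread across all ten. On real data the split between signal and noise is rarely this clean, so the number of components to keep is a judgment call.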

Advantages of Using Dimensionality Reduction

- Efficiency: Speeds up computation and reduces storage requirements.

- Accuracy: Can reduce overfitting by removing redundant or noisy features.

- Visualization: Makes high-dimensional data easier to understand and present.

- Automation: Can reduce the need for manual feature engineering.

- Scalability: Makes working with large datasets more manageable.

Are There Any Drawbacks to Dimensionality Reduction?

Yes, dimensionality reduction has potential drawbacks:

- Loss of Information: Reducing dimensions can discard information that matters for the task.

- Computational Cost: Techniques like t-SNE may require substantial resources for large datasets.

- Interpretability: Transformed features are combinations of the original ones and may lack a clear real-world meaning.

Real-Life Example: How is Dimensionality Reduction Used?

In finance, dimensionality reduction is employed for credit risk analysis. By reducing the dimensions of customer data using PCA, banks can identify the main factors that drive credit risk, which can improve both the accuracy and the speed of decision-making.

How Does Dimensionality Reduction Compare to Feature Selection?

While both reduce complexity, dimensionality reduction methods such as PCA transform the data into a new feature space, whereas feature selection keeps a subset of the original features and discards the rest. Transformation-based methods are particularly useful for visualization and for handling noisy, correlated features.
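The difference is easiest to see side by side. The sketch below is illustrative only: it uses a simple variance ranking as the selection criterion (real projects often use supervised criteria instead), and the feature names and data are hypothetical. NumPy is assumed to be available.

```python
import numpy as np

rng = np.random.default_rng(1)
# Hypothetical data: 100 samples, 4 features with very different variances.
X = rng.normal(size=(100, 4)) * np.array([3.0, 0.1, 2.0, 0.05])
feature_names = ["income", "noise_a", "debt", "noise_b"]  # illustrative names

# Feature selection: keep the k original columns with the highest variance.
k = 2
keep = np.sort(np.argsort(X.var(axis=0))[::-1][:k])
X_selected = X[:, keep]
selected_names = [feature_names[i] for i in keep]

# Feature extraction (PCA via SVD): each new column mixes all original columns.
X_centered = X - X.mean(axis=0)
_, _, Vt = np.linalg.svd(X_centered, full_matrices=False)
X_pca = X_centered @ Vt[:k].T

print(selected_names)   # ['income', 'debt'] : original columns, still named
print(Vt[:k].round(2))  # loadings: weight of every original feature per component
```

The selected columns are unchanged copies of the originals, so they keep their meaning. The PCA columns are weighted sums of all four features, which is why they can be harder to interpret.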

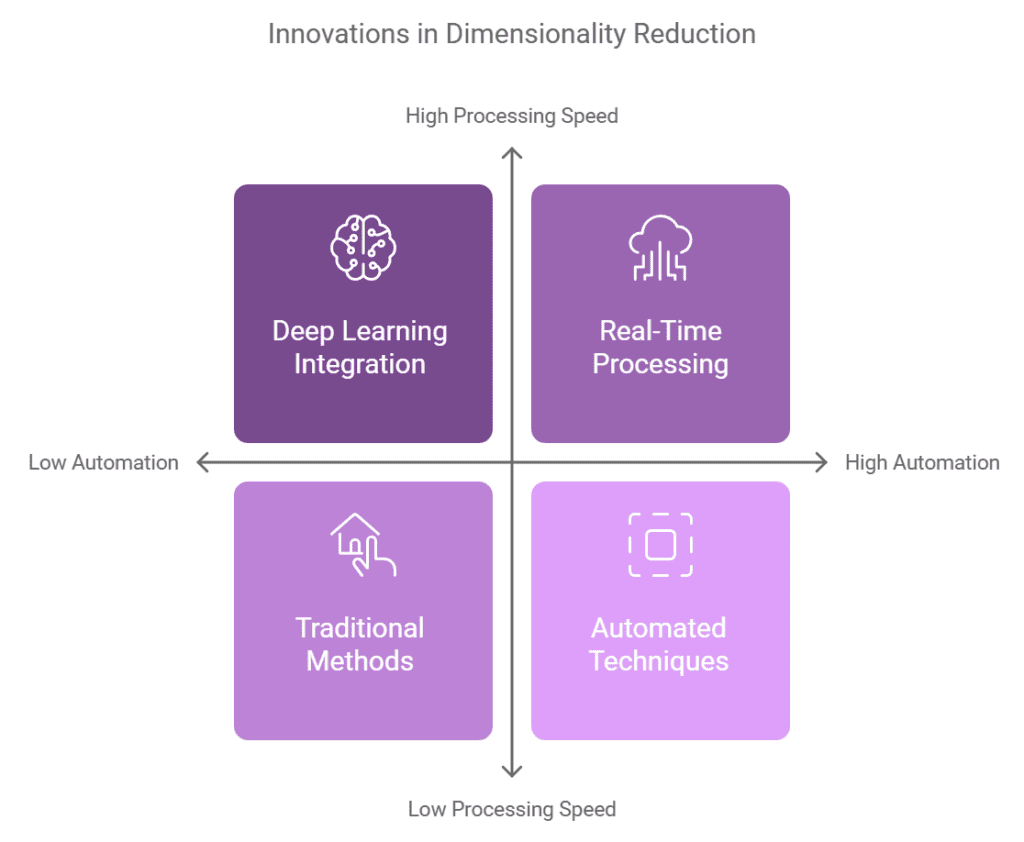

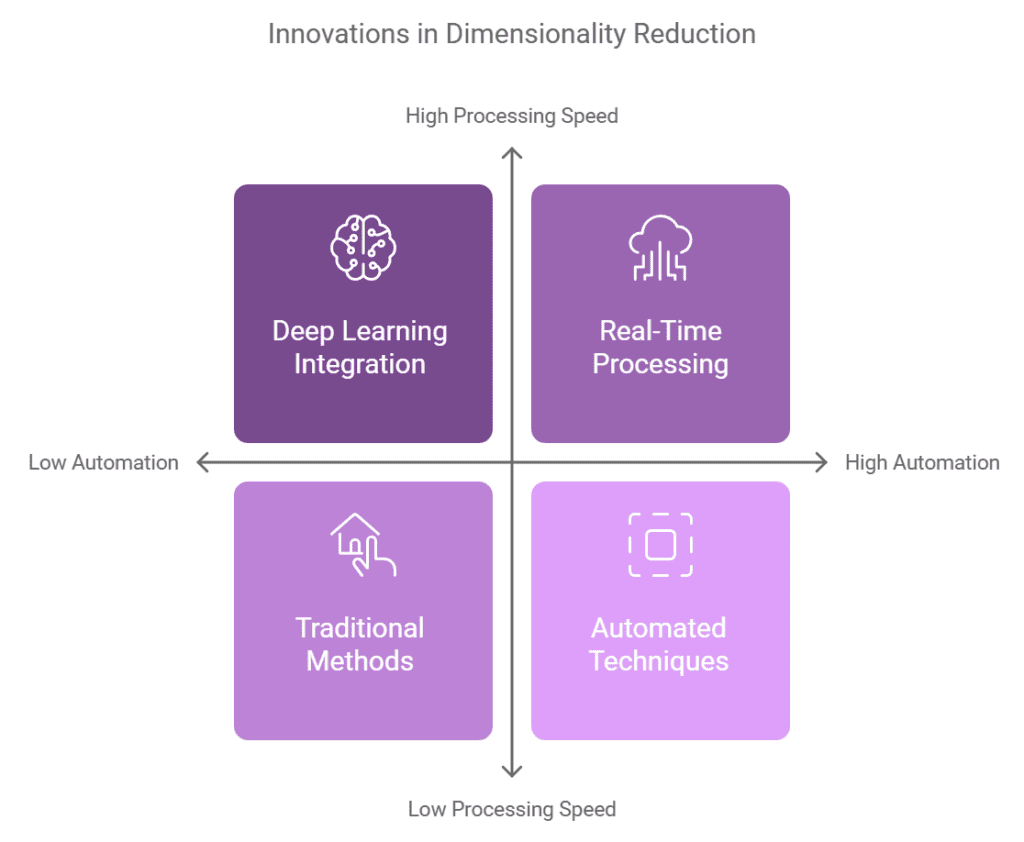

Future Trends in Dimensionality Reduction

- Deep Learning Integration: Combining dimensionality reduction with deep learning models to handle massive datasets.

- Automated Techniques: AI-driven approaches for choosing optimal dimensions.

- Real-Time Processing: Faster algorithms enabling real-time dimensionality reduction.

Best Practices for Dimensionality Reduction

- Preprocess Data: Clean the data and put features on a comparable scale. PCA is sensitive to feature scale, so standardizing is usually advisable.

- Choose the Right Method: Use PCA to preserve variance in a linear projection, or t-SNE to visualize local structure in 2D or 3D.

- Validate Results: Compare model performance before and after applying dimensionality reduction.

- Iterative Approach: Experiment with different techniques and compare their outcomes.
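Two of these practices can be sketched briefly: standardizing before PCA, and picking the number of components from cumulative explained variance. The helper names `explained_variance_ratio` and `choose_n_components`, the 0.95 threshold, and the data are assumptions for illustration, not a fixed rule. NumPy is assumed to be available.

```python
import numpy as np

def explained_variance_ratio(X):
    """Fraction of total variance captured by each principal component."""
    X_centered = X - X.mean(axis=0)
    # Component variances are proportional to the squared singular values.
    s = np.linalg.svd(X_centered, compute_uv=False)
    var = s ** 2
    return var / var.sum()

def choose_n_components(ratios, threshold=0.95):
    """Smallest k whose cumulative explained variance reaches the threshold."""
    cumulative = np.cumsum(ratios)
    k = int(np.searchsorted(cumulative, threshold)) + 1
    return min(k, len(ratios))  # guard against floating-point shortfall

rng = np.random.default_rng(2)
# Hypothetical data: two independent features on very different scales.
X = np.column_stack([rng.normal(size=300), 1000.0 * rng.normal(size=300)])

raw_ratio = explained_variance_ratio(X)

# Standardize: zero mean and unit variance per feature.
X_std = (X - X.mean(axis=0)) / X.std(axis=0)
std_ratio = explained_variance_ratio(X_std)

print(raw_ratio.round(3))  # first component dominated by the large-scale feature
print(std_ratio.round(3))  # roughly balanced after standardizing
print(choose_n_components(raw_ratio), choose_n_components(std_ratio))
```

Without scaling, PCA treats the large-scale feature as nearly all of the variance and would suggest keeping one component, even though both features are equally informative here.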

Case Study: Improving Predictive Models with Dimensionality Reduction

A retail company applied PCA to its customer purchase data. By reducing the number of variables, it identified key purchasing behaviors, which led to a 20% increase in recommendation accuracy.

Related Terms to Dimensionality Reduction

- Feature Selection: Selecting the most relevant features without transforming data.

- Data Compression: Reducing data storage requirements while retaining usability.

- t-SNE: A technique for visualizing high-dimensional data in a lower-dimensional space.

Step-by-Step Guide to Implement Dimensionality Reduction

- Collect Data: Gather high-dimensional data.

- Preprocess: Clean and normalize datasets.

- Choose a Technique: Apply methods like PCA or t-SNE.

- Transform Data: Reduce dimensions while preserving variance.

- Validate: Test model performance and interpret results.

- Deploy: Use the reduced dataset for analysis or model training.
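The steps above can be sketched end to end as follows. This is a minimal illustration under stated assumptions: the data is synthetic, PCA is implemented with NumPy's SVD, a plain least-squares regression stands in for "the model", and the choice of 3 components matches how the toy data was generated rather than any general rule.

```python
import numpy as np

rng = np.random.default_rng(3)

# 1. Collect: synthetic stand-in for real data (an assumption).
#    20 observed features driven by 3 hidden factors plus noise.
n_samples, n_features, n_factors = 500, 20, 3
factors = rng.normal(size=(n_samples, n_factors))
mixing = rng.normal(size=(n_factors, n_features))
X = factors @ mixing + 0.1 * rng.normal(size=(n_samples, n_features))
y = factors @ np.array([1.0, -2.0, 0.5]) + 0.1 * rng.normal(size=n_samples)

# Hold out a test set before fitting anything.
X_train, X_test = X[:400], X[400:]
y_train, y_test = y[:400], y[400:]

# 2. Preprocess: standardize using training statistics only.
mean, std = X_train.mean(axis=0), X_train.std(axis=0)
X_train_s = (X_train - mean) / std
X_test_s = (X_test - mean) / std

# 3-4. Choose a technique and transform: PCA via SVD, keeping k components.
k = 3
_, _, Vt = np.linalg.svd(X_train_s, full_matrices=False)
Z_train = X_train_s @ Vt[:k].T
Z_test = X_test_s @ Vt[:k].T  # same projection, learned from training data

# 5. Validate: fit least squares on the reduced features, score on held-out data.
def add_bias(Z):
    """Prepend a column of ones so the regression has an intercept."""
    return np.column_stack([np.ones(len(Z)), Z])

coef, *_ = np.linalg.lstsq(add_bias(Z_train), y_train, rcond=None)
pred = add_bias(Z_test) @ coef
mse = np.mean((pred - y_test) ** 2)
baseline_mse = np.mean((y_train.mean() - y_test) ** 2)  # predict-the-mean baseline

print(Z_train.shape, Z_test.shape)  # (400, 3) (100, 3)
print(mse, baseline_mse)
```

For the deploy step, the pieces to keep are `mean`, `std`, `Vt[:k]`, and `coef`, so that new data goes through exactly the same scaling and projection as the training data.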

Frequently Asked Questions

What is Dimensionality Reduction?

Dimensionality reduction simplifies datasets by reducing the number of features while retaining the most critical information.

What are Common Techniques?

- PCA: Finds orthogonal directions of maximum variance and projects the data onto them.

- t-SNE: Specializes in visualizing high-dimensional data.

Why Use Dimensionality Reduction?

It reduces complexity, can reduce overfitting, and makes data easier to visualize.

Can It Be Used with Any Dataset?

Yes, in principle, but it pays off most on high-dimensional data with correlated or noisy features.

How Do I Choose Between PCA and Feature Selection?

Use PCA when combined, transformed features are acceptable. Use feature selection when you need to keep the original, interpretable features and only drop the irrelevant ones.

By adopting dimensionality reduction, organizations can streamline complex datasets and optimize their machine learning workflows.