There are two fundamental ways to automate work. You can give a system a precise instruction, or you can give it a goal. This single difference determines how an AI system performs, scales, and ultimately serves your business.

On one side, you have LLM-based task runners. They are specialists designed to execute a single action perfectly. Give them text, and they will summarize it. Ask a question, and they will generate an answer. They are fast, reliable, and stateless, meaning each task is a fresh start.

On the other side are Agentic AI systems. They are goal-driven problem-solvers. An agent can plan multiple steps, use tools, access memory, and adapt its approach until the objective is met. It operates with autonomy, figuring out the “how” on its own.

The specialist is fast and the problem-solver is powerful. So which one in the Agentic AI vs LLM debate truly scales?

Choosing between them is not about picking the more advanced technology. It is about matching the architecture to the job. This guide clarifies which approach scales best across performance, cost, and complexity, helping you understand not just what works, but what works when.

The choice becomes clear when you focus on a single question: are you automating a task or an outcome? Everything, from architecture to cost, flows from that answer.

Instruction vs. Intention

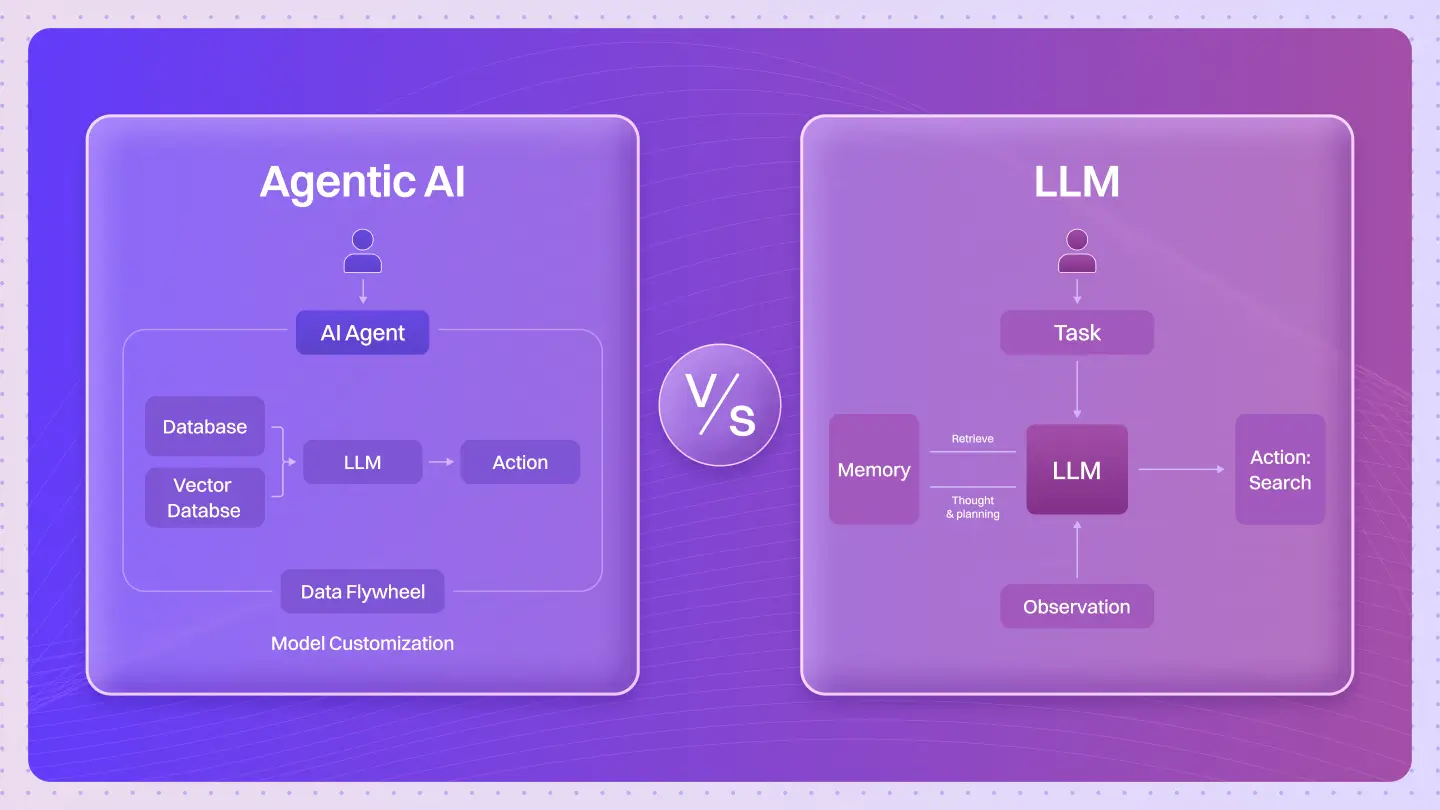

The distinction between these two models comes down to their architecture. One is built for a single exchange, while the other is built for an entire process.

The LLM Task Runner: Executing Instructions

A large language model (LLM) based task runner operates on a simple principle: you give it one prompt, and it returns one response. Think of tasks like summarization, email drafting, or data classification. The system completes the request without any memory of past interactions.

This stateless design is its greatest strength. It ensures that every task is fast, predictable, and self-contained. The workflow is defined by you, and the model executes a single, clear instruction without deviation.
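To make the stateless pattern concrete, here is a minimal sketch in Python. The names `run_task`, `ModelFn`, and `fake_model` are illustrative assumptions, and `fake_model` is only a stand-in for whatever LLM API you actually call.

```python
from typing import Callable

# Hypothetical type: any function that maps a prompt string to a completion string.
ModelFn = Callable[[str], str]


def run_task(model: ModelFn, instruction: str, text: str) -> str:
    """One prompt in, one response out. Nothing is stored between calls."""
    prompt = f"{instruction}\n\n{text}"
    return model(prompt)


def fake_model(prompt: str) -> str:
    """Stand-in for a real LLM call; it simply echoes the instruction line."""
    return "RESPONSE TO: " + prompt.splitlines()[0]


if __name__ == "__main__":
    print(run_task(fake_model, "Summarize this email.", "Hi team, ..."))
```

Because `run_task` keeps no state, calling it twice with the same arguments goes through exactly the same path, which is what makes this kind of runner easy to reason about.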

Agentic AI: Fulfilling Intentions

An Agentic AI system is designed to achieve a goal, not just answer a prompt. It operates autonomously to complete complex, multi-step jobs. To do this, an agent relies on a core structure: a planner, an executor, memory, and a toolchain.

This architecture allows an agent to reason through a problem, create a plan, use external tools like APIs, and learn from its actions. It remembers what it has done and adjusts its plan until your final goal is met.
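The planner, executor, memory, and toolchain can be sketched as a small loop. This is a simplified illustration under stated assumptions, not how any particular framework implements it: the `Agent` class, `toy_planner`, and the two toy tools are all hypothetical, and a real planner would typically be an LLM call rather than hard-coded rules.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional, Tuple

Tool = Callable[[str], str]
# A planner reads the goal and the memory so far, then returns
# (tool_name, tool_input) for the next step, or None when the goal is met.
Planner = Callable[[str, List[str]], Optional[Tuple[str, str]]]


@dataclass
class Agent:
    planner: Planner
    tools: Dict[str, Tool]
    memory: List[str] = field(default_factory=list)
    max_steps: int = 10  # safety cap so a confused planner cannot loop forever

    def run(self, goal: str) -> List[str]:
        for _ in range(self.max_steps):
            step = self.planner(goal, self.memory)
            if step is None:  # planner decided the goal is met
                break
            tool_name, tool_input = step
            result = self.tools[tool_name](tool_input)  # executor calls the tool
            self.memory.append(f"{tool_name}({tool_input}) -> {result}")
        return self.memory


def toy_planner(goal: str, memory: List[str]) -> Optional[Tuple[str, str]]:
    """Hard-coded two-step plan: look something up, then draft from the result."""
    if len(memory) == 0:
        return ("lookup", goal)
    if len(memory) == 1:
        return ("draft", memory[-1])
    return None


toy_tools: Dict[str, Tool] = {
    "lookup": lambda query: f"record for {query}",
    "draft": lambda context: "email drafted",
}
```

Running `Agent(toy_planner, toy_tools).run("acme")` walks through both steps and returns the memory log, which is the record the planner consults on each pass.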

Agentic AI vs LLM: Key Architectural Differences

The table below summarizes these foundational distinctions.

| Feature | LLM Task Runner | Agentic AI System |
| --- | --- | --- |
| State | Stateless | Stateful (via memory) |
| Step Count | Single-step | Multi-step |
| Control | User-driven | Goal-driven autonomy |
| Tool Usage | Rare | Frequent |
| Complexity Handling | Minimal | Supports nested logic |

These architectural decisions, stateless versus stateful, single-step versus multi-step, have immediate consequences. The most important consequence is how each system handles growth, which reveals the crucial difference between scaling for volume and scaling for complexity.

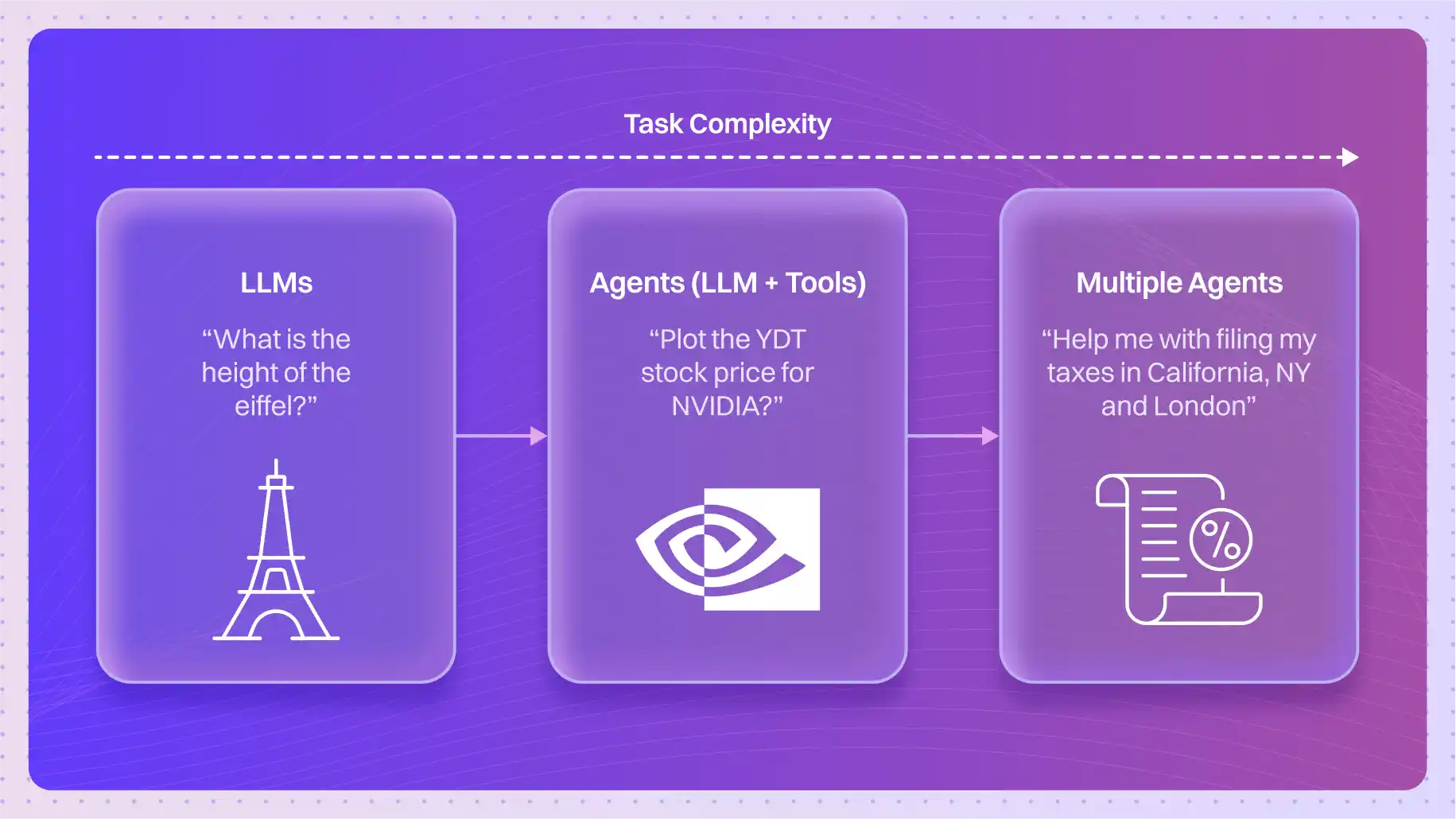

Scaling Width vs. Scaling Depth

How a system scales reveals its true purpose. One is designed to handle massive volume, while the other is built to manage profound complexity. We can think of this as scaling for width versus scaling for depth.

LLM task runners scale for width. Because each task is independent, you can run ten thousand of them in parallel as easily as you can run one. This horizontal scaling is perfect for high-volume, repetitive jobs where the core task does not change. There is very little orchestration required.
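As a rough sketch of what scaling for width looks like in code, the example below fans independent tasks out over a thread pool from Python's standard library. The `classify` function is a hypothetical stand-in for a stateless LLM call, and `max_workers=8` is an arbitrary assumption you would tune against your provider's rate limits.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, List


def run_batch(task: Callable[[str], str], inputs: List[str], max_workers: int = 8) -> List[str]:
    """Run one independent task per input in parallel; results keep input order."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return list(pool.map(task, inputs))


def classify(text: str) -> str:
    """Stand-in for a stateless LLM classification call."""
    return "question" if text.strip().endswith("?") else "statement"


if __name__ == "__main__":
    print(run_batch(classify, ["Is the service up?", "The service is up."]))
```

No task depends on another, so there is nothing to coordinate beyond collecting the results. That is the "very little orchestration" the width model relies on.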

Agentic AI scales for depth. It handles complexity by breaking a large goal into a chain of smaller tasks. This vertical scaling allows an agent to tackle sophisticated workflows, like testing an entire codebase or managing a customer issue from start to finish. But this power comes with a tradeoff: greater complexity means more engineering effort and slower execution for any single workflow.

Agentic AI vs LLM: Scaling Comparison

This table outlines the relationship between the two scaling models.

| Metric | LLM Runners | Agentic AI |
| --- | --- | --- |
| Throughput | High | Moderate |
| Task Complexity | Low | High |
| Parallel Execution | Easy | Needs orchestration |
| Developer Overhead | Low | High |

This choice between scaling for width or depth is not theoretical. It has direct, measurable effects on three critical resources: your time, your budget, and your engineering overhead.

The Price of Autonomy

Greater capability is never free. The autonomy and complexity of an agentic system come with a higher operational cost than a simple task runner. Understanding this tradeoff is essential.

Time: Transactional vs. Process Speed

An LLM runner is built for transactional speed. A single, stateless call is incredibly fast, typically taking less than two seconds. You get an immediate response because the scope of work is small.

An agent operates at process speed. Each reasoning loop, where it thinks, plans, and acts, can take several seconds. When a workflow requires multiple steps, the total time can extend to minutes. This is not a flaw; it is the natural pace of a system that performs complex work.

Cost: A Single Call vs. a Full Workflow

The financial cost follows the same logic. A single LLM call is exceptionally cheap, often costing fractions of a cent. Agentic workflows are more expensive because they are composed of many such calls, plus tool usage and memory access.

For example, using an LLM to draft one email might cost you a penny. In contrast, a sales agent that researches a contact in your CRM, personalizes that email, and schedules the delivery could cost a dollar. The agent delivers far more value, and its price reflects the work involved.
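The penny-versus-dollar comparison can be worked through with a simple cost model. Every number below is an illustrative placeholder chosen only to land near the figures in the example above; the call counts and unit prices are assumptions, not measured values or any vendor's pricing.

```python
def workflow_cost(
    llm_calls: int,
    cost_per_llm_call: float,
    tool_calls: int = 0,
    cost_per_tool_call: float = 0.0,
    memory_ops: int = 0,
    cost_per_memory_op: float = 0.0,
) -> float:
    """Total cost in dollars: LLM calls plus tool usage plus memory access."""
    return (
        llm_calls * cost_per_llm_call
        + tool_calls * cost_per_tool_call
        + memory_ops * cost_per_memory_op
    )


# Assumed: one stateless call at one cent.
single_email = workflow_cost(llm_calls=1, cost_per_llm_call=0.01)

# Assumed: a sales agent that makes 40 LLM calls, 10 tool calls, and 25 memory operations.
sales_agent = workflow_cost(
    llm_calls=40,
    cost_per_llm_call=0.02,
    tool_calls=10,
    cost_per_tool_call=0.015,
    memory_ops=25,
    cost_per_memory_op=0.002,
)

if __name__ == "__main__":
    print(f"single email: ${single_email:.2f}, sales agent: ${sales_agent:.2f}")
```

The useful part is the shape of the formula rather than the numbers: the agent's cost grows with every extra reasoning step, tool call, and memory lookup, while the runner's cost stays fixed at one call.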

Resources: The Infrastructure Footprint

LLM runners are lightweight. Their stateless design means they require almost no persistent storage or complex management. They are simple to deploy and maintain.

Agentic systems have a larger infrastructure footprint. To function, they need dedicated components for memory (like vector databases), integrations for their tools (APIs), and layers for logging and debugging. This operational burden is the foundation that makes their autonomy possible.

Beyond the direct costs of time and money, autonomy introduces a new variable: unpredictability. A system that thinks for itself can also fail in more complex ways, which forces you to manage reliability and risk differently.

Predictability vs. Power

A simple system fails in simple ways. A powerful system fails in powerful ways. This principle governs the reliability of LLM runners and agentic systems.

Task Completion and Reliability

An LLM runner is highly predictable. If you give it a well-defined task, it will complete it reliably. Failures are usually straightforward and can be corrected by adjusting the prompt.

An agent’s autonomy is both its greatest strength and its primary source of risk. When given an ambiguous goal, an agent can misinterpret the plan, spiral into irrelevant tasks, or fail completely. Open-ended systems like the original AutoGPT often saw failure rates of 20-40%. This is why modern agentic frameworks like Lyzr AI are essential; they provide guardrails and structure to contain this risk.

Monitoring and Debugging

When an LLM runner fails, debugging is simple. You check the prompt and try again.

When an agent fails, you are not debugging a single error; you are diagnosing a broken process. The failure could stem from flawed planning logic, incorrect tool usage, corrupted memory, or an infinite loop. Identifying the root cause requires a far more sophisticated approach, including step-by-step logging, trace visualizations, and sometimes, a human in the loop to supervise.
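Two of the safeguards mentioned above, step-by-step logging and protection against infinite loops, can be sketched with the standard `logging` module and a step cap. The function `run_with_trace`, the `StepLimitExceeded` exception, and the default limit of five steps are assumptions made for illustration.

```python
import logging
from typing import Callable, List, Optional

logger = logging.getLogger("agent.trace")


class StepLimitExceeded(RuntimeError):
    """Raised when the agent keeps acting without ever finishing."""


def run_with_trace(
    next_step: Callable[[List[str]], Optional[str]], max_steps: int = 5
) -> List[str]:
    """Ask for one action at a time, log each step, and stop runaway loops."""
    trace: List[str] = []
    for i in range(max_steps):
        action = next_step(trace)
        if action is None:  # the agent reports that it is done
            logger.info("finished after %d steps", i)
            return trace
        logger.info("step %d: %s", i + 1, action)
        trace.append(action)
    # Surface the most recent actions so a human can see where it got stuck.
    raise StepLimitExceeded(
        f"no result after {max_steps} steps; last actions: {trace[-3:]}"
    )
```

The returned trace is the raw material for the trace visualizations described above, and the exception gives a supervising human a clear point at which to step in.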

These differences in reliability directly influence how ready each technology is for widespread, unsupervised use. A system’s maturity is not just about its capabilities, but about how predictably it performs in the real world.

Proven Tools vs. The Emerging Frontier

Every new technology follows a path from pioneering concept to trusted tool. LLM runners and agentic systems are at different points on this journey.

LLM Runners: Stable and Scalable

LLM task runners are a mature technology. They are used at a massive scale by enterprises today and form the backbone of countless AI features. Major platforms like OpenAI, Cohere, and AWS Bedrock provide robust, commercially supported APIs for this purpose. Because they are simple and stateless, the infrastructure required to run them is minimal, making them easy to adopt.

Agentic Systems: Powerful but Evolving

Agentic AI represents the emerging frontier. While its potential is enormous, its stability in production environments is still evolving. The technology is rapidly maturing through structured frameworks like LangGraph, CrewAI, and AutoGen, which provide necessary guardrails. However, the autonomous nature of agents demands tighter controls to meet enterprise standards for security, data privacy (PII), and auditing, which adds to the implementation challenge.

Understanding where each technology stands today, as a proven tool or an emerging frontier, provides the final piece of context. It allows us to step back and view the entire landscape, leading to a simple, decisive conclusion about how to choose the right model for the job.

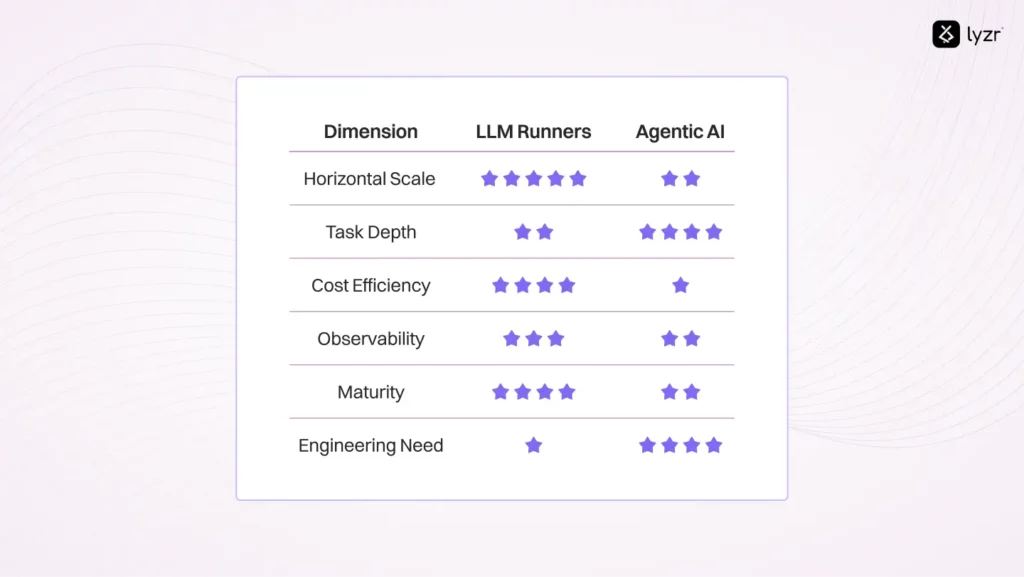

The Verdict on Scaling

When it comes to Agentic AI vs LLM, the right choice depends entirely on the problem you need to solve. Do you need to handle more volume, or manage more complexity? The following table summarizes the tradeoffs.

| Dimension | LLM Runners | Agentic AI |
| --- | --- | --- |
| Horizontal Scale | ⭐⭐⭐⭐⭐ | ⭐⭐ |
| Task Depth | ⭐ | ⭐⭐⭐⭐⭐ |
| Cost Efficiency | ⭐⭐⭐⭐ | ⭐⭐ |
| Observability | ⭐⭐⭐⭐ | ⭐⭐ |
| Maturity | ⭐⭐⭐⭐⭐ | ⭐⭐ |
| Engineering Simplicity | ⭐⭐⭐⭐ | ⭐⭐ |

LLM runners scale wider. Agentic AI scales deeper.

But the most effective systems do not force a choice between width and depth. They recognize that modern workflows require both. The true challenge is not choosing one model over the other, but skillfully blending them together.

Beyond the Choice: The Hybrid System

The most scalable AI architectures are not replacements, but integrations. They use a layered approach where each component is assigned the work it is best designed to handle.

Consider a customer support system. Stateless LLM components can provide instant answers to thousands of common questions, operating with high efficiency and low cost. When a query becomes complex, like a billing dispute, the system can activate an agentic workflow. This agent can then look up account details, check policies, and escalate the issue, managing the process from start to finish.
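The customer support flow above amounts to a router sitting in front of two handlers. Here is a minimal sketch; the keyword check, the `COMPLEX_KEYWORDS` list, and both handler functions are hypothetical placeholders, and in practice the complexity check might itself be a cheap stateless LLM classification.

```python
from typing import Callable

# Assumed trigger words for illustration; a real system would use a better classifier.
COMPLEX_KEYWORDS = ("dispute", "chargeback", "refund")


def looks_complex(query: str) -> bool:
    """Crude stand-in for a complexity classifier."""
    lowered = query.lower()
    return any(word in lowered for word in COMPLEX_KEYWORDS)


def route(
    query: str,
    fast_answer: Callable[[str], str],
    agent_workflow: Callable[[str], str],
    is_complex: Callable[[str], bool] = looks_complex,
) -> str:
    """Send simple queries to the stateless runner and complex ones to the agent."""
    if is_complex(query):
        return agent_workflow(query)
    return fast_answer(query)


def faq_runner(query: str) -> str:
    """Placeholder for a single stateless LLM call."""
    return f"FAQ answer for: {query}"


def billing_agent(query: str) -> str:
    """Placeholder for a multi-step agentic workflow."""
    return f"Agent case opened for: {query}"
```

With this split, `route("What are your opening hours?", faq_runner, billing_agent)` stays on the cheap path, while a query mentioning a billing dispute is handed to the agent.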

This hybrid model gives you the best of both worlds: the broad, horizontal scale of task runners and the deep, vertical scale of autonomous agents. As agentic platforms mature and become more efficient, this layered strategy will become the standard for building truly intelligent and responsive systems.

The question was never about which AI scales better. It was always about which architecture is right for which job. The most durable systems are not built on a single technology, but on the wisdom to know precisely when to use each one.

The Path to Scalable Automation

The Agentic AI vs LLM debate is not about picking a winner. It is about understanding the nature of the work you need to automate. One is designed for volume, the other for depth. One executes instructions, while the other fulfills intentions.

The most effective organizations do not choose between the two. They build hybrid systems that leverage the strengths of both, using simple runners for high-volume tasks and deploying sophisticated agents for complex, multi-step processes. This is the foundation of truly scalable automation.

Building these intelligent, layered systems requires a deliberate approach. The principles are clear, but the engineering can be complex.

Lyzr AI provides a platform designed to manage this complexity, allowing you to build and deploy robust hybrid workflows. If you are ready to see these principles in action, we invite you to book a demo and explore how to automate the work that truly matters.
